Wikipedia:Bot requests/Archive 24

| This is an archive of past discussions about Wikipedia:Bot requests. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current main page. |

Vital articles

Wikipedia:Vital articles is one of the most important pages of the project, but lacks a great deal of easily discoverable information: assessment ratings. I've done the People section manually, but could a bot be programmed to find out the assessment of these articles and add the appropriate icon? For example, the bot should check Talk:Train, read the "class=B" assessment and add {{B-icon}} with a space before the Train entry in the Transportation section. For bonus points, it would be great to have FAC and GAN failures also listed. Skomorokh 18:27, 1 December 2008 (UTC)

Come on guys, this should not be too hard - there is already a user gadget that scrapes this sort of content; all that needs doing is to have the bot add a few icons here and there. Anyone? Skomorokh 15:58, 2 December 2008 (UTC)

Coding... I am about half way done. LegoKontribsTalkM 00:55, 3 December 2008 (UTC)

- That's great, thank you for taking an interest. Skomorokh 23:44, 5 December 2008 (UTC)

BRFA filed Wikipedia:Bots/Requests for approval/Legobot II 3. I don't have FAC and GAN failures listed, but will get it in soon. LegoKontribsTalkM 07:12, 12 December 2008 (UTC)
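The task requested in this thread (read the "class=" value from a talk page, then prefix the list entry with the matching icon) could be sketched roughly as follows. This is only an illustration, not Legobot's actual code: the function names and the class-to-icon mapping are assumptions, and only {{B-icon}} is named in the request itself.

```python
import re

# Hypothetical mapping from assessment class to icon template name.
# Only "B-icon" appears in the request; the other names are assumed.
ICON_FOR_CLASS = {
    "fa": "FA-icon",
    "ga": "GA-icon",
    "a": "A-icon",
    "b": "B-icon",
    "start": "Start-icon",
    "stub": "Stub-icon",
}

# Matches a banner parameter such as "|class=B" or "| class = Start".
CLASS_PARAM = re.compile(r"\|\s*class\s*=\s*([A-Za-z]+)")


def assessment_class(talk_wikitext):
    """Return the first class= value in talk-page wikitext, lowercased, or None."""
    match = CLASS_PARAM.search(talk_wikitext)
    return match.group(1).lower() if match else None


def add_icon(list_line, talk_wikitext):
    """Prefix a list entry such as '* [[Train]]' with the matching icon template."""
    icon = ICON_FOR_CLASS.get(assessment_class(talk_wikitext) or "")
    if icon is None:
        return list_line  # unknown or missing assessment: leave the entry alone
    bullet, _, rest = list_line.partition(" ")
    return f"{bullet} {{{{{icon}}}}} {rest}"
```

A real bot would also need to fetch the talk pages and skip entries that already carry an icon; neither is shown here.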

DYK hook count bot

DYK posts about 210-280 new/expanded articles to the Main Page every week (every seven days). To make decisions about DYK, DYK is in need of a bot to keep an ongoing count of the number of hooks listed at Template talk:Did you know. See this discussion. The counting for the bot should be straightforward since all the hooks listed at Template talk:Did you know have level 4 headers and begin with ====[[. Each day's set of hooks includes a consistently worded level 3 header. We're looking for a table output that includes the total number of hooks in the DYK pipeline and a breakdown per day. Manually, I did a count that found a total of 90 DYK hooks, with the breakdown as follows: November 30 (1), December 1 (2), December 2 (1), December 3 (4), December 4 (9), December 5 (28), December 6 (22), December 7 (16), December 8 (7). Is such a DYK hook count bot doable? -- Suntag ☼ 19:53, 9 December 2008 (UTC)

- Hello. Do you need an exact count? I ask because if you don't you can just scroll down to the last suggestion and hover your mouse over the [edit] button. You'll see something like "http://en.wikipedia.org/w/index.php?title=Template_talk:Did_you_know&action=edit&section=177" at the bottom of your browser. Take that last number (177) and subtract 15 and you'll be within a few of the exact total. --ThaddeusB (talk) 02:37, 10 December 2008 (UTC)

- An ongoing exact count would make things easier and be useful. As noted here, DYK is using a 170 pipeline hook threshold to maintain a six hour update cycle. Additionally, no individual day should be too low on hooks. The bot's ongoing update will help DYK monitor the hook pipeline. If the pipeline runs too low and/or one or more days are light on DYK hook suggestions, then someone at DYK will go to User:AlexNewArtBot/GoodSearchResult and see if they can develop some hooks to fill in the low days. If the pipeline is top heavy, then the DYK reviewers will know to apply the rules a little more sternly. The TDYK page is not monitored by everyone at DYK as several work behind the scenes at the Queues to get the product to the Main page. The pipeline update table will be a significant DYK tool. I did try the float method and it showed something other than the URL string. -- Suntag ☼ 18:21, 10 December 2008 (UTC)

- Fair Enough. This will be pretty easy to code, so just let me know what location you want the table written to and how often you want it updated and I'll send in a BRFA. --ThaddeusB (talk) 22:52, 10 December 2008 (UTC)

- Place the table at Wikipedia:Did you know/DYK hook count. We can transclude it from there. I don't know anything about what is needed for a bot update, but would an update every five minutes be too much? I assume that if there are no changes to the TDYK page the bot would not actually update the DYK hook count. Otherwise, every half-hour, hour, or whatever minimum time you feel is reasonable for a bot update. In reality, we don't really know how the TDYK page changes over time and the bot should help us see it better. -- Suntag ☼ 02:50, 11 December 2008 (UTC)

- I mentioned this on your talk page as well, Suntag, but I just wanted to give a heads-up to the coder that using ]]==== for the count might be better than using ====[[ , because the latter may double-count nominations with images (counting the ==== from the end of the header, and then the [[ from the beginning of the image on the next line). Also, it might be necessary to make it also count ]] ==== (with the space), since people not using the nomination template might create a header with a space like that. —Politizer talk/contribs 04:46, 11 December 2008 (UTC)

- I posted a 07:46, 11 December 2008 sorted data dump of the TDYK page at User:Suntag/Dump. In the edit view, please scroll down to the level 4 headers so that you can see the variations. It appears that each hook has a set of two ==== in the same line. -- Suntag ☼ 07:59, 11 December 2008 (UTC)

Coding... --ThaddeusB (talk) 20:27, 11 December 2008 (UTC)

BRFA filed Wikipedia:Bots/Requests_for_approval/WikiStatsBOT. A sample table can be seen at User:WikiStatsBOT/Test. Let me know of any changes you'd like seen made to the formatting. --ThaddeusB (talk) 21:50, 11 December 2008 (UTC)

- Looks good. The gang at DYK will really appreciate their Holiday season present. -- Suntag ☼ 01:02, 12 December 2008 (UTC)

- I'd guess that it might be convenient to have the data in the left column as wikilinks to the various days - December 12? --Tagishsimon (talk) 01:07, 12 December 2008 (UTC)

Done --ThaddeusB (talk) 01:46, 12 December 2008 (UTC)

- To whom it may concern, please see this discussion regarding the frequency of bot updates. --ThaddeusB (talk) 19:37, 12 December 2008 (UTC)
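The counting rule discussed in this thread (a level 3 header per day, a level 4 header ending in ]]==== per hook, with an optional space before the closing ====) might look something like the sketch below. It is not WikiStatsBOT's code; the function name and regular expressions are assumptions based only on the header formats described above.

```python
import re

# Level 3 header, e.g. "===Articles created/expanded on December 5===".
DAY_HEADER = re.compile(r"^===\s*([^=].*?)\s*===\s*$")
# Level 4 header ending in "]]====" or "]] ====", per Politizer's suggestion,
# so an image link on the following line is never counted as a second hook.
HOOK_HEADER = re.compile(r"^====.*\]\]\s*====\s*$")


def count_hooks(wikitext):
    """Return {day header text: number of hook headers beneath it}, in page order."""
    counts = {}
    current_day = None
    for line in wikitext.splitlines():
        if HOOK_HEADER.match(line):
            if current_day is not None:
                counts[current_day] += 1
            continue
        day = DAY_HEADER.match(line)
        if day:
            current_day = day.group(1)
            counts.setdefault(current_day, 0)
    return counts
```

The pipeline total is then `sum(count_hooks(text).values())`, and the per-day dictionary maps directly onto the rows of the requested table.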

Looking for help alphabetizing big list; is there a bot?

I have been working on List of skin-related conditions, and want to (1) alphabetize the diseases under each header, and (2) alphabetize the synonym names for diseases within each parenthetical, and wanted to know if there was a bot, or some other automated way, to do this? I would prefer not to do it manually. Thanks again for all your help! kilbad (talk) 04:34, 13 December 2008 (UTC)

- No need for a bot for that, just a little script-assisted editing. FYI, I used

perl -pwe 'if(/^\*/){ s{\]\] \((.*)\)\s*$}{ "]] (".join(", ", sort { lc($a) cmp lc($b) } split(/, /, $1)).")\n" }e; }'

to sort the parentheticals, and

perl -nwe 'BEGIN { @x=(); } if(/^\s*$/ || /^==/){ print join("", sort { lc ($a) cmp lc($b) } @x).$_; @x=(); } elsif(/^\*/){ push @x, $_; } else { $n=$.+11; die "XXX Bad line at line $n\n"; }'

to sort the lists. Anomie⚔ 17:04, 13 December 2008 (UTC)
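For readers who do not use Perl, the two one-liners above can be approximated in Python as shown below. This is a loose port rather than an exact translation: the function names are made up for illustration, lines are assumed to have their trailing newlines stripped, and unlike the Perl version it flushes a list on any non-list line (and at the end of input) instead of aborting on unexpected lines.

```python
import re

# Trailing "]] (name, name, ...)" on a list line, as in the first Perl one-liner.
PAREN = re.compile(r"\]\] \((.*)\)\s*$")


def sort_synonyms(line):
    """Sort the comma-separated names in the trailing '(...)' of a '*' list line."""
    if not line.startswith("*"):
        return line

    def repl(match):
        names = sorted(match.group(1).split(", "), key=str.lower)
        return "]] (" + ", ".join(names) + ")"

    return PAREN.sub(repl, line)


def sort_list_blocks(lines):
    """Sort each run of consecutive '*' lines case-insensitively."""
    out, block = [], []
    for line in lines:
        if line.startswith("*"):
            block.append(line)
        else:
            out.extend(sorted(block, key=str.lower))
            block = []
            out.append(line)
    out.extend(sorted(block, key=str.lower))  # flush the final list
    return out
```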

DYK Purge bot

The page Template talk:Did you know has a lot of traffic adding {{tl:DYKsuggestion}} and removing that same template. The level 4 headers have an edit button. It is common to press the edit button for one DYK nomination, but to have the edit page open to a different DYK hook. It's annoying. See Section editing shenanigans. Purging the page seems to help, but is tedious and not everyone knows about it. Is a bot available to purge that page every so often, such as every 5 minutes or more? Thanks. -- Suntag ☼ 01:40, 12 December 2008 (UTC)

- Purging won't actually help, except that it'll reload the page in your browser (so a purge-bot won't help at all). The problem is: you load a page where section "B" is assigned section number 7. Someone else removes section "A" above section "B" which changes the number for every section below where section "A" used to be, so section "B" now has section number 6, but clicking on the edit link without reloading the page will still try to edit section number 7, which is now section "C." Mr.Z-man 21:35, 12 December 2008 (UTC)

- All this time I thought that my purging caused the page to reload the transclusions in Wikipedia's computer. :( -- Suntag ☼ 22:41, 13 December 2008 (UTC)
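Mr.Z-man's explanation of the stale section number can be reproduced with a toy model. The list below simply stands in for the page's sections; the section names other than "A", "B" and "C" are placeholders, and nothing here calls MediaWiki.

```python
def section_at(sections, number):
    """Return the section found at a 1-based section number, as an edit link would."""
    return sections[number - 1]


# The page as the reader loaded it: "B" is section number 7.
loaded = ["s1", "s2", "s3", "s4", "s5", "A", "B", "C"]
link_number = loaded.index("B") + 1  # the number baked into B's [edit] link

# Meanwhile another editor removes section "A", shifting everything below it up.
current = [name for name in loaded if name != "A"]

stale_target = section_at(current, link_number)  # what the old link opens now
fresh_number = current.index("B") + 1            # B's number after a reload
```

Here `stale_target` is "C" while "B" has moved to number 6, which is why only reloading the page in the browser fixes the link and a server-side purge by a bot would not.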

New Request

I'm requesting 2 bots to be pre-approved to revert vandalism on this site under my command. They will go under the names of Probot 1 and Probot 2. When they're created, they will benefit the site well. Commander Lightning (talk) 01:38, 14 December 2008 (UTC)Commander Lightning (The Galactic Empire will CRUSH the Rebel Alliance )

- Pre-approved? No. BJTalk 01:39, 14 December 2008 (UTC)

- I think the page you are looking for is: Wikipedia:Bot approvals. That page is for getting a bot you wrote approved. This page is for requesting someone else write a bot for you. --ThaddeusB (talk) 02:31, 14 December 2008 (UTC)

- To add to the above, the person who writes the bot is also the one who will run the task. §hep • ¡Talk to me! 02:35, 14 December 2008 (UTC)

Request for NoobBot

I need someone to make a bot for the Newbie page for reverting vandalism (for example, deleting all text) and, if possible, checking spelling errors. Call it NoobBot. Make the bot and notify me when you make it. I will take the bot and keep up better versions of it, but I will give you credit. The version you will make is version 0.1 Beta. Thank you! User:Shock64 User talk:Shock64

- We already have a handful of counter-vandalism bots working all of Wikipedia. (See category) Also, this page is not to request a bot to be made for you to run; it is to request tasks for other people's bots to do. If you want your own bot you generally have to write it yourself (see WP:MAKEBOT); if you wish to fix typos you might be interested in WP:AWB. (But you need 500 mainspace edits to get access) §hep • ¡Talk to me! 23:34, 14 December 2008 (UTC)

Compile statistics on article referencing

Over at Wikipedia:Improving referencing efforts we're trying to improve article referencing. It would be helpful if we had statistics on the proportion of articles with references, average references per article, etc. Does anyone have a bot that could help with this? Thanks. - Peregrine Fisher (talk) (contribs) 21:32, 15 December 2008 (UTC)

- This is something that should be compiled off a database dump or on the toolserver, not by using a bot. To download the wikitext for every single page on Wikipedia would take several weeks if you were to do it without violating the robots.txt rate limit of 1 page per second. (At one page per second with 2.66 million articles it would take over 30 days to complete the task, and if you add a maxlag in there you're probably going to be looking at 45 or 50 days.) —Nn123645 (talk) 21:43, 15 December 2008 (UTC)

- Is there a better place to make this request that you can think of? Thanks. - Peregrine Fisher (talk) (contribs) 21:48, 15 December 2008 (UTC)

- I think there used to be a wikiproject on database dump analysis; I'm not sure if it's still active. I have the articles XML dump for all the pages from the October 8th database dump, and I can run an analysis on that for you. You might try pinging SQL, BJWeeks, X!, or someone else with a toolserver account to see if they want to do it. —Nn123645 (talk) 21:52, 15 December 2008 (UTC)

- You might try Wikipedia:Database reports. MBisanz talk 21:54, 15 December 2008 (UTC)

- See also User:WolterBot/Cleanup statistics. If you need more in-depth figures, let me know. --B. Wolterding (talk) 13:49, 16 December 2008 (UTC)

- That's an interesting page, but it seems to only be counting templates. For instance, I think there are way more than 130000 articles that don't have sources. I would love more in-depth figures. If a bot could check maybe 500 random articles and see what percentage has <ref> tags, I think that would be a large enough sample. Ideally, the bot could do the same to a dump of WP from 6 months ago, and 1 year ago. That would be a pretty accurate way of checking to see if we're doing a good job referencing articles or not, I think. Thanks. - Peregrine Fisher (talk) (contribs) 19:17, 16 December 2008 (UTC)

- I did something similar a few months ago, using all articles in the static html dump from June 2008. By counting the number of <ref></ref> tags I found that the average number of citations per paragraph was 2.07 for FA, 2.06 for GA, 0.87 for A class, 0.51 for B, 0.26 for Start, 0.14 for Stub and 0.15 for Unassessed articles. This was out of a total of 2,251,862 articles (disambig pages and obvious lists excluded); 1,625,072 (72%) had no <ref></ref>s, though some of these will have Harvard-style refs, e.g. (Smith 2000). Dr pda (talk) 23:46, 16 December 2008 (UTC)

(redent)Wow! Super cool. Would it be possible for you to do the same thing for the current version? I'm very curious to see how (or if) that 72% has changed. - Peregrine Fisher (talk) (contribs) 00:14, 17 December 2008 (UTC)

- The database dump I used was the static html one (which is basically the equivalent of downloading all pages on Wikipedia, 220 GB unzipped!). This is updated infrequently, so the June 2008 one is the most recent. The regular database dumps occur more frequently, but those are in xml format; I don't have the machinery set up to do this analysis on them. However I got the impression above that User:Nn123645 was willing to produce the statistics you wanted from the latest database dump. Dr pda (talk) 03:43, 17 December 2008 (UTC)

- Cool. Thanks for the help. - Peregrine Fisher (talk) (contribs) 03:46, 17 December 2008 (UTC)
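Two of the calculations in this thread are easy to sketch: the crawl-time estimate at one page per second, and the share of articles with no <ref> tag in a sample of wikitext. The function names are illustrative only, and the tag check is deliberately naive (it ignores Harvard-style references, as Dr pda notes above).

```python
import re

# "<ref>" or "<ref name=...>", but not "<references/>".
REF_TAG = re.compile(r"<ref[\s>]", re.IGNORECASE)


def crawl_days(pages, pages_per_second=1.0):
    """Days needed to fetch every page at a fixed request rate."""
    return pages / pages_per_second / 86400  # 86,400 seconds per day


def share_without_refs(wikitexts):
    """Fraction of the given article texts that contain no <ref> tag at all."""
    texts = list(wikitexts)
    if not texts:
        return 0.0
    missing = sum(1 for text in texts if not REF_TAG.search(text))
    return missing / len(texts)
```

With 2.66 million articles, `crawl_days(2_660_000)` comes out a little under 31 days, consistent with the "over 30 days" figure quoted above. Running `share_without_refs` over a random sample of a few hundred articles from a dump would give the kind of percentage Peregrine Fisher asks for.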

Bulk-replace URL for Handbook of Texas Online

| Namespace | Handbook | Server |
|---|---|---|
| (Article) | 1177 | 1197 |
| Talk | | |
| User | | |
| User talk | | |
| Wikipedia | | |
| Wikipedia talk | | |
| File | 0 | 1 |
| Template talk | 1 | 1 |
| Portal | 1 | 1 |

Request for a bot-replacement of the text from "http://www.tsha.utexas.edu/handbook/online/articles/" to "http://www.tshaonline.org/handbook/online/articles/" when used as a URL fragment in references per http://www.tsha.utexas.edu/handbook/online/articles/HH/fha33.html and http://www.tshaonline.org/handbook/online/articles/HH/fha33.html. This bot should not update non-article pages and it should not update the URL if it is used other than as a reference. A report should be generated so changes can be easily audited. There are over 1000 uses of this string in articles. davidwr/(talk)/(contribs)/(e-mail) 00:33, 18 December 2008 (UTC)

- Why shouldn't it update the URL if it's not used as a ref or on a non-article page? Unless there is a case of discussing the URL itself (like this section), I don't see how it could be anything other than an improvement. Mr.Z-man 00:58, 18 December 2008 (UTC)

- AnomieBOT can do this easily (it did something like this once before), but I'd like to read the answer to Mr.Z-man's question first. Anomie⚔ 01:06, 18 December 2008 (UTC)

- Actually, checking the links you provided, why shouldn't all links to any page on http://www.tsha.utexas.edu/ be changed to http://www.tshaonline.org/? Anomie⚔ 01:13, 18 December 2008 (UTC)

- I've added a count of pages per namespace using the requested link and using any link to www.tshaonline.org. Anomie⚔ 01:29, 18 December 2008 (UTC) And corrected the count to remove archive pages and Wikipedia process pages (e.g. AFD, VFD, CFD, this page). Anomie⚔ 01:50, 18 December 2008 (UTC)

- I started replacing some of these links by hand many months ago, but didn't get far. I suggest only replacing the ones in main space. Those in Talk space may in some cases be discussing the question of which link to use, so should be left alone. There is an official {{Handbook of Texas}} template and I'd recommend using that. If a link to Handbook of Texas appears under external links, it should be fixed there as well. Can someone keep a list of all the articles where the replacement is done? I remember that in some cases there were multiple occurrences of the link within one article and they were not all necessary. This is how I fixed up White Rock Lake. Here is what was done at Naval Air Station Dallas. Ideally there would be a human review of each change. Adding citation templates at the same time might be helpful. Perhaps AWB, or something? EdJohnston (talk) 02:09, 18 December 2008 (UTC)

- How ironic. This is exactly the sort of thing my pending BRFA (DeadLinkBOT) is designed to fix. It is even designed to allow easy transition to templates. No one seems to want to approve it though. Sigh --ThaddeusB (talk) 02:30, 18 December 2008 (UTC)

- Re: "Why shouldn't it update the URL if its not used as a ref or on a non-article page?" - I don't trust automated tools to make the semantic distinction between when it should be changed and when it should not be changed. Two obvious times when it should not be changed: When the phrase is being used literally, as in this discussion, when the link is being used as an example, as in this discussion, or in any archived discussion whatsoever. The benefits of replacing it in non-article pages or in article pages when it is not used as a reference are not worth the risk of harm. davidwr/(talk)/(contribs)/(e-mail) 18:32, 18 December 2008 (UTC)

- Is there any reason not to go ahead and run it in the article space? Whose approval are you waiting on? davidwr/(talk)/(contribs)/(e-mail) 18:32, 18 December 2008 (UTC)

- Not sure if this comment was directed at me or not, but if it was the answer is simply: Policy forbids the running of bots not approved by the Bot Approvals Group. My bot isn't approved, so I can't run it. I have tried to get some answers as to why, but no one seems willing to act (for reasons I cannot understand). As soon as I get approval, I'll be glad to help you. --ThaddeusB (talk) 19:06, 18 December 2008 (UTC)
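The narrow version of this request (replace the URL prefix only inside references, and keep a count for auditing) could be sketched as below. This is not AnomieBOT's or DeadLinkBOT's code; the function name is an assumption, and the pattern is conservative in that it skips self-closing <ref /> tags and any opening tag containing a slash.

```python
import re

OLD = "http://www.tsha.utexas.edu/handbook/online/articles/"
NEW = "http://www.tshaonline.org/handbook/online/articles/"

# An opening <ref> or <ref name=...> tag, its body, and the closing tag.
# "<references>" is not matched because "<ref" must be followed by space or ">".
REF_BODY = re.compile(r"(<ref(?:\s[^>/]*)?>)(.*?)(</ref>)", re.IGNORECASE | re.DOTALL)


def update_refs(wikitext):
    """Return (new_text, count): OLD replaced by NEW inside <ref> bodies only."""
    changed = 0

    def repl(match):
        nonlocal changed
        changed += match.group(2).count(OLD)
        return match.group(1) + match.group(2).replace(OLD, NEW) + match.group(3)

    return REF_BODY.sub(repl, wikitext), changed
```

Logging the page title alongside each non-zero count would give the audit list that davidwr and EdJohnston ask for; links under "External links" or in templates would need separate handling.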

Would it be possible for a bot to sweep through WP and change all instances of Manchester City Centre (note the capitalisation), to Manchester city centre? The article was renamed not so long ago, but the earlier name (now a redirect) persists. Shouldn't be a difficult bot to make I imagine. :) Thanks, --Jza84 | Talk 01:07, 19 December 2008 (UTC)

- This looks to fit under WP:R2D, unless something is now completely and utterly wrong with the link. ie The subject of the article changed. §hep • ¡Talk to me! 02:17, 19 December 2008 (UTC)

- Ooops! My apologies. I didn't realise there was a criteria covering redirects. I suppose I'll have to seek somebody out with AWB, unless there is a simple bot that can do this and is willing to overlook my ignorance (sorry!)? --Jza84 | Talk 02:36, 19 December 2008 (UTC)

- I don't think you understand, it has nothing to do with bots in particular; redirects that aren't broken should not be changed unless there is a reason to do so (such as the redirect needing to be used for a disambiguation page), this goes for bots, manually, and otherwise. Mr.Z-man 03:25, 19 December 2008 (UTC)

Need some redlinks to become redirects

Hi there. I'm looking for someone here to take a list of redlinked articles I've compiled and turn each one into a redirect. The list is located here. An example of what I need done is

Create:Addison Township, Gallia County, OH with #REDIRECT [[Addison Township, Gallia County, Ohio]]

Every link would be treated the same redirecting the page to Township, County, Ohio. Is this possible? Thanks! §hep • ¡Talk to me! 00:24, 19 December 2008 (UTC)

- Basically changing 'OH' to 'Ohio'? LegoKontribsTalkM 00:33, 19 December 2008 (UTC)

- Yes. I took all the links from List of townships in Ohio, switched Ohio to OH, and found which articles needed created. Once I got there I got stuck on how to make mass-redirects without it taking all night doing each one individually so I came here. Thanks! §hep • ¡Talk to me! 00:38, 19 December 2008 (UTC)

Coding... should be simple. LegoKontribsTalkM 00:51, 19 December 2008 (UTC)

- Cool. Thank you. §hep • ¡Talk to me! 00:52, 19 December 2008 (UTC)

Doing... LegoKontribsTalkM 01:59, 19 December 2008 (UTC)

Done LegoKontribsTalkM 15:31, 19 December 2008 (UTC)

- That was quick, thanks for the help! §hep • ¡Talk to me! 21:14, 19 December 2008 (UTC)
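The mapping requested in this thread is mechanical: take each existing "Township, County, Ohio" title, derive the "OH" form, and create it as a redirect to the original. A minimal sketch follows; the function name is hypothetical and the actual page creation (which Legobot handled) is left out.

```python
def redirect_for(target_title):
    """Map 'X Township, Y County, Ohio' to (redirect title, redirect wikitext)."""
    suffix = ", Ohio"
    if not target_title.endswith(suffix):
        raise ValueError(f"unexpected title: {target_title!r}")
    redirect_title = target_title[: -len(suffix)] + ", OH"
    return redirect_title, f"#REDIRECT [[{target_title}]]"
```

For the example given above, `redirect_for("Addison Township, Gallia County, Ohio")` yields the title "Addison Township, Gallia County, OH" with the text "#REDIRECT [[Addison Township, Gallia County, Ohio]]".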

DYKCheckbot

DYK gives credit to editors who write articles (creators) and to those who suggest DYK hooks (nominators). The creators and nominators are identified on the DYK suggestion page by parameters such as |creator=, |nominator=, |collaborator=, etc. DYK hooks approved on the suggestion page are manually copied to the Template:Did you know/Next update page. From there, they are manually copied into one of five queue pages Template:Did you know/Queue. The nominator and creator credits listed in the Queues are read by a bot and posted to the appropriate user talk page.

There is a significant difference between a creator and a nominator credit. Sometimes in manually moving the DYK hook information from the suggestion page to the next update page, the nominator is listed as a creator. For example, this diff shows that I was listed as a nominator for Careysburg, Liberia. This diff shows that the move of the information to the Next update template put me as a creator/maker via {{DYKmake|Careysburg, Liberia|Suntag}}. It should have read {{DYKnom|Careysburg, Liberia|Suntag}}. Five of my last thirty DYK hooks were miscredited due to this error. It is a common, human error and DYK is hoping a bot can help us. Bot request: Can you create a bot to read the credits in the history of the DYK suggestion page, compare them to the credits listed in the Queues, and output a report if there is a discrepancy between the two? -- Suntag ☼ 00:23, 17 December 2008 (UTC)

- The template used to post the DYK hooks to the DYK suggestion page was modified recently to include credit strings, which themselves can be copied to the Next Update page. That should significantly reduce the data transfer error rate, so there may now be no need for a DYKCheckbot. -- Suntag ☼ 19:14, 20 December 2008 (UTC)
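Although the request was withdrawn, the comparison it describes is simple to illustrate. The sketch below assumes the expected credits have already been extracted from the suggestion page into a dictionary (that step depends on the suggestion template's exact syntax and is not shown), and it parses only the {{DYKmake|article|user}} and {{DYKnom|article|user}} forms quoted above. All function and variable names are hypothetical.

```python
import re

# {{DYKmake|Article|User}} or {{DYKnom|Article|User}}, as quoted in the request.
CREDIT = re.compile(
    r"\{\{\s*(DYKmake|DYKnom)\s*\|\s*([^|}]+?)\s*\|\s*([^|}]+?)\s*\}\}"
)
ROLE = {"DYKmake": "creator", "DYKnom": "nominator"}


def queue_credits(queue_wikitext):
    """Return {(article, user): role} for each credit template in a queue page."""
    return {
        (article, user): ROLE[template]
        for template, article, user in CREDIT.findall(queue_wikitext)
    }


def discrepancies(expected, queue_wikitext):
    """List (article, user, expected role, queued role) where the two disagree."""
    found = queue_credits(queue_wikitext)
    return sorted(
        (article, user, role, found[(article, user)])
        for (article, user), role in expected.items()
        if (article, user) in found and found[(article, user)] != role
    )
```

Applied to the Careysburg, Liberia case, an expected "nominator" credit against a queued {{DYKmake}} would be reported as a mismatch.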

Most Redlinked Bot

There are tons of requested articles on Wikipedia, but most items in this list are very minor, not notable, and don't have any links to them. It would be useful to have a list of the most requested nonexistent articles, found by the most common redlinks. This would be a huge benefit to WP: plenty of people want to create new articles, but the RA list is daunting and resources would be better directed towards articles that are already linked to.

There have been previous projects like this, but have all been discontinued - apparently due to changes in proxy regulations because of vandals. But perhaps a bot could do this job - search for redlinks and dump the results on a ranking page, perhaps? FlyingToaster 07:23, 4 December 2008 (UTC)

- The only practical way to do this is on the tool server or via database dumps since Special:WantedPages is disabled. —Nn123645 (talk) 12:30, 4 December 2008 (UTC)

- Fair enough, I was afraid of that. Do you know who I should talk to about doing it in one of these? FlyingToaster 16:50, 4 December 2008 (UTC)

Coding... I'm in the process of generating the statistics from the October 8th Database dump. I should have them by Saturday or so. —Nn123645 (talk) 21:23, 4 December 2008 (UTC)

- It could also possibly be implemented as a report with the Report tool. — Dispenser 15:46, 5 December 2008 (UTC)

- This is taking longer than expected. I am importing the pagelinks table dump. Last time I checked, which was about 4 hours ago, I was at 50 million records. Right now I'm averaging about 30,000 rows a minute. It has been going for over 20 hours and shows no signs of stopping anytime soon. —Nn123645 (talk) 01:12, 6 December 2008 (UTC)

- As an update on the status the pagelinks table import failed most of the way through. I am considering using the api to get the information as it would be possible to do it without pulling the actual wikitext for each page. —Nn123645 (talk) 04:58, 11 December 2008 (UTC)

- How do you want to do this using the api? I think your best bet is using a database dump. It'll be a lot of work to actually get the results, which is why the special page has been disabled, but you can then run it on your own computer. It is indeed also possible to get the results from the toolserver. I wrote a query for that, but I only use it at w:nds-nl, a wiki with about 5,000 articles. I tried running it for nlwiki once, but that took too much time. So it definitely won't work for this wiki. --Erwin(85) 10:13, 14 December 2008 (UTC)

- I was planning to use all pages as a generator and use the link properties module. At 5,000 links per query, and 1,000 pages I should be able to do this in under 3,000 API queries, which if I use a low maxlag shouldn't have too big an influence on the servers. I wrote a reply to this earlier but my browser crashed before I had a chance to save. Lately I've been busy but I should be able to get around to it this weekend along with the references statistics request mentioned below. —Nn123645 (talk) 15:07, 19 December 2008 (UTC)

- What do you mean by the number of pages and what are the figures for the number of pages and queries based on? I'm still pretty skeptical, but if you figure out a way to do this I'm sure a lot of projects will be interested. --Erwin(85) 10:57, 21 December 2008 (UTC)

- I'm basing the number of pages off of Special:Statistics. The 5,000 per query is the maximum limit for anyone who has the API High Limits right, which here is Bots and Admins (see Special:ListGroupRights). I may end up lowering the number of pages, but I was planning on using something like this API Query to get the information, then logging it to a db table. It does appear that MediaWiki does the links query in order of page id, rather than in the alphabetical order that allpages uses, which would make sense, since the way the pagelinks table is set up pl_from is the pageid of the page the link is from and pl_title is the name of the page the link is to. To my knowledge there is no way to have allpages list pages in order of pageid rather than by alphabetical order. This makes the output a bit tricky to work with, but it should work. Looking at it again, 3,000 queries may have been a big underestimate, since to get the number of under 3,000 I was figuring an average of 5 links per page (2.66 million pages / 1,000 pages per query = 2,660 queries to get through all the pages, then add a pad for when you will have to do query continue queries). Looking at some of the API results for the bigger articles I can see there are more like 50 links per page or so. Based on Special:Statistics there is an average of 17.34 edits per page, which would lead one to believe there would not be many links. Until I get a decent idea of what the average links per page is, or how many total links there are on Wikipedia, I can't really know how many queries it's going to take to get all the pagelinks. Though even at an average of 100 links per page you would still be able to pull all the links in under 55,000 API queries, which shouldn't put too much load on the servers as long as they are spread out (say one query every 30 seconds or so, with a maxlag of 2).

- Another option is to use the All links query and then use the list of page titles from the database dump to see which pages exist. If a page doesn't exist you could always do an api query to see if the page exists or not. This would most likely increase the number of queries it would take to get the results, but it would most likely cut down on the amount of resources needed per query. By doing that mediawiki would only have to query the pagelinks table, rather than query the pages table to get the allpages generator result then use the results from that and query the pagelinks table. —Nn123645 (talk) 16:55, 21 December 2008 (UTC)
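Note: the estimate in the comments above can be reproduced with a short Python sketch. The function name and the simplifying model are illustrative assumptions: each request is treated as capped both by pages per request and by links per request, and the extra requests caused by query-continue are ignored. The page count and limits are the figures quoted in the thread.

```python
def estimate_queries(total_pages, avg_links_per_page,
                     pages_per_query=1000, links_per_query=5000):
    """Rough lower bound on the API requests needed to read every page link."""
    # Requests needed if the page limit were the only constraint.
    by_pages = (total_pages + pages_per_query - 1) // pages_per_query
    # Requests needed if the link limit were the only constraint.
    total_links = total_pages * avg_links_per_page
    by_links = (total_links + links_per_query - 1) // links_per_query
    # Whichever limit binds first decides the number of requests.
    return max(by_pages, by_links)


# Figures from the thread: 2.66 million pages, 5 / 50 / 100 links per page.
for avg in (5, 50, 100):
    print(avg, estimate_queries(2_660_000, avg))
```

Under this model, 100 links per page works out to 53,200 requests, which is consistent with the "under 55,000" figure given above.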

- I was planning to use all pages as a generator and use the link properties module. At 5,000 links per query, and 1,000 pages per query, I should be able to do this in under 3,000 API queries, which if I use a low maxlag shouldn't have too big an influence on the servers. I wrote a reply to this earlier but my browser crashed before I had a chance to save. Lately I've been busy but I should be able to get around to it this weekend along with the references statistics request mentioned below. —Nn123645 (talk) 15:07, 19 December 2008 (UTC)

Change of brackets

The 259 deprecated {{coor d}} templates on Cities of the Ancient Near East have a name parameter, many of which include square brackets, for example: name=Eshnunna [Tell Asmar]. These need to be changed to parentheses, like name=Eshnunna (Tell Asmar) in order that the templates can be converted to {{Coord}}. The presence of square brackets elsewhere in the raw code precludes a simple search-and-replace. Can someone do the necessary, please? Andy Mabbett (User:Pigsonthewing); Andy's talk; Andy's edits 21:35, 23 December 2008 (UTC)

Done - a simple regex took care of it: "name=([^\[}]*)\[([^\]]*)\]" -> "name=$1($2)" --ThaddeusB (talk) 21:57, 23 December 2008 (UTC)

- That's great; thank you. Andy Mabbett (User:Pigsonthewing); Andy's talk; Andy's edits 22:08, 23 December 2008 (UTC)
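Note: for readers who want to try the regex quoted in this thread, here is a minimal Python sketch. The pattern is the one ThaddeusB posted, with $1/$2 written as \1/\2 for Python. The function name and the sample wikitext (including its coordinates) are made up for illustration.

```python
import re

# Pattern as quoted in the thread: text after "name=" up to the first "[",
# then the bracketed part. "}" is excluded so a match cannot leave the template.
NAME_BRACKETS = re.compile(r"name=([^\[}]*)\[([^\]]*)\]")


def fix_name_brackets(wikitext):
    """Turn name=Foo [Bar] into name=Foo (Bar); other brackets are untouched."""
    return NAME_BRACKETS.sub(r"name=\1(\2)", wikitext)


# Hypothetical sample line; the wikilink brackets must survive unchanged.
sample = "[[Eshnunna]] {{coor d|33|29|N|44|35|E|name=Eshnunna [Tell Asmar]}}"
print(fix_name_brackets(sample))
```

Because the pattern is anchored on "name=", square brackets elsewhere in the raw code (wikilinks, external links) are left alone, which is why a plain search-and-replace was not enough.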

Could someone please get a bot to sort each of the articles in Category:Blackpool F.C. seasons by season? For example, Blackpool F.C. season 1896–97 should have [[Category:Blackpool F.C. seasons|1896-97]] at the bottom, and Blackpool F.C. season 2007–08 should have [[Category:Blackpool F.C. seasons|2007-08]] at the bottom. If anyone could do this for me, that would be much appreciated. Thanks. – PeeJay 20:03, 23 December 2008 (UTC)

- By the way, the reason I'm not doing this myself is because there are over 100 articles in the category. – PeeJay 09:22, 24 December 2008 (UTC)
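Note: the sort key requested above can be derived from the article title alone. The following Python sketch is only an illustration; the function name is hypothetical, and it assumes every title follows the "Blackpool F.C. season YYYY–YY" pattern shown in the request.

```python
import re

# Accept an en dash or a hyphen in the title; the sort key uses a hyphen,
# as in the examples given in the request.
SEASON_TITLE = re.compile(r"^Blackpool F\.C\. season (\d{4})[–-](\d{2,4})$")


def season_category(title):
    """Return the category line with its sort key, or None if the title does not fit."""
    match = SEASON_TITLE.match(title)
    if match is None:
        return None
    start, end = match.groups()
    return "[[Category:Blackpool F.C. seasons|%s-%s]]" % (start, end)


print(season_category("Blackpool F.C. season 1896–97"))
```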

Calling all bot and script writers!

I just discovered Wikipedia:WikiProject Check Wikipedia and it is awesome. Any help in cleaning out some of these backlogs would be very much appreciated. Cheers. --MZMcBride (talk) 06:45, 24 December 2008 (UTC)

- I can't say I agree with most of the "these HTML tags aren't needed" comments there, sometimes the HTML style syntax is better. For example, you can't supply inline CSS styles for a wikitext list, and sometimes having lots of apostrophes around can confuse the parser where <b>/<i> won't. Anomie⚔ 14:44, 24 December 2008 (UTC)

- Most of the problems are right up AWB's alley but not really bot fixable. BJTalk 15:07, 24 December 2008 (UTC)

A newbie welcoming bot?

That would be nice, cuz then ppl don't have to be on 24/7 to keep up w/ the constant stream of users. If u know how, contact me, and tell me what to do for it.

- See Wikipedia:Bots/Frequently denied bots. Xclamation point 15:26, 24 December 2008 (UTC)

- Bots just don't have that personal touch :). Imagine if you lived in the world of The Jetsons and you just moved into your new apartment and someone sent Rosie the Robot Maid over with a pie to welcome you. It's just not the same. davidwr/(talk)/(contribs)/(e-mail) 16:38, 24 December 2008 (UTC)

Converting "as of" links

It's a request to change the deprecated "as of" links (as of 2000, etc) to the new system {{as of}}. Explicitly, it should remove the as of links, listed here, and replace them with {{As of|year}} or {{As of|year|month}}. The only little problem is that the template is case-sensitive: if the link is lowercase, it should add lc=on. The case where there is a day is more complicated. Those are less common, though, so that case can be revisited later or done (semi-)manually. This is completely supported by consensus: the as of links are deprecated and the new mosnum is even more strict on date links, while the as of template is widely used. Cenarium (Talk) 23:13, 25 December 2008 (UTC)

- Lightbot has already been used for this, see for example [1]. You could discuss further work with the operator. PrimeHunter (talk) 23:50, 25 December 2008 (UTC)

- I have done that. Thanks for the information, Cenarium (Talk) 14:17, 26 December 2008 (UTC)
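Note: a rough Python sketch of the conversion described in this request follows. It is not Lightbot's code. The function name is hypothetical, it handles only unpiped links of the form [[As of 2000]] or [[as of December 2008]], and it assumes the template takes the month as a number, which should be checked against the {{As of}} documentation before use. Links that include a day are deliberately left unchanged, as the request suggests.

```python
import re

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]

# [[As of 2000]], [[as of 2000]], [[As of May 2000]]; links with a day do not match.
AS_OF_LINK = re.compile(
    r"\[\[([Aa])s of (?:(" + "|".join(MONTHS) + r") )?(\d{4})\]\]"
)


def convert_as_of_links(wikitext):
    """Replace deprecated 'as of' links with {{As of}} template calls."""
    def build_template(match):
        first_letter, month, year = match.groups()
        parts = ["As of", year]
        if month:
            # Assumption: the month parameter is numeric (1-12).
            parts.append(str(MONTHS.index(month) + 1))
        if first_letter == "a":
            # Lowercase link: keep the lowercase rendering.
            parts.append("lc=on")
        return "{{" + "|".join(parts) + "}}"

    return AS_OF_LINK.sub(build_template, wikitext)


print(convert_as_of_links("[[As of 2000]], and [[as of December 2008]]"))
```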

Move date articles to Portal namespace

Most articles in Category:Days in 2005 and Category:Days in 2003 need to be moved out of article space to Portal space.

Rather than having the article February 12, 2005, it should be in the portal namespace at Portal:Current events/2005 February 12, like the more recent Portal:Current events/2008 November 23. We should have articles for month year (February 2005), but not month day, year. They, as they are not actual articles, should be in the portal namespace and transcluded to the month article, like how Portal:Current events/2008 September 6 is transcluded to September 2008.

This is already a set precedent, and this is how it has been done for more recent years. Earlier years, however, were not updated and moved to Portal:Current events. Thanks Reywas92Talk 02:37, 27 December 2008 (UTC)
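Note: the title mapping requested above is mechanical, as the following Python sketch shows. The function name is hypothetical, and the sketch only computes the target title; the move itself and the transclusion into the month article are not covered.

```python
import re

# Matches article titles such as "February 12, 2005".
DAY_TITLE = re.compile(r"^([A-Z][a-z]+) (\d{1,2}), (\d{4})$")


def portal_title(article_title):
    """Map 'Month D, YYYY' to 'Portal:Current events/YYYY Month D', else None."""
    match = DAY_TITLE.match(article_title)
    if match is None:
        return None
    month, day, year = match.groups()
    return "Portal:Current events/%s %s %s" % (year, month, day)


print(portal_title("February 12, 2005"))
```

Month articles such as "February 2005" do not match the pattern and are left where they are.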

DYK success in leading to GA and FA articles

(reposted from here) Suntag, I noticed your conversation with Daniel Case, and I just had an idea that seems like it might be up your alley.... I don't know how we would go about actually implementing this or keeping track of these kinds of things, but maybe it would be cool to have a list of DYK articles that go on to make GA or FA. (since the DYK credit template is transcluded in the talk page, the easiest way might be to have a bot that goes through all pages where that is transcluded, and return all pages that also have GA or FA in their {{ArticleHistory}}.) Of course, who knows, maybe such a list would just be embarrassing (what if we find that 95% of DYK articles stagnate and get ignored after their big day?) but if not, it may be fun to think about. —Politizer talk/contribs 16:44, 23 December 2008 (UTC)

- Bot request - Per the above, please have a bot revise the }} portion of the {{dyktalk}} template to read |GA=yes}} for each talk page listed in Category:Wikipedia Did you know articles that also is listed in Category:Wikipedia good articles. In addition, please have the bot revise the }} portion of {{dyktalk}} template to read |FA=yes}} for each talk page listed in Category:Wikipedia Did you know articles that also is listed in Category:Wikipedia featured articles. The bot tagging will populate Category:Wikipedia Did you know articles that are good articles and Category:Wikipedia Did you know articles that are featured articles. Thanks. -- Suntag ☼ 17:08, 23 December 2008 (UTC)

Coding... Please have the template edited to support those parameters so I can finish the coding. Anomie⚔ 17:52, 23 December 2008 (UTC)

- The edited template code is at User:Politizer/Dyktalk, but I'm waiting for an admin to paste it into Template:Dyktalk (which is protected). Also, if it's not too late, can you change your code to fill in FC=yes rather than FA=yes (simply because there might be some featured lists as well as featured articles)? —Politizer talk/contribs 20:26, 23 December 2008 (UTC)

- Actually, shouldn't it be ok to run the bot before the template is ready? The template is transcluded in talk pages, so the worst that can happen if you add stuff like |GA=yes to the template calls, is that it will have a dummy parameter sitting around doing nothing...and then as soon as the template code itself is edited then those parameters would start working. (I assume that's what would happen?) —Politizer talk/contribs 20:29, 23 December 2008 (UTC)

- Oh, one last thing...you probably know this already, but just in case: I believe the bot will be run repeatedly (every month or so, something random like that) so I guess it would be necessary to have some code telling it not to add |FC=yes or |GA=yes if the template already has that text. Apologies if you already knew about that; I figured I should just mention it in case. —Politizer talk/contribs 20:33, 23 December 2008 (UTC)

- Easy to add "FC" instead of "FA". It would be nice if the parameters were functional before the run, but only because the bot can skip loading the page text at all when it sees the correct categories present (which saves time and bandwidth); it'll work fine without it, just slightly slower, and once the template is updated you'll have to wait for the job queue to get around to populating the categories. I wanted to see the code just to make sure the parameters weren't named "ga" instead of "GA" or anything like that and to see if "Yes", "YES", "1", or anything else would be accepted in addition to "yes" (there's no need for anything besides "yes", but sometimes people code templates to support the extra possibilities). I did already add code to check if GA/FC is already present, and in fact I also included code to remove the GA/FC if the page has been demoted. Anomie⚔ 20:49, 23 December 2008 (UTC)

BRFA filed Wikipedia:Bots/Requests for approval/AnomieBOT 17. Anomie⚔ 23:24, 23 December 2008 (UTC)

- Awesome! I could probably add functionality for "Yes," "YES," and "1" if you want (I imagine 1 is a lot more common than Yes and YES), but for now I won't go changing anything while you're still working the bot. —Politizer talk/contribs 00:13, 24 December 2008 (UTC)

- No, leave it as just "yes". Anomie⚔ 01:51, 24 December 2008 (UTC)

- Ok, that's fine with me. Just so you know, there is a discussion here about this...as it turns out, a lot of articles have the {{ArticleHistory}} template, rather than the {{DYKtalk}} template, on their talk page, so things might get a little more complicated than we thought (since any bot that runs a couple times to populate the categories Category:Wikipedia Did you know articles that are good articles and Category:Wikipedia Did you know articles that are featured articles will probably have to be able to deal with that template as well). —Politizer talk/contribs 21:34, 26 December 2008 (UTC)

- The bot shouldn't have to care about ArticleHistory, as that shouldn't need any extra parameters to add the categories. Anomie⚔ 02:20, 27 December 2008 (UTC)

- Yep, I think that's correct...I just realized that and made a note of it in the discussion at WT:DYK. —Politizer talk/contribs 16:03, 27 December 2008 (UTC)

Note: I've withdrawn the BRFA as it seems this is no longer wanted. Hopefully Gimmetrow updates GimmeBot to convert the 76 or so uses of {{dyktalk}} on GA/FA pages to {{ArticleHistory}}. Anomie⚔ 04:11, 28 December 2008 (UTC)
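Note: although the task was withdrawn, the "add the parameter only if it is not already there" behaviour discussed earlier in this thread is a common bot pattern. The Python sketch below illustrates it. It is not AnomieBOT's code, the function name is hypothetical, and it assumes the {{dyktalk}} call contains no nested templates.

```python
import re

# A {{dyktalk ...}} call without nested templates (simplifying assumption).
DYKTALK = re.compile(r"\{\{\s*dyktalk\b([^{}]*)\}\}", re.IGNORECASE)


def set_flag(wikitext, flag):
    """Append |flag=yes to each {{dyktalk}} call that does not already set flag."""
    def add_if_missing(match):
        params = match.group(1)
        if re.search(r"\|\s*" + re.escape(flag) + r"\s*=", params):
            return match.group(0)  # already set: leave the call untouched
        inner = match.group(0)[2:-2]  # text between the outer braces
        return "{{" + inner + "|" + flag + "=yes}}"

    return DYKTALK.sub(add_if_missing, wikitext)


once = set_flag("{{dyktalk|5 December|2008}}", "GA")
twice = set_flag(once, "GA")
print(once, once == twice)
```

Running the function a second time changes nothing, which is the property that makes repeated monthly runs safe.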

MoveBOT

I'm moving a bunch of articles to comply with WP:DASH, and things would be a lot easier if there was a bot that could handle the moving, double redirect checking etc...

The links are compiled here : User:Headbomb/Move

The bot would move them to the en dash version automatically, correct article text to use en dashes, then check and fix double redirects. Is this doable? Headbomb {ταλκκοντριβς – WP Physics} 08:54, 18 December 2008 (UTC)

Coding... LegoKontribsTalkM 00:02, 19 December 2008 (UTC)

- Cool beans. Headbomb {ταλκκοντριβς – WP Physics} 01:42, 20 December 2008 (UTC)

- Restored from the archives. Headbomb {ταλκκοντριβς – WP Physics} 11:19, 29 December 2008 (UTC)

Coordinate template conversion

Many of the sub-pages of List of United Kingdom locations use {{coor d}}, which was deprecated some time ago. I thought these had already been changed to {{Coord}}; but apparently not. Can someone do that, please? A simple change of the string {{coor d to {{coord should be all that's needed, but there are a lot of instances on a good many pages. Andy Mabbett (User:Pigsonthewing); Andy's talk; Andy's edits 22:16, 23 December 2008 (UTC)

Need someone to take over my bots

I'm leaving and so I need an admin who is willing to take over User:DYKBot and User:DYKadminBot. The source code is here (PHP) although it has some problems. Anyone willing to do this? ~ User:Ameliorate! (with the !) (talk) 02:13, 28 December 2008 (UTC)

- I volunteer myself :-) If that is ok. I already run one bot myself (User:LoxyBot) and have another task in creation. I have access to a stable server and am applying for access to the Toolserver. Foxy Loxy Pounce! 23:22, 29 December 2008 (UTC)

- I can run DYKBot, but I'm not an admin so I can't run DYKadminBot. Let me know if you'd like me to (I do have much coding experience, and I run my own bot). 2DC 23:23, 29 December 2008 (UTC)

- The problem, Foxy Loxy, is that in order to run DYKadminbot, you would likely have to be an admin to run it. I would run it, but I don't have enough experience with DYK. Xclamation point 23:28, 29 December 2008 (UTC)

- Ah yes, good point. Well, I suppose that pulls me out of volunteering. Foxy Loxy Pounce! 23:34, 29 December 2008 (UTC)

- Thanks for the offers, however Nixeagle has agreed to take over the bots. 125.238.97.30 (talk) 03:20, 30 December 2008 (UTC)

Wikify List of townlands in County Kilkenny

The web version of table2wiki.py choked on List of townlands in County Kilkenny, returning an empty page. It's a 400K+ source file that needs to be wikified. This should trim the source down considerably. Thanks. davidwr/(talk)/(contribs)/(e-mail) 02:38, 30 December 2008 (UTC)

Done No need for a bot, just a little script-assisted editing. Anomie⚔ 02:52, 30 December 2008 (UTC)

- I went ahead and consolidated all the "align=center"s into a single "text-align:center" which lopped another 24K off the file size for you. --ThaddeusB (talk) 04:07, 30 December 2008 (UTC)

- Can I ask what script you used? §hep • ¡Talk to me! 22:02, 30 December 2008 (UTC)

- I used It's All Text to load it into Vim, then piped the relevant portion of the page through a quickie Perl one-liner. I don't have the one-liner anymore, but it was probably something like this:

perl -nwe 'BEGIN { $first=0; } if(/^<tr>$/){ print "\n|- align=\"center\"\n"; $first=1; } elsif(m{^\s*$|^</tr>$}){ } elsif(m{^<td align="center">(.*)</td>$}){ print " |" unless $first; print "| $1"; $first=0; } else { die "WTF?"; }'

Not the prettiest or most efficient code ever, but it worked. Anomie⚔ 22:34, 30 December 2008 (UTC)

Gotcha. Thanks, §hep • ¡Talk to me! 22:37, 30 December 2008 (UTC)

New page patrol bot- new proposal

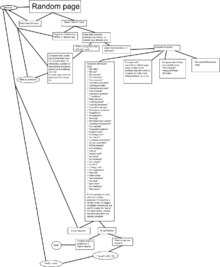

Hello, I have no background in computer programming, but I have patrolled new pages more than a couple of times. It seems that some of the basic tasks there could be automated. First, I take it for granted that most pages that are speedy-deleted are created by relatively new users, and that the longer a user has been here, the less likely to create pages that are speedy-worthy. As I said, I have no knowledge of programming, but I have put together a flow-chart that approximates the bot's modus operandi. It contains arrays for blatant advertising (the blacklist there is borrowed from User:Bishonen), and I'm sure that more experienced users will be able to think of more blacklist terms to put in the other arrays. Speedy deletion is always a sensitive matter, especially when dealing with users who are potentially valuable new users, so the arrays will have to be fairly complex. Contrary to how they are portrayed in the chart, I now imagine them to compose a single, large array with +/- points in multiple axes, i.e. -5 vandalism, +5 advertising, -5 exempt, instead of the linear system used by ClueBot.

What it would do

- tag obvious vandalism pages, attack pages, advertising pages, vanity pages, and pages with little or no content for speedy deletion

- mark pages that meet a "quality" heuristic and are created by experienced users as patrolled

- notify the author of pages that it tags, and mark those pages as patrolled

What it would not do

- Tag pages older than 24 hours, pages that have been marked as patrolled, pages outside the main article space, pages that already have speedy delete tags, or pages that have been created by users with more than 2000 edits.

- Delete pages- this is still left up to the reviewing administrator
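Note: the exclusions in the "What it would not do" list above amount to a single eligibility check, sketched here in Python. The function name and the dictionary keys are hypothetical and do not correspond to any real bot framework or API; the thresholds (24 hours, 2000 edits, main article space) are the ones stated in the list.

```python
from datetime import datetime, timedelta, timezone


def may_tag(page, now):
    """Return True only if none of the listed exclusions applies.

    `page` is a hypothetical dict; its keys are illustrative only.
    """
    return (
        now - page["created"] <= timedelta(hours=24)   # not older than 24 hours
        and not page["patrolled"]                      # not already patrolled
        and page["namespace"] == 0                     # main article space only
        and not page["has_speedy_tag"]                 # not already tagged
        and page["creator_edit_count"] <= 2000         # creator is not a veteran
    )


now = datetime(2008, 12, 31, 4, 0, tzinfo=timezone.utc)
new_page = {
    "created": now - timedelta(hours=2),
    "patrolled": False,
    "namespace": 0,
    "has_speedy_tag": False,
    "creator_edit_count": 12,
}
print(may_tag(new_page, now))
```

Passing this check would only make a page a candidate; deciding whether it is actually speedy-worthy is the hard part that the rest of the thread discusses.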

Any comments or suggestions would be welcome. Lithoderm 03:47, 31 December 2008 (UTC)

- IMO, this is a good suggestion and well thought out. I'd probably be willing to work on some code, but I'd like to hear other opinions about the feasibility/usefulness of this project before committing my time to it. :) --ThaddeusB (talk) 04:55, 31 December 2008 (UTC)

- You may wish to compare this with user:JVBot, which is already doing a massive amount of patrolling based on a (fully-protected) whitelist; users are added to the whitelist if they've created at least 75-100 valid articles and there's no reason to believe their articles would be problematic. DS (talk) 05:04, 31 December 2008 (UTC)

- However, that bot does not tag articles for speedy deletion... a new part of this bot's function. Lithoderm 05:14, 31 December 2008 (UTC)

- I know ClueBot V was supposed to do this but there were problems with false positives. —Nn123645 (talk) 16:08, 31 December 2008 (UTC)

- Is the source code of Cluebot V available? That might help the eventual programmer of this bot. The trick, I would suppose, is to make the parameters so clear that only the most obviously worthless pages are tagged-- I don't intend this bot to go after subtle vandalism or hoaxes. I notice that there were some objections to the very idea of a bot tagging pages for speedy deletion, but from what I've seen it would be helpful. I don't know how many pages I've seen that were merely fragments of wikisyntax, or a single, disparaging sentence. For the no content/nonsense array, you could include a parameter of >20 characters- something very small- long strings of capital letters, etc. Because getting it right is important, we could first try coding it to only recognize one type of speedy-able page, like advertising, and then expand its function into other CSD. Lithoderm 16:41, 31 December 2008 (UTC)

- As a matter of fact, it is available here. Xclamation point 17:02, 31 December 2008 (UTC)

- Well, just looking at it without knowing PHP, I can see that it includes a variety of db tags... I think that 200 characters may be a little high for no context, and I would ignore tagging pages with unreferenced and other maintenance tags, and also there is only a single parameter for db-nonsense- the original cluebot looks better thought out.. I've invited User:Cobi to comment on this proposal. We could always code a single type of db first, and try it out on sub-pages of the user-space, or at the test wiki. Lithoderm 17:27, 31 December 2008 (UTC)

Cleanup task

I'm requesting that a bot remove the outdated transclusions of Template:Afd-mergefrom. This would be accomplished by checking to see whether the page title supplied as the first parameter now is a redirect (and if so, whether it leads to the page corresponding to the talk page on which the template is transcluded). If both conditions are met, the template should be removed. If only the first condition is met, the talk page on which the template is transcluded should be added to a list for manual analysis. If neither condition is met, no action should be taken. —David Levy 03:13, 18 December 2008 (UTC)

- Comment - This template is part of the instructions at Wikipedia:Articles for deletion/Administrator instructions. -- Suntag ☼ 19:19, 20 December 2008 (UTC)

- Indeed, the template is used to inform users of consensus established at AfD to merge another article's content into the article associated with the talk page on which the tag appears. This is a request for a bot to remove outdated advice to perform mergers that already have been completed. —David Levy 02:13, 21 December 2008 (UTC)

- De-archived request. —David Levy 20:52, 29 December 2008 (UTC)

BRFA filed Wikipedia:Bots/Requests for approval/AnomieBOT 20. Please let me know how often you'd like the thing to run, where it should report, and whether removing the {{afd-mergefrom}} when the AFDed page has been deleted is a good idea. Anomie⚔ 22:52, 29 December 2008 (UTC)

- I'm thinking that once per month would be a reasonable frequency.

- The current report location (User:AnomieBOT/Afd-mergefrom report) is sensible.

- I hadn't considered the scenario in which an AFD-nominated page has been deleted. In such a case, I'd say that the appropriate behavior would be to remove the template (because it no longer is useful) and add a separate notation to the report page (because a sysop should check whether the deletion was appropriate).

- Thanks very much for setting this up! —David Levy 11:15, 30 December 2008 (UTC)

- I'll probably leave it as more frequent than once per month, just so when people fix issues then the bot will update the report page within a reasonable period of time. Most of the time, of course, the bot won't actually make any edits because there won't be anything to change. Anomie⚔ 17:37, 30 December 2008 (UTC)

- Ah, that makes sense. Thanks again for doing this! —David Levy 00:42, 1 January 2009 (UTC)
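Note: the decision rules agreed in this thread can be summarised as a small Python function. This is a sketch of the logic only, not AnomieBOT's code; the function name, argument names, and return labels are hypothetical.

```python
def decide(source_exists, redirect_target, article_title):
    """Decide what to do with one {{afd-mergefrom}} transclusion.

    source_exists   -- False if the AfD-nominated page has been deleted
    redirect_target -- title the nominated page redirects to, or None
    article_title   -- article belonging to the talk page carrying the template
    """
    if not source_exists:
        # Deleted page: remove the template and flag it for a sysop to check.
        return "remove_and_report"
    if redirect_target is None:
        # Not a redirect: the merge may still be pending, so do nothing.
        return "no_action"
    if redirect_target == article_title:
        # Redirects to this article: the merge is done, so remove the template.
        return "remove"
    # Redirects somewhere else: list for manual analysis.
    return "list_for_review"


print(decide(True, "Example article", "Example article"))
```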

General Purpose Bot(s)

I was wondering if someone could make a bot(s) (or copy the source code of) a bot that could do some/all of the following general use tasks:

In no specific order:

- Check for double redirects, and redirect them to the proper target.

- Automatically assign stub-class to NEW articles with less than some arbitrary number of words, say 200? Make sure to use new article list from recent changes, not the random article button.

- Any more non-controversial, general tasks that are unlikely to go wrong.

As well as:

- Have a shut-off button for administrators and myself.

- Can be programmed with some basic preferences, including when to run and how often.

- Follow Maxlag parameter with standard 5 seconds.

I have very little programming experience, but am willing to learn to operate such a bot. I simply need someone to create one for me. Thank you very much. Ninja Wizard (talk) 21:20, 31 December 2008 (UTC)

- These tasks often require human judgement (except for the double redir. fixing which is done by another bot) and therefore an automatic bot would not be a good idea (i.e. spelling 'mistakes' may be intentional, a human would need to check the context). However, using AWB in manual mode with the 'general fixes' and RegExTypoFix enabled wouldn't be a bad idea (and it doesn't require bot approval). RichardΩ612 Ɣ ɸ 11:00, 1 January 2009 (UTC)

JerryBot

Template:Main transclusion target link examiner bot

See also: Denied Bugzilla Request

Could somebody please create a bot for me that would do the following:

- Import Special:Whatlinkshere/Template:Main

- For each entry in the above list, find each transclusion of Template:Main

- For each such transclusion, verify that the target linked is not a dab, redlink, or redirect

- As dabs are found, generate a list of pages that require updates

- As Redlinks are found, generate a list of pages that require investigation

- As redirects are found, replace the target link with that of the redirect, and generate a log of such actions

Additional requirements:

- The bot should employ an emergency shutdown button feature

- The bot should be configurable via an onwiki parameter page:

- limit size of each log to 'x' entries

- configure how often to run

I would like to be able to run this as *MY* bot... so I am looking for a bot-creator who is willing to make this bot for me. I will then go through the process of getting it approved.

Thanks, Jerry delusional ¤ kangaroo 01:21, 22 December 2008 (UTC)

- This should be possible, but I'll need to do some fiddling with code. I'll see what I can do and get back to you. Foxy Loxy Pounce! 08:16, 23 December 2008 (UTC)

- Ok, I've written several base functions for the detection of dab pages etc successfully, I'm now writing the code for the actual bot. Foxy Loxy Pounce! 13:29, 23 December 2008 (UTC)

- IMO, this is probably something that'd be better served with a DB dump, there's *a lot* of pages that transclude {{Main}}. Q T C 17:25, 23 December 2008 (UTC)

- I agree, according to the API, there's 71,030 pages that use the template. This bot would have to load each page that transcludes it and the target page. That's over 140,000 page loads per run if each article only uses it once with one target. Mr.Z-man 19:13, 23 December 2008 (UTC)

Not done then. I'm afraid I have no experience with database queries and do not have the bandwidth to download database dumps. I'll have to give this task to another user. Foxy Loxy Pounce! 20:53, 23 December 2008 (UTC)

Using the API is fine. --MZMcBride (talk) 00:29, 24 December 2008 (UTC)

- Hm, I guess if it does it efficiently, using prop=templates and &redirects rather than just pulling the page text for all the target pages it would be fine. Also in reply to Foxy Loxy, the database dumps that include page text are in the XML format used by Special:Export. Mr.Z-man 00:38, 24 December 2008 (UTC)

Coding... Foxy Loxy Pounce! 23:13, 24 December 2008 (UTC)

Still doing... Just putting in the final touches to the code, and setting up configuration via Wikipedia. I am also trying to get an account on the toolserver to possibly make this tool accessible to more users. Foxy Loxy Pounce! 03:21, 29 December 2008 (UTC)

- I'm having some issues with controlling the script via Wikipedia. While I try and fix that, feel free to contact me on my talk page and request a list of articles matching your defined criteria. Foxy Loxy Pounce! 09:01, 29 December 2008 (UTC)

- Jerry, you should probably go ahead and file the BRFA, the code should be completed by the time the BRFA is. Foxy Loxy Pounce! 23:16, 29 December 2008 (UTC)

- BRFA has been initiated. Please go there and update the programming language, and verify that my answers match the coding. Thanks! Jerry delusional ¤ kangaroo 14:39, 31 December 2008 (UTC)

cat sort articles in Category:ATC codes

Currently, all articles in this cat are sorted under "A" for "ATC". Can a bot edit each article and add a cat sort parameter that is the third word in the article name. Thus in ATC code D03 replace [[Category:ATC codes]] with [[Category:ATC codes|D03]]? Thanks —G716 <T·C> 06:31, 2 January 2009 (UTC)

- Already done. —Wknight94 (talk) 02:50, 3 January 2009 (UTC)
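For reference, the requested edit amounts to a small text substitution. The sketch below is not the code that was actually run; the function name is hypothetical, and it assumes the category link appears exactly as `[[Category:ATC codes]]` with no existing sort key.

```python
def add_atc_sort_key(title, wikitext):
    """Add the third word of the title as the sort key for Category:ATC codes.

    Links that already carry a sort key are not matched and stay untouched.
    """
    words = title.split()
    if len(words) < 3:
        # Title does not follow the "ATC code XXX" pattern; leave the text alone.
        return wikitext
    sort_key = words[2]
    return wikitext.replace(
        "[[Category:ATC codes]]",
        "[[Category:ATC codes|" + sort_key + "]]",
    )
```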

Bot to purge Main Page cache each day

This is a request for a bot to purge the cache of Main Page every day at 00:00 (UTC), when three of the five dynamic sections are supposed to switch to the next date's templates. This also would enable us to add the current date (based on UTC) to the top of the page (something currently under consideration). —David Levy 23:17, 14 December 2008 (UTC)

- That will do very little as purging is primarily client side and not server side. βcommand 23:18, 14 December 2008 (UTC)

- I don't follow. I can load a page for the first time (or the first time since clearing my cache) and see outdated transclusions until I purge Wikipedia's cache (http://en.wikipedia.org/w/index.php?title=[page name]&action=purge). —David Levy 00:33, 15 December 2008 (UTC)

- Even if you purge it, it does not mean that I won't get an old copy. All of the database slave servers need to be purged, which happens naturally once a page is edited. βcommand 00:37, 15 December 2008 (UTC)

- I also thought purging affects other people viewing the page afterwards. Wikipedia:Purge and mw:Manual:Purge support this as I read them, for example the latter saying "It is typically utilised to clear the cache and ensure that changes are immediately visible to everyone.". The posts by βcommand and above in #DYK Purge bot by Mr.Z-man are the first time I have seen the statement that purging only has a local effect. Is there documentation for this? PrimeHunter (talk) 00:47, 15 December 2008 (UTC)

- Like PrimeHunter, this is the first that I've heard of that. Assuming that this is accurate, is there any way (other than performing an edit) to ensure that a page's transclusions are updated for everyone loading it? —David Levy 00:57, 15 December 2008 (UTC)

- Anyone? —David Levy 03:13, 18 December 2008 (UTC)

I could be wrong, but I believe at least certain ParserFunctions have an auto-purge function built into them. Things like {{CURRENTDAY}} specifically. --MZMcBride (talk) 00:42, 15 December 2008 (UTC)

- I can vouch that {{#time}} doesn't, I have the time on my userpage and it stops updating after a while until I purge. I can code something up for this task (it won't take long) and it can be used if required. Foxy Loxy Pounce! 07:40, 23 December 2008 (UTC)

Done The code is finished. Just let me know if you guys decide to use it and I'll file a BRFA. Foxy Loxy Pounce! 08:11, 23 December 2008 (UTC)

- Thanks! It would be helpful to implement the script now, as this would update the main page's dynamic content at the turn of each day. —David Levy 12:36, 29 December 2008 (UTC)

Doing... I just need to get cron working on the server I use and then all will be fine. Foxy Loxy Pounce! 03:43, 3 January 2009 (UTC)
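A minimal sketch of the two pieces such a task needs is shown below: the purge URL quoted earlier in this thread, and the wait until the next 00:00 (UTC). This is not Foxy Loxy's script. The function names are hypothetical, and whether a plain request to the purge URL is enough to trigger the purge is an assumption.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

# Base URL taken from the purge link quoted in the discussion.
INDEX_URL = "http://en.wikipedia.org/w/index.php"


def purge_url(title):
    """Build the action=purge URL for a page title."""
    return INDEX_URL + "?" + urlencode({"title": title, "action": "purge"})


def seconds_until_midnight_utc(now):
    """Return the number of seconds from `now` (an aware UTC datetime) to the next 00:00 UTC."""
    next_midnight = (now + timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return (next_midnight - now).total_seconds()
```

With cron, the wait calculation becomes unnecessary: a job scheduled at minute 0 of hour 0 would request `purge_url("Main_Page")` once a day, assuming the server clock is set to UTC.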

DYKadminBot not running

User:Nixeagle runs DYKadminBot now, but he's only just taken over from User:Ameliorate!, who has retired from wiki, so he's not familiar with it and is currently on vacation. Any chance someone could get this bot running again? It was (mostly) working fine before apparently being moved to the toolserver a day or two ago. Thanks, Gatoclass (talk) 06:35, 2 January 2009 (UTC)

- Toolserver is currently broken, needs a new cable of some sort, all bots based on it are down. MBisanz talk 06:38, 2 January 2009 (UTC)

- Not anymore, the toolserver's been mostly up for at least a day now. Only the MySQL server is down. Xclamation point 08:42, 2 January 2009 (UTC)

- So how come the bot is not running again? Gatoclass (talk) 13:08, 2 January 2009 (UTC)

- There could be a variety of reasons for the bot not to be running right now: Nixeagle could have found a problem, could still be familiarizing him/herself with the code or, quite simply, could be taking a break. Relax, DYK can update itself for a little while, and the bot will be up and running soon. Foxy Loxy Pounce! 23:37, 3 January 2009 (UTC)

CommonsMoverBot

I was recently looking through Category:Wikipedia backlog and noticed that over a hundred thousand user-created public domain images should be moved to Commons. This task doesn't seem to require much human thought (I tried moving a couple; it was boring), so I thought a bot might be good to work through the backlog fairly quickly. I have absolutely no experience coding (other than with TI-BASIC), so I can't do the bot. ErikTheBikeMan (talk) 18:57, 3 January 2009 (UTC)

- The problem with this task, I believe, is that many people tag images with public domain tags when they are quite clearly not public domain images. Because of this, the category requires human review. Foxy Loxy Pounce! 23:39, 3 January 2009 (UTC)

- There was Wikipedia:Bots/Requests for approval/John Bot II 3. The bot was approved but it doesn't seem to be doing much. Garion96 (talk) 23:46, 3 January 2009 (UTC)

Nutcracker

There are a lot of articles entitled "List of birds of xxxx" and "Wildlife of xxxx" that have links to nutcracker that really should go to nutcracker (bird). Could someone fix these with a bot? Colonies Chris (talk) 19:03, 28 December 2008 (UTC)

- There don't appear to be any such links. Has this been done already? Andy Mabbett (User:Pigsonthewing); Andy's talk; Andy's edits 12:35, 29 December 2008 (UTC)

Done - looks like Canis Lupus took care of them --ThaddeusB (talk) 02:48, 30 December 2008 (UTC)

- Thanks. Colonies Chris (talk) 09:00, 4 January 2009 (UTC)

Cleanup of articles transcluding {{Infobox Court Case}}

Hi, {{Infobox Court Case}} has been updated as follows:

- It is no longer necessary to use wikitext markup when specifying the name of an image file for the "image" parameter. Thus, instead of typing "[[File:Image.svg|180px]]", editors should now type "Image.svg".

- The infobox now automatically displays an image according to the scheme at {{Infobox Court Case/images}}, provided the name of the court is correctly stated.

It would be great if a bot could do the following in all articles containing the infobox:

- remove "[[Image:" and "[[File:" and any trailing "]]" or "|???px]]" from image file names stated for the "image" parameter; and

- when one of the court names specified in {{Infobox Court Case/images}} has been given for the "court" parameter, remove the "image" parameter entirely so that the template can automatically decide what image to display.
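The two cleanup rules above can be sketched as follows. This is an illustration only, not the code filed at the BRFA. The function names are hypothetical, the infobox parameters are assumed to have already been parsed into a dictionary, and the set of court names would have to be read from {{Infobox Court Case/images}}.

```python
import re

# Matches a value such as "[[File:Image.svg|180px]]" or "[[Image:Seal.png]]"
# and captures only the file name.
IMAGE_VALUE = re.compile(
    r"^\s*\[\[(?:Image|File):([^|\]]+)(?:\|[^\]]*)?\]\]\s*$",
    re.IGNORECASE,
)


def clean_image_value(value):
    """Strip the link markup and any size suffix from an image parameter value."""
    match = IMAGE_VALUE.match(value)
    return match.group(1).strip() if match else value.strip()


def tidy_infobox_params(params, courts_with_default_image):
    """Apply both rules to a dict of already-parsed infobox parameters."""
    cleaned = dict(params)
    if "image" in cleaned:
        cleaned["image"] = clean_image_value(cleaned["image"])
    # If the template can pick the image from the court name, drop the parameter.
    if cleaned.get("court", "").strip() in courts_with_default_image:
        cleaned.pop("image", None)
    return cleaned
```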

Thanks. — Cheers, JackLee –talk– 19:10, 31 December 2008 (UTC), updated 08:53, 1 January 2009 (UTC)

Coding... This shouldn't be too hard! RichardΩ612 Ɣ ɸ 11:24, 1 January 2009 (UTC)

BRFA filed here. RichardΩ612 Ɣ ɸ 13:08, 1 January 2009 (UTC)

Done - only took around 15 minutes! As Anomie mentioned on the BRFA, the soap opera stuff probably requires more discussion, but I'll be happy to do a run if consensus is reached. RichardΩ612 Ɣ ɸ 19:58, 3 January 2009 (UTC)

Thanks very much! — Cheers, JackLee –talk– 17:34, 4 January 2009 (UTC)

Changing external links

Many articles about Christian hymns use the "Cyberhymnal" website as a resource. It's recently moved, however, from http://www.cyberhymnal.org to http://www.hymntime.com/tch. Could we have a bot change the links? I would do it, as I don't think that there are tons of links to the website, but I don't know how to find all the articles that link to the Cyberhymnal. Nyttend (talk) 17:33, 4 January 2009 (UTC)

- There are 411 links to cyberhymnal.org in total [2]. I'll have DeadLinkBOT work through these once it is approved (which should be soon, the request has been pending for a while.) A quick question: do links like "http://www.cyberhymnal.org/bio/l/a/latham_lb.htm" go to "http://www.hymntime.com/tch/bio/l/a/latham_lb.htm"? Are there any links that do not follow this basic pattern? --ThaddeusB (talk) 19:09, 4 January 2009 (UTC)

- For your first question: that's the right guy. I don't know about others, but it seems as if the pages have the same pattern, so I believe we could go with it. I don't know of any such links, but I only discovered Cyberhymnal's new location today — I knew that cyberhymnal.org had gone down some weeks ago, but until today I'd not known that it was back up somewhere else. Nyttend (talk) 22:28, 4 January 2009 (UTC)
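If every link does follow the pattern discussed above (same path, new host plus a `/tch` prefix), the rewrite reduces to one substitution. The sketch below makes that assumption explicit; it is not DeadLinkBOT's code, and the function name is hypothetical.

```python
import re

# Old host, with or without "www.", followed by an optional path.
# The path stops at whitespace or at characters that end a link in wikitext.
OLD_LINK = re.compile(r'http://(?:www\.)?cyberhymnal\.org(/[^\s\]|<>"]*)?')


def rewrite_cyberhymnal(wikitext):
    """Point cyberhymnal.org links at hymntime.com/tch, keeping the path unchanged."""
    return OLD_LINK.sub(
        lambda m: "http://www.hymntime.com/tch" + (m.group(1) or ""),
        wikitext,
    )
```

Links that do not follow the pattern would still be rewritten, so a sample of the results would need checking by hand.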

Cabal Online US Bot

Make a Cabal Online US bot! —Preceding unsigned comment added by 68.51.213.242 (talk) 04:02, 5 January 2009 (UTC)

- Could you be a little more specific? —Nn123645 (talk) 04:06, 5 January 2009 (UTC)

Living People

Sorry if this is duplicated, but would it be possible to automatically check for, and remove, instances of Category:Living people from articles on those who are, by the rules of the category, presumed dead? Obvious signs that the category is now out of date or was incorrectly added (it does happen) would include:

- Infobox death information

- Foo Bar (1923 - 2007)

- Being in a category such as 2007 Deaths

Obviously a few checks and balances would be needed (for instance, to catch user mistakes such as putting (1954 - 2009), where 2009 is the current year, to mean that the person is still alive), but even a one-time purge of the 300,000 articles in Living people would be useful. Cheers, Jarry1250 (talk) 14:33, 5 January 2009 (UTC)
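The three signs listed above, plus the current-year check, could be detected along these lines. This is a rough sketch under stated assumptions: the function name is hypothetical, the infobox field is assumed to be called `death_date`, and the patterns are deliberately simple, so anything flagged would still need human review.

```python
import re

# "(1923 - 2007)" style lifespan, with a hyphen or en dash.
LIFESPAN = re.compile(r"\((\d{4})\s*[-–]\s*(\d{4})\)")
# A category such as "2007 deaths".
DEATHS_CATEGORY = re.compile(r"\[\[Category:(\d{4}) deaths", re.IGNORECASE)
# A non-empty infobox death field; the parameter name is an assumption.
DEATH_FIELD = re.compile(r"\|\s*death_date\s*=[ \t]*[^\s|}]")


def death_signals(wikitext, current_year):
    """Return the list of signs suggesting the subject is no longer living."""
    signals = []
    match = LIFESPAN.search(wikitext)
    # Ignore an end year equal to the current year: it may mean "still alive".
    if match and int(match.group(2)) < current_year:
        signals.append("lifespan")
    if DEATHS_CATEGORY.search(wikitext):
        signals.append("deaths category")
    if DEATH_FIELD.search(wikitext):
        signals.append("infobox death field")
    return signals
```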

- Oh, I just had another idea. Where this had been completed, on the template WPBiography change living to "no" if living was yes. I suppose that's a much less likely scenario however. Jarry1250 (talk) 15:09, 5 January 2009 (UTC)

Bot to run WikiProject contests

Can someone write a bot that can automatically update WikiProject contest pages such as Wikipedia:WikiProject Military history/Contest? The military history page is currently updated manually. The bot could read the list of members from the Members page, compare it against articles using the Project template, and update the statistics. Such a bot would be really useful in managing the statistics. These contests will motivate people to contribute more. The bot could be used across projects, similar to how cleanup listings and article alerts are generated. Thanks, Ganeshk (talk) 07:22, 4 January 2009 (UTC)

- Why would this be a good idea? I can't speak for any of the others, but the military history contest is deliberately set up to require participants to explicitly enter articles, rather than creating statistics for all ~1000 members across ~80,000 articles regardless of whether they want to take part.

- On a more practical level, a reasonably simple bot would have no way of determining which articles someone is actually a major contributor to. Awarding points merely on the basis of having edited a particular article—even if the extent of one's contribution was merely adding a single comma—would make the contest meaningless.

- This really looks like a solution looking for a problem, rather than vice versa. Kirill 07:33, 4 January 2009 (UTC)

- Maybe the bot could look at who nominated the article for FA, GA or DYK and add points. This is similar to how FA count bots work. Not all members will want to spend time submitting their contributions to this page. Use of a bot will allow all members to participate and get rewarded. Regards, Ganeshk (talk) 07:40, 4 January 2009 (UTC)

- It also ignores the B-Class articles, where reviewer interaction is needed to assess the articles and update the project tags. Regards, Woody (talk) 11:19, 4 January 2009 (UTC)

- Technically speaking, a bot could do this. It could judge a contribution to be major/minor based on whether the 'minor edit' flag was checked, the number of characters/words added, and/or some other well-defined rules. However, actually automating any given page would also require that community's consensus. A lot of people are pretty suspicious of bots, so getting consensus might be hard to do. If/when there is consensus to automate a given page, I could work on some code to do the automation. --ThaddeusB (talk) 19:16, 4 January 2009 (UTC)
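One possible form of the major/minor rule described above is sketched here. The `Revision` record, the function name and the 500-byte threshold are all hypothetical; any real scoring rule would have to be agreed by the project concerned.

```python
from dataclasses import dataclass


@dataclass
class Revision:
    user: str
    minor: bool       # whether the 'minor edit' flag was checked
    bytes_added: int  # size change of the edit; negative for removals


def major_contributors(revisions, min_bytes=500):
    """Return the users whose non-minor additions total at least `min_bytes`."""
    totals = {}
    for rev in revisions:
        if rev.minor or rev.bytes_added <= 0:
            continue  # skip flagged-minor edits and removals
        totals[rev.user] = totals.get(rev.user, 0) + rev.bytes_added
    return {user for user, total in totals.items() if total >= min_bytes}
```

A rule like this would exclude the single-comma edit mentioned earlier in the thread, but it counts text added, not its quality.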

- Thanks for your offer. Like you mention, we will need to define some rules for each of the scoring categories. It will be worth the effort if there is interest in this feature from a few projects and there is consensus to go forward. Let us wait and see. At the India project, we are currently discussing creating some kind of an award system. A bot's help will make it so much easier to manage. Thanks, Ganeshk (talk) 03:17, 5 January 2009 (UTC)

- WikiCup does something similar. It scores participants based on their mainspace edits. This request is looking to do it at a WikiProject level on a monthly basis. Regards, Ganeshk (talk) 03:29, 5 January 2009 (UTC)