Wikipedia:Wikipedia Signpost/Single/2018-02-05

Do editors have the right to be forgotten?

While the world was watching fireworks displays in celebration of the 2018 New Year, the English Wikipedia's editing community was experiencing a different kind of fireworks: back-to-back topic bans and blocks, including a few that were considered controversial and involved tenured editors who have since retired. It gave new meaning to "Should Old Acquaintance be forgot, and never thought upon".

Discussions for two New Year’s resolutions resulted, focused on the blocking policy and block log redaction:

- Phase 1: User:Atsme/Blocking policy proposal, comments welcome;

- Phase 2: User:Atsme/Block log proposals, still in draft form.

The issues revolve around the way editors treat each other and the manner in which administrators act as "first responders", particularly in situations when a tenured editor becomes the recipient of a controversial block or topic ban.

Edit warring, discretionary sanctions, ambiguity in policies and guidelines, a lack of consistency in administrator actions, concerns over the unfettered use of admin tools, bad judgment calls, biases, human error, anger and frustration are, while not the norm, major pitfalls in editor retention. Blocks and topic bans are intended as remedial actions to stop disruption but at times tend to appear punitive and magisterial, which exacerbates the situation and raises doubt as to whether the end truly does justify the means, particularly when such actions arise from misunderstandings and misinterpretations.

It's natural for editors to defend against a block or ban, and to feel angry when they believe a situation was punitive or grossly mishandled. It is equally natural for an admin to hold the opposing view, defending their actions by insisting (and believing) that they were neither punitive nor mishandled. An admin's primary concern is to stop disruption and prevent harm to the project. When the dust settles, what usually remains is the user's block log, but what does that log actually tell us?

Controversial topics germinate disruption, and when POV warriors and/or advocacies are involved, content disputes are likely to end in topic bans and/or blocks. Wikipedia doesn’t have content administrators; rather, we have what some editors refer to, with levity, as behavior police. Editors also have access to a number of specialized noticeboards for discussion, but some are considered nothing more than extensions of the article talk page, in that the same editors are involved in the discussions. Add discretionary sanctions to the mix, including stacked sanctions that add confusion and make it difficult to interpret their intent or application, and what we end up with are sanctions that act more like a repellent than a preventative. Well, perhaps one could consider it a preventative if it repels, but that should not be the ultimate goal.

The thought of being "blocked" or "topic banned" is unsettling whereas the action itself can be quite demoralizing, and at the very least, a disincentive. The term dramah board, in and of itself, speaks volumes as an area to avoid. Perhaps we should consider replacing the block-ban terminology in the log summaries with less harsh descriptions like "content dispute, 24 hr time-out", or "30-day wikibreak – conduct time-out". The harmful effects of blocks and topic bans are also evident in editor retention research, as are the inconsistencies in admin actions across the board. While the blocking policy provides guidance, admins are still dealing with individual judgment calls and unfettered use of the mop, both of which conflict with the stability of consistency.

Questionable blocks and errors are often attributable to time constraints, work overload, inexperience, miscommunication, and misinterpretations. Other blocks of concern, although extremely rare, may be the result of POV railroading, ill-will, biases or COI, situations which are usually remedied with expediency, and may result in desysopping. Unfortunately, bad blocks remain permanently on the logs.

Another unfortunate consequence of block logs is that reviewers adopt preconceived notions and form bad first impressions, which may lead to users being wrongfully "branded" or "targeted", for lack of a better term, and possibly even rejected by the community. Block logs are readily accessible to the public, and include only the resulting block summary, not the circumstances, which might persuade the reader to draw a much different conclusion.

Few, if any, actually care or are willing to invest the time to research the circumstances that led to a block; it's a difficult and time-consuming task at best. Accepting the log at face value is much easier; therefore, in reality the block log is a rap sheet that is used to judge an editor’s suitability. Unfortunately, the right to be forgotten eludes us. Hopefully that will change.

Wars, sieges, disasters and everything black possible

Featured articles

26 featured articles were promoted.

- The Aberfan disaster (nominated by SchroCat) was the catastrophic collapse of a National Coal Board (NCB) colliery spoil tip in the Welsh village of Aberfan, near Merthyr Tydfil, on 21 October 1966. The tip slid down the mountain above the village at 9:15 am, killing 116 children and 28 adults as it engulfed the local junior school and other buildings in the town. The collapse was caused by the build-up of water in the accumulated rock and shale tip, which suddenly slid downhill in the form of slurry. There were seven spoil tips on the slopes above Aberfan; tip seven—the one that slipped onto the village—was begun in 1958 and, at the time of the disaster, was 111 feet (34 m) high. In contravention of the NCB's official procedures, the tip was partly based on ground from which water springs emerged. After three weeks of heavy rain the tip was saturated and approximately 140,000 cubic yards (110,000 m3) of spoil slipped down the side of the hill and onto the Pantglas area of the village. The main building hit was Pantglas Junior School, where lessons had just begun; 5 teachers and 109 children were killed in the school. An official inquiry placed the blame squarely on the NCB. The organisation's chairman, Lord Robens, was criticised for making misleading statements and for not providing clarity as to the NCB's knowledge of the presence of water springs on the hillside. The Aberfan Disaster Memorial Fund (ADMF) was set up on the day of the disaster. It received nearly £1.75 million. The remaining tips were removed only after a lengthy fight by Aberfan residents. Many of the village's residents suffered medical problems, and half the surviving children have experienced post-traumatic stress disorder at some time in their lives.

- The black-shouldered kite (nominated by Cas liber) is a small raptor found in open habitat throughout Australia. Measuring around 35 cm (14 in) in length with a wingspan of 80–100 cm (31–39 in), the adult black-shouldered kite has predominantly grey-white plumage and prominent black markings above its red eyes. The species forms monogamous pairs, breeding between August and January. The black-shouldered kite hunts in open grasslands, searching for its prey by hovering and systematically scanning the ground. It mainly eats small rodents, particularly the introduced house mouse, and has benefitted from the modification of the Australian landscape by agriculture. It is rated as least concern on the International Union for Conservation of Nature's Red List of endangered species.

- The Civil Service Rifles War Memorial (nominated by HJ Mitchell) is a First World War memorial located on the riverside terrace at Somerset House in central London, England. Designed by Sir Edwin Lutyens and unveiled in 1924, the memorial commemorates the 1,240 members of the Prince of Wales' Own Civil Service Rifles regiment who were killed in the First World War. They were Territorial Force reservists, drawn largely from the British Civil Service, which at that time had many staff based at Somerset House.

- The Gloucestershire Regiment (nominated by Factotem) was a line infantry regiment of the British Army from 1881 until 1994. It traced its origins to Colonel Gibson's Regiment of Foot raised in 1694, which later became the 28th (North Gloucestershire) Regiment of Foot. The regiment was formed by the merger of the 28th Regiment with the 61st (South Gloucestershire) Regiment of Foot. It inherited the unique privilege in the British Army of wearing a badge on the back of its headdress as well as the front, an honour won by the 28th Regiment when it fought in two ranks back to back at the Battle of Alexandria in 1801. At its formation the regiment comprised two regular, two militia and two volunteer battalions, and saw its first action during the Second Boer War.

- Russian battleship Petropavlovsk (1894) (nominated by Sturmvogel 66) was the lead ship of her class of three pre-dreadnought battleships built for the Imperial Russian Navy during the last decade of the 19th century. The ship was sent to the Far East almost immediately after entering service in 1899, where she participated in the suppression of the Boxer Rebellion the next year and was the flagship of the First Pacific Squadron. At the beginning of the Russo-Japanese War of 1904–1905, Petropavlovsk took part in the Battle of Port Arthur, where she was lightly damaged by Japanese shells and failed to score any hits in return. On 13 April 1904, the ship sank after striking one or more mines near Port Arthur, in northeast China. Casualties numbered 27 officers and 652 enlisted men, including Vice Admiral Stepan Makarov, the commander of the squadron, and the war artist Vasily Vereshchagin. The arrival of the competent and aggressive Makarov after the Battle of Port Arthur had boosted Russian morale, which plummeted after his death.

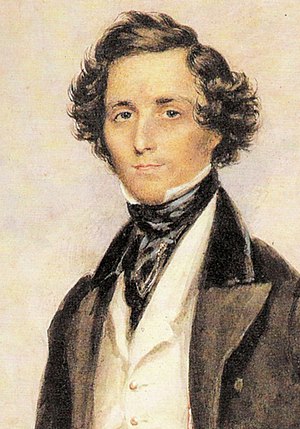

- Felix Mendelssohn (nominated by Smerus) was a German composer, pianist, organist and conductor of the early romantic period. Mendelssohn wrote symphonies, concertos, oratorios, piano music and chamber music. His best-known works include his Overture and incidental music for A Midsummer Night's Dream, the Italian Symphony, the Scottish Symphony, the overture The Hebrides, his mature Violin Concerto, and his String Octet. His Songs Without Words are his most famous solo piano compositions. After a long period of relative denigration due to changing musical tastes and antisemitism in the late 19th and early 20th centuries, his creative originality has been re-evaluated. He is now among the most popular composers of the romantic era.

- Resident Evil: Apocalypse (nominated by Freikorp) is a 2004 science fiction action horror film directed by Alexander Witt and written by Paul W. S. Anderson. It is the second installment in the Resident Evil film series. Resident Evil: Apocalypse is set directly after the events of the first film, where Alice escaped from an underground facility overrun by zombies. She now bands together with other survivors to escape the zombie outbreak which has spread to the fictional Raccoon City. While it received mostly negative reviews from critics who complained about the plot, Resident Evil: Apocalypse did receive praise for its action sequences. It is the lowest rated of the six films in the Resident Evil series on Rotten Tomatoes, with an approval rating of 21%. Earning $129 million worldwide on a $45 million budget, it surpassed the box office gross of the original film. A sequel, Resident Evil: Extinction, was released in 2007.

- The Siege of Constantinople (674–678) (nominated by Cplakidas) was a major conflict of the Arab–Byzantine wars, and the first culmination of the Umayyad Caliphate's expansionist strategy towards the Byzantine Empire, led by Caliph Mu'awiya I. Mu'awiya, who had emerged in 661 as the ruler of the Muslim Arab empire following a civil war, renewed aggressive warfare against Byzantium after a lapse of some years and hoped to deliver a lethal blow by capturing the Byzantine capital, Constantinople.

- Washington State Route 520 (nominated by SounderBruce) is a state highway and freeway in the Seattle metropolitan area, part of the U.S. state of Washington. It runs 13 miles (21 km) from Seattle in the west to Redmond in the east. The freeway connects Seattle to the Eastside region of King County via the Evergreen Point Floating Bridge on Lake Washington. SR 520 intersects several state highways, including Interstate 5 (I-5) in Seattle, I-405 in Bellevue, and SR 202 in Redmond.

- Tommy Phillips (nominated by Kaiser matias) was a Canadian professional ice hockey left winger. Like other players of his era, Phillips played for several teams and leagues. Most notable for his time with the Kenora Thistles, Phillips also played with the Montreal Hockey Club, the Ottawa Hockey Club, the Toronto Marlboros and the Vancouver Millionaires. Over the course of his career Phillips participated in six challenges for the Stanley Cup, the championship trophy of hockey, winning twice: with the Montreal Hockey Club in 1903 and with the Kenora Thistles, which he captained, in January 1907. Following his playing career, Phillips worked in the lumber industry until his death in 1923. One of the best defensive forwards of his era, Phillips was also known for his all-around skill, particularly his strong shot and endurance, and was considered, alongside Frank McGee, one of the two best players in all of hockey. His younger brother, Russell, also played for the Thistles and was a member of the team when they won the Stanley Cup. When the Hockey Hall of Fame was founded in 1945, Phillips was one of the original nine inductees.

- The Shawshank Redemption (nominated by Darkwarriorblake) is a 1994 American drama film written and directed by Frank Darabont, based on the 1982 Stephen King novella Rita Hayworth and Shawshank Redemption. It tells the story of banker Andy Dufresne (Tim Robbins), who is sentenced to life in Shawshank State Penitentiary for the murder of his wife and her lover, despite his claims of innocence. Over the following two decades, he befriends a fellow prisoner, contraband smuggler Ellis "Red" Redding (Morgan Freeman), and becomes instrumental in a money laundering operation led by the prison warden Samuel Norton (Bob Gunton). William Sadler, Clancy Brown, Gil Bellows, and James Whitmore appear in supporting roles. While The Shawshank Redemption received positive reviews on its release, particularly for its story and the performances of Robbins and Freeman, it was a box office disappointment, earning only $16 million during its initial theatrical run. Even so, it went on to receive multiple award nominations, including seven Academy Award nominations, and a theatrical re-release that, combined with international takings, increased the film's box office gross to $58.3 million. Over 320,000 VHS copies were shipped throughout the United States, and based on its award nominations and word of mouth, it became one of the top rented films of 1995. The film is now considered to be one of the greatest films of the 1990s. As of 2017, the film is still broadcast regularly, and is popular in several countries, with audience members and celebrities citing it as a source of inspiration, and naming the film as a favorite in various surveys. In 2015, the United States Library of Congress selected the film for preservation in the National Film Registry, finding it "culturally, historically, or aesthetically significant".

- The Breeders Tour 2014 (nominated by Moisejp) comprised thirteen concerts in the central and western United States in September 2014. Between September 2 and September 17, the Breeders performed in eleven cities, among which were St. Louis, Denver, Seattle, Portland, San Francisco, and Las Vegas. Support bands the Funs and the Neptunas opened for them at five and six of these shows, respectively. The group then played at the Hollywood Bowl concert, and finished the tour on September 20 at the Goose Island 312 Urban Block Party event in Chicago. As well as their new songs, they performed numerous selections from Last Splash and Pod (1990). The tour received good reviews from critics; appraisal included comments that the performances were rousing, and that the band was as good as—or better than—in its heyday.

- The Winter War (nominated by Manelolo) was a military conflict between the Soviet Union (USSR) and Finland lasting three and a half months from 1939 to 1940. The war began with the Soviet invasion of Finland on 30 November 1939, three months after the outbreak of World War II, and ended with the Moscow Peace Treaty on 13 March 1940. The League of Nations deemed the attack illegal and expelled the Soviet Union from the League.

- Withypool Stone Circle (nominated by Midnightblueowl) is a stone circle near the village of Withypool in the south-western English county of Somerset, within the Exmoor moorland. The ring is part of a tradition of stone circle construction that spread throughout much of Britain, Ireland, and Brittany during the Late Neolithic and Early Bronze Age, over a period between 3300 and 900 BCE. Archaeologists think that they were likely religious sites; the stones were perhaps regarded as having supernatural associations.

- The Hours of Mary of Burgundy (nominated by Ceoil) is a luxury book of hours (a form of devotional book for lay-people) completed in Flanders around 1477. It was probably commissioned for Mary of Burgundy, then the wealthiest woman in Europe. Its production began c. 1470, and includes miniatures by several artists, of which the foremost was the unidentified but influential illuminator known as the Master of Mary of Burgundy, who provides the book with its most meticulously detailed illustrations and borders. Other miniatures, considered of an older tradition, were contributed by Simon Marmion, Willem Vrelant and Lieven van Lathem. The majority of the calligraphy is attributed to Nicolas Spierinc, with whom the Master collaborated on other works and who may also have provided a number of illustrations.

- Bats (nominated by LittleJerry & Chiswick Chap & Dunkleosteus77) are mammals of the order Chiroptera; with their forelimbs adapted as wings, they are the only mammals naturally capable of true and sustained flight. Bats are more manoeuvrable than birds, flying with their very long spread-out digits covered with a thin membrane or patagium. The second largest order of mammals, bats comprise about 20% of all classified mammal species worldwide, with about 1,240 species. Many bats are insectivores, and most of the rest are frugivores (fruit-eaters). A few species feed on animals other than insects; for example, the vampire bats feed on blood. Most bats are nocturnal, and many roost in caves or other refuges. Bats are present throughout the world, with the exception of extremely cold regions. They are important in their ecosystems for pollinating flowers and dispersing seeds; many tropical plants depend entirely on bats for these services.

- Thorium (nominated by Double sharp & R8R Gtrs) is a weakly radioactive metallic chemical element with symbol Th and atomic number 90. Thorium metal is silvery and tarnishes black when it is exposed to air, forming the dioxide; it is moderately hard, malleable, and has a high melting point. Thorium is an electropositive actinide whose chemistry is dominated by the +4 oxidation state; it is quite reactive and can ignite in air when finely divided.

- The Connecticut Tercentenary half dollar (nominated by Wehwalt) is a commemorative fifty-cent piece struck by the United States Bureau of the Mint in 1935. The Connecticut Tercentenary Commission wanted a half dollar issued, with proceeds from its sale to further its projects. A bill passed through Congress without dissent and became law on June 21, 1935, when President Franklin D. Roosevelt signed it, providing for 25,000 half dollars. The Philadelphia Mint initially coined 15,000 pieces, but when they quickly sold, the Connecticut commission ordered the 10,000 remaining in the authorization. These were soon exhausted as well. Henry Kreis's design has generally been praised by numismatic writers. The coins sold for $1, but have gained in value over the years and sell in the hundreds of dollars, depending on condition.

- SMS Zähringen (nominated by Parsecboy) was the third Wittelsbach-class pre-dreadnought battleship of the German Imperial Navy (Kaiserliche Marine). Laid down in 1899 at the Germaniawerft shipyard in Kiel, she was launched on 12 June 1901 and commissioned on 25 October 1902. Her sisters were Wittelsbach, Wettin, Schwaben and Mecklenburg; they were the first capital ships built under the Navy Law of 1898, brought about by Admiral Alfred von Tirpitz. The ship, named for the former royal House of Zähringen, was armed with a main battery of four 24 cm (9.4 in) guns and had a top speed of 18 knots (33 km/h; 21 mph). Zähringen saw active duty in the I Squadron of the German fleet for the majority of her career. Zähringen was relegated to a target ship for torpedo training in 1917. In the mid-1920s, Zähringen was heavily reconstructed and equipped for use as a radio-controlled target ship. She served in this capacity until 1944, when she was sunk in Gotenhafen by British bombers. The retreating Germans raised the ship and moved her to the harbor mouth, where they scuttled her to block the port. Zähringen was broken up in situ in 1949–50.

- Neferefre (nominated by Iry-Hor) was an Ancient Egyptian pharaoh, likely the fourth but also possibly the fifth ruler of the Fifth Dynasty during the Old Kingdom period. He was very probably the eldest son of Pharaoh Neferirkare Kakai and Queen Khentkaus II, known as Prince Ranefer before he ascended the throne.

- Planet of the Apes (nominated by Cúchullain) is an American science fiction media franchise consisting of films, books, television series, comics, and other media about a world in which humans and intelligent apes clash for control. Based on the novel Planet of the Apes, the 1968 film adaptation, Planet of the Apes, was a critical and commercial hit, initiating a series of sequels, tie-ins, and derivative works. Arthur P. Jacobs produced the first five Apes films through APJAC Productions for distributor 20th Century Fox; since his death in 1973, Fox has controlled the franchise. Four sequels followed the original film from 1970 to 1973. They did not approach the critical acclaim of the original, but were commercially successful, spawning two television series in 1974 and 1975. Tim Burton's remake, Planet of the Apes, was released in 2001. A reboot film series commenced in 2011 with Rise of the Planet of the Apes. The films have grossed a total of over $2 billion worldwide, against a combined budget of $567.5 million. Along with further narratives in various media, franchise tie-ins include video games, toys, and planned theme park rides. Planet of the Apes has received particular attention among film critics for its treatment of racial issues. Cinema and cultural analysts have also explored its Cold War and animal rights themes. The series has influenced subsequent films, media, and art, as well as popular culture and political discourse.

- ZETA (fusion reactor) (nominated by Maury Markowitz) was a major experiment in the early history of fusion power research. Based on the pinch plasma confinement technique, and built at the Atomic Energy Research Establishment in England, ZETA was larger and more powerful than any fusion machine in the world at that time. Its goal was to produce large numbers of fusion reactions, although it was not large enough to produce net energy. ZETA went into operation in August 1957 and by the end of the month it was giving off bursts of about a million neutrons per pulse. Measurements suggested the fuel was reaching between 1 and 5 million Kelvin, a temperature that would produce nuclear fusion reactions, explaining the quantities of neutrons being seen. Early results were leaked to the press in September 1957, and the following January an extensive review was released. Front-page articles in newspapers around the world announced it as a breakthrough towards unlimited energy, a scientific advance for Britain greater than the recently launched Sputnik had been for the Soviet Union. In spite of ZETA's failure to achieve fusion, the device went on to have a long experimental lifetime and produced numerous important advances in the field. In one line of development, the use of lasers to more accurately measure the temperature was developed on ZETA, and was later used to confirm the results of the Soviet tokamak approach. In another, while examining ZETA test runs it was noticed that the plasma self-stabilised after the power was turned off. This has led to the modern reversed field pinch concept. More generally, studies of the instabilities in ZETA have led to several important theoretical advances that form the basis of modern plasma theory.

- Family Trade (nominated by Bcschneider53) is an American reality television series broadcast by Game Show Network (GSN). The show premiered on March 12, 2013, and continued to air new episodes until April 16, 2013. Filmed in Middlebury, Vermont, the series chronicles the daily activities of G. Stone Motors, a GMC and Ford car dealership that employs the barter system in selling its automobiles. The business is operated by its founder, Gardner Stone, his son and daughter, Todd and Darcy, and General Manager Travis Romano. The series features the shop's daily interaction with its customers, who bring in a variety of items that can be resold in order to receive a down payment on the vehicle they are leasing or purchasing. Commentary and narration are also often provided by the Stones during the episodes. Family Trade was a part of GSN's intent to broaden their programming landscape since the network had historically aired traditional game shows in most of its programming. The series was given unfavorable reviews by critics, and its television ratings fell over time; Family Trade lost almost half of its audience between the series premiere and finale.

- The Mosaics of Delos (nominated by PericlesofAthens) are a significant body of ancient Greek mosaic art. Most of the surviving mosaics from Delos, Greece, an island in the Cyclades, date to the last half of the 2nd century BC and early 1st century BC, during the Hellenistic period and beginning of the Roman period of Greece. Hellenistic mosaics were no longer produced after roughly 69 BC, due to warfare with the Kingdom of Pontus and the subsequent abrupt decline of the island's population and position as a major trading center. Among Hellenistic Greek archaeological sites, Delos contains one of the highest concentrations of surviving mosaic artworks. Approximately half of all surviving tessellated Greek mosaics from the Hellenistic period come from Delos.

- Anbe Sivam (nominated by Ssven2) is a 2003 Indian Tamil-language comedy-drama film directed and co-produced by Sundar C. The film tells the story of an unexpected journey from Bhubaneswar to Chennai undertaken by two men of contrasting personalities, Nallasivam and Anbarasu. Produced on a budget of ₹120 million, Anbe Sivam takes on several themes, including communism, atheism, and altruism, and depicts the humanist views of its writer and lead actor, Kamal Haasan. The film was released on 15 January 2003 to positive reviews from critics, but underperformed at the box office. Despite its failure, it gained recognition over the years through re-runs on television channels and is now regarded as a classic and a cult film in Tamil cinema.

- Harry R. Truman (nominated by ceranthor) was a resident of the U.S. state of Washington who lived on Mount St. Helens. The owner and caretaker of Mount St. Helens Lodge at Spirit Lake, at the foot of the mountain, he came to brief fame as a folk hero in the months preceding the volcano's 1980 eruption after he stubbornly refused to leave his home despite evacuation orders. Truman was presumed to have been killed by a pyroclastic flow that overtook his lodge and buried the site under 150 feet (46 m) of volcanic debris.

Featured lists

Nine featured lists were promoted.

- Laureus Spirit of Sport Award (nominated by The Rambling Man)

- Laureus World Sports Award for Sportsman of the Year (nominated by The Rambling Man)

- Laureus World Sports Award for Breakthrough of the Year (nominated by The Rambling Man)

- List of songs recorded by Beastie Boys (nominated by BeatlesLedTV)

- List of Hot Country Singles & Tracks number-one singles of 2001 (nominated by ChrisTheDude)

- List of Hot Country Singles & Tracks number ones of 2002 (nominated by ChrisTheDude)

- List of international goals scored by Lionel Messi (nominated by The Rambling Man & Saksapoiss)

- List of Presidents of the New York Public Library (nominated by Eddie891)

- List of songs recorded by Daft Punk (nominated by BeatlesLedTV)

Featured pictures

Five featured pictures were promoted.

- Female coastal topi (Damaliscus lunatus topi) in Queen Elizabeth National Park, Uganda (created and nominated by Charlesjsharp)

- Lietava Castle from Lietavská Svinná. The Lietava Castle is an extensive castle ruin in the Súľov Mountains of northern Slovakia, between the villages of Lietava and Lietavská Svinná-Babkov in the Žilina District. (created by Vladimír Ruček and nominated by Tomer T)

- The main building of the Academy of Athens, one of Theophil Hansen's "Trilogy" in central Athens. The Academy of Athens is Greece's national academy, and the highest research establishment in the country. (created by Der Wolf im Wald and nominated by Tomer T)

- Elena Runggaldier (b. 1990) is an Italian ski jumper and Nordic combined skier, who represents G.S. Fiamme Gialle. She received silver in the FIS Nordic World Ski Championships 2011. (created and nominated by Ailura)

Automated Q&A from Wikipedia articles; Who succeeds in talk page discussions?

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

"Reading Wikipedia to Answer Open-Domain Questions"

- Reviewed by Thomas Niebler

This paper by Chen et al.[1] proposes to use the Wikipedia article corpus as a source of world knowledge in order to answer open-domain questions. They point out that Wikipedia articles contain a lot more information than current knowledge bases (KBs), such as DBpedia or Freebase. While knowledge in KBs is encoded in a more machine-friendly way, the vast majority of Wikipedia's knowledge is not covered in KBs, but contained in unstructured text, and is thus difficult to access algorithmically. The proposed approach, called "DrQA", aims to overcome that limitation by leveraging the article content. It first retrieves Wikipedia articles relevant to a question, and then uses a recurrent neural network (RNN) to detect relevant parts in the articles' paragraphs that could be used as answers. This RNN is based on a set of pretrained word embeddings as well as a set of other features.
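To make the first of the two stages concrete, here is a minimal sketch of the retrieval step only. The review does not say how articles are scored against a question, so the TF-IDF weighting below is an assumption chosen for illustration, and the function names `tokenize` and `rank_articles` are hypothetical. The second stage (the RNN reader) is not shown.

```python
import math
from collections import Counter

PUNCT = ".,;:!?()\"'"

def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    tokens = (t.strip(PUNCT) for t in text.lower().split())
    return [t for t in tokens if t]

def rank_articles(question, articles):
    """Rank article titles by a simple TF-IDF overlap with the question.

    `articles` maps a title to its text. The scoring is an illustrative
    assumption, not the scoring described in the paper.
    """
    doc_counts = {title: Counter(tokenize(text)) for title, text in articles.items()}
    n_docs = len(articles)

    # Document frequency: in how many articles does each term occur?
    df = Counter()
    for counts in doc_counts.values():
        df.update(counts.keys())

    question_terms = set(tokenize(question))
    scores = {}
    for title, counts in doc_counts.items():
        score = 0.0
        for term in question_terms:
            if term in counts:
                # Smoothed inverse document frequency.
                idf = math.log((1 + n_docs) / (1 + df[term])) + 1.0
                score += counts[term] * idf
        scores[title] = score

    # Highest-scoring article first.
    return sorted(scores, key=scores.get, reverse=True)
```

In a full system, the top-ranked articles would then be passed, paragraph by paragraph, to the reader model that selects an answer span.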

Their results indicate that DrQA seems better suited to answer open domain questions than other competitors, based on a set of four question benchmarks. While the evaluation score improvement seems rather small (77.3 vs 78.8 F1 score), the whole task of machine reading at scale using Wikipedia gives directions for interesting future research and applications. For example, depending on the speed of the framework (which unfortunately was not discussed), a new Wikipedia service for answering such open domain questions could be established. Furthermore, this process of answering common knowledge questions could help in improving chatbots.
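For readers unfamiliar with the metric, the sketch below shows one common way an F1 score is computed for a predicted answer against a reference answer, namely as the harmonic mean of token-level precision and recall. The review does not spell out which F1 variant the benchmarks use, so this token-overlap definition is an assumption, and `token_f1` is a hypothetical helper name.

```python
from collections import Counter

def token_f1(prediction, reference):
    """Token-overlap F1 between a predicted and a reference answer string."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Two empty answers match; an empty answer never matches a non-empty one.
        return float(pred == ref)

    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0

    precision = overlap / len(pred)  # share of predicted tokens that are correct
    recall = overlap / len(ref)      # share of reference tokens that were found
    return 2 * precision * recall / (precision + recall)
```

A benchmark score is then typically the average of this value over all questions, reported as a percentage.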

Are you a policy wonk? Who succeeds in talk page discussions

- Reviewed by Barbara (WVS)

This Carnegie Mellon University study[2] quantified the success of editors who engage in talk page discussions and the roles they play in these discussions. The roles assigned to editors were:

- Moderator - decides when a decision is final to support their views

- Architect - designs the article and its sections to support their views

- Policy Wonk - quotes acronyms that represent policy/rules/guidelines to support their view

- Wordsmith - determines the best article titles and section titles based upon their point of view

- Expert - interjects facts into the discussion to support their point of view

Unlike earlier studies exploring editor interactions, editors in this study could be assigned several roles at once on an article talk page. Each editor's success was determined by analyzing the subsequent edits to the article under discussion that the editor had promoted, and the longevity of those edits. Editors who are more detail-oriented tend to have more success than those more interested in organization. When multiple editors assume the role of organization, the success of individual editors lessens. The study assessed 7,211 articles, 21,108 discussion threads, 21,108 editor discussion pairs, and the average number of editors per discussion. The number of total edits by an editor is not associated with success.

The researchers also published a dataset consisting of "53,175 instances in which an editor interacts with one or more other editors in a talk page discussion and achieves a measured influence on the associated article page".

"Determining Quality of Articles in Polish Wikipedia Based on Linguistic Features"

- Summarized by Eddie891

This article[3] focuses on the 1.2 million unassessed articles in the Polish Wikipedia, and considers "over 100 linguistic features to determine the quality of Wikipedia articles in Polish language." From the conclusion: "Use of linguistic features is valuable for automatic determination of quality of Wikipedia article in Polish language. Better results in terms of precision can be achieved when the whole text of [an] article is taken into the account. Then our model shows over 93% classification precision using such features as relative number of unique nouns and verbs (unique, 3rd person, impersonal). However, if we take into account only [the] leading section of an article, relative quantity of common words, locatives, vocatives and third person words are the most significant for determination of quality. Using the obtained quality models we [assess] 500 000 randomly chosen unevaluated articles from Polish Wikipedia. According to result, about 4–5% of assessed articles can be considered by Wikipedia community as high quality articles."

Conferences and events

See the research events page on Meta-wiki for upcoming conferences and events, including submission deadlines.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions are always welcome for reviewing or summarizing newly published research.

- Compiled by Tilman Bayer

- "Enrichment of Information in Multilingual Wikipedia Based on Quality Analysis"[4] From the abstract: "Wikipedia articles may include infobox, which used to collect and present a subset of important information about its subject. [sic] This study presents method for quality assessment of Wikipedia articles and information contained in their infoboxes. Choosing the best language versions of a particular article will allow for enrichment of information in less developed version editions of particular articles." See also coverage of related papers involving the same author above, in our last issue: "Assessing article quality and popularity across 44 Wikipedia language versions", and below:

- "Analysis of References Across Wikipedia Languages"[5] From the abstract: "This paper presents an analysis of using common references in over 10 million articles in several Wikipedia language editions: English, German, French, Russian, Polish, Ukrainian, Belarussian. Also, the study shows the use of similar sources and their number in language sensitive topics."

- "Wikipedia as a space for discursive constructions of globalization"[6] From the abstract: "This article [...] compares, through computer-assisted text analysis and qualitative reading, entries for the word ‘globalization’ in six major Western languages: English, German, French, Spanish, Portuguese, and Italian. Given Wikipedia’s model of open editing and open contribution, it would be logical to expect that definitions of globalization across different languages reflect variations related to diverse cultural contexts and collective writing. Results show, however, more similarities than differences across languages, demonstrated by an overall pattern of economic framing of the term, and an overreliance on English language sources."

- "FRISK: A Multilingual Approach to Find twitteR InterestS via wiKipedia"[7] From the abstract: "In this paper we describe Frisk a multilingual unsupervised approach for the categorization of the interests of Twitter users. Frisk models the tweets of a user and the interests (e.g., politics, sports) as bags of articles and categories of Wikipedia respectively [...]"

- "Introduction to anatomy on Wikipedia"[8] From the abstract: "No work parallels the amount of attention, scope or interdisciplinary layout of Wikipedia, and it offers a unique opportunity to improve the anatomical literacy of the masses. Anatomy on Wikipedia is introduced from an editor's perspective. Article contributors, content, layout and accuracy are discussed, with a view to demystifying editing for anatomy professionals."

- "The institutionalization of free culture movement based on the study of Wikimedia projects in the East-Central Europe"[9] From the English abstract: "The author of the publication presents the processes of institutionalization occurring in the projects of the Wikimedia Foundation, co-organized in the framework of the free culture movement. These processes on the one hand lead to the relative closing up of the members of groups belonging to regional cultures, especially those who speak the same language, on the other hand to encouraging interregional cooperation. Common enterprises undertaken by partners from East-Central Europe are not only contribution to the free culture movement, but may also point to emphasizing the common identity of prosumers of post-socialist societies."

- "The Russian-language Wikipedia as a Measure of Society Political Mythologization"[10] From the abstract [sic]: "The analyzed in this article myth about inheritance rights of Russia to the Kyivan Rus’1 arose in the 15th century. Recently this myth is being actively spread by the Russian propaganda in the mass media – in particular this is performed through Wikipedia being one of the most attended Internet resources. [...] the purpose of this myth consists in activation of separatist sentiments of Russian-speaking Ukrainian citizens. Purpose – to explore vulnerability of Wikipedia policy of openness on the basis of a specific example as well as to explore its efficiency for formation of political myths; to analyze the technology used for creation of Wikipedia articles in the process of formation of myths.Methods. Comparison method is applied – texts of Wikipedia articles on various time stages of their creation were compared; results of analyzing Wikipedia pages were correlated to political events of Russian-Ukrainian relations.[...] Results. Mythology not obliged to prove anything and Wikipedia aimed at forming the concept and creating only an impression of scientificness and not knowledge as such are perfectly agreed. That is why Wikipedia is one of the most efficient spreaders of myths (first of all political myths) supporting a definite ideology."

- "Analysing Timelines of National Histories across Wikipedia Editions: A Comparative Computational Approach"[11] From the abstract: "... we aim to automatically identify such differences by computing timelines and detecting temporal focal points of written history across languages on Wikipedia. In particular, we study articles related to the history of all UN member states and compare them in 30 language editions. We develop a computational approach that allows to identify focal points quantitatively, and find that Wikipedia narratives about national histories (i) are skewed towards more recent events (recency bias) and (ii) are distributed unevenly across the continents with significant focus on the history of European countries (Eurocentric bias). We also establish that national historical timelines vary across language editions, although average interlingual consensus is rather high ..."

- "Using WikiProjects to Measure the Health of Wikipedia"[12] From the abstract: "We analysed 3.2 million Wikipedia articles associated with 618 active Wikipedia projects. The dataset contained the logs of over 115 million article revisions and 15 million talk entries both representing the activity of 15 million unique Wikipedians altogether. Our analysis revealed that per WikiProject, the number of article and talk contributions are increasing, as are the number of new Wikipedians contributing to individual WikiProjects." From the results section: "In comparison to Suh et al. and Halfaker et al., our findings suggest that based on the WikiProject activity, Wikipedia is not in decline, but still enjoying growth with new users, edits, and discussion activity. Akin to other complex online communities, using traditional methods to measure community and system health may not reflect their true state ..."

References

- ^ Danqi Chen; Adam Fisch; Jason Weston; Antoine Bordes: Reading Wikipedia to Answer Open-Domain Questions. Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)

- ^ Maki, Keith; Yoder, Michael; Jo, Yohan; Rosé, Carolyn (2017). "Roles and Success in Wikipedia Talk Pages: Identifying Latent Patterns of Behavior". Proceedings of the Eighth International Joint Conference on Natural Language Processing (Volume 1: Long Papers). 1: 1026–1035.

- ^ Lewoniewski, Włodzimierz; Węcel, Krzysztof; Abramowicz, Witold (2018-01-03). "Determining Quality of Articles in Polish Wikipedia Based on Linguistic Features". doi:10.20944/preprints201801.0017.v1.

- ^ Lewoniewski, Włodzimierz (2017-06-28). Enrichment of Information in Multilingual Wikipedia Based on Quality Analysis. International Conference on Business Information Systems. Lecture Notes in Business Information Processing. Springer, Cham. pp. 216–227. doi:10.1007/978-3-319-69023-0_19. ISBN 9783319690223.

- ^ Lewoniewski, Włodzimierz; Węcel, Krzysztof; Abramowicz, Witold (2017-10-12). Analysis of References Across Wikipedia Languages. International Conference on Information and Software Technologies. Communications in Computer and Information Science. Springer, Cham. pp. 561–573. doi:10.1007/978-3-319-67642-5_47. ISBN 9783319676418.

author's copy / conference presentation video recording

- ^ Rubira, Rainer; Gil-Egui, Gisela (2017-10-30). "Wikipedia as a space for discursive constructions of globalization". International Communication Gazette. 81: 3–19. doi:10.1177/1748048517736415. ISSN 1748-0485. S2CID 149356870.

- ^ Jipmo, Coriane Nana; Quercini, Gianluca; Bennacer, Nacéra (2017-11-05). FRISK: A Multilingual Approach to Find twitteR InterestS via wiKipedia. International Conference on Advanced Data Mining and Applications. Lecture Notes in Computer Science. Springer, Cham. pp. 243–256. doi:10.1007/978-3-319-69179-4_17. ISBN 9783319691787.

, author copy

- ^ Ledger, Thomas Stephen (2017-09-01). "Introduction to anatomy on Wikipedia". Journal of Anatomy. 231 (3): 430–432. doi:10.1111/joa.12640. ISSN 1469-7580. PMC 5554820. PMID 28703298.

- ^ Skolik, Sebastian (2017). "Instytucjonalizacja ruchu wolnej kultury na przykładzie projektów Wikimedia w przestrzeni Europy Środkowo-Wschodniej". Wydawnictwo Uniwersytetu Śląskiego: 347–367.

(in Polish, book chapter from ISBN 978-83-8012-916-0)

- ^ Sokolova, Sofiia (2017). "The Russian-language Wikipedia as a Measure of Society Political Mythologization". Journal of Modern Science. 33 (2): 147–176. ISSN 1734-2031.

- ^ Samoilenko, Anna; Lemmerich, Florian; Weller, Katrin; Zens, Maria; Strohmaier, Markus (2017). "Analysing Timelines of National Histories across Wikipedia Editions: A Comparative Computational Approach". Proceedings of the Eleventh International AAAI Conference on Web and Social Media (ICWSM in Montreal, Canada). 11: 210–219. arXiv:1705.08816. doi:10.1609/icwsm.v11i1.14881. S2CID 30431459.

- ^ Tinati, Ramine; Luczak-Roesch, Markus; Shadbolt, Nigel; Hall, Wendy (2015). "Using WikiProjects to Measure the Health of Wikipedia". Proceedings of the 24th International Conference on World Wide Web. ACM Press. pp. 369–370. doi:10.1145/2740908.2745937. ISBN 9781450334730.

/ Tinati, Ramine; Luczak-Rösch, Markus; Hall, Wendy; Shadbolt, Nigel (2015-05-23). Using WikiProjects to measure the health of Wikipedia. Web Science Track, World Wide Web Conference.

New monthly dataset shows where people fall into Wikipedia rabbit holes

The Wikimedia Foundation's Analytics team is releasing a monthly clickstream dataset. The dataset represents—in aggregate—how readers reach a Wikipedia article and navigate to the next. Previously published as a static release, this dataset is now available as a series of monthly data dumps for English, Russian, German, Spanish, and Japanese Wikipedias.

Have you ever looked up a Wikipedia article about your favorite TV show, only to end up hours later reading about some obscure episode in medieval history? First, know that you're not the only person who's done this. Roughly one out of three Wikipedia readers look up a topic because of a mention in the media, and often get lost following whatever link their curiosity takes them to.

Aggregate data on how readers browse Wikipedia contents can provide priceless insights into the structure of free knowledge and how different topics relate to each other. It can help identify gaps in content coverage (do readers stop browsing when they can't find what they are looking for?) and help determine if the link structure of the largest online encyclopedia is optimally designed to support a learner's needs.

Perhaps the most obvious usage of this data is to find where Wikipedia gets its traffic from. Clickstream data can not only be used to confirm that most traffic to Wikipedia comes via search engines, it can also be analyzed to find out—at any given time—which topics were popular on social media that resulted in a large number of clicks to Wikipedia articles.

In 2015, we released a first snapshot of this data, aggregated from nearly 7 million page requests. A step-by-step introduction to this dataset, with several examples of analysis it can be used for, is in a blog post by Ellery Wulczyn, one of the authors of the original dataset.

Since this data was first made available, it has been reused in a growing body of scholarly research. Researchers have studied how Wikipedia content policies affect and bias reader navigation patterns (Lamprecht et al, 2015); how clickstream data can shed light on the topical distribution of a reading session (Rodi et al, 2017); how the links readers follow are shaped by article structure and link position (Dimitrov et al, 2016; Lamprecht et al, 2017); how to leverage this data to generate related article recommendations (Schwarzer et al, 2016), and how the overall link structure can be improved to better serve readers' needs (Paranjape et al, 2016).

Due to growing interest in this data, the Wikimedia Analytics team has worked towards the release of a regular series of clickstream data dumps, produced at monthly intervals, for 5 of the largest Wikipedia language editions (English, Russian, German, Spanish, and Japanese). This data is available monthly, starting from November 2017.

A quick look into the November 2017 data for English Wikipedia tells us it contains nearly 26 million distinct links, between over 4.4 million nodes (articles), for a total of more than 6.7 billion clicks. The distribution of distinct links by type (see Ellery's blog post for more details) is as follows:

- 60% of links (15.6M) are internal and account for 1.2 billion clicks (18%).

- 37% of links (9.6M) are from external entry-points (like a Google search results page) to an article and account for 5.5 billion clicks.

- 3% of links (773k) have type "other", meaning they reference internal articles but the link to the destination page was not present in the source article at the time of computation. They account for 46 million clicks.
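As a rough illustration, a breakdown like the one above can be produced by summing click counts per link type. The sketch below assumes rows shaped as (source, destination, type, clicks), which is our reading of the dump's tab-separated layout; the sample rows and the function name `clicks_by_type` are invented for illustration and are not taken from the dataset.

```python
from collections import defaultdict


def clicks_by_type(rows):
    """Sum clicks per link type; return {type: (clicks, share of all clicks)}."""
    totals = defaultdict(int)
    for _source, _destination, link_type, clicks in rows:
        totals[link_type] += clicks
    grand_total = sum(totals.values())
    return {t: (n, n / grand_total) for t, n in totals.items()}


# Hypothetical sample rows: (source, destination, type, clicks)
sample = [
    ("other-search", "Article_A", "external", 800),
    ("Article_B", "Article_A", "link", 150),
    ("Article_C", "Article_A", "link", 30),
    ("Article_D", "Article_A", "other", 20),
]

for link_type, (clicks, share) in sorted(clicks_by_type(sample).items()):
    print(f"{link_type}: {clicks} clicks ({share:.0%})")
```

On the real monthly files, the same aggregation would be fed from the parsed dump rather than from a hand-written list.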

If we build a graph where nodes are articles and edges are clicks between articles, it is interesting to observe that the global graph is almost entirely connected (only 157 nodes are not connected to the main cluster). This means that between almost any two nodes on the graph (article or external entry point), a path exists. When looking at the subgraph of internal links, the number of disconnected components grows dramatically to almost 1.9 million forests, with a main cluster of 2.5M nodes. This difference arises because the external links have very few source nodes, each connected to many article nodes. Removing external links allows us to focus on navigation within articles.
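To make the component count concrete, here is a minimal sketch of how one might count connected components with a union-find structure. It treats clicks as undirected edges and, by default, keeps only rows of type "link". The row layout, the sample rows and the helper names are assumptions for illustration, and this is not necessarily how the figures above were computed.

```python
from collections import Counter


def connected_components(rows, keep_types=("link",)):
    """Return component sizes (largest first) of the undirected click graph."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        root = node
        while parent[root] != root:
            root = parent[root]
        # Path compression: point every node on the path straight at the root.
        while parent[node] != root:
            parent[node], node = root, parent[node]
        return root

    for source, destination, link_type, _clicks in rows:
        # Each destination is an article node, even if all its edges are filtered out.
        find(destination)
        if link_type in keep_types:
            root_a, root_b = find(source), find(destination)
            if root_a != root_b:
                parent[root_a] = root_b

    sizes = Counter(find(node) for node in list(parent))
    return sorted(sizes.values(), reverse=True)


# Hypothetical sample: one external entry point feeding four articles,
# with a single internal link between two of them.
sample_rows = [
    ("other-search", "A", "external", 10),
    ("other-search", "B", "external", 5),
    ("other-search", "C", "external", 7),
    ("other-search", "D", "external", 2),
    ("A", "B", "link", 3),
]

print(connected_components(sample_rows, keep_types=("link", "external", "other")))
print(connected_components(sample_rows))
```

In this toy sample the full graph forms a single component, while the internal-link subgraph splits into three, which mirrors the effect described above on a very small scale.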

In this context, a large number of disconnected forests lends itself to many interpretations. If we assume that Wikipedia readers come to the site to read articles about either sports or politics, and that neither group of readers is interested in the other category, we would expect two "forests", with few edges crossing from the "politics" forest to the "sports" one. The existence of 1.9 million forests could shed light on related areas of interest among readers – as well as articles that have lower link density – and topics that have a relatively small volume of traffic, making them appear as isolated nodes.

If you're interested in studying Wikipedia reader behavior and in using this dataset in your research, we encourage you to cite it via its DOI (doi.org/10.6084/m9.figshare.1305770) and to peruse its documentation. You may also be interested in additional datasets that Wikimedia Analytics publishes (such as article pageview data) or in navigation vectors learned from a corpus of Wikipedia readers' browsing sessions.

Joseph Allemandou, Senior Software Engineer, Analytics

Mikhail Popov, Data Analyst, Reading Product

Dario Taraborelli, Director, Head of Research

Interview with The Rambling Man, Wikipedia's top contributor of Featured Lists

The Rambling Man has written the most featured lists of any Wikipedian. This interview dwells upon his editing process, and how he gets articles up to Featured List status.

- When did you begin editing WP, and what brought you here in the first place?

- I think it was around May 2005, someone at work told me about this new-fangled encyclopedia, and I looked up Harold Faltermeyer, and edited it. The rest is history.

- Can you give a brief outline of your methods?

- Some editors are daunted by the FL process and steer clear. Briefly, what advice would you give to FLC tenderfeet?

- Are they really? FLC is about as easy as it gets in the big arcane process machine. To anyone who is thinking about nominating a list and thinks it is difficult, I'd say: contact any of the delegates or the director for advice. FLC is a pretty friendly place so it's unlikely that anyone's going to get burned by asking questions.

- What is unusual about your FL research process? What have you learned to do differently in your FL prep?

- I don't know. Nothing I do is unusual to me, I like picking up half-made lists and doing the research, finding the references, but I don't do much differently from when I started other than maintain a proper adherence to WP:MOS.

- What originally attracted you to write the series of The Boat Race articles? And how has your interest in the topic group changed since you began?

- I coxed at Cambridge, not the University boat, but my college first boat, and since then I've had an inherent interest. I think I have around 160 good articles and a handful of featured articles about the Boat Race, and continue to update and maintain the articles. At some point I'd like to make it a featured topic but there's a lot of work to be done there. Nothing has changed, I'm still interested in high quality Boat Race articles, and to make a comprehensive history of it here.

- How have the limitations of available sourcing shaped or propelled your work?

- I had access to a few sourcing websites thanks to Wikipedia, British Newspapers etc, but those have all gone now, which is a real shame. I'd love to get more access to those kinds of sources for the articles I'd like to write.

- What is your preferred style of collaboration in your featured work?

- All-in. I don't divide anything with anyone. I am technically competent within the Wiki markup, tables, etc, so I get a few requests to do that, and will happily mop up after others who are creating content.

- How have you handled working with unwitting collaborators?

- Collaboratively. When creating content it's all about the editors. Come one, come all. When generating decent articles, we usually aren't held back by the boring and pointed Wikipedia police.

- What kind of feedback have you received on your featured work off-wiki?

- I don't personally get any feedback really, although I do see featured articles I've written being highlighted on Twitter (for example), but that's not why we're here. I just get joy and pleasure from seeing articles improve.

- Do you have examples of content that for whatever reason you had to kill during the course of editing? How do you approach the idea of deleting portions of your work during the course of editing, and what do you do with the extraneous content, if anything?

- A recent thing, List of international goals scored by Ferenc Puskás, arguably one of the best players in history, and I can't find decent reliable sources for each goal. So, while I haven't "killed" the project, it's definitely on pause. I could push all the work I've done to the mainspace, but it'll be murdered in a tag-fest and I'll probably be blocked for controversial opinions.

TV, death, sports, and doodles

- This traffic report is adapted from the Top 25 Report, prepared with commentary by Stormy clouds (January 14 to 20), and igordebraga and Serendipodous (January 21 to 27)

Netflix got me wrapped around its finger (January 14 to 20)

This week's report is considerably more diverse, for better or worse. As ever, television has a dominant effect on the reading habits of Wikipedia's users, with Netflix maintaining its chokehold, securing eyes both on its own site and over here. The return of a pair of crime dramas also provided some intrigue for the readers of the wiki. The leading article, however, is that of Dolores O'Riordan, the lead singer of the Cranberries, who died tragically at a young age during the week. As ever, we can thank Reddit and Google for a couple of entries on the Report, and sports (gasp!) also managed to make its way in, encroaching on the fiefdom of period drama fanatics. With the apparent addiction to television that is suffered by many a reader on Wikipedia, it is little wonder that we spend our time like zombies, clicking through tangentially related links. Long may it last, I say.

For the week of January 14 to 20, 2018, the most popular articles on Wikipedia, as determined from the WP:5000 report were:

| Rank | Article | Views | Notes |
|---|---|---|---|
| 1 | Dolores O'Riordan | 2,536,032 | Beginning with musical tragedy, we have the death of the lead singer of The Cranberries (#4). This decade has had a remarkably high rate of attrition for talented musicians, and the death of my fellow countrywoman hit harder than most. From brave political statements to an oft-used ballad about rêves, the singer left a mark in her short life. Anyway, this is too upsetting to set the tone for the Report as a whole, so I shan't linger. |
| 2 | Martin Luther King Jr. | 1,130,743 | The national holiday to celebrate the champion of civil rights fell this week stateside, as it does every year. Intrigue surrounding the pastor was likely piqued by the fact that many media outlets drew parallels between a holiday designed to mark the life and death of the man who vanquished segregation, and Donald Trump's vitriol and rhetoric. |
| 3 | Gianni Versace | 1,051,793 | The legendary fashion designer is, perhaps not surprisingly, the subject of The Assassination of Gianni Versace: American Crime Story, the second season of American Crime Story. While the enterprise has not received the approval of Versace's family members, it seems to have riveted the readers of Wikipedia. If it can emulate the quality of its predecessor, we may perhaps truly understand why exactly the assassin (#5) decided to leave the icon on the floor. |
| 4 | The Cranberries | 1,018,955 | The band lost their lead singer, Dolores O'Riordan (#1), following her untimely demise in London. This prompted an outpouring of wiki-emotion and interest in the group, propelling them to the top 5 for the week. |
| 5 | Andrew Cunanan | 869,460 | The assassin of #3, Cunanan is also investigated thoroughly in the second season of American Crime Story, which seems to have propelled vast interest in his article. A notorious serial killer, Cunanan committed suicide following a lengthy and infamous manhunt. Unfortunately, should doubt persist about his guilt, we are in trouble. We cannot check if the glove fits, as he was cremated following his death. |
| 6 | Queen Victoria | 782,363 | Another article which attracts constant attention from Anglophiles, Victoria is a staple of the report. She has seen her interest increase greatly as a result of the PBS and ITV series which bears her name, where she is portrayed by Jenna Coleman (pictured). Many consider it to be a poor man's crown, but it can't be denied that Wikipedia's users are captivated by period drama focused on British queens. |
| 7 | Deaths in 2018 | 765,887 | Led by the demise of O'Riordan (#1), and a pioneering footballing legend, there was a lot of traffic at the list of the dead this week. Let's hope we are not in for another celebrity apocalypse. |
| 8 | Elizabeth II | 735,840 | Once again, Elizabeth Regina makes her way onto the Report by virtue of The Crown. Having written extensively about the series due to the high presence of second screeners, I finally decided to indulge in the series and binge watched it in its entirety. On the whole, I found it to be very entertaining, and yes, found myself journeying to the pages of the characters in the period drama – am I part of the problem? |
| 9 | Schöningen Spears | 641,099 | Another week, another Reddit entry sparking intense interest on Wikipedia. While I don't frequent r/TIL myself, I, as a commentator, do have to thank the moderators for introducing variety into the report. This one relates to wooden spears, which, through dendrochronology, have been dated as being over 300,000 years old. They were found very well preserved in a German mine. My question about this fascinating piece of trivia, naturally, given the fact that spears are potent weapons, is this – who had the bigger spear? On a side note, any of the TIL mods should journey over and help diversify DYK, as you clearly have the knack for it. |
| 10 | Case Keenum | 612,896 | I have never understood why Minnesota of all places adopted the Vikings as their sporting idols. I mean, Miami Dolphins, I get. 49ers, sure. But why the Vikings? Because it is cold in Minneapolis? Because you enjoy historical anachronisms? Demographics indicate that it should be the Saxons. It truly puzzles me, as someone who stems from a Viking town. Nonetheless, Keenum's story this year has been remarkable, progressing from third-choice QB to a Super Bowl contender. The air will be let out of the balloon, though, when the Norse legions get wiped out by a ragtag volunteer army and their venerated general – or not. |

Going through changes (January 21 to 27)

Feel like the report was pretty much the same from week to week? We've got you covered, as only four entries remain from the last report: the ever-present death list and the subjects of the TV shows Wikipedia readers seem to watch. Most of the changes are sports related: three entries in the build-up to Super Bowl LII, the possibility of another gridiron league returning, an association footballer making his debut, the two winners of the Australian Open, the latest UFC event... and on a darker, off-field note, two entries regarding the closure of a scandal akin to the Weinstein effect, as a pedophile physician who regularly abused American gymnasts is sent to prison. Also shifting things are three Google Doodles (including the top two entries of the week) and a Reddit topic, four Indian entries (a new Bollywood epic, two historic figures depicted in it, and a national holiday), the Oscar nominations and the latest turmoil the US government has put itself into.

For the week of January 21 to 27, 2018, the most popular articles on Wikipedia, as determined from the WP:5000 report were:

| Rank | Article | Class | Views | Image | Notes |

|---|---|---|---|---|---|

| 1 | Virginia Woolf | 1,497,132 |  |

The feminist icon and author of, among other books, Mrs Dalloway received a Google Doodle celebrating her 126th birthday. | |

| 2 | Sergei Eisenstein | 1,169,678 |  |

Like Virginia Woolf, pioneering Soviet filmmaker Eisenstein was born in the 19th century, peaked in the 1920s (including the iconic 1925 film Battleship Potemkin), died in the 1940s, and received a Google Doodle for his birthday (only this time a nice round number, 120). | |

| 3 | USA Gymnastics sex abuse scandal | 1,074,940 |

|

In 2016, while it was revealed Russia was doping so many athletes to the point a whole slew of them were banned, another Olympics powerhouse saw a scandal that was as bad, if not worse: a former USA Gymnastics national team physician was denounced for sexually abusing over 150 athletes – including McKayla Maroney, seen to the left doing that famous "not impressed" face in illustrious company – since 1992. This week, said physician was sentenced to prison. | |

| 4 | Tom Brady | 980,001 |

|

Unlike some of my friends, I don't care for American football. That being said, one of said friends is ecstatic that the Jacksonville Jaguars are incompetent and couldn't stop Brady and the New England Patriots from reaching a second consecutive Super Bowl and his eighth overall. | |

| 5 | Padmaavat | 920,105 |  |

India, ya know I love ya but baby you crazy. This week, a historic epic based on the poem Padmavat and starring Deepika Padukone (pictured) was released and is already making some big crore in spite of controversy – Padmaavat has been accused of being right wing and anti Muslim - that led to the movie being banned from a few Indian states, riots, firebombing, death threats to the director and cast, and even threats of mass suicide. | |

| 6 | Republic Day (India) | 832,560 |  |

There. See? National holiday! Fun! Do that. Instead of threatening to murder people or set fire to things. Many Indians took it to watch the movie in our previous entry, despite increased security. | |

| 7 | List of Super Bowl champions | 766,992 |  |

Self explanatory, really. Super Bowl LII is next week, and people wanted to remind themselves of the previous 51. The biggest winners are the Pittsburgh Steelers with six, though they can be matched by the New England Patriots if last year's result - seen in the picture, Tom Brady (#4) lifting the Vince Lombardi Trophy for the fifth time - repeats. Add the extended success to the fact that he's married to one of my country's most beautiful women and you can see why many downright envy Brady. | |

| 8 | Deaths in 2018 | 765,887 |  |

Needs no introduction. And maybe the most notable death last week was Mort Walker, finishing off an impressive 68 years of writing Beetle Bailey. | |

| 9 | Rani Padmini | 730,645 |  |

The legendary 13th–14th century Indian queen (Rani) who is the main character of Padmaavat (#5). | |

| 10 | 90th Academy Awards | 711,802 | The latest Oscar contenders were announced by Andy Serkis and Tiffany Haddish (the latter clearly struggling with the teleprompter), with the most nominated film being The Shape of Water (#13) amid the expected (most nominees, including Academy regulars Daniel Day-Lewis, Denzel Washington, and Meryl Streep) and surprises both good (Get Out for Best Picture! Logan for Best Adapted Screenplay!) and bad (The Boss Baby?!). The ceremony is on March 4th. |

Exclusions

- These lists exclude the Wikipedia main page, non-article pages (such as redlinks), and anomalous entries (such as DDoS attacks or likely automated views). Since mobile view data became available to the Report in October 2014, we exclude articles that have almost no mobile views (5–6% or less) or almost all mobile views (94–95% or more) because, based on our experience and research of the issue, they are very likely to be automated views. Please feel free to discuss any removal on the Top 25 Report talk page if you wish.

Cochrane–Wikipedia Initiative

Wikipedia is a powerful public health knowledge-translation tool. Across all languages, medical-related Wikipedia articles receive over 10 million visits per day from around the world.[1] To improve the quality of health-related Wikipedia articles, the Cochrane-Wikipedia Initiative was developed in 2014. Presently, there are over 2000 uses of Cochrane Reviews in Wikipedia. Many Cochrane Groups are training Wikipedia editors and developing new ways to share high-quality evidence on Wikipedia. Wiley and the Cochrane Library have distributed over 85 free accounts to Wikipedians to support the sharing of Cochrane evidence on Wikipedia.

What is Cochrane?

Cochrane is a global non-governmental organization that works with a network of contributors from around the world. These collaborative teams produce Cochrane Reviews, a high-quality source of health-related information. Cochrane Reviews help people make informed decisions about treatment options by providing a summary of the best evidence in the field. Cochrane Reviews are peer-reviewed, credible, and unbiased (for example, Cochrane does not accept funding from commercial sponsors or other sources with potential conflicts of interest). Cochrane Reviews meet Wikipedia’s criteria for reliable sources in medical articles (WP:MEDRS).

Improve the evidence base of Wikipedia articles using Cochrane evidence

Many content errors in Wikipedia articles arise because too few skilled editors are inserting new evidence.[2] A new Cochrane project is tackling this! We have created a Wikipedia project page that includes a list of all the Cochrane Reviews not presently in Wikipedia. Volunteers will be directed to the project page, given Wikipedia-editing support, and encouraged to “be bold” (Wikipedia-style) and select Cochrane Reviews to insert into Wikipedia. There are over 5000 reviews on the list, and while not all of the reviews will have an obvious home in Wikipedia, it is our goal to work through the list over the next 12 months and add in new Cochrane content. We are recruiting editors for this new task through Cochrane's TaskExchange; you can also visit the project page directly and start editing!

Keeping Cochrane evidence up to date in Wikipedia articles

Cochrane Reviews are updated regularly based on need, and updated reviews receive a new citation on MEDLINE. Once these updates are published, the next step is to update the citation within the Wikipedia article and make sure that the new conclusions are reflected on Wikipedia. Out-of-date Cochrane Reviews are flagged automatically in Wikipedia by the "Cochrane-Update-Bot", which now runs once a month. This volunteer task does not take a lot of time to perform, but the potential impact is very large. Between May and October 2017, volunteers updated 340 Wikipedia articles, and the articles have already received close to 32 million views. Please check our project updates page for articles newly eligible to update.

If you are interested in becoming involved or want more information, please visit the Cochrane-Wikipedia Initiative project page or contact User:JenOttawa.

Jennifer Dawson works with Cochrane’s Communications and External Affairs team as a Wikipedia Consultant. Her role includes maintaining and building further relations with Wikipedia, connecting new editors to the Wikipedia community, and supporting requests for engagement in Wikipedia work from the Cochrane community.

- ^ Heilman, James M.; West, Andrew G. (2015-03-04). "Wikipedia and medicine: quantifying readership, editors, and the significance of natural language". Journal of Medical Internet Research. 17 (3): e62. doi:10.2196/jmir.4069. ISSN 1438-8871. PMC 4376174. PMID 25739399.

- ^ Shafee, Thomas; Masukume, Gwinyai; Kipersztok, Lisa; Das, Diptanshu; Häggström, Mikael; Heilman, James (November 2017). "Evolution of Wikipedia's medical content: past, present and future". Journal of Epidemiology and Community Health. 71 (11): 1122–1129. doi:10.1136/jech-2016-208601. ISSN 1470-2738. PMC 5847101. PMID 28847845.

New cases initiated for inter-editor hostility and other collaboration issues

Requests for cases

New requests since the last issue of The Signpost include:

- Request "Joefromrandb" – initiated by MrX on 22 January 2018, reporting Joefromrandb. A number of behaviors were cited, including hostile editing in the form of personal attacks, assumptions of bad faith, inflammatory edit summaries, and edit warring. It appears to be a continuation of a 20 October 2017 case naming Joefromrandb and opened by TomStar81. As of the publication deadline, the case has support from 14 members of the Arbitration Committee and has crossed the ten votes required for acceptance under the four net votes criterion (barring reversed votes).

- Request "Civility issues" – initiated by Volvlogia on 24 January 2018, reporting Cassianto. At issue is incivility surrounding discussion of infobox artist. Third parties in the request referred back to Wikipedia:Arbitration/Requests/Case/Infoboxes, in which Arbcom decided that infobox usage was neither mandatory nor prohibited, and should be left to editor consensus. The prior infoboxes case did not name Cassianto, but its remedies placed other editors under editing restrictions on adding, removing or discussing infoboxes. Cassianto is currently under a three-month self-requested block initiated 26 January 2018. "Allegations of pro-infobox sock/meat activity" were raised in this new request by third parties; other parties expressed concerns about Arbcom creating new policy around infoboxes. As of the publication deadline, the request is 9/0/0.

- Update after publication deadline

The two requests mentioned above were accepted as the cases "Joefromrandb and others" and "Civility in infobox discussions".

Solving crime; editing out violence allegations

On the use of images

A bicyclist was hit. The driver fled the scene. With the cyclist left in critical condition, a Reddit user by the name of YoungSalt desperately posted on several forums with a picture of the bumper. "Help identify this piece of a bumper from a hit and run with a cyclist now in critical condition." Using, among other sources, an image from Wikipedia (File:2009 Toyota Camry (ACV40R) Ateva sedan (2015-05-29) 01 (cropped).jpg), other Reddit users were able to determine that the bumper fragment came from a 2009 Toyota Camry, and the previously unknown driver was caught. Free culture is valuable for its own sake, but even mundane pictures of cars can make a tangible difference in the world.

NFL coach's wife is editing out violence allegations on Wikipedia

At a glance, Ccable62 would appear to be a very obsessive fan of Tom Cable, repeatedly removing allegations of violence against the football coach. However, as The Wall Street Journal and the New York Post reported, Ccable62 is in fact Carol Cable, Tom's wife. Clearly, they felt that the allegations were unfounded, writing "ALL ACCUSATIONS AGAINST COACH CABLE WERE ORICRN VIA NFL. DA. AND POKICE TO BR ABSOLUTELY FALSE. THEY SHOULD NOT LIST LIES AND FALSR ACCUSATIONS IN THIS WIKIPEDIA AS IT IS SLANDER" in an edit summary. For now the allegations remain up, and Ccable62 has not edited since January 5.

Did UCF really win?

When the University of Central Florida football team went undefeated for 13 games, everyone knew that controversy would ensue. As college football in NCAA Division I FBS has no official NCAA championship, there is no defined winner other than whoever has the best record. Many, however, were and are of the opinion that Alabama truly won the division, and those people edited accordingly. Edit wars broke out across the spectrum, with an edit every 97 seconds on the 2017 UCF Knights football team page. Discussions broke out as to the color of the 2017 season at pages including coach Scott Frost, UCF Knights football, and the 2017 Alabama Crimson Tide football team (that page underwent 252 edits from mid-November to mid-January; of those, 124 came on January 8 and 9). (Originally reported in Sports Illustrated.)

In brief

- Jalopnik covered the efforts of User:McChizzle in updating the article Honda Ridgeline.

- Engadget and others reported on the "Wikipedia Rabbit Hole" phenomenon. See this issue's blog.

- New Hampshire Public Radio in the United States shares its research and reflections on who made the Wikipedia article for their capitol and how editors have developed it.

- Hürriyet Daily News, an English-language news source in Turkey, relays that Wikimedia Foundation executive director Katherine Maher has told the Turkish-language Habertürk that the Turkish government has not given any reason why it has blocked access to Wikipedia throughout the country continuously since April 2017.

- The Daily Mail, a tabloid newspaper known in the wiki community as being an unreliable source for Wikipedia articles (previous coverage), recently presented evidence on the dangers of Wikipedia's information about suicide methods. Citing a report by British politician Grant Shapps, previously known for editing the article about himself (previous coverage 1, 2), both the tabloid and the government report complain of the dangers of providing encyclopedic information in the way that Wikipedia does. The Signpost invites users who feel that content in Wikipedia should be different to edit articles and comment on discussion pages, including at Talk:Suicide methods, Wikipedia talk:WikiProject Death, and Wikipedia talk:WikiProject Medicine. Grant Shapps denies wiki mischief (previous coverage), but even if that were not the case, we are ever welcoming to critics and encourage everyone to check out the report.

- A vendor of chips (French fries) describes his experience of being the subject of a Wikipedia article for two weeks, after which the wiki's review process deleted the article.

- An editor from Slate considers the extent to which Wikipedia is a good place to start solving mysteries involving missing persons.

- SPORTbible and FK Panevėžys noted that FK Panevėžys, a Lithuanian football club from the second-level I Lyga, was allegedly duped into signing Barkley Miguel Panzo based on fabricated data from a Wikipedia page. However, the club apologized on 3 February for "the appearance of incorrect information" on its website.

The Newest Ride in Disney World

Thanks to Jdlrobson

See also (other places whose existence may be doubtful)

- Alice in Wonderland syndrome

- Visual release hallucinations

- Alcoholic hallucinosis

- Middle Earthboring

- Neverland

- Greenland

- Green Acres

- "Green, Green Grass of Home"

- Shangri-La

- Wikimedia Foundation

- Mister Rogers' Neighborhood

- Petticoat Junction

- Sodor Island

- Gilligan's Island

- Montana