Underwater computer vision

Underwater computer vision is a subfield of computer vision. In recent years, with the development of underwater vehicles (ROVs, AUVs, gliders), the need to record and process huge amounts of information has become increasingly important. Applications range from the inspection of underwater structures for the offshore industry to the identification and counting of fish for biological research. However, despite its potential impact on industry and research, the field is still at a very early stage of development compared with traditional computer vision. One reason is that as soon as the camera goes into the water, a whole new set of challenges appears. On the one hand, cameras have to be made waterproof, marine corrosion deteriorates materials quickly, and accessing and modifying experimental setups is costly in both time and resources. On the other hand, the physical properties of water make light behave differently, so the appearance of the same object changes with depth, organic material, currents, temperature and other factors.

Applications

- Seafloor survey

- Vehicle navigation and positioning[1]

- Biological monitoring (possibly aquatic biomonitoring)[citation needed][clarification needed]

- Video mosaics as visual navigation maps

- Submarine pipeline inspection

- Wreckage visualization[clarification needed][citation needed]

- Maintenance of underwater structures[citation needed]

- Drowning detection systems

Medium differences

Illumination

In air, light comes from the whole sky hemisphere on cloudy days and is dominated by direct sunlight on clear days. In water, direct lighting comes from a cone about 96° wide above the scene. This phenomenon is called Snell's window.[2]
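The cone angle follows from Snell's law: light arriving from anywhere in the sky (incidence angles up to 90°) is bent into a cone whose half-angle is the critical angle arcsin(n_air / n_water). The sketch below assumes a flat water surface and refractive indices of 1.0 for air and roughly 1.33 to 1.34 for water (the exact value depends on wavelength, temperature and salinity); the function name is illustrative. With these values it gives a window of roughly 96 to 98 degrees, consistent with the angle quoted above.

```python
import math

def snells_window_deg(n_water: float = 1.333, n_air: float = 1.0) -> float:
    """Full apex angle, in degrees, of the cone through which sky light enters water.

    A ray grazing the surface (90 degrees incidence in air) is refracted to the
    critical angle asin(n_air / n_water); the window spans twice that angle.
    """
    critical_rad = math.asin(n_air / n_water)
    return 2.0 * math.degrees(critical_rad)

# Assumed refractive indices spanning typical fresh water and seawater values.
for n in (1.33, 1.34):
    print(f"n_water = {n}: window about {snells_window_deg(n):.1f} degrees")
```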

Artificial lighting can be used where natural light levels are insufficient, or where the light path is too long to produce acceptable colour, because colour loss is a function of the total distance the light travels through water from the source to the camera lens port.[3]
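Because colour loss depends on the total water path, placing a light source close to the subject can shorten that path considerably. The comparison below is a sketch that assumes a simple exponential (Beer-Lambert) loss with a single hypothetical red-light attenuation coefficient of 0.5 per metre, and treats the sunlight path as purely vertical; real coefficients depend on wavelength and water type, and the function names are illustrative.

```python
import math

RED_ATTENUATION_PER_M = 0.5  # hypothetical coefficient, for illustration only

def path_length_m(source_to_subject_m: float, subject_to_camera_m: float) -> float:
    """Total distance travelled through water: light source -> subject -> lens port."""
    return source_to_subject_m + subject_to_camera_m

def remaining_fraction(path_m: float, coeff: float = RED_ATTENUATION_PER_M) -> float:
    """Fraction of red light left after travelling path_m metres (Beer-Lambert law)."""
    return math.exp(-coeff * path_m)

# Sunlight reaching a subject 10 m deep, viewed by a camera 2 m away.
sun_path = path_length_m(10.0, 2.0)
# A strobe held 1 m from the same subject, viewed from the same 2 m.
strobe_path = path_length_m(1.0, 2.0)

print(f"sunlight: {sun_path} m of water, {remaining_fraction(sun_path):.4f} of red left")
print(f"strobe:   {strobe_path} m of water, {remaining_fraction(strobe_path):.4f} of red left")
```

The camera-to-subject distance is the same in both cases; only the source-to-subject leg changes, which is why moving the light matters as much as moving the camera.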


Light attenuation


Water attenuates light far more strongly than air,[citation needed] and the intensity of light decays exponentially with the distance it travels. This results in hazy images with very low contrast.[clarification needed] The two main causes of attenuation are absorption, in which energy is removed from the light, and scattering, in which the direction of the light is changed. Scattering can be further divided into forward scattering, which increases blurriness, and backscattering, which limits contrast and is responsible for the characteristic veil of underwater images. Both absorption and scattering are heavily influenced by the amount of organic matter dissolved or suspended in the water.[citation needed]
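The exponential decay and the split between absorption and scattering can be sketched with the Beer-Lambert law, using a beam attenuation coefficient c = a + b, where a is the absorption coefficient and b the scattering coefficient. The coefficient values below are hypothetical and the function names illustrative; the sketch only tracks the first interaction of each photon with the water.

```python
import math

def beam_transmittance(a_per_m: float, b_per_m: float, distance_m: float) -> float:
    """Fraction of a collimated beam that is neither absorbed nor scattered."""
    c_per_m = a_per_m + b_per_m  # beam attenuation coefficient c = a + b
    return math.exp(-c_per_m * distance_m)

def removed_light(a_per_m: float, b_per_m: float, distance_m: float) -> tuple[float, float]:
    """Split the light removed from the beam into (absorbed, scattered) parts.

    The first interaction of each photon is an absorption with probability a / c
    and a scattering event with probability b / c, so the removed fraction
    divides in that ratio.
    """
    c_per_m = a_per_m + b_per_m
    removed = 1.0 - beam_transmittance(a_per_m, b_per_m, distance_m)
    return removed * a_per_m / c_per_m, removed * b_per_m / c_per_m

# Hypothetical coefficients (1/m) for moderately turbid water.
A_PER_M, B_PER_M = 0.1, 0.3
for d in (1.0, 2.0, 5.0):
    absorbed, scattered = removed_light(A_PER_M, B_PER_M, d)
    print(f"{d} m: transmitted {beam_transmittance(A_PER_M, B_PER_M, d):.3f}, "
          f"absorbed {absorbed:.3f}, scattered {scattered:.3f}")
```

Doubling the distance squares the transmitted fraction, which is what "exponential" means in practice: every additional metre removes the same proportion of what is left.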

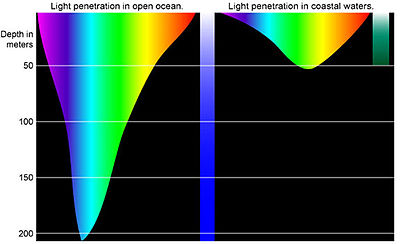

Light attenuation in water also depends on wavelength, so different colours are attenuated at different rates, leading to colour degradation with depth and distance. Red and orange light are attenuated fastest, followed by yellow and green; blue is the least attenuated visible wavelength.[4]
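The ordering above can be illustrated by applying a separate exponential decay to each colour band. The coefficients in this sketch are hypothetical, chosen only to reproduce the described ordering (red lost fastest, blue slowest), not measured values for any particular water body.

```python
import math

# Hypothetical attenuation coefficients (1/m), chosen only to reproduce the
# ordering described above: red is lost fastest, blue slowest.
COEFFS = {"red": 0.40, "orange": 0.25, "yellow": 0.12, "green": 0.07, "blue": 0.03}

def surviving_colours(distance_m: float) -> dict[str, float]:
    """Fraction of each colour band remaining after distance_m metres of water."""
    return {band: math.exp(-k * distance_m) for band, k in COEFFS.items()}

for d in (1.0, 5.0, 10.0, 20.0):
    fractions = surviving_colours(d)
    print(d, {band: round(f, 3) for band, f in fractions.items()})
```

Because the bands decay at different rates, the colour balance of an object shifts towards blue as the water path grows, not just its brightness.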



Challenges

In high-level computer vision, human-made structures (for example, straight edges and corners) are frequently used as image features for image matching in different applications. However, the sea bottom lacks such features, making it hard to find correspondences between two images.

To use a camera underwater, a watertight housing is required. However, refraction occurs at the water-glass and glass-air interfaces because the materials have different refractive indices. This introduces a non-linear image deformation.
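Why the deformation is non-linear can be seen with Snell's law for a flat port. The sketch below assumes a thin, flat port with parallel faces and the camera's centre of projection directly behind it, so only the change in ray direction matters; the refractive index and function names are illustrative assumptions. A pinhole camera places a ray at image radius f·tan(angle), so the ratio of tangents gives the radial magnification.

```python
import math

N_WATER = 1.333  # assumed refractive index of water
N_AIR = 1.0

def refracted_angle_deg(theta_water_deg: float) -> float:
    """Direction of a ray inside the housing, from Snell's law at a flat port.

    With parallel glass faces the glass only shifts the ray sideways,
    so the final direction depends on the water and air indices alone.
    """
    s = N_WATER / N_AIR * math.sin(math.radians(theta_water_deg))
    if s >= 1.0:
        raise ValueError("ray is totally internally reflected and never reaches the lens")
    return math.degrees(math.asin(s))

def radial_magnification(theta_water_deg: float) -> float:
    """Image radius with the port divided by the radius a pinhole would give in air.

    The focal length cancels because both radii are f * tan(angle).
    """
    theta_air_deg = refracted_angle_deg(theta_water_deg)
    return math.tan(math.radians(theta_air_deg)) / math.tan(math.radians(theta_water_deg))

for angle in (1, 10, 20, 30, 40):
    print(f"{angle:2d} deg in water -> magnification {radial_magnification(angle):.3f}")
```

Near the optical axis the magnification is close to n_water / n_air (objects look about a third larger), but it grows towards the edge of the field, so a single pinhole model with a rescaled focal length cannot describe the mapping.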

The motion of the vehicle presents another challenge. Underwater vehicles are constantly moving due to currents and other phenomena, which introduces further uncertainty into algorithms because small motions may appear in any direction. This can be especially important for video tracking. To reduce this problem, image stabilization algorithms may be applied.
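One common stabilization scheme accumulates the frame-to-frame camera motion into a trajectory, smooths that trajectory, and shifts each frame by the difference between the smoothed and raw paths. The sketch below handles only horizontal translation, assumes the per-frame shifts have already been measured (for example by feature tracking), and uses a hypothetical moving-average window; the function names are illustrative.

```python
def cumulative(shifts: list[float]) -> list[float]:
    """Camera trajectory: running sum of the measured frame-to-frame shifts."""
    trajectory, total = [], 0.0
    for s in shifts:
        total += s
        trajectory.append(total)
    return trajectory

def moving_average(values: list[float], radius: int) -> list[float]:
    """Centred moving average; the window shrinks at the sequence ends."""
    out = []
    n = len(values)
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out

def stabilizing_corrections(shifts: list[float], radius: int = 2) -> list[float]:
    """Per-frame shift that moves each frame from the raw to the smoothed trajectory."""
    trajectory = cumulative(shifts)
    smooth = moving_average(trajectory, radius)
    return [s - t for s, t in zip(smooth, trajectory)]

# Hypothetical measurements: the vehicle rocks back and forth by one pixel per frame.
jitter = [1.0, -1.0] * 10
print(stabilizing_corrections(jitter))
```

Smoothing the trajectory rather than zeroing all motion keeps deliberate, slow camera movement while removing the fast jitter caused by currents.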

Relevant technology

Image restoration

Image restoration techniques aim to model the degradation process and then invert it, recovering an estimate of the undegraded image.[5][6] This is generally a complex approach that requires many parameters[clarification needed] that vary considerably between different water conditions.
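As a minimal sketch of the restore-by-inversion idea, the code below uses a widely used simplified image-formation model, I = J·t + B·(1 − t) with t = exp(−c·d), where J is the unattenuated scene colour, B the backscatter (veiling light) colour, c a per-channel attenuation coefficient and d the camera-to-object distance. It is not the specific method of the works cited above; it assumes every parameter is known, and all values are hypothetical. In practice, estimating c, d and B is the hard part.

```python
import math

def degrade(J, c, d, B):
    """Forward model for one RGB pixel: attenuated signal plus backscatter veil."""
    out = []
    for j, ck, bk in zip(J, c, B):
        t = math.exp(-ck * d)  # per-channel transmission over distance d
        out.append(j * t + bk * (1.0 - t))
    return tuple(out)

def restore(I, c, d, B):
    """Invert the forward model for one pixel, assuming c, d and B are known."""
    out = []
    for i, ck, bk in zip(I, c, B):
        t = math.exp(-ck * d)
        out.append((i - bk * (1.0 - t)) / t)
    return tuple(out)

# Hypothetical parameters: per-channel attenuation (1/m) and a bluish backscatter colour.
C = (0.40, 0.07, 0.03)
B_VEIL = (0.05, 0.35, 0.45)
scene = (0.8, 0.6, 0.4)

observed = degrade(scene, C, 4.0, B_VEIL)
recovered = restore(observed, C, 4.0, B_VEIL)
print(observed, recovered)
```

The inversion divides by t, so at long distances (small t) any noise in the observed image is strongly amplified; this is one reason restoration needs accurate parameters.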

Image enhancement

Image enhancement[7] only tries to produce a visually more appealing image, without taking the physical image formation process into account. These methods are usually simpler and less computationally intensive.
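Per-channel contrast stretching is one of the simplest methods of this kind: each colour channel is linearly remapped so that a low and a high percentile span the full [0, 1] range. The sketch below is illustrative only; the percentile defaults and function names are hypothetical, and it makes no attempt to model attenuation or scattering.

```python
def percentile(sorted_values: list[float], q: float) -> float:
    """Simple percentile: the element nearest to position q (0..1) in a sorted list."""
    index = round(q * (len(sorted_values) - 1))
    return sorted_values[index]

def stretch_channel(values: list[float], low_q: float = 0.01, high_q: float = 0.99) -> list[float]:
    """Map the low_q percentile to 0 and the high_q percentile to 1, clipping the rest."""
    s = sorted(values)
    lo, hi = percentile(s, low_q), percentile(s, high_q)
    if hi <= lo:
        return list(values)  # flat channel: nothing to stretch
    return [min(1.0, max(0.0, (v - lo) / (hi - lo))) for v in values]

def enhance(pixels: list[tuple[float, float, float]], low_q: float = 0.01,
            high_q: float = 0.99) -> list[tuple[float, ...]]:
    """Stretch R, G and B independently; pixels are (r, g, b) values in [0, 1]."""
    channels = list(zip(*pixels))
    stretched = [stretch_channel(list(ch), low_q, high_q) for ch in channels]
    return [tuple(px) for px in zip(*stretched)]

# A small, hypothetical low-contrast bluish image.
image = [(0.10, 0.40, 0.50), (0.20, 0.50, 0.60), (0.15, 0.45, 0.55)]
print(enhance(image))
```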

Color correction

Various algorithms exist that perform automatic color correction.[8][9] The UCM (Unsupervised Color Correction Method), for example, works in the following steps. First, it reduces the color cast by equalizing the color values. Then it enhances contrast by stretching the red histogram towards the maximum. Finally, it optimizes the saturation and intensity components.[citation needed]
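The three steps can be sketched as below. This is a loose approximation, not the published UCM: the "equalizing" step is assumed to be a gray-world gain that brings the channel means together, the red stretch keeps the red minimum and moves the red maximum to 1.0, and saturation and brightness are stretched in the HSV space of Python's `colorsys` module as a stand-in for the saturation and intensity components described above. All function names are hypothetical.

```python
import colorsys

def equalize_cast(pixels):
    """Step 1 (assumed gray-world): scale each channel so its mean matches the overall mean."""
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    target = sum(means) / 3
    gains = [target / m if m > 0 else 1.0 for m in means]
    return [tuple(min(1.0, p[ch] * gains[ch]) for ch in range(3)) for p in pixels]

def stretch(values, lo=0.0, hi=1.0):
    """Linearly map [min(values), max(values)] onto [lo, hi]."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        return list(values)
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]

def stretch_red_up(pixels):
    """Step 2: stretch the red histogram towards the maximum, keeping its minimum."""
    reds = [p[0] for p in pixels]
    new_reds = stretch(reds, lo=min(reds), hi=1.0)
    return [(r, p[1], p[2]) for r, p in zip(new_reds, pixels)]

def stretch_sat_val(pixels):
    """Step 3 (HSV stand-in): stretch saturation and brightness to the full range."""
    hsv = [colorsys.rgb_to_hsv(*p) for p in pixels]
    sats = stretch([h[1] for h in hsv])
    vals = stretch([h[2] for h in hsv])
    return [colorsys.hsv_to_rgb(h[0], s, v) for h, s, v in zip(hsv, sats, vals)]

def ucm_sketch(pixels):
    """Apply the three steps in order to a list of (r, g, b) pixels in [0, 1]."""
    return stretch_sat_val(stretch_red_up(equalize_cast(pixels)))

# A small, hypothetical image with a strong blue-green cast.
image = [(0.10, 0.40, 0.60), (0.20, 0.50, 0.80), (0.05, 0.30, 0.50), (0.15, 0.45, 0.70)]
print(ucm_sketch(image))
```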

Underwater stereo vision

It is usually assumed that stereo cameras have been calibrated beforehand, both geometrically and radiometrically. This leads to the assumption that corresponding pixels should have the same color. However, this cannot be guaranteed in an underwater scene because of dispersion and backscatter. It is nevertheless possible to model these phenomena digitally and create a virtual image with those effects removed.
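The sketch below illustrates why the constant-colour assumption fails and how modelling the medium can restore it. It assumes the simplified formation model I = J·t + B·(1 − t) with t = exp(−c·d), known distances from each camera to the scene point, and hypothetical water parameters; the function names are illustrative, not a specific published stereo method.

```python
import math

C = (0.40, 0.07, 0.03)       # hypothetical per-channel attenuation (1/m)
B_VEIL = (0.05, 0.35, 0.45)  # hypothetical backscatter colour

def observe(J, d):
    """Colour recorded for scene colour J seen through d metres of water."""
    return tuple(j * math.exp(-c * d) + b * (1.0 - math.exp(-c * d))
                 for j, c, b in zip(J, C, B_VEIL))

def remove_water(I, d):
    """Invert the model to get a 'virtual' colour with the water removed."""
    return tuple((i - b * (1.0 - math.exp(-c * d))) / math.exp(-c * d)
                 for i, c, b in zip(I, C, B_VEIL))

def colour_difference(p, q):
    """Largest per-channel difference between two colours."""
    return max(abs(a - b) for a, b in zip(p, q))

point = (0.7, 0.5, 0.3)      # true colour of one scene point
left = observe(point, 2.0)   # left camera is 2.0 m from the point
right = observe(point, 2.6)  # right camera is 2.6 m from the point

print("raw difference:", colour_difference(left, right))
print("after removing water:",
      colour_difference(remove_water(left, 2.0), remove_water(right, 2.6)))
```

The two cameras see the same point through different amounts of water, so their raw colours differ even with perfect calibration; after compensation the corresponding pixels agree again.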

Other application fields

Imaging sonars[10][11] have become increasingly accessible and have gained resolution, delivering better images. Sidescan sonars are used to produce complete maps of regions of the sea floor by stitching together sequences of sonar images. However, sonar images often lack proper contrast[clarification needed] and are degraded by artefacts and distortions caused by noise, attitude changes of the AUV/ROV carrying the sonar, or non-uniform beam patterns. Another common problem in sonar computer vision is the comparatively low frame rate of sonar images.[12]

References

- Horgan, Jonathan; Toal, Daniel (2009). "Computer Vision Applications In the Navigation of Unmanned Underwater Vehicles". Underwater Vehicles. doi:10.5772/6703. ISBN 978-953-7619-49-7. S2CID 2940888.

- Edge, Martin; Turner, Ian (1999). The Underwater Photographer. Focal Press. ISBN 0-240-51581-1. Archived from the original on 22 October 2023. Retrieved 24 July 2024.

- "Color underwater". Deep-six.com. Archived from the original on 23 July 2024. Retrieved 23 July 2024.

- Hegde, M. (30 September 2009). "The Blue, the Bluer, and the Bluest Ocean". NASA Goddard Earth Sciences Data and Information Services. Archived from the original on 12 July 2009. Retrieved 27 May 2011.

- Schechner, Yoav Y.; Karpel, Nir. "Clear Underwater Vision". Proc. Computer Vision & Pattern Recognition. I: 536–543.

- Hou, Weilin; Gray, Deric J.; Weidemann, Alan D.; Arnone, Robert A. (2008). "Comparison and Validation of Point Spread Models for Imaging in Natural Waters". Optics Express. 16 (13): 9958–9965. Bibcode:2008OExpr..16.9958H. doi:10.1364/OE.16.009958. PMID 18575566.

- Schettini, Raimondo; Corchs, Silvia (2010). "Underwater Image Processing: State of the Art Image Enhancement Methods". EURASIP Journal on Advances in Signal Processing. 2010: 14. doi:10.1155/2010/746052.

- Akkaynak, Derya; Treibitz, Tali (2019). "Sea-Thru: A Method for Removing Water From Underwater Images". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

- Iqbal, K.; Odetayo, M.; James, A.; Salam, R.A. "Enhancing the Low Quality Images Using Unsupervised Color Correction Methods" (PDF). Systems, Man and Cybernetics.[dead link]

- Mignotte, M.; Collet, C. (2000). "Markov Random Field and Fuzzy Logic Modeling in Sonar Imagery". Computer Vision and Image Understanding. 79: 4–24. CiteSeerX 10.1.1.38.4225. doi:10.1006/cviu.2000.0844.

- Cervenka, Pierre; de Moustier, Christian (1993). "Sidescan Sonar Image Processing Techniques". IEEE Journal of Oceanic Engineering. 18 (2): 108. Bibcode:1993IJOE...18..108C. doi:10.1109/48.219531.

- Trucco, E.; Petillot, Y.R.; Tena Ruiz, I. (2000). "Feature Tracking in Video and Sonar Subsea Sequences with Applications". Computer Vision and Image Understanding. 79: 92–122. doi:10.1006/cviu.2000.0846.