Wikipedia:Wikipedia Signpost/2011-08-29/Recent research

Article promotion by collaboration; deleted revisions; Wikipedia's use of open access; readers unimpressed by FAs; swine flu anxiety

Effective collaboration leads to earlier article promotion

A team of researchers from the MIT Center for Collective Intelligence investigated the structure of collaboration networks in Wikipedia and their performance in bringing articles to higher levels of quality. The study,[1] presented at HyperText 2011, defines collaboration networks by considering editors who edited the same article and exchanged at least one message on their respective talk pages. The authors studied whether a pre-existing collaboration network or structured collaboration management via WikiProjects accelerates the process of quality promotion of English Wikipedia articles. The metric used is the time it takes to bring articles from B-class to GA or from GA to FA on the Wikipedia 1.0 quality assessment scale. The results show that the WikiProject importance of an article increases its promotion rate to GA and FA by 27% and 20%, respectively. On the other hand, the number of WikiProjects an article is part of reduces the rate of promotion to FA by 32%, an effect that the authors speculated could imply that these articles are broader in scope than those claimed by fewer WikiProjects. Pre-existing collaboration also dramatically affects the rate of promotion to GA and FA (with 150% and 130% increases, respectively): prior collaborative ties significantly accelerate article quality promotion. The authors also identify contrasting effects of network structure (cohesiveness and degree centrality) on the increase of GA and FA promotion times.

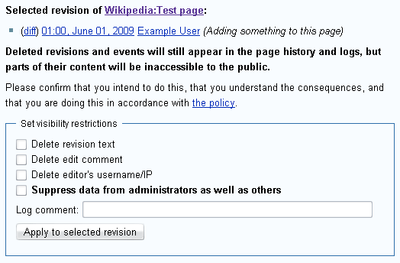

Deleted revisions in the English Wikipedia

Wikipedia and open-access repositories

The paper "Wikipedia and institutional repositories: an academic symbiosis?"[3] is concerned with Wikipedia articles citing primary sources when suitable secondary ones (as per WP:SCHOLARSHIP) are not available. Only about 10% of scholarly papers are published as open access, but another 10% are freely available through self-archiving, thus doubling in practice the number of scholarly primary resources that Wikipedia editors have at their disposal. The article describes a sample of institutional repositories from the major higher-education institutions in New Zealand, along with three Australian institutions serving as controls, and analyses the extent to which they are linked from Wikipedia (across languages).

Using Yahoo! Site Explorer, a total of 297 links were estimated to go from Wikipedia articles to these repositories (40% of which went to the three Australian controls), mostly to support specific claims but also (in 35% of the cases) for background information. In terms of document type linked from Wikipedia, PhD theses, academic journal articles and conference papers each scored about 20% of the entries, whereas in terms of Wikipedia language, 35% of links came from non-English Wikipedias.

The paper cites strong criticism of institutional repositories[4] but proposes "a potential symbiosis between Wikipedia and academic research in institutional repositories" – Wikipedia getting access to primary sources, and institutional repositories growing their user base – as a new reason that "academics should be systematically placing their research work in institutional repositories". Ironically, the author himself did not follow this advice. However, such potential alignments between Wikimedians and open access have been observed in related contexts: according to the expert participation survey, for instance, Wikipedia contributors are more likely to have most or all of their publications freely available on the web.

As is customary in academia, the paper does not provide links to the underlying data, but the Yahoo! Site Explorer queries can be reconstructed (archived example) or compared to Wikipedia search results and site-specific Google searches. There is also code from linkypedia and the Wikipedia part of the PLoS Altmetrics study that could both be adapted for automating such searches.

Quality of featured articles doesn't always impress readers

In an article titled "Information quality assessment of community generated content: A user study of Wikipedia" (abstract),[5] published this month by the Journal of Information Science, three researchers from Bar-Ilan University reported on a study examining judgment of Wikipedia's quality by non-expert readers (done as part of the 2008 doctoral thesis of one of the authors, Eti Yaari, which was already covered in Haaretz at the time).

The paper starts with a review of existing literature on information quality in general, and on measuring the quality of Wikipedia articles in particular. The authors then describe the setup of their qualitative study: 64 undergraduate and graduate students were each asked to examine five articles from the Hebrew Wikipedia, as well as their revision histories (an explanation was given), and judge their quality by choosing the articles they considered best and worst. The five articles were pre-selected to include one containing each of four quality/maintenance templates used on the Hebrew Wikipedia: featured, expand, cleanup and rewrite, plus one "regular" article. But only half of the participants were shown the articles with the templates. Participants were asked to "think aloud" and explain their choices; the audio recording of each session (on average 58 minutes long) was fully transcribed for further analysis, which found that the criteria mentioned by the students could be divided into "measurable" criteria "that can be objectively and reliably assigned by a computer program without human intervention (e.g. the number of words in an article or the existence of images)" and "non-measureable" ones ("e.g. structure, relevance of links, writing style", but also in some cases the nicknames of the Wikipedians in the version history). Interestingly, a high number of edits was both seen as a criterion indicating good quality by some, and indicating bad quality by others, and likewise for a low number of edits.
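To make the "measurable" category concrete, here is a minimal Python sketch of how two of the criteria named above (number of words, existence of images) could be computed automatically. It is only an illustration and not the procedure used in the study; the function name `measurable_criteria`, the `File:`/`Image:` link prefixes, and the sample text are assumptions, and a real wiki may use localized namespace names.

```python
import re

# Assumed image-link prefixes; localized wikis may use other namespace names.
IMAGE_LINK = re.compile(r"\[\[\s*(?:File|Image)\s*:", re.IGNORECASE)


def measurable_criteria(wikitext: str) -> dict:
    """Return simple features that a program can assign without human judgment."""
    # Rough word count: runs of word characters, markup tokens included.
    words = re.findall(r"\w+", wikitext)
    images = IMAGE_LINK.findall(wikitext)
    return {
        "word_count": len(words),
        "image_count": len(images),
        "has_images": bool(images),
    }


# Invented sample text, for illustration only.
sample = "Swine flu is an infection. [[File:H1N1.png|thumb|Virus]] It spreads among pigs."
features = measurable_criteria(sample)
```

Criteria such as structure, relevance of links, or writing style have no comparably simple counterpart, which is the distinction the authors draw.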

Comparing the quality judgments of the study's participants with those of Wikipedians as expressed in the templates revealed some striking differences: "The perceptions of our users regarding quality did not always coincide with the perceptions of Wikipedia editors, since in fewer than half of the cases the featured article was chosen as best". In fact, "in three cases, the featured article was chosen as the lowest quality article out of the five articles assessed by these participants." However, those participants who were able to see the templates chose the "featured" article considerably more often as the best one, "even though the participants did not acknowledge the influence of these templates".

In swine flu outbreak, Wikipedia reading preceded blogging and newspaper writing

A paper published in Health Communication examined "Public Anxiety and Information Seeking Following the H1N1 Outbreak" (of swine influenza in 2009) by tracking, among other measures, page view numbers on Wikipedia, which it described as "a popular health reference website" (citing a 2009 paper co-authored by Wikipedian Tim Vickers: "Seeking health information online: Does Wikipedia matter?"[6]). Specifically, the researchers – psychologists from the University of Texas and the University of Auckland – selected 39 articles related to swine flu (for example, H1N1, hand sanitizer, and fatigue) and examined their daily page views from two weeks prior to two weeks after the first announcement of the H1N1 outbreak during the 2009 flu pandemic. (The exact source of the page view numbers is not stated, but a popular site providing such data exists.) Controlling for variations per day of the week, they found that "the increase in visits to Wikipedia pages happened within days of news of the outbreak and returned to baseline within a few weeks. The rise in number of visits in response to the epidemic was greater the first week than the second week .... At its peak, the seventh day, there were 11.94 times as many visits per article on average."
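One simple way to control for day-of-week variation is to divide each post-announcement day's views by the average for the same weekday in the two weeks before. The Python sketch below illustrates that idea under stated assumptions: the paper's exact adjustment method is not described here, and the function name `weekday_adjusted_ratios`, the date, and all view counts are invented for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean


def weekday_adjusted_ratios(views, announcement_day):
    """Map each day on or after the announcement to views / same-weekday baseline.

    `views` maps a date to a page view count. The baseline for each weekday
    is the mean of that weekday's counts before the announcement.
    """
    by_weekday = defaultdict(list)
    for day, count in views.items():
        if day < announcement_day:
            by_weekday[day.weekday()].append(count)
    baseline = {wd: mean(counts) for wd, counts in by_weekday.items()}
    return {
        day: count / baseline[day.weekday()]
        for day, count in views.items()
        if day >= announcement_day
    }


# Invented data: quieter weekends before the announcement, tenfold traffic after.
announcement = date(2000, 1, 15)  # made-up date, not the real announcement day
views = {}
for offset in range(-14, 14):
    day = announcement + timedelta(days=offset)
    quiet = 50 if day.weekday() >= 5 else 100
    views[day] = quiet if offset < 0 else quiet * 10

ratios = weekday_adjusted_ratios(views, announcement)
```

With this toy data every ratio is 10, even though raw weekend counts are half the weekday counts; without the weekday baseline, the weekend dip would distort the comparison.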

While these findings may not be particularly surprising to Wikipedians who are used to current events driving attention for articles, the authors offer intriguing comparisons to the two other measures of public health anxiety they study in the paper: the number of newspaper articles mentioning the disease or the virus, and the number of blog entries mentioning the disease. "Increased attention to H1N1 happens most rapidly in Wikipedia page views, then in the blogs, and finally in newspapers. The duration of peak attention to H1N1 is shortest for the blog writers, followed by Wikipedia viewers, and is longest in newspapers." Examining correlations, they found that "The number of blog entries was most strongly related to the number of newspaper articles and number of Wikipedia visits on the same day. The number of Wikipedia visits was most strongly related to the number of newspaper articles the following day. In other words, public reaction is visible in online information seeking before it is visible in the amount of newspaper coverage." Finally, the authors emphasize the advantages of their approach to measure public anxiety in such situations over traditional approaches. Specifically, they point out that in the H1N1 case the first random telephone survey was conducted only two weeks after the outbreak, and therefore underestimated the initial public anxiety levels, as the authors argue based on their combined data including Wikipedia pageviews.
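The "most strongly related to ... the following day" finding is the kind of result a lagged correlation produces. As a hedged illustration, the Python sketch below correlates one daily series with another shifted by a number of days and picks the lag with the highest Pearson correlation. The helper names (`pearson`, `lagged_correlation`, `best_lag`) and the two short series are invented; the paper's actual statistical procedure may differ.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def lagged_correlation(leader, follower, lag):
    """Correlate leader[t] with follower[t + lag], for lag >= 0 days."""
    if lag == 0:
        return pearson(leader, follower)
    return pearson(leader[:-lag], follower[lag:])


def best_lag(leader, follower, max_lag):
    """Return the lag (in days) at which the correlation is highest."""
    return max(range(max_lag + 1),
               key=lambda lag: lagged_correlation(leader, follower, lag))


# Invented daily counts: the second series repeats the first one day later.
wiki_views = [1, 2, 9, 7, 4, 2, 1, 1]
news_articles = [1, 1, 2, 9, 7, 4, 2, 1]

lag = best_lag(wiki_views, news_articles, max_lag=3)
```

In this toy example the best lag is one day, which mirrors the reported pattern of Wikipedia visits preceding newspaper coverage; real series would be noisier and would need the day-of-week adjustment first.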

Extensive analysis of gender gap in Wikipedia to be presented at WikiSym 2011

A paper by researchers of GroupLens Research to be presented at the upcoming WikiSym 2011 symposium offers the most comprehensive analysis of gender imbalance in Wikipedia so far.[7] This study was covered by a summary in the August 15 Signpost edition and, facilitated by a press release, it generated considerable media attention. Below are some of the main highlights from this study:

- reliably tracking gender in Wikipedia is complicated due to the different (and potentially inconsistent) ways in which users can specify gender information.

- self-identified females comprise 16.1% of editors who started editing in 2009 and specified their gender, but they only account for 9% of the total number of edits by this cohort and the gap is even wider among highly active editors.

- the gender gap has remained fairly constant since 2005.

- gender differences emerge when considering areas of contribution, with a greater concentration of women in the People and Arts areas.

- male and female editors edit user-centric namespaces differently: on average, a female makes a significantly higher concentration of her edits in the User and User Talk namespaces, mostly at the cost of fewer edits in Main and Talk.

- a significantly higher proportion of females have participated in the "Adopt a User" program as mentees.

- female editors have an overall lower probability of becoming admins. However, when controlling for experience measured by number of edits it turns out that women are significantly more likely to become administrators than their male counterparts.

- articles that have a higher concentration of female editorship are more likely to be contentious (when measured by proportion of edit-protected articles) than those with more males.

- in their very initial contributions, female editors are more likely to be reverted than male editors but there is hardly any statistical difference between females and males in how often they are reverted after their seventh edit. The likelihood of the departure of a female editor, however, is not affected more than that of a male by reverts of edits that are genuine contributions (i.e. not considered vandalism).

- females are significantly more likely to be reverted for vandalizing Wikipedia’s articles and while males and females are temporarily blocked at similar rates, females are significantly more likely to be blocked permanently. In these cases, though, self-reported gender may be less reliable.

A second, unpublished paper addressing gender imbalance in Wikipedia ("Gender differences in Wikipedia editing") by Judd Antin and collaborators will be presented at WikiSym 2011.

"Bandwagon effect" spurs wiki adoption among Chinese-speaking users

In a paper titled "The Behavior of Wiki Users",[8] appearing in Social Behavior and Personality: An International Journal, two researchers from Taiwan used the Unified Theory of Acceptance and Use of Technology (UTAUT) "to explain why people use wikis", based on an online questionnaire distributed in July 2010 in various venues and to Wikipedians in particular. According to an online version of the article, the survey generated 243 valid responses from the Chinese-speaking world, which showed that – similar to previous results for other technologies – each of the following "had a positive impact on the intention to use wikis":

- Performance expectancy (measured by agreement to statements such as "Wikis, for example Wikipedia, help me with knowledge sharing and searches")

- Effort expectancy (e.g. "Wikis are easier to use than other word processors.")

- Facilitating conditions (e.g. "Other wiki users can help me solve technical problems.")

- User involvement (e.g. "Collaboration on wikis is exciting to me.")

The impact of user involvement was the most significant. Social influence (e.g. "The people around me use wikis") was not found to play a significant role. On the other hand, the researchers state that a person's general susceptibility to the "bandwagon effect" (measured by statements such as "I often follow others' suggestions") "can intensify the impact of [an already present] intention to use wikis on the actual use ... This can be explained in that users tend to translate their intention to use into actual usage when their inclination receives positive cues, but the intention alone is not sufficient for them to turn intention into action. ... people tend to be more active in using new technology when social cues exist. This is especially true for societies where obedience is valued, such as Taiwan and China."

In brief

- Mani Pande, a research analyst with the Wikimedia Foundation's Global Development Department, announced the final report from the latest Wikipedia Editor Survey. A dump with raw anonymized data from the survey was also released by WMF (read the full coverage).

- In an article appearing in the Communications of the ACM with the title "Reputation systems for open collaboration", a team of researchers based at UCSC discuss the design of reputational incentives in open collaborative systems and review lessons learned from the development and analysis of two different kinds of reputation tools for Wikipedia (WikiTrust) and for collaborative participation in Google Maps (CrowdSensus).[9]

- A paper presented by a Spanish research team at CISTI 2011 describes an experiment in using Wikipedia in the classroom and reports on "how the cooperation of Engineering students in a Wikipedia editing project helped to improve their learning and understanding of physics".[10]

- Researchers from Karlsruhe Institute of Technology released an analysis and open dataset of 33 language corpora extracted from Wikipedia.[11]

- A team from the University of Washington and UC Irvine will present a new tool at WikiSym 2011 for vandalism detection and an analysis of its performance on a corpus of Wikipedia data from the Uncovering Plagiarism, Authorship, and Social Software Misuse (PAN) workshop.[12]

- A paper in the Journal of Oncology Practice, titled "Patient-Oriented Cancer Information on the Internet: A Comparison of Wikipedia and a Professionally Maintained Database",[13] compared Wikipedia's coverage of ten cancer types with that of Physician Data Query (PDQ), a database of peer-reviewed, patient-oriented summaries about cancer-related subjects which is run by the U.S. National Cancer Institute (NCI). Last year, the main results had already been presented at a conference, announced in a press release and summarized in the Signpost: "Wikipedia's cancer coverage is reliable and thorough, but not very readable". In addition, the journal article examines a few other aspects, e.g. that on search engines Google and Bing, "in more than 80% of cases, Wikipedia appeared above PDQ in the results list" for a particular form of cancer.

- A paper published in Springer's Lecture Notes in Computer Science presents a new link prediction algorithm for Wikipedia articles and discusses how relevant links to and from new articles can be inferred "from a combination of structural requirements and topical relationships".[14]

References

- [1] K. Nemoto, P. Gloor, and R. Laubacher (2011). Social capital increases efficiency of collaboration among Wikipedia editors. In Proceedings of the 22nd ACM conference on Hypertext and hypermedia – HT '11, 231. New York, New York, USA: ACM Press. DOI • PDF

- [2] A.G. West and I. Lee (2011). What Wikipedia Deletes: Characterizing Dangerous Collaborative Content. In WikiSym 2011: Proceedings of the 7th International Symposium on Wikis. PDF

- [3] Alastair Smith (2011). Wikipedia and institutional repositories: an academic symbiosis? In E. Noyons, P. Ngulube and J. Leta (Eds), Proceedings of the 13th International Conference of the International Society for Scientometrics & Informetrics, Durban, South Africa, July 4–7, 2011 (pp. 794–800). PDF

- [4] Dorothea Salo (2008). Innkeeper at the Roach Motel. Library Trends 57 (2): 98–12. DOI • PDF

- [5] E. Yaari, S. Baruchson-Arbib, and J. Bar-Ilan (2011). Information quality assessment of community generated content: A user study of Wikipedia. Journal of Information Science (August 15, 2011). DOI

- [6] Michaël R. Laurent and Tim J. Vickers (2009). Seeking health information online: does Wikipedia matter? Journal of the American Medical Informatics Association: JAMIA 16(4): 471–9. DOI • PDF

- [7] S.T.K. Lam, A. Uduwage, Z. Dong, S. Sen, D.R. Musicant, L. Terveen, and J. Riedl (2011). WP:Clubhouse? An Exploration of Wikipedia's Gender Imbalance. In WikiSym 2011: Proceedings of the 7th International Symposium on Wikis, 2011. PDF

- [8] Wesley Shu and Yu-Hao Chuang (2011). The Behavior of Wiki Users. Social Behavior and Personality: An International Journal 39, no. 6 (October 1, 2011): 851–864. DOI

- [9] L. De Alfaro, A. Kulshreshtha, I. Pye, and B. Thomas Adler (2011). Reputation systems for open collaboration. Communications of the ACM 54 (8), August 1, 2011: 81. DOI • PDF

- [10] Pilar Mareca and Vicente Alcober Bosch (2011). Editing the Wikipedia: Its role in science education. In 6th Iberian Conference on Information Systems and Technologies (CISTI). HTML

- [11] Denny Vrandečić, Philipp Sorg, and Rudi Studer (2011). Language resources extracted from Wikipedia. In Proceedings of the sixth international conference on Knowledge capture – K-CAP '11, 153. New York, New York, USA: ACM Press, 2011. DOI • PDF

- [12] Sara Javanmardi, David W Mcdonald, and Cristina V Lopes (2011). Vandalism Detection in Wikipedia: A High-Performing, Feature-Rich Model and its Reduction Through Lasso. In WikiSym 2011: Proceedings of the 7th International Symposium on Wikis, 2011. PDF

- [13] Malolan S. Rajagopalan, Vineet K. Khanna, Yaacov Leiter, Meghan Stott, Timothy N. Showalter, Adam P. Dicker, and Yaacov R. Lawrence (2011). Patient-Oriented Cancer Information on the Internet: A Comparison of Wikipedia and a Professionally Maintained Database. Journal of Oncology Practice 7(5). PDF • DOI

- [14] Kelly Itakura, Charles Clarke, Shlomo Geva, Andrew Trotman, and Wei Huang (2011). Topical and Structural Linkage in Wikipedia. In: Advances in Information Retrieval, edited by Paul Clough, Colum Foley, Cathal Gurrin, Gareth Jones, Wessel Kraaij, Hyowon Lee, and Vanessa Murdock, 6611:460–465. Berlin, Heidelberg: Springer Berlin Heidelberg, 2011. DOI • PDF

Discuss this story

Am I the only one who's seeing strange "" characters all over the footnotes? — Cheers, JackLee –talk– 08:17, 30 August 2011 (UTC)

"Readers unimpressed by FAs" – what a sensational headline. Number of edits, unique editors, and editor names having an influence on the perceived quality of an article??? Without a proper assessment as opposed to some mere babbling the outcome of this study seems pretty worthless. Nageh (talk) 13:09, 30 August 2011 (UTC)

- "babbling", "worthless" - did you read the study? If not, is this a valid method for assessing information quality?

- I believe the subtitle aptly (if briefly) summarizes what the authors highlighted as one of the study's "novel findings" in the "Summary and conclusion" section ("The perceptions of our users regarding quality did not always coincide with the perceptions of Wikipedia editors, since in fewer than half of the cases the featured article was chosen as best. This finding warrants further exploration. Previous studies often relied implicitly on the high quality of featured articles.").

- Academic research generally strives to describe the world as it is, not as it should be. Of course one can be of the opinion that the assessments of the study participants were not all well-founded, or disagree with their criteria. ("One of the participants who chose the featured article as the lowest quality article complained about the lack of images in the article: ‘Provide some images, this is an abstract concept, how can you do without visualization?’, and the others found the article too high level and detailed.") But it is nevertheless worth knowing that many readers think this way. And actually, much of the comments on WP:FAC are about formal criteria and from non-experts, too (rather than, say, systematic fact-checking).

- Lastly, keep in mind that the study concerned the Hebrew Wikipedia and appears to have been conducted in 2008 or earlier.

- Regards, Tbayer (WMF) (talk) 11:03, 31 August 2011 (UTC)

- Note the word "seems": I did not say that the study "is" worthless. I was basing my comment on the information available as the full paper is paywalled (I would have read it otherwise). In this regard I do think my complaint was valid. It may very well be that there is more substantive reviewer feedback available in the paper (as you indicate) but if editor names and the like have an influence on the quality assessment I have a right to complain. Moreover, considering the setup of the test, if you go out on the street with an exceptional source and another one that is shorter, more easily comprehensible with colorful pictures aso. but of inferior quality people will likely prefer the latter because they don't know better. Too much detail? Heck, what a complaint is that? That's the complaint of a pupil who is forced to study something. There really has to be objective criteria for quality assessment: informative, accessible, comprehensive, balanced are suitable criteria. Having said that, I do not think that the outcome of this study is completely worthless but simply taking the outcome to state that "readers are unimpressed by FAs" is misleading and, with all respect, sensational IMHO. That's like going out on the street with a source of exceptionally high quality and expecting Joe Average to be impressed by it. Anyway, this was my thought on this and I think it was valid to present. So is yours. Thanks for the feedback! Nageh (talk) 11:46, 31 August 2011 (UTC)

- On second thought, concerning the headline, I guess it's fine when read in the context. I complained about it because I have often seen narrow results being interpreted as general facts and wronggoings on Wikipedia, published as such in the general press. Nageh (talk) 12:20, 31 August 2011 (UTC)

- I fear there might have been a misunderstanding about the setup of the study (and I apologize if the wording of my review contributed to it): The participants were not instructed by the researchers to use particular criteria (like, say, the number of edits in a revision history, or the nicknames appearing there). Rather, these are criteria that participants came up with by themselves. So if anything, you should direct your criticism at the students who participated in the study (and in that case, it would have merit), not at the study itself, which made considerable effort to objectively record the student's assessments and the criteria they used. (BTW, another superficial criterion named frequently by the students was the presence - not the selection! - of external links.)

- if you go out on the street ... - from the paper's description of the methodology, it seems that the selection of articles was sufficiently randomized to avoid such biases (i.e. not consistently pitting a high-quality text vs. an article of lower quality but higher visual appeal).

- By the way, it is worth mentioning the Article feedback tool recently rolled out to the entire English Wikipedia, as another effort to learn more about the quality assessments by non-editors. There, preliminary research found that

- "... there appears to be some alignment between reader assessments and community assessments of an article’s quality. Of the 25 most highly rated articles in the sample (average rating of 4.54), 10 are either Featured Articles or Good Articles (3 Featured and 7 Good). Of the 25 most poorly rated articles (average rating of 3.29), there is only one Good Article and no Featured Articles."

- Regards, Tbayer (WMF) (talk) 13:06, 31 August 2011 (UTC)

- I understand. Well, the article feedback tool would not be a blind study (so to say, readers can recognize an article's featured status), and there are other problems with it, but certainly there is useful information that can be drawn from it (and may allow for a comparative study of FA quality among the various Wikipedias). Cheers, Nageh (talk) 13:20, 31 August 2011 (UTC)

Another excellent review article. Please keep them coming! --Piotr Konieczny aka Prokonsul Piotrus| talk to me 16:50, 30 August 2011 (UTC)