Wikipedia:Reference desk/Archives/Mathematics/2013 February 19

| Welcome to the Wikipedia Mathematics Reference Desk Archives |
|---|
| The page you are currently viewing is an archive page. While you can leave answers for any questions shown below, please ask new questions on one of the current reference desk pages. |

February 19

Why are tensors such a tough topic? No one explains tensors in simple language. I tried to read the Wikipedia article Tensor, but it is also very tough. Could someone help me understand tensors in simple language? 27.62.227.241 (talk) 14:11, 19 February 2013 (UTC)

- Tensors are geometric objects. Triangles, rectangles, and parallelograms are also geometric objects. 140.254.226.230 (talk) 14:34, 19 February 2013 (UTC)

- I don't agree that tensors are geometric objects in the way that triangles and rectangles are. That seems to suggest that tensors are "objects" in a naive sense, which is not true. Rather tensors express geometrical information. Sławomir Biały (talk) 16:37, 19 February 2013 (UTC)

- As the picture in the article Tensor shows, tensors look like the vectors used in physics to describe magnitude and direction. But they are not just vectors. Tensors would probably be the geometrical objects that may use vectors to specify some linear relationship between two objects. They may be vectors themselves. Perhaps the force vectors in physics would count as tensors. If that is the case, then see Kinematics. 140.254.226.230 (talk) 16:55, 19 February 2013 (UTC)

- Right. Vectors are a special case of tensors. The illustration there shows, more generally, that certain kinds of tensors express information that requires specifying one or more directions in a space. Strain, for instance, is an example. Sławomir Biały (talk) 17:25, 19 February 2013 (UTC)
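The strain example can be made concrete with a small sketch, assuming two-dimensional infinitesimal (small) strain, where the strain tensor is the symmetric part of the displacement gradient. The gradient values and the helper names `strain_from_gradient` and `normal_strain` are hypothetical, chosen only for illustration; the point is that one tensor returns a different number for each direction you feed it.

```python
# Sketch: 2D infinitesimal strain tensor (illustrative numbers, hypothetical helpers).
import math


def strain_from_gradient(grad_u):
    """Infinitesimal strain: eps_ij = (G_ij + G_ji) / 2, the symmetric part of G."""
    n = len(grad_u)
    return [[0.5 * (grad_u[i][j] + grad_u[j][i]) for j in range(n)] for i in range(n)]


def normal_strain(eps, direction):
    """Relative stretch along a direction: n . eps . n, with n normalized to unit length."""
    length = math.sqrt(sum(c * c for c in direction))
    unit = [c / length for c in direction]
    n = len(unit)
    return sum(unit[i] * eps[i][j] * unit[j] for i in range(n) for j in range(n))


# Hypothetical displacement gradient G_ij = d u_i / d x_j for a slightly deformed body.
grad_u = [[0.01, 0.02],
          [0.00, -0.005]]
eps = strain_from_gradient(grad_u)

for label, d in [("x", [1, 0]), ("y", [0, 1]), ("diagonal", [1, 1])]:
    print(label, round(normal_strain(eps, d), 6))
```

With these made-up numbers the body stretches by 0.01 along x, shrinks by 0.005 along y, and stretches by 0.0125 along the diagonal: one tensor, three directions, three answers.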

- A similar question was asked recently on the Science Desk, and got some good answers. AndrewWTaylor (talk) 18:22, 19 February 2013 (UTC)

- Tensors can be confusing because there are several (equivalent) ways of defining them. Here is one way of looking at a tensor: It is a function that takes a number of vectors as arguments and produces a number as output. This function is required to be linear in each of its arguments. Such a function is called multi-linear. To figure out the components of the tensor one takes combinations of basis vectors as arguments and computes the output. This description is basis dependent, because if one uses another basis for the vectors, one will, in general, get a different multi-linear function describing the same tensor! YohanN7 (talk) 21:11, 19 February 2013 (UTC)

- Well, no, the multi-linear function is the same. It's only its representation in terms of what it does to (tuples of) basis vectors that's basis-dependent. --Trovatore (talk) 00:10, 20 February 2013 (UTC)

- I think we mean the same thing. Well, I certainly hope we mean the same thing. In case we don't, then I am lost. If I change my formulation to "..., get a different representation (numerically) of the multi-linear function (it being the tensor)...", then we refer to the same correct concept? Your formulation is in any case better. YohanN7 (talk) 16:49, 20 February 2013 (UTC)
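The point YohanN7 and Trovatore converge on (one multilinear function, different component arrays in different bases) can be checked numerically. This is a minimal sketch, assuming a particular bilinear function `B` on R^2 and an arbitrarily chosen second basis `other`; both are hypothetical, as are the helper names.

```python
# Sketch: one bilinear function, two component arrays (B and `other` are hypothetical).


def B(u, v):
    """A fixed bilinear function on R^2: linear in u for fixed v, and vice versa."""
    return u[0] * v[0] + 2 * u[0] * v[1] + 3 * u[1] * v[1]


def components(form, basis):
    """Components T_ij = form(b_i, b_j): feed in every pair of basis vectors."""
    return [[form(bi, bj) for bj in basis] for bi in basis]


def evaluate(comps, a, b):
    """Rebuild the value from components and coordinates: sum_ij T_ij a_i b_j."""
    n = len(comps)
    return sum(comps[i][j] * a[i] * b[j] for i in range(n) for j in range(n))


def combine(basis, coords):
    """Turn coordinates in a basis back into the actual vector sum_i coords_i * b_i."""
    dim = len(basis[0])
    return [sum(c * b[k] for c, b in zip(coords, basis)) for k in range(dim)]


standard = [[1, 0], [0, 1]]
other = [[1, 1], [0, 2]]  # an arbitrary second basis

T_std = components(B, standard)  # [[1, 2], [0, 3]]
T_oth = components(B, other)     # [[6, 10], [6, 12]]: different numbers...

a, b = [1, 1], [2, -1]                       # coordinates with respect to `other`
u, v = combine(other, a), combine(other, b)  # the actual vectors [1, 3] and [2, 0]

# ...but the same function: all three agree.
print(B(u, v), evaluate(T_std, u, v), evaluate(T_oth, a, b))
```

All three printed values are 2. Switching to yet another basis would change the component array again, but never `B` itself.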

- I found the Dan Fleisch video mentioned in the previous post illuminating. It was mostly a rehash ("Tensors are N-dimensional vectors"), but the gem at the end for me was the realization that a tensor is almost always accompanied by a (potentially implicit) basis vector set, and that the basis vector set of a tensor can combine basis vectors from potentially distinct vector spaces. The prevalent use of the implicit x/y/z basis vectors for most usage of (1-dimensional) vectors can make it difficult to realize that there can be more complex sets of basis vectors that accompany a tensor. -- 205.175.124.30 (talk) 22:38, 19 February 2013 (UTC)
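The remark about combining basis vectors from distinct vector spaces can be illustrated with a short sketch, assuming V = R^2 and W = R^3 with their standard bases (dimensions chosen arbitrarily). Each pair (e_i, f_j) contributes one basis element e_i ⊗ f_j of V ⊗ W, so that space is 6-dimensional. The helper names are hypothetical.

```python
# Sketch: the tensor-product basis of V ⊗ W for V = R^2, W = R^3 (illustrative choice).


def outer(p, q):
    """Components of the simple tensor p ⊗ q: (p ⊗ q)_ij = p_i * q_j."""
    return [[pi * qj for qj in q] for pi in p]


def std_basis(n):
    """Standard basis of R^n as a list of coordinate lists."""
    return [[1 if i == k else 0 for k in range(n)] for i in range(n)]


def expand(coeffs, basis, rows, cols):
    """Form sum_ab coeffs[a][b] * basis[(a, b)] entry by entry."""
    out = [[0] * cols for _ in range(rows)]
    for (a, b), element in basis.items():
        for i in range(rows):
            for j in range(cols):
                out[i][j] += coeffs[a][b] * element[i][j]
    return out


V_basis = std_basis(2)  # e_0, e_1
W_basis = std_basis(3)  # f_0, f_1, f_2

# One basis tensor e_i ⊗ f_j for every pair: 2 * 3 = 6 in total.
product_basis = {(i, j): outer(e, f)
                 for i, e in enumerate(V_basis)
                 for j, f in enumerate(W_basis)}

p, q = [2, -1], [1, 0, 4]
t = outer(p, q)  # a 2 x 3 array, one index per space

print(len(product_basis), t == expand(t, product_basis, 2, 3))
```

The two indices of `t` range over different sets (2 values and 3 values), one per underlying space, which is the "basis vectors from distinct vector spaces" point in miniature.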

- Part of the problem is that there really are several different things that are called "tensors". There are completely abstract tensors from abstract algebra, from the context of abstract vector spaces. These are, really, not very complicated at all — the hardest thing to get your head around is just the abstraction itself.

- More concrete tensors, ones where you can relate them to some more physics-like thing, are probably easier to internalize (because you can think about concrete examples), but also involve a lot more machinery from mathematical analysis. Most of the time these are really tensor fields, where in some sense the tensor varies smoothly from point to point, and it doesn't make much sense to think about them without taking this differentiable structure into account. So some of what you think is your difficulty with tensors may actually be difficulty with analysis. You kind of have to do the analysis and the linear algebra at the same time, and possibly that's the hardest part of all. --Trovatore (talk) 09:17, 20 February 2013 (UTC)

- I don't think the idea of a tensor varying from point to point is hard to understand. In my experience, students have a lot more trouble understanding tensor products. Usually in engineering and physics courses, tensors are introduced via their transformation law, which has some advantages. Sławomir Biały (talk) 12:13, 20 February 2013 (UTC)

- It's not the idea of varying from place to place that's hard to understand, per se. It's the fact that it brings in a second level of structure that has to be dealt with at the same time. The transformation-law approach is part of what I have in mind here: you have to remember it, you have to do calculus to use it, and it appears arbitrary and unmotivated. --Trovatore (talk) 19:35, 20 February 2013 (UTC)
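As an illustration of how the transformation-law approach brings in calculus, here is a sketch, assuming the flat Euclidean plane, that transforms the metric tensor (the identity in Cartesian coordinates) to polar coordinates using the Jacobian of (x, y) = (r cos θ, r sin θ). The resulting components, [[1, 0], [0, r²]], depend on the point, which is the "tensor field" aspect. The function names are hypothetical.

```python
# Sketch: covariant transformation law for a (0,2) tensor field (hypothetical helpers).
import math


def jacobian_polar(r, theta):
    """J[i][a] = d x_i / d q_a for (x, y) = (r cos θ, r sin θ), q = (r, θ)."""
    return [[math.cos(theta), -r * math.sin(theta)],
            [math.sin(theta), r * math.cos(theta)]]


def transform_covariant(J, g):
    """g'_ab = sum_ij J[i][a] * J[j][b] * g[i][j], i.e. J^T g J."""
    m, n = len(J), len(J[0])
    return [[sum(J[i][a] * J[j][b] * g[i][j] for i in range(m) for j in range(m))
             for b in range(n)]
            for a in range(n)]


g_cartesian = [[1, 0], [0, 1]]  # Euclidean metric in (x, y): the same at every point

for r, theta in [(1.0, 0.3), (2.0, 0.7), (3.0, 2.0)]:
    g_polar = transform_covariant(jacobian_polar(r, theta), g_cartesian)
    # Up to rounding this is [[1, 0], [0, r**2]]: the components vary from point to point.
    # Adding 0.0 turns a rounded -0.0 into 0.0 for tidier printing.
    print(r, [[round(x, 9) + 0.0 for x in row] for row in g_polar])
```

Here the Cartesian components are constant, yet the polar components of the same tensor field change with r; getting from one to the other required differentiating the coordinate change.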

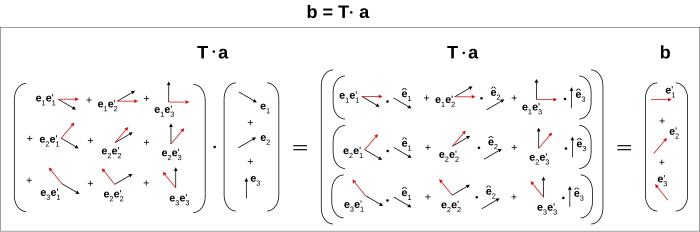

- If it helps the IP above; you can effortlessly represent a vector as an arrow in a space, but cannot, at all, represent a tensor geometrically (which is extremely frustrating!)... Well, multivectors and alternating forms (as exterior products of vectors or one-forms) can be depicted but you can't draw a tensor product of vectors... That's one reason you can't consider tensors to be "geometric objects" in a niave way... It might be heuristically correct to depict dyadic tensors as "arrows stuck together":

- I don't think the idea of a tensor varying from point to point is hard to understand. In my experience, students have a lot more trouble understanding tensor products. Usually in engineering and physics courses, tensors are introduced via their transformation law, which has some advantages. Sławomir Biały (talk) 12:13, 20 February 2013 (UTC)

- but don't trust this basic, heuristic illustration... M∧Ŝc2ħεИτlk 16:12, 20 February 2013 (UTC)
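One way to see why "arrows stuck together" is only a heuristic: the picture fits a simple (decomposable) tensor p ⊗ q, but a general rank-two tensor is a sum of such dyads and need not be a single one. This sketch assumes two dimensions, where a 2×2 component array equals a single dyad exactly when its determinant is zero (matrix rank at most 1). The helper names are hypothetical.

```python
# Sketch: simple tensors p ⊗ q versus sums of them, in 2D (hypothetical helpers).


def outer(p, q):
    """Components of the dyad p ⊗ q."""
    return [[pi * qj for qj in q] for pi in p]


def add(s, t):
    """Componentwise sum of two 2x2 arrays."""
    return [[a + b for a, b in zip(row_s, row_t)] for row_s, row_t in zip(s, t)]


def is_single_dyad_2d(t):
    """A 2x2 array equals p ⊗ q for some p, q exactly when its determinant is 0."""
    return t[0][0] * t[1][1] - t[0][1] * t[1][0] == 0


dyad = outer([1, 2], [3, -1])                                 # [[3, -1], [6, -2]]
identity = add(outer([1, 0], [1, 0]), outer([0, 1], [0, 1]))  # e0 ⊗ e0 + e1 ⊗ e1

print(is_single_dyad_2d(dyad), is_single_dyad_2d(identity))  # True False
```

So the identity tensor, about as simple as tensors get, has no single "two arrows stuck together" picture; at best it is a superposition of two such pictures.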

- One more thing... this may just be a personal interpretation, but maybe each index of a tensor corresponds to a direction?... It makes sense. It's been mentioned that tensors express a relation between directions in space, but no mention of any index-direction correspondence. Does that add any geometrical interpretation? M∧Ŝc2ħεИτlk 16:52, 21 February 2013 (UTC)

- Well, if you have a basis for your vector space consisting of, say, a unit vector pointing right, one pointing away from you, and one pointing up, then yes, of course the indices of the matrix representation of a rank-two tensor will correspond to things like the upwards effect of an input vector pointing right. That's kind of obvious (and also you basically said it in your previous post), so maybe you meant something else? --Trovatore (talk) 22:06, 23 February 2013 (UTC)
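Trovatore's reading of the matrix entries can be spelled out in code. This sketch assumes a basis labelled right, away, and up, and a made-up linear map `T` (a rank-two tensor of type (1,1)) in which an input pointing right also produces some upward output; the entry for (up, right) is then just the "up" component of T applied to the "right" unit vector. All names and numbers are hypothetical.

```python
# Sketch: reading matrix entries of a rank-two tensor as "output along i for input along j".
LABELS = ["right", "away", "up"]  # hypothetical labels for the three basis vectors

# Hypothetical linear map; row = output direction, column = input direction.
T = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.5, 0.0, 1.0]]


def apply(T, v):
    """Matrix-vector product: the output vector T(v) in the same basis."""
    return [sum(T[i][j] * v[j] for j in range(len(v))) for i in range(len(T))]


def unit(label):
    """The basis vector with the given label."""
    return [1.0 if name == label else 0.0 for name in LABELS]


def effect(T, output_label, input_label):
    """The `output_label` component of T applied to the `input_label` unit vector."""
    return apply(T, unit(input_label))[LABELS.index(output_label)]


print(effect(T, "up", "right"), T[LABELS.index("up")][LABELS.index("right")])  # 0.5 0.5
```

Each index thus names a direction only relative to the chosen basis; rotate the basis and the same map gets a different table of "effects".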

- All I'm trying to suggest is that a possible geometric depiction of a tensor with n indices corresponds to n directions associated with the tensor, with those n directions drawn as arrows combined together as illustrated above (the dyadic illustration is obviously just the special case n = 2). This is pictorially suggestive that tensors are multi-directional quantities, drawn as a multi-arrow illustration, with vectors as the special case of one arrow.

- In other words, is it correct to draw a tensor product as arrows stuck together? Can vectors p, q, r be tensor-multiplied to obtain p ⊗ q, visualized as p and q joined, and similarly p ⊗ q ⊗ r? I doubt it; even though it makes sense, it still doesn't somehow, and there are no references in existence to back up this interpretation...

- Anyway I have been told (in a similar way to what yourself and Sławomir Biały have said) that the best way to think of tensors is as "machines" which relate vectors to vectors (or a scalar), i.e. expressing geometric information as multilinear functions. Thanks, M∧Ŝc2ħεИτlk 11:10, 25 February 2013 (UTC)
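The "machine" view can be sketched for a simple tensor p ⊗ q ⊗ r. This assumes Euclidean R^3, where the dot product identifies each vector with the linear function it defines; with that identification, p ⊗ q ⊗ r eats three vectors and returns (p·u)(q·v)(r·w), and feeding only two slots leaves a vector. The names `simple_tensor` and `feed_first_two` are hypothetical.

```python
# Sketch: p ⊗ q ⊗ r as a "machine" on Euclidean R^3 (dot product used to pair slots).


def dot(a, b):
    """Euclidean dot product."""
    return sum(x * y for x, y in zip(a, b))


def simple_tensor(*factors):
    """Return the multilinear function (u, v, ...) -> (f1·u)(f2·v)... for f1 ⊗ f2 ⊗ ..."""
    def machine(*vectors):
        if len(vectors) != len(factors):
            raise ValueError("need exactly one input vector per slot")
        result = 1
        for f, v in zip(factors, vectors):
            result *= dot(f, v)
        return result
    return machine


def feed_first_two(p, q, r, u, v):
    """Fill two slots of p ⊗ q ⊗ r and leave the last open: the result is a vector."""
    scale = dot(p, u) * dot(q, v)
    return [scale * c for c in r]


p, q, r = [1, 2, 0], [0, 1, 1], [3, 0, -1]
pqr = simple_tensor(p, q, r)

u, v, w = [1, 0, 0], [0, 1, 0], [1, 1, 1]
print(pqr(u, v, w))                   # 2 (a scalar)
print(feed_first_two(p, q, r, u, v))  # [3, 0, -1] (a vector); dotting it with w gives 2 again
```

A general tensor of this type is a sum of such simple machines, so the "eat vectors, return a number or a vector" behaviour carries over by linearity, even though only the simple ones match the "arrows stuck together" picture.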