User:Xionbox/Algorithms

Here is a summary of the algorithms and data structures to know.

Analysis

Amortized analysis

Amortized analysis is a method of analyzing algorithms that considers the entire sequence of operations of the program. By looking at all of the operations, it establishes a worst-case bound on the total cost of a sequence of operations, and hence on the average cost per operation, irrespective of the inputs. At the heart of the method is the idea that certain operations may be extremely costly in resources, yet they cannot occur often enough to weigh down the entire program. In the long run the less costly operations far outnumber the costly ones, "paying back" the program over a number of iterations. It is particularly useful because it still guarantees worst-case performance, yet it accounts for the whole sequence of operations rather than pricing every operation at its individual worst case.
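The classic example of amortized analysis is a growable array. The sketch below assumes an array that doubles its capacity when full. The doubling policy and the `copies` counter are illustrative choices and are not taken from the text. A single resize is costly, but the total copying over n appends stays below 2n, so each append costs O(1) amortized.

```python
class DynamicArray:
    """Append-only array that doubles its capacity when full (illustrative sketch)."""

    def __init__(self):
        self.capacity = 1
        self.size = 0
        self.slots = [None] * self.capacity
        self.copies = 0  # total element copies performed by all resizes

    def append(self, value):
        if self.size == self.capacity:
            # Costly, rare step: allocate twice the space and copy every element.
            self.capacity *= 2
            new_slots = [None] * self.capacity
            for i in range(self.size):
                new_slots[i] = self.slots[i]
                self.copies += 1
            self.slots = new_slots
        # Cheap, common step: write into the next free slot.
        self.slots[self.size] = value
        self.size += 1


arr = DynamicArray()
n = 1000
for i in range(n):
    arr.append(i)
# Resizes copy 1 + 2 + 4 + ... elements, which sums to less than 2n in total.
print(arr.copies, arr.copies / n)
```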

Big O

Big-O notation (Landau notation) describes the limiting behavior of a function when the argument tends towards a particular value or infinity, usually in terms of simpler functions. Big-O notation characterizes functions according to their growth rates: different functions with the same growth rate may be represented using the same O notation.
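For reference, here is the usual formal definition for the case where the argument tends to infinity, followed by a small worked instance. The polynomial is an arbitrary example.

```latex
\begin{aligned}
f(n) = O\big(g(n)\big) &\iff \exists\, c > 0,\ \exists\, n_0 \ \text{such that}\ |f(n)| \le c\,|g(n)| \ \text{for all } n \ge n_0, \\
3n^2 + 5n + 2 &\le 3n^2 + 5n^2 + 2n^2 = 10n^2 \quad (n \ge 1) \;\Longrightarrow\; 3n^2 + 5n + 2 = O(n^2) \ \text{with } c = 10,\ n_0 = 1.
\end{aligned}
```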

| Notation | Name | Example |
|---|---|---|
| $O(1)$ | constant | Determining if a number is even or odd; using a constant-size lookup table or hash table |
| $O(\log \log n)$ | double logarithmic | Finding a key value in an array sorted on the keys with interpolation search (average case, uniformly distributed keys) |
| $O(\log n)$ | logarithmic | Finding an item in a sorted array with a binary search or in a balanced search tree, as well as all operations in a binomial heap |
| $O(n^c)$, $0 < c < 1$ | fractional power | Searching in a k-d tree |
| $O(n)$ | linear | Finding an item in an unsorted list, a malformed tree (worst case), or an unsorted array; adding two n-bit integers by ripple carry |
| $O(n \log n)$ | linearithmic, loglinear, or quasilinear | Performing a fast Fourier transform; heapsort, quicksort (best and average case), or merge sort |
| $O(n^2)$ | quadratic | Multiplying two n-digit numbers by a simple algorithm; bubble sort (worst case or naive implementation), Shell sort, quicksort (worst case), selection sort or insertion sort |
| $O(n^c)$, $c > 1$ | polynomial or algebraic | Tree-adjoining grammar parsing; maximum matching for bipartite graphs |
| $L_n[\alpha, c] = e^{(c + o(1))(\ln n)^{\alpha}(\ln \ln n)^{1-\alpha}}$, $0 < \alpha < 1$ | L-notation or sub-exponential | Factoring a number using the quadratic sieve or number field sieve |
| $O(c^n)$, $c > 1$ | exponential | Finding the (exact) solution to the traveling salesman problem using dynamic programming; determining if two logical statements are equivalent using brute-force search |
| $O(n!)$ | factorial | Solving the traveling salesman problem via brute-force search; generating all unrestricted permutations of a poset; finding the determinant with expansion by minors |

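The statement $f(n) = O(n!)$ is sometimes weakened to $f(n) = O(n^n)$ to derive simpler formulas for asymptotic complexity. For any $k > 0$ and $c > 0$, $O(n^c (\log n)^k)$ is a subset of $O(n^{c+\varepsilon})$ for any $\varepsilon > 0$, so it may be considered as a polynomial with some bigger order.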

NP Complexity


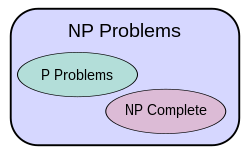

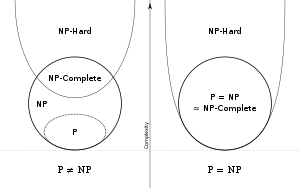

NP is one of the most fundamental complexity classes. The abbreviation NP refers to nondeterministic polynomial time.

Intuitively, NP is the set of all decision problems for which the instances where the answer is "yes" have efficiently verifiable proofs of the fact that the answer is indeed "yes." More precisely, these proofs have to be verifiable in polynomial time by a deterministic Turing machine. In an equivalent formal definition, NP is the set of decision problems where the "yes"-instances can be recognized in polynomial time by a non-deterministic Turing machine. The equivalence of the two definitions follows from the fact that an algorithm on such a non-deterministic machine consists of two phases, the first of which consists of a guess about the solution which is generated in a non-deterministic way, while the second consists of a deterministic algorithm which verifies or rejects the guess as a valid solution to the problem.
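To make "efficiently verifiable proofs" concrete, here is a sketch of a deterministic polynomial-time verifier. It uses subset sum as the example problem: given integers and a target, does some subset add up to the target? Subset sum is a well-known NP problem, chosen here only for illustration. The certificate format (a list of distinct indices) is likewise an assumption.

```python
from typing import Sequence


def verify_subset_sum(numbers: Sequence[int], target: int, certificate: Sequence[int]) -> bool:
    """Deterministically check a proposed proof that the answer is "yes".

    The certificate is a list of distinct indices into `numbers`. It proves a
    "yes" instance if the selected numbers sum to `target`.
    """
    seen = set()
    for i in certificate:
        # Reject indices that are out of range or repeated.
        if not 0 <= i < len(numbers) or i in seen:
            return False
        seen.add(i)
    return sum(numbers[i] for i in certificate) == target


nums = [3, 34, 4, 12, 5, 2]
print(verify_subset_sum(nums, 9, [0, 2, 5]))  # checks 3 + 4 + 2 against 9
```

Checking a certificate takes time linear in its length. Finding one is the part for which no polynomial-time algorithm is known.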

The complexity class P is contained in NP, but NP contains many important problems, the hardest of which are called NP-complete problems, for which no polynomial-time algorithms are known. The most important open question in complexity theory, the P = NP problem, asks whether such algorithms actually exist for NP-complete problems and, by corollary, for all NP problems.

NP complete


The complexity class NP-complete (abbreviated NP-C or NPC) is a class of decision problems. A decision problem L is NP-complete if it meets two conditions. First, it is in NP, meaning that any given solution to it can be verified in polynomial time. Second, it is NP-hard, meaning that any NP problem can be converted into L by a polynomial-time transformation of its inputs.

Although any given solution to such a problem can be verified quickly, there is no known efficient way to locate a solution in the first place; indeed, the most notable characteristic of NP-complete problems is that no fast solution to them is known. That is, the time required to solve the problem using any currently known algorithm increases very quickly as the size of the problem grows. As a result, the time required to solve even moderately large versions of many of these problems easily reaches into the billions or trillions of years, using any amount of computing power available today. As a consequence, determining whether or not it is possible to solve these problems quickly, called the P versus NP problem, is one of the principal unsolved problems in computer science today.

While a method for computing the solutions to NP-complete problems using a reasonable amount of time remains undiscovered, computer scientists and programmers still frequently encounter NP-complete problems. NP-complete problems are often addressed by using approximation algorithms.
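As one example of the approximation approach, here is a sketch of the textbook 2-approximation for minimum vertex cover. Vertex cover is used here only as an illustration, since the text names no particular problem. The algorithm runs in linear time and returns a cover at most twice the size of an optimal one.

```python
def approx_vertex_cover(edges):
    """2-approximation for minimum vertex cover.

    Take any edge that is not yet covered and add both endpoints. The edges
    taken this way share no endpoints (they form a matching), and any cover
    needs at least one endpoint of each, so the result is at most twice optimal.
    """
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.add(u)
            cover.add(v)
    return cover


edges = [(1, 2), (1, 3), (1, 4), (1, 5)]  # a star; the optimal cover is {1}
cover = approx_vertex_cover(edges)
print(sorted(cover))
```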

P complexity

In computational complexity theory, P, also known as PTIME or DTIME($n^{O(1)}$), is one of the most fundamental complexity classes. It contains all decision problems which can be solved by a deterministic Turing machine using a polynomial amount of computation time, or polynomial time.

P can also be viewed as a uniform family of Boolean circuits. A language $L$ is in P if and only if there exists a polynomial-time uniform family of Boolean circuits $\{C_n : n \in \mathbb{N}\}$, such that

- For all $n \in \mathbb{N}$, $C_n$ takes $n$ bits as input and outputs 1 bit

- For all $x$ in $L$, $C_{|x|}(x) = 1$

- For all $x$ not in $L$, $C_{|x|}(x) = 0$

The circuit definition can be weakened to use only a logspace uniform family without changing the complexity class P.

P is known to contain many natural problems, including the decision versions of linear programming, calculating the greatest common divisor, and finding a maximum matching. In 2002, it was shown that the problem of determining if a number is prime is in P. The related class of function problems is FP.

Several natural problems are complete for P, including st-connectivity (or reachability) on alternating graphs. The article on P-complete problems lists further relevant problems in P.

Turing machine

A Turing machine is a theoretical device that manipulates symbols on a strip of tape according to a table of rules. Despite its simplicity, a Turing machine can be adapted to simulate the logic of any computer algorithm, and is particularly useful in explaining the functions of a CPU inside a computer. A Turing machine that is able to simulate any other Turing machine is called a universal Turing machine (UTM, or simply a universal machine).
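A Turing machine is easy to simulate once its table of rules is written down. The sketch below is a hypothetical example, not a machine from the text. Its rule table maps a state and the symbol read to a symbol to write, a head move, and a next state. The rules increment a binary number, and a small simulator runs them on an unbounded tape.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical example machine: adds 1 to a binary number written on the tape.
# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),    # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),    # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),      # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),    # ran off the left end: new leading 1
}


def run(rules, tape_input, state="right", max_steps=10_000):
    # Sparse, unbounded tape: cells that were never written read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head = 0
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    cells = (tape[i] for i in range(min(tape), max(tape) + 1))
    return "".join(cells).strip(BLANK)


print(run(INCREMENT, "1011"))
```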

A limitation of Turing machines is that they do not model the strengths of a particular arrangement well. For instance, modern stored-program computers are actually instances of a more specific form of abstract machine known as the random access stored program machine or RASP machine model. Like the universal Turing machine, the RASP stores its "program" in "memory" external to its finite-state machine's "instructions". Unlike the universal Turing machine, the RASP has an infinite number of distinguishable, numbered but unbounded "registers" (memory "cells" that can contain any integer). The RASP's finite-state machine is equipped with the capability for indirect addressing (e.g. the contents of one register can be used as an address to specify another register); thus the RASP's "program" can address any register in the register-sequence. The upshot of this distinction is that there are computational optimizations that can be performed based on the memory indices, which are not possible in a general Turing machine. Thus, when Turing machines are used as the basis for bounding running times, a 'false lower bound' can be proven on certain algorithms' running times (due to the false simplifying assumption of a Turing machine). An example of this is binary search, an algorithm that can be shown to perform more quickly when using the RASP model of computation rather than the Turing machine model.

Locks

Deadlock

A deadlock is a situation in which two or more competing actions are each waiting for the other to finish, and thus neither ever does.

In computer science, Coffman deadlock refers to a specific condition when two or more processes are each waiting for the other to release a resource, or more than two processes are waiting for resources in a circular chain. Deadlock is a common problem in multiprocessing where many processes share a specific type of mutually exclusive resource known as a software lock or soft lock. Computers intended for the time-sharing and/or real-time markets are often equipped with a hardware lock (or hard lock) which guarantees exclusive access to processes, forcing serialized access. Deadlocks are particularly troubling because there is no general solution to avoid (soft) deadlocks.

This situation may be likened to two people who are drawing diagrams, with only one pencil and one ruler between them. Suppose one person takes the pencil and the other takes the ruler. If the person with the pencil then needs the ruler while the person with the ruler needs the pencil, neither request can be satisfied, so a deadlock occurs.
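There is no general solution, but one common prevention strategy applies when the locks a task needs are known up front. If every participant acquires shared resources in one fixed global order, a circular wait can never form, so the pencil-and-ruler deadlock disappears. This lock-ordering technique is swapped in here for illustration, using Python's `threading.Lock`. The names `pencil`, `ruler`, `LOCK_ORDER` and `draw` are illustrative.

```python
import threading

pencil = threading.Lock()
ruler = threading.Lock()

# Fixed global acquisition order: pencil first, then ruler. Because every thread
# follows it, no thread can hold the ruler while waiting for the pencil, so the
# circular wait that a deadlock requires cannot form.
LOCK_ORDER = [pencil, ruler]

finished = []


def draw(person, tools):
    ordered = sorted(tools, key=LOCK_ORDER.index)  # normalize the request order
    for lock in ordered:
        lock.acquire()
    try:
        finished.append(person)  # both tools are held: do the drawing
    finally:
        for lock in reversed(ordered):
            lock.release()


for _ in range(100):
    # The two people ask for the tools in opposite orders.
    people = [
        threading.Thread(target=draw, args=("alice", [pencil, ruler])),
        threading.Thread(target=draw, args=("bob", [ruler, pencil])),
    ]
    for t in people:
        t.start()
    for t in people:
        t.join()

print(len(finished))
```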

The telecommunications description of deadlock is weaker than Coffman deadlock because processes can wait for messages instead of resources. A deadlock can be the result of corrupted messages or signals rather than merely waiting for resources. For example, a dataflow element that has been directed to receive input on the wrong link will never proceed even though that link is not involved in a Coffman cycle.

Livelock

A livelock is similar to a deadlock, except that the states of the processes involved in the livelock constantly change with regard to one another, none progressing.

A real-world example of livelock occurs when two people meet in a narrow corridor, and each tries to be polite by moving aside to let the other pass, but they end up swaying from side to side without making any progress because they both repeatedly move the same way at the same time.

Livelock is a risk with some algorithms that detect and recover from deadlock. If more than one process takes action, the deadlock detection algorithm can be repeatedly triggered. This can be avoided by ensuring that only one process (chosen randomly or by priority) takes action.

To avoid deadlocks, use lock hierarchies in the code you control. Assign each shared resource a level that corresponds to its architectural layer in your application, and follow two rules. First, while holding a resource at a higher level, acquire only resources at lower levels. Second, acquire multiple resources at the same level all at once.
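The first rule can be checked at run time. Below is a minimal sketch of a hypothetical `HierarchicalLock` wrapper; it is not a standard library class. It records, per thread, the levels of the locks currently held, and it refuses to acquire a lock whose level is not strictly lower. The second rule (acquiring same-level resources all at once) is not modeled here, and the level numbers in the usage example are arbitrary.

```python
import threading


class HierarchicalLock:
    """Hypothetical lock tagged with a level; enforces "only acquire lower levels"."""

    _held = threading.local()  # per-thread list of levels currently held

    def __init__(self, level):
        self.level = level
        self._lock = threading.Lock()

    def _levels(self):
        if not hasattr(self._held, "levels"):
            self._held.levels = []
        return self._held.levels

    def acquire(self):
        levels = self._levels()
        # Held levels are strictly decreasing, so the last one is the lowest.
        if levels and self.level >= levels[-1]:
            raise RuntimeError(
                f"lock hierarchy violation: level {self.level} while holding {levels[-1]}")
        self._lock.acquire()
        levels.append(self.level)

    def release(self):
        self._levels().remove(self.level)
        self._lock.release()

    def __enter__(self):
        self.acquire()
        return self

    def __exit__(self, *exc_info):
        self.release()


app_layer = HierarchicalLock(level=200)     # hypothetical higher layer
storage_layer = HierarchicalLock(level=100)  # hypothetical lower layer

with app_layer:
    with storage_layer:  # allowed: higher level, then lower level
        pass
```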

Monitor

In concurrent programming, a monitor is an object or module intended to be used safely by more than one thread. The defining characteristic of a monitor is that its methods are executed with mutual exclusion. That is, at each point in time, at most one thread may be executing any of its methods. This mutual exclusion greatly simplifies reasoning about the implementation of monitors compared to reasoning about parallel code that updates a data structure.

Monitors also provide a mechanism for threads to temporarily give up exclusive access, in order to wait for some condition to be met, before regaining exclusive access and resuming their task. Monitors also have a mechanism for signaling other threads that such conditions have been met.
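In Python, a monitor can be sketched as a class whose methods all run under one `threading.Lock`, with `threading.Condition` objects providing the wait and signal mechanism. The bounded buffer below is an illustrative example; the text does not specify one.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Monitor-style bounded buffer.

    One lock guards every method. Two condition variables on that lock let
    threads wait for "not full" / "not empty" and signal each other.
    """

    def __init__(self, capacity):
        self._items = deque()
        self._capacity = capacity
        self._lock = threading.Lock()
        self._not_full = threading.Condition(self._lock)
        self._not_empty = threading.Condition(self._lock)

    def put(self, item):
        with self._lock:  # enter the monitor
            while len(self._items) == self._capacity:
                self._not_full.wait()  # give up the lock until signaled
            self._items.append(item)
            self._not_empty.notify()  # signal one waiting consumer

    def get(self):
        with self._lock:
            while not self._items:
                self._not_empty.wait()
            item = self._items.popleft()
            self._not_full.notify()  # signal one waiting producer
            return item


buf = BoundedBuffer(capacity=3)
received = []
consumer = threading.Thread(target=lambda: received.extend(buf.get() for _ in range(100)))
consumer.start()
for i in range(100):
    buf.put(i)
consumer.join()
```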

Mutex

Mutual exclusion (often abbreviated to mutex) algorithms are used in concurrent programming to avoid the simultaneous use of a common resource, such as a global variable, by pieces of computer code called critical sections. A critical section is a piece of code in which a process or thread accesses a common resource. The critical section by itself is not a mechanism or algorithm for mutual exclusion. A program, process, or thread can have the critical section in it without any mechanism or algorithm which implements mutual exclusion.

Examples of such resources are fine-grained flags, counters or queues, used to communicate between code that runs concurrently, such as an application and its interrupt handlers. The synchronization of access to those resources is an acute problem because a thread can be stopped or started at any time.

To illustrate: suppose a section of code is altering a piece of data over several program steps, when another thread, perhaps triggered by some unpredictable event, starts executing. If this second thread reads from the same piece of data, the data, which is in the process of being overwritten, is in an inconsistent and unpredictable state. If the second thread tries overwriting that data, the ensuing state will probably be unrecoverable. These shared data being accessed by critical sections of code must, therefore, be protected, so that other processes which read from or write to the chunk of data are excluded from running.
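Here is a minimal sketch of protecting a critical section with Python's `threading.Lock`. Without the lock, the read-modify-write in `counter += 1` could interleave between threads and lose updates. The thread and iteration counts are arbitrary.

```python
import threading

counter = 0
counter_lock = threading.Lock()


def increment_many(times):
    global counter
    for _ in range(times):
        # Critical section: the read-modify-write of `counter` must not interleave.
        with counter_lock:
            counter += 1


threads = [threading.Thread(target=increment_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)
```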

Semaphore

In computer science, a semaphore is a variable or abstract data type that provides a simple but useful abstraction for controlling access by multiple processes to a common resource in a parallel programming environment.

A useful way to think of a semaphore is as a record of how many units of a particular resource are available, coupled with operations to safely (i.e. without race conditions) adjust that record as units are required or become free, and if necessary wait until a unit of the resource becomes available. Semaphores are a useful tool in the prevention of race conditions and deadlocks; however, their use is by no means a guarantee that a program is free from these problems. Semaphores which allow an arbitrary resource count are called counting semaphores, whilst semaphores which are restricted to the values 0 and 1 (or locked/unlocked, unavailable/available) are called binary semaphores.
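A counting semaphore can be sketched with Python's `threading.BoundedSemaphore`. Here, six threads share two units of some resource. The `active`/`peak` bookkeeping only shows that no more than two threads hold a unit at once.

```python
import threading
import time

units = threading.BoundedSemaphore(2)  # counting semaphore: 2 units available
stats_lock = threading.Lock()          # protects the bookkeeping below
active = 0  # threads currently holding a unit
peak = 0    # largest value `active` ever reached


def use_resource():
    global active, peak
    with units:  # wait: blocks while the count is 0, then decrements it
        with stats_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.01)  # simulate work while holding a unit
        with stats_lock:
            active -= 1
    # Leaving the `with units` block signals: the count is incremented again.


threads = [threading.Thread(target=use_resource) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(peak)
```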

Data structures

| | Linked list | Array | Dynamic array | Balanced tree |
|---|---|---|---|---|
| Indexing (access by position) | Θ(n) | Θ(1) | Θ(1) | Θ(log n) |
| Insert/delete at beginning | Θ(1) | N/A | Θ(n) | Θ(log n) |
| Insert/delete at end | Θ(1) (if the last node is known) | N/A | Θ(1) amortized | Θ(log n) |
| Insert/delete in middle | search time + Θ(1) | N/A | Θ(n) | Θ(log n) |
| Wasted space (average) | Θ(n) | 0 | Θ(n) | Θ(n) |

Graphs

In computer science, a graph is an abstract data structure which consists of a finite (and possibly mutable) set of ordered pairs, called edges or arcs, of certain entities called nodes or vertices. As in mathematics, an edge (x,y) is said to point or go from x to y. The nodes may be part of the graph structure, or may be external entities represented by integer indices or references.

A graph data structure may also associate to each edge some edge value, such as a symbolic label or a numeric attribute (cost, capacity, length, etc.).

Representations

Different data structures for the representation of graphs are used in practice:

- Adjacency list - Vertices are stored as records or objects, and every vertex stores a list of adjacent vertices. This data structure allows additional data to be stored on the vertices.

- Incidence list - Vertices and edges are stored as records or objects. Each vertex stores its incident edges, and each edge stores its incident vertices. This data structure allows additional data to be stored on vertices and edges.

- Adjacency matrix - A two-dimensional matrix, in which the rows represent source vertices and columns represent destination vertices. Data on edges and vertices must be stored externally. Only the cost for one edge can be stored between each pair of vertices.

- Incidence matrix - A two-dimensional Boolean matrix, in which the rows represent the vertices and columns represent the edges. The entries indicate whether the vertex at a row is incident to the edge at a column.

In the matrix representations, the entries encode the cost of following an edge. The cost of an edge that is not present is assumed to be ∞.

| | Adjacency list | Incidence list | Adjacency matrix | Incidence matrix |
|---|---|---|---|---|
| Storage | O(V + E) | O(V + E) | O(V²) | O(V·E) |
| Add vertex | O(1) | O(1) | O(V²) | O(V·E) |
| Add edge | O(1) | O(1) | O(1) | O(V·E) |
| Remove vertex | O(E) | O(E) | O(V²) | O(V·E) |
| Remove edge | O(E) | O(E) | O(1) | O(V·E) |
| Query: are vertices u, v adjacent? (Assuming that the storage positions for u, v are known) | O(V) | O(E) | O(1) | O(E) |
| Remarks | When removing edges or vertices, need to find all vertices or edges | When removing edges or vertices, need to find all vertices or edges | Slow to add or remove vertices, because matrix must be resized/copied | Slow to add or remove vertices and edges, because matrix must be resized/copied |

Adjacency lists are generally preferred because they efficiently represent sparse graphs. An adjacency matrix is preferred if the graph is dense, that is, the number of edges E is close to the number of vertices squared, V². It is also preferred if one must be able to quickly look up whether there is an edge connecting two vertices.
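The two main representations can be sketched as follows. The sketch assumes vertices numbered 0 to V−1 and directed edges. For the matrix, it assumes a cost of 1 for every present edge and infinity for a missing one. The function names are illustrative.

```python
INF = float("inf")


def adjacency_list(num_vertices, edges):
    """Directed graph as one neighbor list per vertex: O(V + E) space."""
    adj = [[] for _ in range(num_vertices)]
    for u, v in edges:
        adj[u].append(v)
    return adj


def adjacency_matrix(num_vertices, edges):
    """Directed graph as a V x V cost matrix: O(V^2) space.

    Present edges get cost 1 here; missing edges keep cost infinity.
    """
    m = [[INF] * num_vertices for _ in range(num_vertices)]
    for u, v in edges:
        m[u][v] = 1
    return m


edges = [(0, 1), (0, 2), (2, 3)]
adj = adjacency_list(4, edges)
mat = adjacency_matrix(4, edges)

# Adjacency query: scan u's neighbor list (O(degree)) vs. one matrix lookup (O(1)).
print(3 in adj[2], mat[2][3] != INF)
```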

For graphs with some regularity in the placement of edges, a symbolic graph is a possible choice of representation.

Hash tables


A hash table or hash map is a data structure that uses a hash function to map identifying values, known as keys (e.g., a person's name), to their associated values (e.g., their telephone number). Thus, a hash table implements an associative array. The hash function is used to transform the key into the index (the hash) of an array element (the slot or bucket) where the corresponding value is to be sought.

Separate chaining


In the strategy known as separate chaining, direct chaining, or simply chaining, each slot of the bucket array is a pointer to a linked list that contains the key-value pairs that hashed to the same location. Lookup requires scanning the list for an entry with the given key. Insertion requires adding a new entry record to either end of the list belonging to the hashed slot. Deletion requires searching the list and removing the element. (The technique is also called open hashing or closed addressing, which should not be confused with 'open addressing' or 'closed hashing'.)

Chained hash tables with linked lists are popular because they require only basic data structures with simple algorithms, and can use simple hash functions that are unsuitable for other methods.

The cost of a table operation is that of scanning the entries of the selected bucket for the desired key. If the distribution of keys is sufficiently uniform, the average cost of a lookup depends only on the average number of keys per bucket—that is, on the load factor.

For separate-chaining, the worst-case scenario is when all entries were inserted into the same bucket, in which case the hash table is ineffective and the cost is that of searching the bucket data structure. If the latter is a linear list, the lookup procedure may have to scan all its entries; so the worst-case cost is proportional to the number n of entries in the table.
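Here is a minimal sketch of separate chaining. It uses Python's built-in `hash` as the hash function and plain Python lists as the per-bucket chains, standing in for linked lists. The class and method names are illustrative.

```python
class ChainedHashTable:
    """Separate chaining: each bucket holds the (key, value) pairs that hashed to it."""

    def __init__(self, num_buckets=8):
        self.buckets = [[] for _ in range(num_buckets)]

    def _bucket(self, key):
        return self.buckets[hash(key) % len(self.buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:  # key already present: overwrite its value
                bucket[i] = (key, value)
                return
        bucket.append((key, value))  # new entry at the end of the chain

    def get(self, key):
        for k, v in self._bucket(key):  # scan the chain
            if k == key:
                return v
        raise KeyError(key)

    def delete(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                return
        raise KeyError(key)

    def load_factor(self):
        """Average number of entries per bucket."""
        return sum(len(b) for b in self.buckets) / len(self.buckets)


phone_book = ChainedHashTable(num_buckets=4)
phone_book.put("alice", "555-1234")
print(phone_book.get("alice"))
```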

Separate chaining with list heads


Some chaining implementations store the first record of each chain in the slot array itself. The purpose is to increase cache efficiency of hash table access. To save memory space, such hash tables often have about as many slots as stored entries, meaning that many slots have two or more entries.

Heap


In computer science, a heap is a specialized tree-based data structure. We distinguish two kinds of heaps:

- Max-heap, which satisfies the following heap property: if B is a child node of A, then key(A) ≥ key(B)

- Min-heap, which satisfies the following heap property: if B is a child node of A, then key(A) ≤ key(B)

There is no restriction as to how many children each node has in a heap, although in practice each node has at most two. The heap is one maximally-efficient implementation of an abstract data type called a priority queue. Heaps are crucial in several efficient graph algorithms such as Dijkstra's algorithm, and in the sorting algorithm heapsort.

Applications

The heap data structure has many applications.

- Heapsort: one of the best sorting methods, being in-place and having no quadratic worst-case scenarios.

- Selection algorithms: a heap gives constant-time access to its minimum (min-heap) or maximum (max-heap) element. The k-th largest of n elements can be found in O(n log k) time by keeping the k largest elements seen so far in a min-heap of size k.

- Graph algorithms: using a heap as the internal priority queue of a graph traversal can reduce its running time by a polynomial factor. Examples are Prim's minimum spanning tree algorithm and Dijkstra's shortest-path algorithm. For example, on sparse graphs a binary heap brings Dijkstra's algorithm from O(V²) down to O((V + E) log V).
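As an example of the graph-algorithm use, here is a sketch of Dijkstra's algorithm on top of Python's `heapq` binary heap. `heapq` has no decreaseKey operation. This version therefore pushes a new entry when a distance improves and skips stale entries when they are popped, which is a common variant rather than the textbook formulation. The graph format (a dict of adjacency lists with non-negative weights) is an assumption.

```python
import heapq


def dijkstra(graph, source):
    """Shortest-path distances from `source`.

    `graph` maps each vertex to a list of (neighbor, non-negative weight) pairs.
    """
    dist = {source: 0}
    heap = [(0, source)]  # (tentative distance, vertex)
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale entry: a shorter path to u was already found
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


graph = {
    "a": [("b", 7), ("c", 9), ("f", 14)],
    "b": [("c", 10), ("d", 15)],
    "c": [("d", 11), ("f", 2)],
    "d": [("e", 6)],
    "f": [("e", 9)],
}
print(dijkstra(graph, "a"))
```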

Full and almost full binary heaps may be represented in a very space-efficient way using an array alone. The first (or last) element will contain the root. The next two elements of the array contain its children. The next four contain the four children of the two child nodes, etc. Thus the children of the node at position n would be at positions 2n and 2n+1 in a one-based array, or 2n+1 and 2n+2 in a zero-based array. This allows moving up or down the tree by doing simple index computations. Balancing a heap is done by swapping elements which are out of order. As we can build a heap from an array without requiring extra memory (for the nodes, for example), heapsort can be used to sort an array in-place.
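The index arithmetic above is all a binary heap needs. Below is a minimal sketch of a zero-based array min-heap: the children of i are at 2i+1 and 2i+2, and the parent is at (i−1)//2. Order is restored by swapping out-of-order elements during sift-up and sift-down. The class name is illustrative; Python's `heapq` module provides a production version of the same idea.

```python
class MinHeap:
    """Binary min-heap stored in a zero-based Python list."""

    def __init__(self):
        self.a = []

    def push(self, x):
        a = self.a
        a.append(x)
        i = len(a) - 1
        # Sift up: swap with the parent while the parent is larger.
        while i > 0 and a[(i - 1) // 2] > a[i]:
            p = (i - 1) // 2
            a[i], a[p] = a[p], a[i]
            i = p

    def pop(self):
        a = self.a
        top = a[0]  # raises IndexError on an empty heap
        last = a.pop()
        if a:
            a[0] = last
            i = 0
            # Sift down: swap with the smaller child while that child is smaller.
            while True:
                left, right = 2 * i + 1, 2 * i + 2
                smallest = i
                if left < len(a) and a[left] < a[smallest]:
                    smallest = left
                if right < len(a) and a[right] < a[smallest]:
                    smallest = right
                if smallest == i:
                    break
                a[i], a[smallest] = a[smallest], a[i]
                i = smallest
        return top


h = MinHeap()
for x in [5, 3, 8, 1, 9, 2]:
    h.push(x)
print([h.pop() for _ in range(6)])
```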

Time computation

Function names assume a min-heap.

| Operation | Binary | Binomial | Fibonacci | Pairing | Brodal |
|---|---|---|---|---|---|
| findMin | Θ(1) | Θ(log n) or Θ(1) | Θ(1) | Θ(1) | Θ(1) |
| deleteMin | Θ(log n) | Θ(log n) | O(log n)* | O(log n)* | O(log n) |
| insert | Θ(log n) | O(log n) | Θ(1) | O(1)* | Θ(1) |
| decreaseKey | Θ(log n) | Θ(log n) | Θ(1)* | O(log n)* | Θ(1) |
| merge | Θ(n) | O(log n)** | Θ(1) | O(1)* | Θ(1) |

(*) Amortized time

(**) Where n is the size of the larger heap

Linked lists

A linked list is a data structure used for collecting a sequence of objects. It allows efficient insertion and removal of elements at any position in the sequence once that position has been reached, although reaching a position takes time proportional to its distance from the head. It is implemented as nodes, each of which contains a reference (i.e., a link) to the next and/or previous node in the sequence.
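Here is a minimal singly linked list sketch; the class and method names are illustrative. Inserting or removing next to a node you already hold only rewires links, which is why those operations take Θ(1). Reaching position k still requires walking k links from the head.

```python
class Node:
    def __init__(self, value, nxt=None):
        self.value = value
        self.next = nxt  # reference (link) to the following node


class SinglyLinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        self.head = Node(value, self.head)  # O(1)
        return self.head

    def insert_after(self, node, value):
        node.next = Node(value, node.next)  # O(1) once `node` is known
        return node.next

    def remove_after(self, node):
        if node.next is not None:
            node.next = node.next.next  # O(1): unlink the following node

    def __iter__(self):
        node = self.head
        while node is not None:  # reaching position k costs O(k)
            yield node.value
            node = node.next


lst = SinglyLinkedList()
lst.push_front("c")
a = lst.push_front("a")
lst.insert_after(a, "b")
print(list(lst))
```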

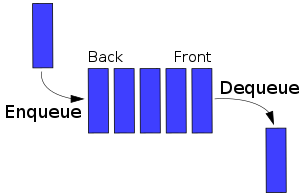

Queue


Double-ended queue

A double-ended queue (dequeue, often abbreviated to deque, pronounced deck) is an abstract data structure that generalizes a queue: elements can be added to or removed from either the front (head) or the back (tail). It is also often called a head-tail linked list.
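Python's standard library provides a deque as `collections.deque`. The short example below exercises both ends.

```python
from collections import deque

d = deque([2, 3])
d.appendleft(1)      # add at the front (head)
d.append(4)          # add at the back (tail)
front = d.popleft()  # remove from the front -> 1
back = d.pop()       # remove from the back -> 4
print(front, back, list(d))
```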

Priority queue

A priority queue is like a regular queue or stack data structure, but additionally, each element is associated with a "priority".

- stack: elements are pulled in last-in, first-out order (e.g. a stack of papers)

- queue: elements are pulled in first-in, first-out order (e.g. a line in a cafeteria)

- priority queue: elements are pulled highest-priority-first (e.g. cutting in line, or VIP service).

A priority queue must at least support the following operations:

- insert_with_priority: add an element to the queue with an associated priority

- pull_highest_priority_element: remove the element from the queue that has the highest priority, and return it (also known as "pop_element(Off)", "get_maximum_element", or "get_front(most)_element"; some conventions consider lower priorities to be higher, so this may also be known as "get_minimum_element", and is often referred to as "get-min" in the literature; the literature also sometimes implements separate "peek_at_highest_priority_element" and "delete_element" functions, which can be combined to produce "pull_highest_priority_element")
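Here is a minimal sketch of the two required operations on top of Python's `heapq`, which is a binary min-heap. It assumes larger numbers mean higher priority, so priorities are negated before being stored. The insertion counter is an added tie-breaker: it keeps equal-priority elements in FIFO order and avoids comparing the elements themselves.

```python
import heapq
import itertools


class PriorityQueue:
    """Max-priority queue on a min-heap: stored priorities are negated."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: insertion order

    def insert_with_priority(self, item, priority):
        heapq.heappush(self._heap, (-priority, next(self._counter), item))

    def pull_highest_priority_element(self):
        _, _, item = heapq.heappop(self._heap)
        return item

    def __len__(self):
        return len(self._heap)


pq = PriorityQueue()
pq.insert_with_priority("regular customer", 1)
pq.insert_with_priority("VIP", 10)
print(pq.pull_highest_priority_element())
```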

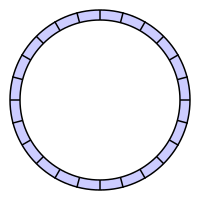

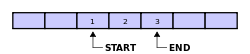

Circular buffer (or circular queue)


A circular buffer, cyclic buffer or ring buffer is a data structure that uses a single, fixed-size buffer as if it were connected end-to-end. The circular buffer is well suited as a FIFO buffer, while a standard, non-circular buffer is well suited as a LIFO buffer. This structure lends itself easily to buffering data streams. Multimedia is one application that could use an overwriting circular buffer. Circular buffering makes a good implementation strategy for a queue that has a fixed maximum size.

Start / End Pointers

Generally, a circular buffer requires three pointers:

- one to the actual buffer in memory

- one to point to the start of valid data

- one to point to the end of valid data

Alternatively, a fixed-length buffer with two integers to keep track of indices can be used in languages that do not have pointers.

There are numerous ways to label the pointers, and the exact semantics can vary. In one common scheme, the buffer overwrites its oldest data when it is full.

In that overwriting case, note that after each element is overwritten, the start pointer is incremented as well.
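The overwriting behavior can be sketched with a variant of the two-integer approach. This version makes one assumption: instead of an end index, it keeps the start index and the element count, and computes the end position from them. When the buffer is full, a write overwrites the oldest element and advances the start index, as described.

```python
class RingBuffer:
    """Fixed-size overwriting circular buffer tracked by two integers."""

    def __init__(self, capacity):
        self.buf = [None] * capacity
        self.start = 0  # index of the oldest valid element
        self.count = 0  # number of valid elements

    def write(self, value):
        end = (self.start + self.count) % len(self.buf)  # slot after the newest element
        self.buf[end] = value
        if self.count == len(self.buf):
            # Buffer was full: `end` equals `start`, so the oldest element was
            # just overwritten and the start index must advance as well.
            self.start = (self.start + 1) % len(self.buf)
        else:
            self.count += 1

    def read(self):
        if self.count == 0:
            raise IndexError("read from empty buffer")
        value = self.buf[self.start]
        self.start = (self.start + 1) % len(self.buf)
        self.count -= 1
        return value


rb = RingBuffer(3)
for x in range(1, 6):  # 4 and 5 overwrite 1 and 2
    rb.write(x)
print([rb.read() for _ in range(3)])
```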

Stack


In computer science, a stack is a last in, first out (LIFO) abstract data type and data structure. A stack can have any abstract data type as an element, but is characterized by only three fundamental operations: push, pop and stack top. The push operation adds a new item to the top of the stack, or initializes the stack if it is empty. If the stack is full and does not have enough space to accept the given item, the stack is considered to be in an overflow state. The pop operation removes an item from the top of the stack. A pop either reveals previously concealed items or results in an empty stack. If the stack is already empty, a pop puts it into an underflow state, meaning there are no items in the stack to remove. The stack top operation gets the data from the top-most position and returns it to the user without deleting it. The same underflow state can also occur in the stack top operation if the stack is empty.

A stack is a restricted data structure, because only a small number of operations are performed on it. The nature of the pop and push operations also means that stack elements have a natural order. Elements are removed from the stack in the reverse order to the order of their addition: therefore, the lower elements are those that have been on the stack the longest.
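Here is a minimal sketch of the three operations. It assumes a fixed capacity so that the overflow state can occur; Python lists grow on demand, so the capacity check is explicit. The choice of exceptions for overflow and underflow is illustrative.

```python
class Stack:
    """Bounded LIFO stack backed by a Python list (the top is the end of the list)."""

    def __init__(self, capacity):
        self._items = []
        self._capacity = capacity

    def push(self, item):
        if len(self._items) == self._capacity:
            raise OverflowError("stack overflow")
        self._items.append(item)

    def pop(self):
        if not self._items:
            raise IndexError("stack underflow")
        return self._items.pop()

    def top(self):
        if not self._items:
            raise IndexError("stack underflow")
        return self._items[-1]  # read the top item without removing it


s = Stack(capacity=3)
for x in (1, 2, 3):
    s.push(x)
print(s.top(), s.pop(), s.pop(), s.pop())  # items leave in reverse order of addition
```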

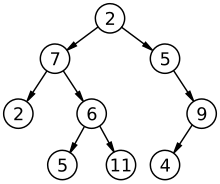

Trees

In graph theory, a k-ary tree is a rooted tree in which each node has no more than k children. It is also sometimes known as a k-way tree, an N-ary tree, or an M-ary tree. A binary tree is the special case where k=2.

A node is a structure which may contain a value, a condition, or represent a separate data structure (which could be a tree of its own). Each node in a tree has zero or more child nodes, which are below it in the tree (by convention, trees are drawn growing downwards). A node that has a child is called the child's parent node (or superior). The parent, the parent's parent, and so on up to the root are the child's ancestor nodes. A node has at most one parent.

Nodes that do not have any children are called leaf nodes. They are also referred to as terminal nodes.

A free tree is a tree that is not rooted.

The height of a node is the length of the longest downward path to a leaf from that node. The height of the root is the height of the tree. The depth of a node is the length of the path to its root (i.e., its root path). These quantities are commonly needed in the manipulation of the various self-balancing trees, AVL trees in particular. Conventionally, the value −1 corresponds to a subtree with no nodes, whereas zero corresponds to a subtree with one node.
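Height and depth follow directly from these definitions. The sketch below uses a hypothetical `TreeNode` class with a list of children. It follows the convention that an empty subtree has height −1 and a single node has height 0.

```python
class TreeNode:
    def __init__(self, value, children=()):
        self.value = value
        self.children = list(children)


def height(node):
    """Length of the longest downward path to a leaf: -1 if empty, 0 for one node."""
    if node is None:
        return -1
    return 1 + max((height(c) for c in node.children), default=-1)


def depth(root, target, d=0):
    """Length of the path from `root` down to `target`, or None if it is absent."""
    if root is target:
        return d
    for child in root.children:
        found = depth(child, target, d + 1)
        if found is not None:
            return found
    return None


leaf = TreeNode("d")
root = TreeNode("a", [TreeNode("b", [leaf]), TreeNode("c")])
print(height(root), depth(root, leaf))
```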

The topmost node in a tree is called the root node. Being the topmost node, the root node will not have parents. It is the node at which operations on the tree commonly begin (although some algorithms begin with the leaf nodes and work up ending at the root). All other nodes can be reached from it by following edges or links. (In the formal definition, each such path is also unique). In diagrams, it is typically drawn at the top. In some trees, such as heaps, the root node has special properties. Every node in a tree can be seen as the root node of the subtree rooted at that node.

An internal node or inner node is any node of a tree that has child nodes and is thus not a leaf node. Similarly, an external node or outer node is any node that does not have child nodes and is thus a leaf.

A subtree of a tree T is a tree consisting of a node in T and all of its descendants in T. (This is different from the formal definition of subtree used in graph theory.) The subtree corresponding to the root node is the entire tree; the subtree corresponding to any other node is called a proper subtree (in analogy to the term proper subset).

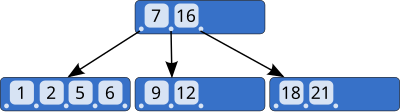

B tree

| B-tree | |
|---|---|
| Type | tree |

A B-tree is a tree data structure that keeps data sorted and allows searches, sequential access, insertions, and deletions in logarithmic time. The B-tree is a generalization of a binary search tree in that a node can have more than two children. (Comer, p. 123) Unlike self-balancing binary search trees, the B-tree is optimized for systems that read and write large blocks of data. It is commonly used in databases and filesystems.

Search

Searching is similar to searching a binary search tree. Starting at the root, the tree is recursively traversed from top to bottom. At each level, the search chooses the child pointer (subtree) whose separation values are on either side of the search value.
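Here is a sketch of that search. It assumes a hypothetical `BTreeNode` that stores its sorted keys in a list and, for internal nodes, one more child than keys. `bisect_left` from Python's standard library picks the child whose separation values bracket the search key.

```python
from bisect import bisect_left


class BTreeNode:
    def __init__(self, keys, children=None):
        self.keys = keys                # sorted separation values
        self.children = children or []  # empty for a leaf; else len(keys) + 1 subtrees


def btree_search(node, key):
    """Return True if `key` is stored in the subtree rooted at `node`."""
    while node is not None:
        i = bisect_left(node.keys, key)  # index of the first key >= search key
        if i < len(node.keys) and node.keys[i] == key:
            return True
        if not node.children:  # reached a leaf without finding the key
            return False
        node = node.children[i]  # subtree between keys[i-1] and keys[i]
    return False


root = BTreeNode([10, 20], [BTreeNode([2, 5]), BTreeNode([12, 15]), BTreeNode([25, 30])])
print(btree_search(root, 15), btree_search(root, 17))
```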

Deletion

There are two popular strategies for deletion from a B-tree.

- locate and delete the item, then restructure the tree to regain its invariants

or

- do a single pass down the tree, but before entering (visiting) a node, restructure the tree so that once the key to be deleted is encountered, it can be deleted without triggering the need for any further restructuring

The algorithm below uses the former strategy.

There are two special cases to consider when deleting an element:

- the element in an internal node may be a separator for its child nodes

- deleting an element may put its node under the minimum number of elements and children.

Each of these cases will be dealt with in order.

Deletion from a leaf node

- Search for the value to delete.

- If the value is in a leaf node, it can simply be deleted from the node.

- If underflow happens, check siblings to either transfer a key or fuse the siblings together:

  - if the deletion happened in the right child, retrieve the maximum value of the left child, provided the left child does not underflow as a result;

  - in the opposite situation, retrieve the minimum element from the right child.

Deletion from an internal node

Each element in an internal node acts as a separation value for two subtrees, and when such an element is deleted, two cases arise. Here L and U denote the minimum and maximum number of children a node may have. In the first case, both of the two child nodes to the left and right of the deleted element have the minimum number of elements, namely L−1. They can then be joined into a single node with 2L−2 elements, a number which does not exceed U−1 and so is a legal node. Unless it is known that this particular B-tree does not contain duplicate data, we must then also (recursively) delete the element in question from the new node.

In the second case, one of the two child nodes contains more than the minimum number of elements. Then a new separator for those subtrees must be found. Note that the largest element in the left subtree is the largest element which is still less than the separator. Likewise, the smallest element in the right subtree is the smallest element which is still greater than the separator. Both of those elements are in leaf nodes, and either can be the new separator for the two subtrees.

- If the value is in an internal node, choose a new separator (either the largest element in the left subtree or the smallest element in the right subtree), remove it from the leaf node it is in, and replace the element to be deleted with the new separator.

- This has deleted an element from a leaf node, and so is now equivalent to the previous case.

Rebalancing after deletion

If deleting an element from a leaf node has brought it under the minimum size, some elements must be redistributed to bring all nodes up to the minimum. In some cases the rearrangement will move the deficiency to the parent, and the redistribution must be applied iteratively up the tree, perhaps even to the root. Since the minimum element count doesn't apply to the root, leaving the root as the only deficient node is not a problem. The algorithm to rebalance the tree is as follows:

- If the right sibling has more than the minimum number of elements:

  - Add the separator to the end of the deficient node.

  - Replace the separator in the parent with the first element of the right sibling.

  - Append the first child of the right sibling as the last child of the deficient node.

- Otherwise, if the left sibling has more than the minimum number of elements:

  - Add the separator to the start of the deficient node.

  - Replace the separator in the parent with the last element of the left sibling.

  - Insert the last child of the left sibling as the first child of the deficient node.

- If both immediate siblings have only the minimum number of elements:

  - Create a new node with all the elements from the deficient node, all the elements from one of its siblings, and the separator in the parent between the two combined sibling nodes.

  - Remove the separator from the parent, and replace the two children it separated with the combined node.

  - If that brings the number of elements in the parent under the minimum, repeat these steps with that deficient node, unless it is the root, since the root is permitted to be deficient.

The only other case to account for is when the root has no elements and one child. In this case it is sufficient to replace it with its only child.
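The three rebalancing cases above can be sketched in Java. This is a minimal illustration, not a full B-tree. The `Node` class, which stores keys and children in `ArrayList`s, the method name `rebalance` and the `minKeys` parameter (the minimum key count for a non-root node) are all hypothetical. The method returns `true` when a merge may have left the parent deficient, so the caller knows to repeat the process one level up.

```java
import java.util.ArrayList;
import java.util.List;

public class BTreeRebalance {
    // Hypothetical minimal node: sorted keys; an internal node has keys.size() + 1 children.
    static final class Node {
        final List<Integer> keys = new ArrayList<>();
        final List<Node> children = new ArrayList<>(); // empty for a leaf

        boolean isLeaf() { return children.isEmpty(); }
    }

    // Repairs parent.children.get(i) after it has dropped below minKeys keys.
    // Returns true if the parent itself may now be deficient (only possible after a merge).
    static boolean rebalance(Node parent, int i, int minKeys) {
        Node deficient = parent.children.get(i);
        Node left = i > 0 ? parent.children.get(i - 1) : null;
        Node right = i + 1 < parent.children.size() ? parent.children.get(i + 1) : null;

        if (right != null && right.keys.size() > minKeys) {
            // Separator moves down; the right sibling's first key moves up into the parent.
            deficient.keys.add(parent.keys.get(i));
            parent.keys.set(i, right.keys.remove(0));
            if (!right.isLeaf()) deficient.children.add(right.children.remove(0));
            return false;
        }
        if (left != null && left.keys.size() > minKeys) {
            // Separator moves down; the left sibling's last key moves up into the parent.
            deficient.keys.add(0, parent.keys.get(i - 1));
            parent.keys.set(i - 1, left.keys.remove(left.keys.size() - 1));
            if (!left.isLeaf()) deficient.children.add(0, left.children.remove(left.children.size() - 1));
            return false;
        }
        // Both siblings are at the minimum: merge with one of them and pull the separator down.
        int sep = (right != null) ? i : i - 1;
        Node merged = parent.children.get(sep);
        Node absorbed = parent.children.remove(sep + 1);
        merged.keys.add(parent.keys.remove(sep));
        merged.keys.addAll(absorbed.keys);
        merged.children.addAll(absorbed.children);
        return parent.keys.size() < minKeys;
    }

    static Node leaf(int... ks) {
        Node n = new Node();
        for (int k : ks) n.keys.add(k);
        return n;
    }

    public static void main(String[] args) {
        // Root [10] over leaves [5] and [20, 30]; deleting 5 empties the left leaf.
        Node root = leaf(10);
        root.children.add(leaf());
        root.children.add(leaf(20, 30));
        rebalance(root, 0, 1);
        System.out.println(root.keys + " " + root.children.get(0).keys + " " + root.children.get(1).keys);
        // prints: [20] [10] [30]

        // Root [10] over leaves [] and [20]: no sibling can lend, so the two leaves merge.
        Node root2 = leaf(10);
        root2.children.add(leaf());
        root2.children.add(leaf(20));
        rebalance(root2, 0, 1);
        // The root now has no elements and one child, so that child replaces it.
        if (root2.keys.isEmpty() && root2.children.size() == 1) root2 = root2.children.get(0);
        System.out.println(root2.keys); // prints: [10, 20]
    }
}
```

The second example in `main` also shows the special case described just above: a root with no elements and one child is replaced by that child.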

Binary search is typically (but not necessarily) used within nodes to find the separation values and child tree of interest.

DSW algorithm

The DSW algorithm is a method for efficiently balancing binary search trees, that is, decreasing their height to O(log n) nodes, where n is the total number of nodes. Unlike a self-balancing binary search tree, it does not do this incrementally during each operation, but periodically, so that its cost can be amortized over many operations.

The algorithm requires linear (O(n)) time and is in-place. The original algorithm by Day generates as compact a tree as possible: all levels of the tree are completely full except possibly the bottom-most. The modification by Stout and Warren generates a complete binary tree, namely one in which the bottom-most level is filled strictly from left to right. This is a useful transformation to perform if it is known that no more inserts will be done.
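Below is a Java sketch of the Stout and Warren variant. It is an illustration under stated assumptions, not the authors' original code. Phase 1 (`treeToVine`) uses right rotations to turn the tree into a "vine", a sorted right-leaning list. Phase 2 (`vineToTree`) repeatedly left-rotates alternate vine nodes (`compress`) until the tree is complete. All class and method names are hypothetical. A dummy `pseudoRoot` node is assumed so that rotations at the top of the tree need no special case.

```java
import java.util.ArrayList;
import java.util.List;

public class DSW {
    static final class Node {
        final int key;
        Node left, right;
        Node(int key) { this.key = key; }
    }

    // Rebalances the BST rooted at root in O(n) time with O(1) extra space; returns the new root.
    static Node balance(Node root) {
        Node pseudoRoot = new Node(Integer.MIN_VALUE); // dummy parent: pseudoRoot.right is the tree
        pseudoRoot.right = root;
        int size = treeToVine(pseudoRoot);
        vineToTree(pseudoRoot, size);
        return pseudoRoot.right;
    }

    // Phase 1: rotate right until every node has only a right child. Returns the node count.
    static int treeToVine(Node pseudoRoot) {
        Node tail = pseudoRoot;
        Node rest = tail.right;
        int size = 0;
        while (rest != null) {
            if (rest.left == null) {    // nothing to rotate: advance along the vine
                tail = rest;
                rest = rest.right;
                size++;
            } else {                     // right rotation at rest
                Node temp = rest.left;
                rest.left = temp.right;
                temp.right = rest;
                rest = temp;
                tail.right = temp;
            }
        }
        return size;
    }

    // Performs count left rotations on alternate nodes down the vine.
    static void compress(Node pseudoRoot, int count) {
        Node scanner = pseudoRoot;
        for (int i = 0; i < count; i++) {
            Node child = scanner.right;
            scanner.right = child.right;
            scanner = scanner.right;
            child.right = scanner.left;
            scanner.left = child;
        }
    }

    // Phase 2: first place the nodes of the partial bottom level, then halve repeatedly.
    static void vineToTree(Node pseudoRoot, int size) {
        int full = Integer.highestOneBit(size + 1) - 1; // largest 2^k - 1 that is <= size
        compress(pseudoRoot, size - full);              // nodes that go on the partial bottom level
        size = full;
        while (size > 1) {
            size /= 2;
            compress(pseudoRoot, size);
        }
    }

    static int height(Node n) {
        return n == null ? 0 : 1 + Math.max(height(n.left), height(n.right));
    }

    static void inorder(Node n, List<Integer> out) {
        if (n == null) return;
        inorder(n.left, out);
        out.add(n.key);
        inorder(n.right, out);
    }

    public static void main(String[] args) {
        // Degenerate BST 1 -> 2 -> ... -> 10: each key is the right child of the previous one.
        Node root = new Node(1);
        Node cur = root;
        for (int k = 2; k <= 10; k++) {
            cur.right = new Node(k);
            cur = cur.right;
        }
        root = balance(root);
        List<Integer> keys = new ArrayList<>();
        inorder(root, keys);
        System.out.println(keys + " height=" + height(root));
        // prints: [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] height=4
    }
}
```

For 10 keys, the first `compress` call places 3 nodes on the bottom level, from left to right. The remaining 7 nodes form a perfect tree of height 3, so the in-order sequence is preserved and the overall height is 4.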

Left child-right sibling binary tree

In computer science, a left child-right sibling binary tree is a binary tree representation of a k-ary tree. The process of converting from a k-ary tree to an LC-RS binary tree is not reversible in general without additional information.

To form a binary tree from an arbitrary k-ary tree by this method, the root of the original tree is made the root of the binary tree. Then, starting with the root, each node's leftmost child in the original tree is made its left child in the binary tree, and its nearest sibling to the right in the original tree is made its right child in the binary tree.

If the original tree was sorted, the new tree will be a binary search tree.
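The conversion rule can be sketched in Java. This is a minimal illustration: `KaryNode`, `BinNode` and `convert` are hypothetical names, and the k-ary tree is assumed to store its children in an ordered list. Each node's leftmost child becomes its `left` link, and every later child is chained through the `right` link of its left neighbour.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LeftChildRightSibling {
    // Hypothetical k-ary node with an ordered list of children.
    static final class KaryNode {
        final String label;
        final List<KaryNode> children;
        KaryNode(String label, KaryNode... children) {
            this.label = label;
            this.children = new ArrayList<>(Arrays.asList(children));
        }
    }

    // Binary node: left = leftmost child, right = nearest sibling to the right.
    static final class BinNode {
        final String label;
        BinNode left, right;
        BinNode(String label) { this.label = label; }
    }

    static BinNode convert(KaryNode node) {
        BinNode b = new BinNode(node.label);
        BinNode prev = null;
        for (KaryNode child : node.children) {
            BinNode c = convert(child);
            if (prev == null) b.left = c; // leftmost child becomes the left child
            else prev.right = c;          // later children chain through right links
            prev = c;
        }
        return b; // the root has no siblings, so its right link stays null
    }

    static String preorder(BinNode n) {
        return n == null ? "" : n.label + preorder(n.left) + preorder(n.right);
    }

    public static void main(String[] args) {
        // A has children B, C, D; B has children E, F.
        KaryNode tree = new KaryNode("A",
                new KaryNode("B", new KaryNode("E"), new KaryNode("F")),
                new KaryNode("C"),
                new KaryNode("D"));
        BinNode bin = convert(tree);
        System.out.println(bin.left.label + " " + bin.left.right.label + " " + bin.left.left.right.label);
        // prints: B C F
        System.out.println(preorder(bin)); // prints: ABEFCD
    }
}
```

A preorder walk of the binary tree visits the nodes in the same order as a preorder walk of the original k-ary tree (A, B, E, F, C, D).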

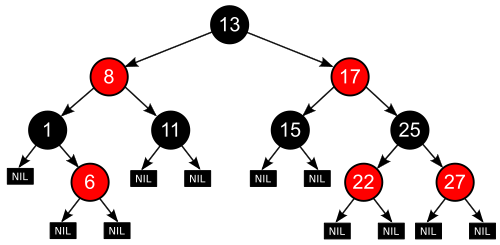

Red-black tree

A red–black tree is a type of self-balancing binary search tree, a data structure used in computer science, typically to implement associative arrays. It is complex, but has good worst-case running time for its operations and is efficient in practice: it can search, insert, and delete in O(log n) time, where n is the total number of elements in the tree. Put very simply, a red–black tree is a binary search tree that inserts and deletes intelligently, to ensure the tree is reasonably balanced.

A red–black tree is a binary search tree where each node has a color attribute, the value of which is either red or black. In addition to the ordinary requirements imposed on binary search trees, the following requirements apply to red–black trees:

1. A node is either red or black.

2. The root is black. (This rule is sometimes omitted from other definitions. Since the root can always be changed from red to black, but not necessarily vice-versa, this rule has little effect on analysis.)

3. All leaves are black.

4. Both children of every red node are black.

5. Every simple path from a given node to any of its descendant leaves contains the same number of black nodes.

These constraints enforce a critical property of red–black trees: the path from the root to the furthest leaf is no more than twice as long as the path from the root to the nearest leaf. The result is that the tree is roughly balanced. Since operations such as inserting, deleting, and finding values require worst-case time proportional to the height of the tree, this theoretical upper bound on the height allows red–black trees to be efficient in the worst case, unlike ordinary binary search trees.

To see why this is guaranteed, it suffices to consider the effect of properties 4 and 5 together. For a red–black tree T, let B be the number of black nodes in property 5. Therefore the shortest possible path from the root of T to any leaf consists of B black nodes. Longer possible paths may be constructed by inserting red nodes. However, property 4 makes it impossible to insert more than one consecutive red node. Therefore the longest possible path consists of 2B nodes, alternating black and red.

The shortest possible path has all black nodes, and the longest possible path alternates between red and black nodes. Since all maximal paths have the same number of black nodes, by property 5, this shows that no path is more than twice as long as any other path.
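The argument above rests on properties 4 and 5. The following Java checker tests exactly those two properties, together with property 2, on a hypothetical immutable `Node` type in which `null` plays the role of a black null leaf. It is a sketch for illustration, not part of any library. `blackHeight` returns the common black count that the argument calls B (here the null leaf is counted too, one common convention), or -1 if some red node has a red child or two paths disagree. Binary-search-tree ordering is deliberately not checked.

```java
public class RedBlackCheck {
    // Hypothetical node type; a null reference stands for a black null leaf.
    static final class Node {
        final int key;
        final boolean red;
        final Node left, right;

        Node(int key, boolean red, Node left, Node right) {
            this.key = key;
            this.red = red;
            this.left = left;
            this.right = right;
        }
    }

    static boolean isRed(Node n) { return n != null && n.red; }

    // Number of black nodes on every path from n down to a null leaf (leaf included),
    // or -1 if property 4 or property 5 is violated in the subtree rooted at n.
    static int blackHeight(Node n) {
        if (n == null) return 1;                                    // null leaves are black
        if (n.red && (isRed(n.left) || isRed(n.right))) return -1; // property 4
        int lh = blackHeight(n.left);
        int rh = blackHeight(n.right);
        if (lh < 0 || rh < 0 || lh != rh) return -1;                // property 5
        return lh + (n.red ? 0 : 1);
    }

    // Checks properties 2, 4 and 5; properties 1 and 3 hold by construction of this type.
    static boolean isValid(Node root) {
        return !isRed(root) && blackHeight(root) > 0;
    }

    public static void main(String[] args) {
        // Black 2 with red children 1 and 3.
        Node ok = new Node(2, false, new Node(1, true, null, null), new Node(3, true, null, null));
        System.out.println(isValid(ok) + " " + blackHeight(ok)); // prints: true 2

        // Black 1 whose only child is black 2: the left and right paths differ in black count.
        Node bad = new Node(1, false, null, new Node(2, false, null, null));
        System.out.println(isValid(bad)); // prints: false
    }
}
```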

In many of the presentations of tree data structures, it is possible for a node to have only one child, and leaf nodes contain data. It is possible to present red–black trees in this paradigm, but it changes several of the properties and complicates the algorithms. For this reason, this article uses "null leaves", which contain no data and merely serve to indicate where the tree ends. These nodes are often omitted in drawings, resulting in a tree that seems to contradict the above principles, but in fact does not. A consequence of this is that all internal (non-leaf) nodes have two children, although one or both of those children may be null leaves. Property 5 ensures that a red node must have either two black null leaves or two black non-leaves as children. For a black node with one null leaf child and one non-null-leaf child, properties 3, 4 and 5 ensure that the non-null-leaf child must be a red node with two black null leaves as children.

Some explain a red–black tree as a binary search tree whose edges, instead of nodes, are colored in red or black, but this does not make any difference. The color of a node in this article's terminology corresponds to the color of the edge connecting the node to its parent, except that the root node is always black (property 2) whereas the corresponding edge does not exist.

Trie or prefix tree


A trie, or prefix tree, is an ordered tree data structure that is used to store an associative array where the keys are usually strings. Unlike a binary search tree, no node in the tree stores the key associated with that node; instead, its position in the tree shows what key it is associated with. All the descendants of a node have a common prefix of the string associated with that node, and the root is associated with the empty string. Values are normally not associated with every node, only with leaves and some inner nodes that correspond to keys of interest.

In the example shown, keys are listed in the nodes and values below them. Each complete English word has an arbitrary integer value associated with it. A trie can be seen as a deterministic finite automaton, although the symbol on each edge is often implicit in the order of the branches.

It is not necessary for keys to be explicitly stored in nodes. (In the figure, words are shown only to illustrate how the trie works.)

Although strings are the most common keys, tries need not be keyed by character strings. The same algorithms can easily be adapted to serve similar functions for ordered lists of any construct, e.g., permutations of a list of digits or shapes. In particular, a bitwise trie is keyed on the individual bits making up a short, fixed-size bit string, such as an integer or a pointer to memory.
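Here is a minimal Java sketch of a string-keyed trie, assuming one `HashMap<Character, Node>` of children per node. The class and method names (`Trie`, `put`, `get`, `hasPrefix`) are illustrative. No node stores its key: the key is the path of characters from the root, which represents the empty string. A value is attached only where a key ends.

```java
import java.util.HashMap;
import java.util.Map;

public class Trie<V> {
    // Children are indexed by the next character; the key itself is implicit in the path.
    private static final class Node<V> {
        final Map<Character, Node<V>> next = new HashMap<>();
        V value; // non-null only at nodes where a stored key ends
    }

    private final Node<V> root = new Node<>(); // associated with the empty string

    public void put(String key, V value) {
        Node<V> n = root;
        for (char c : key.toCharArray()) {
            n = n.next.computeIfAbsent(c, k -> new Node<>());
        }
        n.value = value;
    }

    public V get(String key) {
        Node<V> n = find(key);
        return n == null ? null : n.value;
    }

    // True if some stored key starts with prefix: all such keys share this node as an ancestor.
    public boolean hasPrefix(String prefix) {
        return find(prefix) != null;
    }

    private Node<V> find(String s) {
        Node<V> n = root;
        for (char c : s.toCharArray()) {
            n = n.next.get(c);
            if (n == null) return null;
        }
        return n;
    }

    public static void main(String[] args) {
        Trie<Integer> t = new Trie<>();
        t.put("tea", 3);
        t.put("ten", 12);
        t.put("inn", 9);
        System.out.println(t.get("ten") + " " + t.get("te") + " " + t.hasPrefix("te"));
        // prints: 12 null true
    }
}
```

The lookup for `"te"` returns `null` because that node is only an inner node on the way to `"tea"` and `"ten"`, and no value is associated with it.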

Algorithms

Searching

Binary search

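A binary search algorithm locates the position of a specified value in a sorted array. The method works by comparing the specified value to the element in the middle of the array's current span (from its first to its last element). If they differ, the half that cannot contain the value is discarded, and the search continues on the remaining half.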

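```java
public class BinarySearch {

    // Returns an index of k in the sorted array A, or -1 if k is not present.
    static int search(int[] A, int k) {
        int b = 0;            // first index of the remaining span
        int e = A.length - 1; // last index of the remaining span
        while (b <= e) {
            int m = b + (e - b) / 2; // midpoint of [b, e]; avoids overflow of (b + e)
            if (A[m] < k) {
                b = m + 1;    // k can only be in the upper half
            } else if (A[m] == k) {
                return m;
            } else {
                e = m - 1;    // k can only be in the lower half
            }
        }
        return -1;
    }
}
```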

BFS search (graphs)

In graph theory, breadth-first search (BFS) is a graph search algorithm that begins at the root node and explores all the neighboring nodes. Then for each of those nearest nodes, it explores their unexplored neighbor nodes, and so on, until it finds the goal.

BFS is an uninformed search method that aims to expand and examine all nodes of a graph or combination of sequences by systematically searching through every solution. In other words, it exhaustively searches the entire graph or sequence without considering the goal until it finds it. It does not use a heuristic algorithm.

From the standpoint of the algorithm, all child nodes obtained by expanding a node are added to a FIFO (i.e., First In, First Out) queue. In typical implementations, nodes that have not yet been examined for their neighbors are placed in some container (such as a queue or linked list) called "open" and then once examined are placed in the container "closed".
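The following Java sketch illustrates the FIFO discipline described above. It assumes vertices numbered `0..n-1` and an adjacency-list representation (`adj.get(u)` lists the neighbours of `u`); both the representation and the names are assumptions. An `ArrayDeque` serves as the "open" queue, and a `seen` array prevents a vertex from being queued twice. This version visits everything reachable from `start`. A goal test could be added at the point where a vertex is removed from the queue.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Queue;

public class Bfs {
    // Returns the vertices reachable from start, in the order BFS expands them.
    static List<Integer> bfs(List<List<Integer>> adj, int start) {
        boolean[] seen = new boolean[adj.size()]; // vertices already placed in the queue
        Queue<Integer> open = new ArrayDeque<>(); // FIFO of discovered, not yet expanded vertices
        List<Integer> order = new ArrayList<>();  // expanded ("closed") vertices, in order
        seen[start] = true;
        open.add(start);
        while (!open.isEmpty()) {
            int u = open.remove();
            order.add(u);
            for (int v : adj.get(u)) {
                if (!seen[v]) {
                    seen[v] = true;
                    open.add(v);
                }
            }
        }
        return order;
    }

    public static void main(String[] args) {
        // Undirected edges: 0-1, 0-2, 1-3, 2-3, 3-4
        List<List<Integer>> adj = Arrays.asList(
                Arrays.asList(1, 2),
                Arrays.asList(0, 3),
                Arrays.asList(0, 3),
                Arrays.asList(1, 2, 4),
                Arrays.asList(3));
        System.out.println(bfs(adj, 0)); // prints: [0, 1, 2, 3, 4]
    }
}
```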

DFS search (graphs)

Depth-first search (DFS) is an algorithm for traversing or searching a tree, tree structure, or graph. One starts at the root (selecting some node as the root in the graph case) and explores as far as possible along each branch before backtracking.

Formally, DFS is an uninformed search that progresses by expanding the first child node of the search tree that appears and thus going deeper and deeper until a goal node is found, or until it hits a node that has no children. Then the search backtracks, returning to the most recent node it hasn't finished exploring. In a non-recursive implementation, all freshly expanded nodes are added to a stack for exploration.
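Here is a sketch of the non-recursive form mentioned above, using the same assumed adjacency-list representation as a typical BFS example (vertices `0..n-1`). An `ArrayDeque` is used as the stack. Neighbours are pushed in reverse order so that the first-listed neighbour is explored first, which gives the same visiting order as the usual recursive DFS. Because a vertex may be pushed more than once, it is skipped if it has already been visited when popped.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

public class Dfs {
    // Returns the vertices reachable from start, in depth-first visiting order.
    static List<Integer> dfs(List<List<Integer>> adj, int start) {
        boolean[] visited = new boolean[adj.size()];
        Deque<Integer> stack = new ArrayDeque<>();
        List<Integer> order = new ArrayList<>();
        stack.push(start);
        while (!stack.isEmpty()) {
            int u = stack.pop();
            if (visited[u]) continue; // already reached along another branch
            visited[u] = true;
            order.add(u);
            List<Integer> nbrs = adj.get(u);
            for (int i = nbrs.size() - 1; i >= 0; i--) { // reverse, so nbrs.get(0) is popped next
                int v = nbrs.get(i);
                if (!visited[v]) stack.push(v);
            }
        }
        return order;
    }

    public static void main(String[] args) {
        // Undirected edges: 0-1, 0-2, 1-3, 2-3, 3-4
        List<List<Integer>> adj = Arrays.asList(
                Arrays.asList(1, 2),
                Arrays.asList(0, 3),
                Arrays.asList(0, 3),
                Arrays.asList(1, 2, 4),
                Arrays.asList(3));
        System.out.println(dfs(adj, 0)); // prints: [0, 1, 3, 2, 4]
    }
}
```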

Sorting

Heap sort

Heapsort is a comparison-based sorting algorithm to create a sorted array (or list), and is part of the selection sort family. Although somewhat slower in practice on most machines than a well implemented quicksort, it has the advantage of a more favorable worst-case O(n log n) runtime. Heapsort is an in-place algorithm, but is not a stable sort.

The following is the "simple" way to implement the algorithm in pseudocode. Arrays are zero-based and swap is used to exchange two elements of the array. Movement 'down' means from the root towards the leaves, or from lower indices to higher. Note that during the sort, the largest element is at the root of the heap at a[0], while at the end of the sort, the largest element is in a[end].

```
function heapSort(a, count) is
    input: an unordered array a of length count

    (first place a in max-heap order)
    heapify(a, count)

    end := count-1 //in languages with zero-based arrays the children are 2*i+1 and 2*i+2
    while end > 0 do
        (swap the root(maximum value) of the heap with the last element of the heap)
        swap(a[end], a[0])
        (put the heap back in max-heap order)
        siftDown(a, 0, end-1)
        (decrease the size of the heap by one so that the previous max value will
         stay in its proper placement)
        end := end - 1

function heapify(a, count) is
    (start is assigned the index in a of the last parent node)
    start := count / 2 - 1

    while start ≥ 0 do
        (sift down the node at index start to the proper place such that all nodes below
         the start index are in heap order)
        siftDown(a, start, count-1)
        start := start - 1
    (after sifting down the root all nodes/elements are in heap order)

function siftDown(a, start, end) is
    input: end represents the limit of how far down the heap
           to sift.
    root := start

    while root * 2 + 1 ≤ end do      (While the root has at least one child)
        child := root * 2 + 1        (root*2 + 1 points to the left child)
        swap := root                 (keeps track of child to swap with)
        (check if root is smaller than left child)
        if a[swap] < a[child]
            swap := child
        (check if right child exists, and if it's bigger than what we're currently swapping with)
        if child+1 ≤ end and a[swap] < a[child+1]
            swap := child + 1
        (check if we need to swap at all)
        if swap != root
            swap(a[root], a[swap])
            root := swap             (repeat to continue sifting down the child now)
        else
            return
```
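The pseudocode above translates almost line for line into Java. The sketch below assumes an `int[]` input. The method names mirror the pseudocode, and the class name `HeapSort` is illustrative. The local variable `swap` from the pseudocode is renamed `largest` to avoid confusion with the `swap` helper method. `main` sorts the list used in the worked example that follows.

```java
import java.util.Arrays;

public class HeapSort {
    // Sorts a in ascending order using a max-heap (siftDown-based heapify).
    static void heapSort(int[] a) {
        int count = a.length;
        heapify(a, count);
        int end = count - 1;
        while (end > 0) {
            swap(a, end, 0);         // move the current maximum to its final position
            siftDown(a, 0, end - 1); // restore max-heap order on a[0..end-1]
            end--;
        }
    }

    static void heapify(int[] a, int count) {
        for (int start = count / 2 - 1; start >= 0; start--) { // from the last parent up to the root
            siftDown(a, start, count - 1);
        }
    }

    // end is the last index that still belongs to the heap.
    static void siftDown(int[] a, int start, int end) {
        int root = start;
        while (root * 2 + 1 <= end) {      // root has at least one child
            int child = root * 2 + 1;       // left child
            int largest = root;
            if (a[largest] < a[child]) largest = child;
            if (child + 1 <= end && a[largest] < a[child + 1]) largest = child + 1;
            if (largest == root) return;    // heap order already holds here
            swap(a, root, largest);
            root = largest;                 // continue sifting down from the child
        }
    }

    static void swap(int[] a, int i, int j) {
        int t = a[i];
        a[i] = a[j];
        a[j] = t;
    }

    public static void main(String[] args) {
        int[] a = {6, 5, 3, 1, 8, 7, 2, 4};
        heapSort(a);
        System.out.println(Arrays.toString(a)); // prints: [1, 2, 3, 4, 5, 6, 7, 8]
    }
}
```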

The heapify function can be thought of as building a heap from the bottom up, successively sifting downward to establish the heap property. An alternative version (shown below) that builds the heap top-down and sifts upward may be conceptually simpler to grasp. This "siftUp" version can be visualized as starting with an empty heap and successively inserting elements, whereas the "siftDown" version given above treats the entire input array as a full, "broken" heap and "repairs" it starting from the last non-trivial sub-heap (that is, the last parent node).

Also, the "siftDown" version of heapify has O(n) time complexity, while the "siftUp" version given below has O(n log n) time complexity due to its equivalence with inserting each element, one at a time, into an empty heap. This may seem counter-intuitive since, at a glance, it is apparent that the former only makes half as many calls to its logarithmic-time sifting function as the latter; i.e., they seem to differ only by a constant factor, which never has an impact on asymptotic analysis.

To grasp the intuition behind this difference in complexity, note that the number of swaps that may occur during any one siftUp call increases with the depth of the node on which the call is made. The crux is that there are many (exponentially many) more "deep" nodes than there are "shallow" nodes in a heap, so that siftUp may have its full logarithmic running-time on the approximately linear number of calls made on the nodes at or near the "bottom" of the heap. On the other hand, the number of swaps that may occur during any one siftDown call decreases as the depth of the node on which the call is made increases. Thus, when the "siftDown" heapify begins and is calling siftDown on the bottom and most numerous node-layers, each sifting call will incur, at most, a number of swaps equal to the "height" (from the bottom of the heap) of the node on which the sifting call is made. In other words, about half the calls to siftDown will have at most only one swap, then about a quarter of the calls will have at most two swaps, etc.

The heapsort algorithm itself has O(n log n) time complexity using either version of heapify.

```
function heapify(a,count) is
    (end is assigned the index of the first (left) child of the root)
    end := 1

    while end < count
        (sift up the node at index end to the proper place such that all nodes above
         the end index are in heap order)
        siftUp(a, 0, end)
        end := end + 1
    (after sifting up the last node all nodes are in heap order)

function siftUp(a, start, end) is
    input: start represents the limit of how far up the heap to sift.
           end is the node to sift up.
    child := end
    while child > start
        parent := floor((child - 1) ÷ 2)
        if a[parent] < a[child] then (out of max-heap order)
            swap(a[parent], a[child])
            child := parent (repeat to continue sifting up the parent now)
        else
            return
```
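A Java sketch of this top-down heapify is shown below. It is an illustration assuming an `int[]` input, and the class name `HeapifyUp` is hypothetical. Each call `siftUp(a, 0, end)` treats `a[0..end-1]` as an existing heap and inserts `a[end]` into it. This is why the method behaves like inserting the elements one at a time and costs O(n log n). Run on the list from the example below, it should end with the same array as the last row of the "Build the heap" table.

```java
import java.util.Arrays;

public class HeapifyUp {
    // Top-down max-heap construction: insert a[1], a[2], ... one at a time by sifting up.
    static void heapify(int[] a) {
        for (int end = 1; end < a.length; end++) {
            siftUp(a, 0, end);
        }
    }

    // Moves a[end] up while it is larger than its parent; start bounds how far up to go.
    static void siftUp(int[] a, int start, int end) {
        int child = end;
        while (child > start) {
            int parent = (child - 1) / 2;
            if (a[parent] >= a[child]) return; // already in max-heap order
            int t = a[parent];
            a[parent] = a[child];
            a[child] = t;
            child = parent;                    // continue sifting up from the parent
        }
    }

    public static void main(String[] args) {
        int[] a = {6, 5, 3, 1, 8, 7, 2, 4};
        heapify(a);
        System.out.println(Arrays.toString(a)); // prints: [8, 6, 7, 4, 5, 3, 2, 1]
    }
}
```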

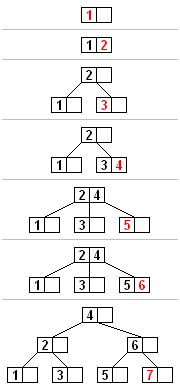

Example

Let { 6, 5, 3, 1, 8, 7, 2, 4 } be the list that we want to sort from the smallest to the largest.

1. Build the heap

| Heap | newly added element | swap elements |
|---|---|---|
| nil | 6 | |
| 6 | 5 | |
| 6, 5 | 3 | |
| 6, 5, 3 | 1 | |
| 6, 5, 3, 1 | 8 | |
| 6, 5, 3, 1, 8 | | 5, 8 |
| 6, 8, 3, 1, 5 | | 6, 8 |
| 8, 6, 3, 1, 5 | 7 | |
| 8, 6, 3, 1, 5, 7 | | 3, 7 |
| 8, 6, 7, 1, 5, 3 | 2 | |
| 8, 6, 7, 1, 5, 3, 2 | 4 | |
| 8, 6, 7, 1, 5, 3, 2, 4 | | 1, 4 |
| 8, 6, 7, 4, 5, 3, 2, 1 | | |

2. Sorting.

| Heap | swap elements | delete element | sorted array | details |
|---|---|---|---|---|
| 8, 6, 7, 4, 5, 3, 2, 1 | 8, 1 | | | swap 8 and 1 in order to delete 8 from heap |
| 1, 6, 7, 4, 5, 3, 2, 8 | | 8 | | delete 8 from heap and add to sorted array |
| 1, 6, 7, 4, 5, 3, 2 | 1, 7 | | 8 | swap 1 and 7 as they are not in order in the heap |
| 7, 6, 1, 4, 5, 3, 2 | 1, 3 | | 8 | swap 1 and 3 as they are not in order in the heap |
| 7, 6, 3, 4, 5, 1, 2 | 7, 2 | | 8 | swap 7 and 2 in order to delete 7 from heap |
| 2, 6, 3, 4, 5, 1, 7 | | 7 | 8 | delete 7 from heap and add to sorted array |
| 2, 6, 3, 4, 5, 1 | 2, 6 | | 7, 8 | swap 2 and 6 as they are not in order in the heap |
| 6, 2, 3, 4, 5, 1 | 2, 5 | | 7, 8 | swap 2 and 5 as they are not in order in the heap |
| 6, 5, 3, 4, 2, 1 | 6, 1 | | 7, 8 | swap 6 and 1 in order to delete 6 from heap |
| 1, 5, 3, 4, 2, 6 | | 6 | 7, 8 | delete 6 from heap and add to sorted array |
| 1, 5, 3, 4, 2 | 1, 5 | | 6, 7, 8 | swap 1 and 5 as they are not in order in the heap |
| 5, 1, 3, 4, 2 | 1, 4 | | 6, 7, 8 | swap 1 and 4 as they are not in order in the heap |
| 5, 4, 3, 1, 2 | 5, 2 | | 6, 7, 8 | swap 5 and 2 in order to delete 5 from heap |
| 2, 4, 3, 1, 5 | | 5 | 6, 7, 8 | delete 5 from heap and add to sorted array |
| 2, 4, 3, 1 | 2, 4 | | 5, 6, 7, 8 | swap 2 and 4 as they are not in order in the heap |
| 4, 2, 3, 1 | 4, 1 | | 5, 6, 7, 8 | swap 4 and 1 in order to delete 4 from heap |
| 1, 2, 3, 4 | | 4 | 5, 6, 7, 8 | delete 4 from heap and add to sorted array |
| 1, 2, 3 | 1, 3 | | 4, 5, 6, 7, 8 | swap 1 and 3 as they are not in order in the heap |
| 3, 2, 1 | 3, 1 | | 4, 5, 6, 7, 8 | swap 3 and 1 in order to delete 3 from heap |
| 1, 2, 3 | | 3 | 4, 5, 6, 7, 8 | delete 3 from heap and add to sorted array |
| 1, 2 | 1, 2 | | 3, 4, 5, 6, 7, 8 | swap 1 and 2 as they are not in order in the heap |
| 2, 1 | 2, 1 | | 3, 4, 5, 6, 7, 8 | swap 2 and 1 in order to delete 2 from heap |
| 1, 2 | | 2 | 3, 4, 5, 6, 7, 8 | delete 2 from heap and add to sorted array |
| 1 | | 1 | 2, 3, 4, 5, 6, 7, 8 | delete 1 from heap and add to sorted array |
| | | | 1, 2, 3, 4, 5, 6, 7, 8 | completed |

Quick sort

Quicksort is a divide and conquer algorithm. Quicksort first divides a large list into two smaller sub-lists: the low elements and the high elements. Quicksort can then recursively sort the sub-lists.

The steps are:

- Pick an element, called a pivot, from the list.

- Reorder the list so that all elements with values less than the pivot come before the pivot, while all elements with values greater than the pivot come after it (equal values can go either way). After this partitioning, the pivot is in its final position. This is called the partition operation.

- Recursively sort the sub-list of lesser elements and the sub-list of greater elements.

The base cases of the recursion are lists of size zero or one, which never need to be sorted.

```
function quicksort(array)
    var list less, greater
    if length(array) ≤ 1
        return array  // an array of zero or one elements is already sorted
    select and remove a pivot value pivot from array
    for each x in array
        if x ≤ pivot then append x to less
        else append x to greater
    return concatenate(quicksort(less), pivot, quicksort(greater))
```

Notice that we only examine elements by comparing them to other elements. This makes quicksort a comparison sort. This version is also a stable sort (assuming that the "for each" method retrieves elements in original order, and the pivot selected is the last among those of equal value).

The correctness of the partition algorithm is based on the following two arguments:

- At each iteration, all the elements processed so far are in the desired position: before the pivot if less than the pivot's value, after the pivot if greater than the pivot's value (loop invariant).

- Each iteration leaves one fewer element to be processed (loop variant).

The correctness of the overall algorithm can be proven via induction: for zero or one element, the algorithm leaves the data unchanged; for a larger data set it produces the concatenation of two parts, elements less than the pivot and elements greater than it, themselves sorted by the recursive hypothesis.
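The list-based pseudocode allocates new lists at every level. A common alternative partitions the array in place. The Java sketch below uses the Lomuto partition scheme with the last element as pivot. This scheme is swapped in here for illustration and is not the version given above; unlike that version, it is not stable. Its loop keeps the invariant from the correctness argument: `a[lo..i-1]` holds elements ≤ pivot and `a[i..j-1]` holds elements > pivot, and each iteration processes one more element. The names `QuickSort` and `partition` are illustrative.

```java
import java.util.Arrays;

public class QuickSort {
    static void quickSort(int[] a) { quickSort(a, 0, a.length - 1); }

    static void quickSort(int[] a, int lo, int hi) {
        if (lo >= hi) return;             // zero or one element: already sorted
        int p = partition(a, lo, hi);     // pivot is now in its final position p
        quickSort(a, lo, p - 1);
        quickSort(a, p + 1, hi);
    }

    // Lomuto partition with a[hi] as pivot.
    // Invariant: a[lo..i-1] <= pivot and a[i..j-1] > pivot.
    static int partition(int[] a, int lo, int hi) {
        int pivot = a[hi];
        int i = lo;
        for (int j = lo; j < hi; j++) {   // each iteration processes one more element
            if (a[j] <= pivot) {
                swap(a, i, j);
                i++;
            }
        }
        swap(a, i, hi);                   // place the pivot between the two parts
        return i;
    }

    static void swap(int[] a, int i, int j) {
        int t = a[i];
        a[i] = a[j];
        a[j] = t;
    }

    public static void main(String[] args) {
        int[] a = {6, 5, 3, 1, 8, 7, 2, 4};
        quickSort(a);
        System.out.println(Arrays.toString(a)); // prints: [1, 2, 3, 4, 5, 6, 7, 8]
    }
}
```

Picking the last element as pivot makes already-sorted input hit the quadratic worst case. A random or median-of-three pivot is a common mitigation.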

Merge sort


Conceptually, a merge sort works as follows:

- If the list is of length 0 or 1, then it is already sorted. Otherwise:

  - Divide the unsorted list into two sublists of about half the size.

  - Sort each sublist recursively by re-applying the merge sort.

  - Merge the two sublists back into one sorted list.

Merge sort incorporates two main ideas to improve its runtime:

- A small list will take fewer steps to sort than a large list.

- Fewer steps are required to construct a sorted list from two sorted lists than from two unsorted lists. For example, you only have to traverse each list once if they're already sorted (see the merge function below for an example implementation).

Example: Use merge sort to sort a list of integers contained in an array:

Suppose we have an array $A$ with $n$ indices ranging from $A_0$ to $A_{n-1}$. We apply merge sort to $A_0, \ldots, A_{c-1}$ and $A_c, \ldots, A_{n-1}$, where $c$ is the integer part of $n/2$. When the two halves are returned they will have been sorted. They can now be merged together to form a sorted array.

In a simple pseudocode form, the algorithm could look something like this:

```
function merge_sort(m)
    if length(m) ≤ 1
        return m
    var list left, right, result
    var integer middle = length(m) / 2
    for each x in m up to middle
        add x to left
    for each x in m after middle
        add x to right
    left = merge_sort(left)
    right = merge_sort(right)
    result = merge(left, right)
    return result
```

The merge function needs to then merge both the left and right lists. It has several variations; one is shown:

```
function merge(left,right)
    var list result
    while length(left) > 0 or length(right) > 0
        if length(left) > 0 and length(right) > 0
            if first(left) ≤ first(right)
                append first(left) to result
                left = rest(left)
            else
                append first(right) to result
                right = rest(right)
        else if length(left) > 0
            append first(left) to result
            left = rest(left)
        else if length(right) > 0
            append first(right) to result
            right = rest(right)
    end while
    return result
```
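The two pseudocode functions can be sketched in Java as follows, assuming the input is a `List<Integer>`. The class name is illustrative. Instead of repeatedly taking `rest(left)`, which would copy the list each time, this version walks each list with an index, so each merge runs in linear time. Taking from the left list when elements are equal (`<=`) keeps the sort stable, as the `≤` comparison in the pseudocode does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class MergeSort {
    static List<Integer> mergeSort(List<Integer> m) {
        if (m.size() <= 1) return m; // zero or one element: already sorted
        int middle = m.size() / 2;
        List<Integer> left = mergeSort(new ArrayList<>(m.subList(0, middle)));
        List<Integer> right = mergeSort(new ArrayList<>(m.subList(middle, m.size())));
        return merge(left, right);
    }

    // Index-based merge of two sorted lists into a new sorted list.
    static List<Integer> merge(List<Integer> left, List<Integer> right) {
        List<Integer> result = new ArrayList<>(left.size() + right.size());
        int i = 0, j = 0;
        while (i < left.size() && j < right.size()) {
            if (left.get(i) <= right.get(j)) result.add(left.get(i++)); // ties favour left: stable
            else result.add(right.get(j++));
        }
        while (i < left.size()) result.add(left.get(i++));   // copy any leftovers
        while (j < right.size()) result.add(right.get(j++));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(mergeSort(Arrays.asList(6, 5, 3, 1, 8, 7, 2, 4)));
        // prints: [1, 2, 3, 4, 5, 6, 7, 8]
    }
}
```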
