User:Siliconred/sandbox/SSHRC submission

| Title | Uncovering patterns of meaning on Wikipedia using data science, network analysis and machine learning |
|---|---|
| Student | Nathan Drezner |
| Advisers | Profs. Richard So & Andrew Piper |
| Institution | McGill University |

Wikipedia offers a massive crowd-sourced dataset that can be analyzed to uncover how we use language and to better understand questions of representation, conflict, and community standards.

Understanding culture by studying crowd-sourced texts

McGill's .txtLab is a laboratory for cultural analytics that explores computational and quantitative approaches to understanding literature and culture, past and present. Through the .txtLab, I have researched Wikipedia pages about American novels, films, and television shows, drawn from lists of these works curated by Wikipedians: "canons" of literature crowd-sourced by an online community.

What kind of culture do Wikipedians care about?

[edit]-

Average number of edits for novels listed on Wikipedia

-

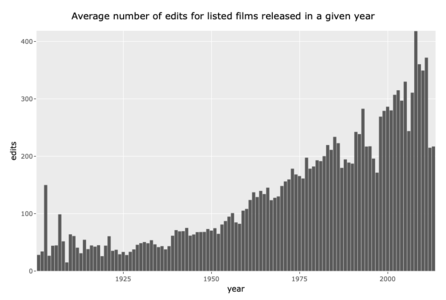

Average number of edits for films listed on Wikipedia

My work has focused on metadata generated from Wikipedia's lists of films and novels, such as the number of edits made to each work's page. Pages for older novels attract more editing activity, which declines for more recent works before levelling off at a consistent rate. Films show the opposite pattern: the Wikipedia community is more interested in recent films, while older films receive far fewer edits. Different media thus inspire very different patterns of collaboration and community interest, a finding with broad implications for understanding which parts of culture Wikipedia's contributors focus on.
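The comparison above rests on averaging edit counts across works grouped by when they were released. A minimal sketch of that aggregation follows; it is an illustration, not the pipeline used in this research. It assumes the metadata has already been collected into records with hypothetical `medium`, `year` and `edits` fields, and it groups works by decade of release before averaging.

```python
from collections import defaultdict
from statistics import mean


def average_edits_by_decade(records):
    """Average edit counts per (medium, decade of release).

    Each record is assumed to be a dict with the hypothetical keys
    'medium' (e.g. "novel" or "film"), 'year' (release year) and
    'edits' (number of edits to the work's Wikipedia page).
    """
    groups = defaultdict(list)
    for rec in records:
        decade = rec["year"] // 10 * 10  # e.g. 1851 -> 1850
        groups[(rec["medium"], decade)].append(rec["edits"])
    # Sort so results read by medium, then chronologically by decade.
    return {key: mean(counts) for key, counts in sorted(groups.items())}


# Toy records, made up purely to show the shape of the output.
toy = [
    {"medium": "novel", "year": 1851, "edits": 900},
    {"medium": "novel", "year": 1855, "edits": 700},
    {"medium": "film", "year": 2012, "edits": 400},
    {"medium": "film", "year": 2015, "edits": 600},
]
print(average_edits_by_decade(toy))
```

Plotting each medium's averages against decade would give curves of the kind shown in the figures; finer bins (single years) or medians instead of means are equally reasonable choices.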

Edit histories as a window into consensus building around culture?

Mapping the language that editors fight over in page edit histories could provide further insight into conflict over language on Wikipedia. The successive revisions of a page can reveal the language at the centre of disagreement and consensus building among collaborating volunteers, and can help answer the more complex questions raised by the network of activity across genres.
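One hypothetical way to operationalize "language being fought over" is to diff consecutive revisions and look for words that are repeatedly removed and reinserted. The sketch below is not the method used in this research: it assumes revision texts have already been retrieved in chronological order (for instance through the MediaWiki API's `prop=revisions` query), tokenizes naively on whitespace, and uses Python's `difflib.SequenceMatcher` to locate changed spans. The scoring rule, `min(additions, removals)` per word, is likewise an illustrative assumption.

```python
from collections import Counter
from difflib import SequenceMatcher


def contested_tokens(revisions):
    """Score words by how often they are both added and removed.

    revisions: list of revision texts (plain strings), oldest first.
    Returns a Counter mapping word -> min(times added, times removed),
    keeping only words that were removed at least once.
    """
    added, removed = Counter(), Counter()
    for old, new in zip(revisions, revisions[1:]):
        a, b = old.lower().split(), new.lower().split()  # naive tokenization
        matcher = SequenceMatcher(a=a, b=b, autojunk=False)
        for tag, i1, i2, j1, j2 in matcher.get_opcodes():
            if tag in ("replace", "delete"):
                removed.update(a[i1:i2])
            if tag in ("replace", "insert"):
                added.update(b[j1:j2])
    # A word that keeps being taken out and put back scores highest.
    return Counter({w: min(added[w], removed[w]) for w in added if removed[w]})


# A toy edit war, invented for illustration.
history = [
    "the novel is a masterpiece",
    "the novel is a failure",
    "the novel is a masterpiece",
    "the novel is a failure",
]
print(contested_tokens(history))
```

Words that are never changed (here "the", "novel") never appear in the result, so the output is naturally restricted to the vocabulary that editors disagree about.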

This research raises a host of new questions: Does conflict over language differ between the genres being written about? Do Wikipedia's "canons" resemble academic canons? How does language shape online consensus?