User:Shlomital

Shlomi Tal is a student of Arabic, Islam, Hebrew linguistics and Persian at Bar-Ilan University. Also a computer cognoscens with an inordinate interest in file formats and multimedia applications, and the interface between the real (analogue) world and the digital one in general; but with zero knowledge of or interest in programming.

Wikipedia Rejoinder

- Everything you read ~~on Wikipedia~~ should be taken with a grain of salt.

There, fixed that for you.

Hebrew Computing Primer

I consider myself an expert when it comes to Hebrew computing. I have not programmed Hebrew support systems, nor am I old enough to have worked with Old Code, yet I’ve encountered all variants of Hebrew encodings and storage methods in the two decades or so I’ve been using computers, and I know the theory behind them and how to deal with them practically. This is a primer for anyone who’s interested in the details.

Specialties

Hebrew computing has two specialties: additional characters (which any character set outside ASCII has) and bidirectionality. For any undertaking of Hebrew support, the additional characters must be provided by an encoding, and the writing mode must be accommodated by an I/O system. I will now elaborate on the peculiarity of the writing mode and storage, as it is the more challenging property.

Writing Hebrew

For English the writing mode is left to right, top to bottom: the pen starts at top left and moves right constantly until the line ends, and then it moves one line down and back to the far left. For Hebrew the writing mode is in general right to left, top to bottom: the pen starts at top right and moves left constantly until the line ends, and then it moves one line down and back to the far right. The “in general” is crucial: any use of digits (0–9) or Western-script text (usually English nowadays) will induce a left to right direction on the same line: the pen starts at the right and moves left, but in the middle of the line it moves from left to right, and after that it goes back to moving right to left. This feature, bidirectionality, complicates the writing of Hebrew (and Arabic) and needs special handling for both input and output.

For input, Hebrew typewriters would force the typist to key in the digits and English text in reverse order. When computers came, there was room for a little more sophistication. Early on in Hebrew computing, the typist could type Hebrew text normally in right to left typing mode, and when he or she had to type digits or English, the characters appeared in what is called “push mode”: the cursor stays on the same position, and the characters flow out of it until reversion to Hebrew. This hasn’t changed over the ages—the most modern systems, like Windows XP and GNOME and Mac OS X, still display the same choice of a general writing mode, and push mode for characters with the other directionality.
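The mixture of directions within one line can be made concrete with a minimal Python sketch. It relies on the bidirectional classes that the Unicode character database assigns to each character, a modern formalisation that the description above does not itself mention; the sample string is purely illustrative.

```python
import unicodedata

def bidi_classes(text):
    """Return (character, Unicode bidirectional class) pairs for text."""
    return [(ch, unicodedata.bidirectional(ch)) for ch in text]

# Hypothetical sample: the Hebrew word shalom, a number and an English word.
# \u05e9\u05dc\u05d5\u05dd = shin, lamed, vav, final mem
sample = "\u05e9\u05dc\u05d5\u05dd 1975 Altair"

for ch, cls in bidi_classes(sample):
    # "R" marks right-to-left letters, "EN" European digits,
    # "L" left-to-right letters and "WS" whitespace.
    print(repr(ch), cls)
```

Hebrew letters come out as right-to-left, while the digits and the Latin letters carry the classes that force the left-to-right run in the middle of the line.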

Storing Hebrew

Storage, however, is a different matter. After typing and saving the file, there had to be a way of storing the Hebrew text. Here there is a sharp demarcation line between old systems and new ones: old systems stored Hebrew text in visual order, while new ones store it in logical order.

Visual order is the order that the eyes see, relative to a general directionality. If the general directionality of the text is left to right, then visually ordered Hebrew is stored as the eyes read it left to right (the opposite of normal typing and reading order). For example, in the word שלום the eye sees ם (final mem!) first, so in visual Hebrew this ם will be stored first, and the ש last. This makes text operations (sorting, editing and linebreaking, to name a few) quite difficult; there is only one reason why this unnatural storage mode was adopted at all, which I will explain later on.

Logical order, in contrast, is the order that the fingers type and the eyes read naturally. No matter the general directionality of the text, in the word שלום the letter ש is always typed and read first. In logical Hebrew, therefore, the letter ש will also be stored first. This is the natural order, making computer handling of Hebrew text as easy and feature-rich as that of English text. However, it does have one snag: that for proper display of the Hebrew text, the display system must be programmed to output the Hebrew letters right to left.
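The difference between the two storage orders can be sketched in a few lines of Python. This is a deliberately simplified illustration that handles only a single pure-Hebrew word under a left to right general directionality; real text with digits or Latin runs needs a full bidirectional algorithm. The function name is hypothetical.

```python
HEBREW_LETTERS = range(0x05D0, 0x05EB)  # alef to tav, final forms included

def logical_to_visual_ltr(word):
    """Convert one pure-Hebrew word from logical to visual (left to right) order.

    Simplification: a single word with no digits or Latin letters, so the
    conversion is a plain reversal.
    """
    if not all(ord(ch) in HEBREW_LETTERS for ch in word):
        raise ValueError("this sketch handles pure-Hebrew words only")
    return word[::-1]

logical = "\u05e9\u05dc\u05d5\u05dd"      # shin, lamed, vav, final mem
visual = logical_to_visual_ltr(logical)

print([f"U+{ord(ch):04X}" for ch in logical])  # shin is stored first
print([f"U+{ord(ch):04X}" for ch in visual])   # final mem is stored first
```

Reversing the visual form gives back the logical one, which is roughly what converters between the two storage methods had to do for the simple cases.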

Now that is the reason why visual Hebrew can be seen in all early Hebrew computing systems. For visual Hebrew to work, an encoding is needed and an input mechanism, but the display mechanism can be left unchanged from that which suffices for English text. Visual Hebrew, in essence, can be called a kludge, a practice of retrofitting—the easy addition of Hebrew support onto systems that have never been designed for Hebrew. Logical order, on the other hand, is a ground-up affair, carried out for new systems, and where resources are more plentiful. So on old Hebrew computers (IBM mainframes, old Unix workstations) and platforms (the Hebrew Internet until the advent of Internet Explorer 5 in 2000) we find visual Hebrew, while in the newer settings (Microsoft Windows 3.0 and onwards, KDE and GNOME, the Hebrew Internet nowadays) logical Hebrew is the norm.

Hebrew storage is a detail as relevant as the encoding. For Cyrillic text, for instance, it would be enough to state the encoding (ISO 8859-5, KOI-8, Windows-1251 etc), but for Hebrew text one has to know its storage method as well as its encoding. Two of the Hebrew encodings come in either storage method.

Encoding

The Hebrew block in Unicode, hexadecimal 0590–05FF, is a small one. The repertoire of Hebrew is not demanding; for day to day use, the 27 letter-forms (א to ת including final forms) are sufficient. Vowel-points (nikkud) are nice to have but not necessary. Cantillation marks for the Hebrew Bible can be left to encyclopaedic character sets like Unicode. The first Hebrew encodings, therefore, were concerned with the mapping of 27 Hebrew characters.

The first attempt for a Hebrew encoding is called Old Code. Old Code is not a single encoding, nor is New Code. Old Code can be a 6-bit BCD encoding used on an IBM mainframe, or it can be 7-bit Hebrew (an ISO 646 variant), and New Code can be DOS Hebrew (CP862), ISO 8859-8, Windows-1255 or Unicode Hebrew. What is meant by Old Code is this: the Hebrew alphabet is mapped instead of Latin letters (usually the lowercase ones) and one additional symbol. New Code means that the Hebrew alphabet has separate positions of its own, not trampling on Latin-script repertoire. In the most common form of Old Code, 7-bit Hebrew (Israeli standard SI 960), the Hebrew alphabet occupied the positions 60–7A, on top of the lowercase Latin letters, with א on the grave accent. On IBM mainframes the arrangement would be the same: ב to ת on top of Latin (lowercase if both cases existed), א on some other character, usually & or @.

Old Code Hebrew required two display modes for the terminal: English mode and Hebrew mode. The switch determined what the lowercase Latin positions represented in display. Hebrew text in English mode would be a jumble of lowercase Latin letters, and lowercase English in Hebrew mode would be a jumble of Hebrew letters, with only the human operator any the wiser to it. That inconvenience was the chief reason for transitioning to New Code forms of Hebrew.
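The two display modes can be imitated with a small Python sketch of 7-bit Old Code. It assumes that the 27 letter-forms sit in positions 60–7A in the same order as in Unicode (א on 60, ת on 7A); the function names are hypothetical.

```python
ALEF, TAV = 0x05D0, 0x05EA   # Unicode positions of the first and last letter
OLD_CODE_BASE = 0x60         # assumed position of alef (the grave accent)

def to_old_code(text):
    """Encode Hebrew letters onto positions 60-7A; pass other ASCII through."""
    out = bytearray()
    for ch in text:
        cp = ord(ch)
        if ALEF <= cp <= TAV:
            out.append(OLD_CODE_BASE + (cp - ALEF))
        elif cp < 0x80:
            out.append(cp)
        else:
            raise ValueError(f"no 7-bit position for U+{cp:04X}")
    return bytes(out)

def show_in_english_mode(data):
    """What the terminal displays with the switch set to English."""
    return data.decode("ascii")

def show_in_hebrew_mode(data):
    """What the terminal displays with the switch set to Hebrew."""
    return "".join(
        chr(ALEF + b - OLD_CODE_BASE) if 0x60 <= b <= 0x7A else chr(b)
        for b in data
    )

shalom = "\u05e9\u05dc\u05d5\u05dd"          # shin, lamed, vav, final mem
stored = to_old_code(shalom)
print(show_in_english_mode(stored))          # a jumble of lowercase Latin
print(show_in_hebrew_mode(b"hello") != "hello")  # English turns into Hebrew letters
```

The stored bytes are identical in both cases; only the terminal switch decides which alphabet the operator sees.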

The ISO 8859 standards had a simple policy of transitioning from 7-bit to 8-bit character sets: just take the non-ASCII characters and set the high bit on them. So ISO 8859-8, the Hebrew part of the standard, had the Hebrew alphabet in positions E0–FA. Microsoft, luckily, followed the lead in its Windows-1255 codepage and encoded the Hebrew letters in the same positions, adding vowel-points in lower positions (I say “luckily” because the Windows codepages aren’t always compatible with the ISO standards—compare ISO 8859-6 and Windows-1256 for Arabic). Hebrew on the Macintosh too follows the ISO ordering of the letters, and adds vowel-points in lower positions, but different from those of Windows-1255. Unix adopted the ISO standard for Hebrew. The only odd man out for New Code Hebrew was DOS, using CP862, where the Hebrew letters (like the ISO standard, without vowel-points) were mapped to positions 80–9A.

Finally, the last word on Hebrew encoding is, of course, the Unicode standard. Positions 05D0–05EA have the letters, and lower positions have vowel-points and cantillation marks, enough for digitising any kind of Hebrew text.
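The positions quoted for the New Code encodings can be checked with Python's built-in codecs. The codec names `cp862`, `iso8859_8` and `cp1255` are Python's own labels for DOS Hebrew, ISO 8859-8 and Windows-1255; the helper names are hypothetical.

```python
ALEF, TAV = "\u05d0", "\u05ea"   # first and last Hebrew letter
SHEVA = "\u05b0"                 # one of the vowel-points (nikkud)

def hebrew_range(codec):
    """Return the byte positions of alef and tav in the given codec."""
    return ALEF.encode(codec)[0], TAV.encode(codec)[0]

def has_vowel_points(codec):
    """True if the codec can encode the sheva vowel-point."""
    try:
        SHEVA.encode(codec)
        return True
    except UnicodeEncodeError:
        return False

for codec in ("cp862", "iso8859_8", "cp1255"):
    first, last = hebrew_range(codec)
    print(f"{codec}: letters {first:02X}-{last:02X}, "
          f"vowel-points: {has_vowel_points(codec)}")

print(f"Unicode: letters {ord(ALEF):04X}-{ord(TAV):04X}")
```

Note that the codec only settles the encoding; whether the bytes are in visual or logical order is not something any of these codecs can tell you.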

History

I said both encoding and storage method had to be taken into account when speaking about Hebrew computing. Here now is the history of Hebrew computing:

- Old Code Hebrew in visual order for mainframes and early workstations. Still in use in legacy environments, much as EBCDIC is, and can also be seen on Israeli teletext (there’s a button on the remote control that switches between the two display modes, and if you switch it to English mode you can see a mess of lowercase Latin letters).

- CP862 Hebrew in visual order for DOS. Visual order because DOS had no bidirectional display mechanism. However, a few applications were programmed with their own bidirectional display mechanism, and stored Hebrew in logical order. So CP862 Hebrew can be either visual or logical. Those who have ever tried to pass between the QText and EinsteinWriter word processors (the former visual, the latter logical) know what a headache it could be.

- ISO 8859-8 Hebrew in visual order for Unix workstations and for the early Hebrew Internet. Various attempts to fit bidirectional support for Hebrew on Unix were carried out, most notably El-Mar’s system for Motif, but, like so many issues in the Unix world, integrated support had to wait until the free software world (GNU/Linux) supplied the goods. The Hebrew Internet was in visual order until Microsoft launched Internet Explorer 5, which featured bidirectional support without regard to the operating system (earlier versions of IE supported Hebrew only on Hebrew Microsoft operating systems). Mozilla came soon afterwards, and nowadays visual Hebrew on the Internet is dead.

- ISO 8859-8 Hebrew in logical order (ISO-8859-8-I at IANA) for Unix and the Internet. It never gained as much acceptance as Windows-1255, as it had no provision for vowel-points, and Unicode has finally made it obsolete.

- Windows CP1255 Hebrew in logical order for Microsoft Windows operating systems beginning with 3.0. This brought logical Hebrew to the mainstream, and despite a few setbacks resulting from changes in the bidirectional algorithm (such as the display of the hyphen-minus), Israeli computer users were made not to settle for less. In the early days of the Internet, the fact that the OS used logical Hebrew while the Web used visual Hebrew was a source of much frustration, as anyone who pasted Hebrew text from a website into Notepad or Word back then knows.

- Unicode Hebrew in logical order as the encoding and storage method to end all encodings and storage methods. All operating systems and web browsers support it today. Windows-1255 still lingers on, but it will be the last to die out; all the others are already obsolete.

For Wikipedia

The article is a summary of my knowledge on Hebrew computing. It is too specialised, and perhaps too long, for a Wikipedia article of its own, or so I feel, but as is the norm in Wikipedia, anyone is free to take it, or bits and pieces of it, and incorporate it in an existing article. Or just save it for reference. Like all my writings on Wikipedia, the GFDL applies.

Here is how to add iTXt (international text, in Unicode UTF-8) chunks to PNG files using pngcrush. Why is this tutorial necessary? Because the current documentation is misleading (I’ve contacted the author about it and he says he’ll fix it in the next version), and it took me a long time, with a lot of trial and error, till I finally found out how to do it. The tutorial is for sparing anyone else the trouble.

Writing a tEXt chunk (limited to ISO Latin-1) is just as the documentation says:

-text b[efore_IDAT]|a[fter_IDAT] "keyword" "text"

The documentation would have you believe that writing an iTXt chunk is the same:

-itxt b[efore_IDAT]|a[fter_IDAT] "keyword" "text"

But that is not the case. To write an iTXt chunk, pngcrush requires four parameters, not two. The correct help would look like this:

-itxt b[efore_IDAT]|a[fter_IDAT] "keyword" "language code" "translated keyword" "text"

Only the keyword is required, as in tEXt, but four values must be passed to pngcrush, otherwise the program terminates without doing anything (which is what led me to believe the -itxt option was nonfunctional). In other words, the -itxt flag must be followed by either b or a and then by four pairs of quotation marks, the first of which must contain characters, the others allowed to be empty.

After discovering the liveliness of the -itxt function, the next task for me was to find out how to write the UTF-8 strings properly from the Win32 console window. This probably isn’t an issue for Linux users, so the section ends here if you are one.

I tried different modes for the console window: cmd starts it in codepage mode, cmd /u starts it in Unicode (more accurately UTF-16LE) mode; when opened to display in a raster font, only one MS-DOS codepage is supported (CP437 on an American WinXP, CP850 on a West European, etc), while the TrueType font (Lucida Console) can display a greater character set, such as Greek and Cyrillic (but not Hebrew or Arabic); once launched, chcp 65001 switches the console codepage to UTF-8, and others are also available (437, 850 and the rest, as well as 1252 for Windows West-European, and the other Microsoft Windows codepages). I had plenty of variations to tinker with.

The first input method I tried was to type the text in the command line window. The result, upon examination of the output file with the hexadecimal editor, was question marks where the Unicode text should be. The second method I tried was writing the text in a text editor and pasting from there into the command line window. Again, the result was question marks no matter what I tried. Finally, I decided to try batch files. I wrote a batch file with the entire pngcrush command, saved it as UTF-8, removed the Byte Order Mark and then ran it.

The first result wasn’t what I wanted, but it was different from all the previous errors: not question marks but characters in a wrong encoding. That was under CP437. I switched to 65001 (UTF-8), but the batch file then wouldn’t execute at all. Lastly, I did chcp 1252 (Windows West-European), and it worked. All characters were as they should be, and reading the file with a viewer in Unicode mode confirmed this.

So the steps to writing an international text metadata chunk with pngcrush are these: 1) Write the entire pngcrush command, with all its options and parameters, into a batch file (extension *.bat) and save as UTF-8. Remove the Byte Order Mark if any. 2) Launch the console window in any mode (ie with or without /u), in any font (raster or TTF). Only the codepage matters. 3) Switch the codepage to Windows West-European by typing chcp 1252. 4) Run the batch file.
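Step 1 can be automated with a short Python sketch that assembles the command with its four quoted values and saves it as UTF-8 without a Byte Order Mark. The `-itxt` syntax is the one given above, and the input and output file names follow pngcrush's usual order; the function names, file names and sample text are hypothetical, and the batch file still has to be run under chcp 1252 as described.

```python
from pathlib import Path

def build_itxt_command(position, keyword, language, translated_keyword,
                       text, infile, outfile):
    """Build a pngcrush command line with the four values -itxt requires."""
    if position not in ("b", "a"):
        raise ValueError("position must be 'b' (before IDAT) or 'a' (after IDAT)")
    if not keyword:
        raise ValueError("the keyword must not be empty")
    values = (keyword, language, translated_keyword, text)
    if any('"' in value for value in values):
        raise ValueError("this sketch does not escape double quotes")
    quoted = " ".join(f'"{value}"' for value in values)
    return f'pngcrush -itxt {position} {quoted} "{infile}" "{outfile}"'

def encode_batch(command):
    """Encode as UTF-8; Python's 'utf-8' codec writes no Byte Order Mark."""
    return (command + "\r\n").encode("utf-8")

def write_batch_file(path, command):
    """Save the command as a batch file ready to run from the console."""
    Path(path).write_bytes(encode_batch(command))

if __name__ == "__main__":
    command = build_itxt_command("b", "Title", "he", "", "\u05e9\u05dc\u05d5\u05dd",
                                 "in.png", "out.png")
    write_batch_file("add_itxt.bat", command)
```

Empty strings are passed for the optional values, so all four pairs of quotation marks are always present. Percent signs in the text would still be interpreted by the batch processor and are not handled here.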

The result is a totally correct PNG file with perfect iTXt chunks. They may not be very useful now, but they will be in the not so far future. A graphical interface for manipulating PNG iTXt chunks would be far preferable to many, of course, but even this somewhat tedious use of calling pngcrush from a batch file in a command line window is far better than the hackish and error-prone way of writing the chunks, after their calculated lengths, into the PNG file with a hex editor and then fixing the CRCs. Writing a PNG file involves a lot of procedure, and only computers can be relied upon to follow procedure without error.

(Note, added 24 Jun 2006: you can also use Jason Summers’s TweakPNG to write out, and then import, any PNG chunk you want, including iTXt. However, although this option takes care of the chunk length field and the CRC, you still have to do the internals of the chunk, such as the null separators, by hand with a hex editor, so it isn’t ideal. TweakPNG as of version 1.2.1 can’t manipulate iTXt.)
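For the curious, the internals that would otherwise have to be done by hand (the length field, the null separators and the CRC) can be sketched in Python. The layout follows the PNG specification's description of an uncompressed iTXt chunk; the function name is hypothetical, and the sketch only builds the chunk bytes without inserting them into a file.

```python
import struct
import zlib

def build_itxt_chunk(keyword, text, language="", translated_keyword=""):
    """Build a complete uncompressed iTXt chunk: length, type, data, CRC."""
    kw = keyword.encode("latin-1")
    if not 1 <= len(kw) <= 79:
        raise ValueError("the keyword must be 1 to 79 bytes long")
    data = (
        kw + b"\x00"                                   # keyword, null separator
        + b"\x00"                                      # compression flag: 0 = none
        + b"\x00"                                      # compression method
        + language.encode("ascii") + b"\x00"           # language tag, null separator
        + translated_keyword.encode("utf-8") + b"\x00" # translated keyword, null
        + text.encode("utf-8")                         # the text itself, UTF-8
    )
    chunk_type = b"iTXt"
    # The CRC covers the chunk type and the data, but not the length field.
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)

chunk = build_itxt_chunk("Title", "\u05e9\u05dc\u05d5\u05dd")
print(chunk.hex(" "))
```

Even with only the keyword and the text filled in, the three null separators and the two compression bytes are all present, which mirrors the four values pngcrush insists on.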

History Our Teacher?

In the academic year of 2003–4, I studied History in addition to Arabic at Beit Berl College. When, for various reasons, I left Beit Berl for Bar-Ilan University, I decided not to continue with History as my minor. Why is that? I still like history as a subject, as a hobby, but my year of studying it at Beit Berl convinced me I could never persevere in learning it professionally. For the mind that craves certainty, the study of history can only leave it in torment; the potential to go astray into falsehood, and to cling to it thinking it is truth, in the field of history is huge.

The study of history attempts to find out the truth about the distant past, which is fraught with gaping holes and misleading signs; but what made me aware of the inherent fuzziness of history is the fact that even recent history suffers from the same flaws and pitfalls. Even recent history, with its wealth of documentation, and often with the people concerned still among us, is liable to human distortion and selective bias. I will now give two accounts that illustrate my point.

Godwin’s Law

Mike Godwin is among us as of this writing, and he formulated his famous law in 1990, live on the Internet. Godwin’s Law states:

As an online discussion grows longer, the probability of a comparison involving Nazis or Hitler approaches one.

This is a “law” in the sense of the laws of physics: not really laws, as in rules made by a state and enforced by its police, but observations. He observed the tendency of those comparisons to get more probable in the course of online discussion, and then decided it was such a regular phenomenon that it could be called a law, much the same as Newton’s laws.

But of course, if you’ve browsed or participated in online forums any fair length of time (not recommended, but too late for me now, I already done that…), you know of “Godwin’s Law”, not in this version, not as an observation, but as a law in the other sense: a rule that people have to follow, a prescription to be honoured. People talk of “violations of Godwin’s Law”, or say things like “I invoke Godwin’s Law” or “Must you Godwin the thread so soon?”, and that doesn’t make sense for a mere observation. If you ask them, in the newbie way, what Godwin’s Law is, the response will usually be:

Godwin’s Law states that as soon as you make a comparison involving Nazis or Hitler, the discussion has ended.

Gentlemen, Godwin’s Law was formulated within Internet history, and is there in its original form for all to see; and for the ultimate doubter, there is still the opportunity to verify with the originator. The usual historical impediments of “holes in the historical record” and lack of eyewitnesses therefore do not hold. Yet that has not prevented Godwin’s Law from being distorted, and that distortion from being disseminated, such that so many people recognise Godwin’s Law only in that distorted form, and of the original form know they not. Strike one for the perils of historical research.

Personal computing history

In this example the problem is not distortion but selective bias; not outright falsehood, yet the omission of key facts makes for a biased picture, a half-truth. Again, the example is striking because all the usual impediments for historical research do not apply: for example, the inventor of the personal computer, Ed Roberts, can still be asked questions about the Altair if needed.

Of the timelines of personal computing, most I have found go like this: MITS Altair in 1975, Apple’s first computers in 1977, the IBM PC in 1981, the Macintosh in 1984, Windows 3.0 in 1990, Windows 95, up till you get to the present. Those are the important milestones, the formative events of personal computing, according to a great many histories, from the PBS’s Triumph of the Nerds to short summaries found on small websites.

Is this timeline false? No. I have no argument that all those were milestones in the history of personal computing. However, the timeline suffers from the flaw of omission: namely, where are all the personal computers that introduced me, and countless others, to computing and shaped my knowledge of computers hence? Where is the Commodore 64, the ZX Spectrum, the Amiga and the Atari ST, to name just a few examples? The Macintosh is a milestone because it popularised the Graphical User Interface? No argument here; but is it so hard to acknowledge, for example, that the Amiga popularised the use of computers for multimedia creation? If it is trumpeted that the Apple II with VisiCalc was the starting-point for many businessmen in computing, why is there this tendency to hush up the fact that the Commodore 64 introduced thousands upon thousands of schoolkids to computing? This can only be selective bias.

So here we run into another problem with the study of history: you can have all the facts, and you can have all those facts absolutely right, but in the end, you as the historian choose which facts to include in your study and which to omit, according to the inclination of your heart. Trouble is, your reader doesn’t necessarily know which way your heart is inclined, therefore is bound to take your biased research as unbiased truth.

Summary

The pitfalls of distortion and selective bias in historical research exist even today, in the age of copious documentation and ample verifiability; how much greater, then, is the danger of historical error when researching the more distant past, in which information is scarce and verifiability usually nonexistent? Some people are actually thrilled by all this uncertainty; if you are one of those, you may have a career as a historian ahead of you. I shall have to content myself with supplying you with my distortions and biased takes.

GIGO: Recalculation vs. Resampling

This is a draft about digital sampling.

VIC-II Palette

All values are according to the sRGB colour space.

Original 8-bit, calculated by Philip “Pepto” Timmermann:

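| Index | R | G | B |
|---|---|---|---|
| 0 | 00 | 00 | 00 |
| 1 | FF | FF | FF |
| 2 | 68 | 37 | 2B |
| 3 | 70 | A4 | B2 |
| 4 | 6F | 3D | 86 |
| 5 | 58 | 8D | 43 |
| 6 | 35 | 28 | 79 |
| 7 | B8 | C7 | 6F |
| 8 | 6F | 4F | 25 |
| 9 | 43 | 39 | 00 |
| A | 9A | 67 | 59 |
| B | 44 | 44 | 44 |
| C | 6C | 6C | 6C |
| D | 9A | D2 | 84 |
| E | 6C | 5E | B5 |
| F | 95 | 95 | 95 |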

Extended to 16 bits. I arrived at these by doing the YUV to RGB and gamma correction with 16-bit values:

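| Index | R | G | B |
|---|---|---|---|
| 0 | 0000 | 0000 | 0000 |
| 1 | FFFF | FFFF | FFFF |
| 2 | 67F2 | 37B6 | 2B4D |
| 3 | 7084 | A41A | B283 |
| 4 | 6FBA | 3D0C | 85F7 |
| 5 | 588E | 8D08 | 4380 |
| 6 | 34FA | 2880 | 79A4 |
| 7 | B89C | C765 | 6F4C |
| 8 | 6FBA | 4F93 | 257D |
| 9 | 4324 | 3997 | 0019 |
| A | 9A24 | 6702 | 5990 |
| B | 4444 | 4444 | 4444 |
| C | 6C39 | 6C39 | 6C39 |
| D | 9AF6 | D27D | 844C |
| E | 6C39 | 5E87 | B557 |
| F | 9611 | 9611 | 9611 |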

6-bit values. Again, I recalculated rather than resampled:

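| Index | R | G | B |
|---|---|---|---|
| 0 | 00 | 00 | 00 |
| 1 | 3F | 3F | 3F |
| 2 | 1A | 0D | 0A |
| 3 | 1B | 29 | 2C |
| 4 | 1B | 0F | 21 |
| 5 | 16 | 22 | 10 |
| 6 | 0D | 0A | 1E |
| 7 | 2D | 32 | 1B |
| 8 | 1B | 14 | 09 |
| 9 | 10 | 0E | 00 |
| A | 26 | 19 | 16 |
| B | 11 | 11 | 11 |
| C | 1B | 1B | 1B |
| D | 26 | 34 | 20 |
| E | 1B | 17 | 2D |
| F | 26 | 26 | 26 |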

The 6-bit values upsampled to 8 bits, using the linear scaling equation:

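| Index | R | G | B |
|---|---|---|---|
| 0 | 00 | 00 | 00 |
| 1 | FF | FF | FF |
| 2 | 69 | 35 | 28 |
| 3 | 6D | A6 | B2 |
| 4 | 6D | 3D | 86 |
| 5 | 59 | 8A | 41 |
| 6 | 35 | 28 | 79 |
| 7 | B6 | CA | 6D |
| 8 | 6D | 51 | 24 |
| 9 | 41 | 39 | 00 |
| A | 9A | 65 | 59 |
| B | 45 | 45 | 45 |
| C | 6D | 6D | 6D |
| D | 9A | D2 | 82 |
| E | 6D | 5D | B6 |
| F | 9A | 9A | 9A |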

5-bit values, recalculated:

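| Index | R | G | B |
|---|---|---|---|
| 0 | 00 | 00 | 00 |
| 1 | 1F | 1F | 1F |
| 2 | 0D | 07 | 05 |
| 3 | 0E | 14 | 16 |
| 4 | 0E | 08 | 11 |
| 5 | 0B | 11 | 08 |
| 6 | 07 | 05 | 0F |
| 7 | 16 | 18 | 0E |
| 8 | 0E | 0A | 05 |
| 9 | 09 | 07 | 00 |
| A | 13 | 0D | 0B |
| B | 0B | 0B | 0B |
| C | 0E | 0E | 0E |
| D | 13 | 19 | 10 |
| E | 0E | 0C | 16 |
| F | 12 | 12 | 12 |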

5-bit values, linearly scaled to 8 bits:

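| Index | R | G | B |
|---|---|---|---|
| 0 | 00 | 00 | 00 |
| 1 | FF | FF | FF |
| 2 | 6B | 3A | 29 |
| 3 | 73 | A5 | B5 |
| 4 | 73 | 42 | 8C |
| 5 | 5A | 8C | 42 |
| 6 | 3A | 29 | 84 |
| 7 | B5 | C5 | 73 |
| 8 | 73 | 52 | 29 |
| 9 | 4A | 3A | 00 |
| A | 9C | 6B | 5A |
| B | 5A | 5A | 5A |
| C | 73 | 73 | 73 |
| D | 9C | CE | 84 |
| E | 73 | 63 | B5 |
| F | 94 | 94 | 94 |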

Examples

From 8 bits per channel to 16 bits per channel, one can arrive either through recalculation, as I’ve done above, or through resampling, which is never as accurate, and is a necessity only if rescanning from source is impossible.

Possible resampling algorithms:

- Linear scaling

- Left-bit replication

- Left-shift with zero-fill

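Linear scaling is done according to the following equation:

$$\text{output} = \left\lfloor \frac{\text{input} \times \text{max}_{\text{out}}}{\text{max}_{\text{in}}} + 0.5 \right\rfloor$$

where $\text{max}_{\text{out}}$ = maximum value for the output and $\text{max}_{\text{in}}$ = maximum value for the input, for example 255 and 63 respectively if upsampling from 6 bits to 8 bits is desired.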

I have taken index #7 (“Yellow”) for the demonstration. The original 8-bit values are:

- Hexadecimal: B8 C7 6F
- Binary: 10111000 11000111 01101111
- Decimal: 184 199 111

Recalculation yields the following values:

- Hexadecimal: B89C C765 6F4C
- Binary: 1011100010011100 1100011101100101 0110111101001100
- Decimal: 47260 51045 28492

Linear scaling yields the following values:

- Hexadecimal: B8B8 C7C7 6F6F
- Binary: 1011100010111000 1100011111000111 0110111101101111
- Decimal: 47288 51143 28527

Left-shift with zero-fill yields the following values:

- Hexadecimal: B800 C700 6F00
- Binary: 1011100000000000 1100011100000000 0110111100000000
- Decimal: 47104 50944 28416

In order of accuracy, recalculation is the best, linear scaling the next best, left-bit replication nearly as good and left-shift with zero-fill poorest.
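The three resampling algorithms can be sketched in Python as follows. Linear scaling is assumed here to round to the nearest integer, which agrees with the upsampled palette values listed earlier; the function names are hypothetical. Recalculation is not included, since it needs the original analogue parameters rather than the 8-bit samples.

```python
def linear_scale(value, in_bits, out_bits):
    """Scale by max_out / max_in, rounding to the nearest integer."""
    max_in = (1 << in_bits) - 1
    max_out = (1 << out_bits) - 1
    # Integer form of floor(value * max_out / max_in + 0.5).
    return (2 * value * max_out + max_in) // (2 * max_in)

def left_bit_replication(value, in_bits, out_bits):
    """Fill the output by repeating the input bits from the left."""
    result = 0
    remaining = out_bits
    while remaining > 0:
        if remaining >= in_bits:
            result = (result << in_bits) | value
            remaining -= in_bits
        else:
            # Only the leftmost bits of the input still fit.
            result = (result << remaining) | (value >> (in_bits - remaining))
            remaining = 0
    return result

def left_shift_zero_fill(value, in_bits, out_bits):
    """Shift the input to the top of the output and pad with zeros."""
    return value << (out_bits - in_bits)

yellow = (0xB8, 0xC7, 0x6F)  # index #7 of the 8-bit palette
for name, func in (("linear scaling", linear_scale),
                   ("left-bit replication", left_bit_replication),
                   ("left-shift with zero-fill", left_shift_zero_fill)):
    print(name, " ".join(f"{func(v, 8, 16):04X}" for v in yellow))
```

When going from 8 to 16 bits, linear scaling and left-bit replication happen to give the same result, because 65535 / 255 is exactly 257; the two can differ slightly for other pairs of bit depths.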

Caveats

All this applies only if the source is analogue or modelled on the analogue (such as vector graphics). If the source is digital, then there is no recalculation to do; linear scaling is usually the best.

Example: the 2-bit values of the CGA and EGA palettes are 0 (zero), 1 (one third), 2 (two thirds) and 3 (full), and there is nothing to do except rescale them to the bit depth in use. The values 00, 55, AA and FF add no more accuracy over the original 0, 1, 2 and 3, and are there only for compatibility with the modern 8-bit environment.

Another caveat: the best algorithm is often to be determined on a case-by-case basis, thus lies outside the bounds of simple automation. Consider the following values:

- Hexadecimal: 00 80 FF
- Binary: 00000000 10000000 11111111
- Decimal: 0 128 255

Because 80 is here to signify “half” and FF is here to signify “full”, neither linear scaling nor left-shift with zero-fill makes a perfect conversion. With linear scaling, the results are these:

- Hexadecimal: 0000 8080 FFFF
- Binary: 0000000000000000 1000000010000000 1111111111111111
- Decimal: 0 32896 65535

With left-shift and zero-fill, the results are these:

- Hexadecimal: 0000 8000 FF00
- Binary: 0000000000000000 1000000000000000 1111111100000000
- Decimal: 0 32768 65280

The desired values are, of course, 0000, 8000 and FFFF. It is not possible for a computer to do this properly without a little extra intelligence given by the programmer.
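One hypothetical way of supplying that extra intelligence is a table of hints that overrides the automatic conversion for values with a known meaning. The sketch below is only an illustration of the idea; the function name and the hint table are assumptions, and deciding which values deserve a hint remains a human judgement.

```python
def widen_with_hints(value, hints=None):
    """Convert an 8-bit sample to 16 bits, honouring per-value hints.

    hints maps specific 8-bit values to the 16-bit values they are meant
    to signify; everything else falls back to linear scaling, which for
    8 to 16 bits is multiplication by 257 (65535 / 255).
    """
    if hints and value in hints:
        return hints[value]
    return value * 257

# The programmer knows that 80 signifies "half" in this particular source.
hints = {0x80: 0x8000}

for sample in (0x00, 0x80, 0xFF):
    print(f"{sample:02X} -> {widen_with_hints(sample, hints):04X}")
```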

Conversion to Greyscale vs Desaturation

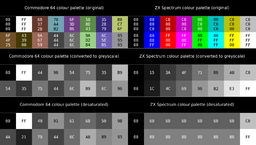

Anyone who’s spent some time tinkering with a paint program knows conversion to greyscale and desaturation yield different results, even though both end up transforming a colour image to an image with shades of grey only. With photographic images, the differences tend to be localised, hence often take a keen eye to spot. I’ve created an image demonstrating the difference much more vividly, using two old computer palettes, the Commodore 64 and ZX Spectrum palettes, which, because of their different bases, show the opposite effect for each transformation.

First some background about the two palettes:

Commodore 64

The palette of the VIC-II chip was fixed with 16 colours, calculated from YUV parameters to reasonable accuracy by Philip Timmermann [1]. (YUV is for PAL systems; parameters from YIQ, for the American NTSC, probably give close results.) The usual output device for the C64 was a composite monitor, though it could use a TV set.

For compatibility with monochrome monitors, the palette was designed to have 9 luma (Y) values.

ZX Spectrum

The minimalistic design of the ZX Spectrum meant it sent the image (6144 bytes for the display file, 768 bytes for the attributes) from the Uncommitted Logic Array to the PAL encoder to the TV set. This British computer was wedded to the PAL television system, while for NTSC systems another version of the computer had to be used (the Timex Sinclair). The Spectrum was intended to be used with a normal, locally available TV set, something which looks so strange to youngsters today with their newfangled LCD monitors with DVI output (“My walking stick, please, Humphrey”, say I).

I have yet to encounter a precise calculation of the intensities of the ZX Spectrum palette. I doubt there could be any, because the Spectrum was dependent on the TV set and its fluctuations. Most values I’ve seen are somewhere around 192 (C0 in hexadecimal) in sRGB, so that’s what I’ve chosen here; more information would be welcome. At any rate, the palette is distinct enough not to be confused with that of the 16-colour Microsoft Windows minimal palette, not to mention IBM16 (the CGA text mode and EGA low-res graphic modes 16-colour RGBI palette that still appears on the BIOS screen; one of the few remaining legacies of the 1981 IBM PC).

Like the Commodore 64, the ZX Spectrum makes accommodation for monochrome monitors. The palette ordering, IGRB (Intensity, Green, Red, Blue), is also an ordering according to luminance, as I found when I used mtPaint to sort the 8 basic RGB colours according to luminance; and the result of conversion to greyscale finally cements the case.

Conversion

I used the GIMP (2.2.10) to do both operations. Different applications may use different constants for conversion to greyscale or desaturation, so there can be slight variation. In particular, conversion to greyscale using ppmtopgm of the NetPBM suite sometimes yields values differing from those of the GIMP by 1, while desaturation using ppmbrighten -s -100 can yield a very different result (in the desaturation of the ZX Spectrum palette, ppmbrighten gives the grey values 00, C0 and FF, and only them).

For the Commodore 64 values, there is a discrepancy between the originally grey shade #959595 and value 96 in the two converted versions. I expect the reason for this is a rounding error (take a short look at Timmermann’s page to see all the decimals involved in his calculations). I suspect the value for index 15 in the VIC-II palette is really #969696, especially when I compare to the result of my own 16-bit recalculation (9611 9611 9611), but I’ll defer to Timmermann’s hard work, on which I’m a mere piggybacker. Conversion of the Commodore 64’s colour palette to greyscale yields 12 values, not 9 as it should be, with near-duplicates (such as #353535 and #363636); I’ve normalised them to values based on a recalculation of the Y parameter, followed by applying the 0.88 gamma.

Results: Commodore 64

Conversion to greyscale leads to 9 values, the Y values of the palette, just as the designers provided for those who used a monochrome monitor.

Desaturation leads to 16 distinct shades of grey. The permutations of Y (luma), U (blue-yellow axis) and V (red-cyan axis) produce different saturation values. Here is the explanation why, in a photographic image where two colours have similar luma values, but differ in their U and V axes, conversion to greyscale will yield similar shades of grey while desaturation will produce two distinct shades of grey.

Results: ZX Spectrum

Conversion to greyscale leads to 15 (7×2 plus the same for black in both intensities) distinct shades of grey in increasing luminosity, for use on monochrome monitors. “The ZX Spectrum has eight colours to play with, and these are given numbers between 0 and 7. Although the colours look in random order, they do in fact give decreasing shades of grey on a monochrome TV” (ZX Spectrum Introduction, chapter 7).

Desaturation leads to 5 shades of grey, with values 00, 60, 80, C0 and FF. This is a result I’ve found for other RGBI palettes, such as the above mentioned minimal Windows palette (values 00, 40, 80, C0 and FF after desaturation) and IBM16 (values 00, 55, AA and FF after desaturation), as well as other palettes with uniform value distribution (such as the 64-colour hi-res EGA palette: 00, 2A, 55, 80, AA, D4 and FF).
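The two ZX Spectrum results can be reproduced with a short Python sketch. It assumes that conversion to greyscale is a weighted sum of the channels (the Rec. 601 weights are used here; the GIMP's exact constants may differ slightly) and that desaturation takes the midpoint between the largest and smallest channel, with halves rounded up. The palette uses C0 for normal and FF for bright intensity, as chosen above; all function names are hypothetical.

```python
def to_greyscale(r, g, b):
    """Weighted sum of the channels (assumed Rec. 601 luma weights)."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)

def desaturate(r, g, b):
    """Midpoint of the largest and smallest channel, halves rounded up."""
    return (max(r, g, b) + min(r, g, b) + 1) // 2

def spectrum_palette(normal=0xC0, bright=0xFF):
    """The 16 ZX Spectrum colours as (R, G, B); colour number bits are G R B."""
    palette = []
    for level in (normal, bright):
        for number in range(8):
            palette.append((
                level if number & 2 else 0,  # red
                level if number & 4 else 0,  # green
                level if number & 1 else 0,  # blue
            ))
    return palette

palette = spectrum_palette()
grey = sorted({to_greyscale(*colour) for colour in palette})
desat = sorted({desaturate(*colour) for colour in palette})

print(len(grey), "greyscale values:", " ".join(f"{v:02X}" for v in grey))
print(len(desat), "desaturated values:", " ".join(f"{v:02X}" for v in desat))
```

Under these assumptions the greyscale conversion keeps all 15 colours apart and rises with the colour number, while desaturation collapses them because only the extreme channels count and every channel is either zero or at full level for its intensity.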

Conclusions

The differences in the results reflect the distinction between methods of colour derivation: perceptual vs digitally uniform. Palettes based on YUV, HSL and other systems move according to axes that represent the distinctions the human eye is accustomed to perceiving; RGBI and colour-cube palettes like EGA64 and the old 216-colour web-safe palette are designed to minimise computation. In the former, shades of grey convey only a little of the information (hence the distinctiveness of traffic light colours, unless you’re unfortunate enough to suffer from red-green colour blindness), while in the latter, the uniformity results in the identity of saturation values.

Obviously, the best tool for controlling conversion to shades of grey is still the Channel Mixer of your paint program, if it’s got one and if you’ve got the hang of using it. If not, give desaturation a try when the “Convert to Greyscale” function yields a less than satisfactory result. You may be pleasantly surprised.

What’s left of the original IBM PC?

The original IBM PC came out in 1981. More than 25 years later, which in the computing industry is comparable to hundreds of millions of years on the geological timescale, I embark on the quest of digital palaeontology, listing which of its features can still be found on modern PC’s. Let me now taste the IBM[1]:

- Intel x86-family CPU running at 4.77 MHz

- Motherboard with slots for cards

- IBM PC keyboard

- Serial (COM) ports, according to the RS-232 standard

- Floppy disk: 5.25″, later also 3.5″

- Digital (TTL) monitor

- MDA or CGA card for the display

- BIOS

- PC speaker

- Microsoft’s DOS as the operating system, with its 640 KiB limitation

Now let’s compare to the present (September 2007 as of this writing):

CPU

The x86 family of Intel processors is still with us, with all its provisions for compatibility (real mode vs protected mode), and more to come with the 64-bit extensions (long mode and all the rest of that confusing stuff). The original mode of the IBM PC’s 8088, real mode, is active for the brief moments of the POST screens, then the modern operating system (WinXP or Linux) switches it to protected mode.

Interestingly, the x86 architecture has now drifted to its former arch-enemy, the Apple Macintosh.

Verdict: preserved under piles of updates.

Motherboard

Still with us too, though the specs have changed greatly. ISA is dead; even its successor, PCI, has been upgraded.

Verdict: preserved as an infrastructure.

IBM PC keyboard

I don’t know how much this can be considered a PC legacy, because it’s essentially a fossil from an even earlier geological age (QWERTY is more than 125 years old).

The few glaring mistakes in key placement (return and left shift) have since been corrected, bringing the keyboard in line with the rest. The function keys are the same, but with two more added (F11 and F12). As for the clicky feel, that now depends on the keyboard manufacturer. A few more keys have been added, notably the Windows key and the menu key.

Verdict: not applicable.

RS-232 serial ports

Moribund, with most new computers being sold without any. USB has taken the place of RS-232, just as it has taken the place of lots of other, later port standards (the keyboard DIN connector, the PS/2 keyboard and mouse connector, the parallel printer port, to name a few).

Verdict: fast approaching extinction.

Floppy disk

The 5.25″ disk drive is long gone. New PC’s still come with the 3.5″, whose only purpose is for disk booting in case of emergency; all PC’s now support booting from CD-ROM, so you have to be really desperate to have to use the floppy. Its capacity (1.44 MB) is laughable today; for file transfers through Sneakernet, the USB flash drive has taken its place.

Verdict: approaching extinction, delayed only by inertia.

Digital monitor

The MDA and CGA (and EGA, but that’s later) CRT monitors communicated with the graphics card digitally, in contrast to the later VGA analogue standard and monitors based on it. After over a decade of analogue monitors, today’s LCDs are back to communicating with the graphics card digitally, according to the DVI standard. However, this is a case of “what’s old is new again”, not a survival from the past.

Verdict: extinct; reincarnated, if you want to think of it that way.

Display adapter

The MDA and the CGA both have an enduring legacy that can still be seen in the BIOS and POST screens, as well as (to a lesser extent) command-line windows. From the MDA we still have the character set that was burnt into it, CP437. The CGA had a 16-colour palette that just won’t die, living on in the BIOS and POST screens, in command-line windows such as the GNOME Terminal (but not in either xterm or WinXP’s console window at default) and in the colours for text in the Linux framebuffer mode. The two legacies have become iconic, standing for the bare-bones, pre-GUI PC. Also notable is the 80×25 mode of the text mode screen (full screen or windowed), itself a legacy from punched-card days.

Verdict: preserved, and set to be a cultural icon.

BIOS

The original IBM PC BIOS provided essential startup code for the machine, a bid by IBM to retain its otherwise outsourced creation, until Compaq and others managed to outwit them by reverse engineering the BIOS. Today the BIOS provides for basic startup, initial PnP (particularly for today’s USB keyboards) and power management.

Verdict: recognisable in its changed role.

PC speaker

This ranks with CP437 and the CGA 16-colour palette as a survival and cultural icon. To be sure, many modern PC’s have a piezo speaker that’s a far cry from the loudspeaker that the original IBM PC sported, but they all come with one unless the user performs a speakerectomy operation. It beeps during the POST, as well as in the modern operating system in cases where the sound card isn’t configured to be used with a particular application or is missing entirely.

Verdict: preserved, and set to be a cultural icon.

DOS

I’ve lost count of “DOS is dead” announcements; the death of DOS isn’t an event, it’s a process, of slowly phasing out support for it: Windows 3.1 was the first to make use of the Intel 80386 features outside real mode; Windows 9x started with DOS but used protected mode in their native, 32-bit applications; Windows Me (remember that flop?) did away with the ability to go into DOS mode from it; Windows XP no longer has a DOS basis, only a Virtual DOS Machine for compatibility, which doesn’t always work well; and the 64-bit versions of Windows finally leave DOS entirely, with no support for running DOS applications, thus being on parity with Linux, which has long required emulators in order to run DOS apps.

It should be noted that command-line windows and DOS are not synonymous. The command-line window of 64-bit Windows may look like a DOS prompt, but the only applications it runs are Windows console applications. There’s more to DOS than a black, 80×25 screen with CP437 characters in the CGA 16-colour palette.

The two most irksome limitations of DOS were the 640 KiB memory limit and the 8.3 filename restriction. Contrary to the quote misattributed to Bill Gates, the memory limit was not the result of a failure to think ahead: Gates did think ahead, insisting that IBM should provide for memory expansion, as opposed to their initial plans of giving the IBM PC 16 KiB of RAM with room for expanding to 64 KiB. The trouble was that no-one imagined the installed base would get so large and therefore endure for so many years. The first limitation has effectively died with the decline of the use of DOS apps; the second can still be seen when booting from DOS to a FAT32 partition, but nowadays both are rare (you’re better off booting from a Linux rescue disk, and FAT32 has given way to NTFS).

Verdict: fast approaching a state of being alive only in emulators.

Conclusion

Up until about 1995, people around me would talk of an “IBM PC Compatible”, as opposed to the older, non-IBM computers. Now, with the demise of them all (except the Mac, which itself got seriously dented by the standards unification Borg), no-one remembers the original IBM PC (heck, kids today don’t know what a command line is!). “Personal computer” is the longest title used today, but usually it’s just “computer”. The origins are of no general interest.

Only the POST screens (and the BIOS, if a user is technical enough to go into its config screen) and the beeping of the PC speaker are there as perceptible reminders of the ancient past, when BASIC interpreters roamed the earth…

“Empowerment” of RGB

The use of three primaries is derived from the biological basis, ie the sensitivity of the human eye to wavelengths corresponding to red, green and blue. It is at odds with the computing standard of aligning all values to powers of two (because multiples of 3 are never powers of 2). Even with 1-bit RGB (3 bits per pixel), padding to the next power of two (4 bits) leaves one bit over. What are the options for dealing with unused bits?

- Leave the bits unused

- Use the bits for a special purpose

- Forgo allocating the same number of bits for each channel

- Subset the number of available colours into a power of two by using an index to a palette

Option #1 is to pad the bits in memory, sacrificing storage for performance. The colour khaki (from Persian xâk خاك, meaning “dust”[2]), for example, has the value #C3B091 (in hex triplets), but in memory will be read as C3 B0 91 00. Since the performance penalty for misalignment in memory can be great, this makes sense for the in-memory representation; in computer files, it does not, hence optimisation for storage—24 bits per pixel are kept so, not padded to 32 bits.
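The padding in option #1 can be sketched in Python as follows. This is only an illustration: the function name `pad_rgb` and the 640×480 image size are made up for the example, and the padding byte is placed last simply to match the C3 B0 91 00 order given above.

```python
import struct


def pad_rgb(r, g, b):
    """Pack a 24-bit colour into four bytes, the last being zero padding."""
    return struct.pack("4B", r, g, b, 0x00)


khaki = pad_rgb(0xC3, 0xB0, 0x91)
print(khaki.hex(" ").upper())

# Storage cost of a hypothetical 640x480 image, unpadded vs padded.
width, height = 640, 480
print("24 bits per pixel:", width * height * 3, "bytes")
print("32 bits per pixel:", width * height * 4, "bytes")
```

The padded form costs a third more storage, which is the trade-off described above: worthwhile in memory for the sake of alignment, wasteful in a file.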

Option #2 uses the bits for some purpose other than signifying the intensity of the R, G or B channel. For 1-bit RGB, the extra bit is usually used as a flag for intensity—the RGBI model, used in the CGA text-mode colour palette most famously. In the attribute area of the memory of the ZX Spectrum, 3 bits were used for the foreground colour and 3 bits for the background colour, leaving 2 bits as flags, for “bright” (intensity) and “flash” respectively, aligning neatly as 8 bits. In modern systems, the extra 8 bits left over from the use of 24 bits per pixel RGB can be used as an alpha channel, denoting the level of opacity, giving 32-bit RGBA. On some Cirrus Logic cards from the 1990’s using 15 bits per pixel, the extra bit was used as a mode switcher for a paletted-colour mode (see article: Highcolour).
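As an illustration of option #2, here is a minimal Python sketch of the ZX Spectrum attribute byte. The exact bit positions are not given in the text; the sketch assumes the commonly documented layout (flash in bit 7, bright in bit 6, background or "paper" in bits 5 to 3, foreground or "ink" in bits 2 to 0), and the function names are hypothetical.

```python
def pack_attribute(ink, paper, bright=False, flash=False):
    """Combine two 3-bit colours and two 1-bit flags into one byte."""
    if not (0 <= ink <= 7 and 0 <= paper <= 7):
        raise ValueError("ink and paper must be in the range 0-7")
    return (int(flash) << 7) | (int(bright) << 6) | (paper << 3) | ink


def unpack_attribute(attr):
    """Split an attribute byte back into its four fields."""
    return {
        "ink": attr & 0b111,
        "paper": (attr >> 3) & 0b111,
        "bright": bool(attr & 0x40),
        "flash": bool(attr & 0x80),
    }


# Bright white (7) ink on blue (1) paper, not flashing.
attr = pack_attribute(ink=7, paper=1, bright=True)
print(f"{attr:08b}", unpack_attribute(attr))
```

Every bit of the byte carries meaning, so nothing is wasted; the price is that the flags apply to a whole character cell rather than to each colour separately.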

Option #3 allocates more or fewer bits for one channel than another. Best known is 16-bit highcolour, which usually gave the Green channel 6 bits, as opposed to the 5 bits for Red and for Blue, because of the human eye’s greater sensitivity to green. Another example is 8-bit colour allocating 3 bits for Red and for Green but 2 bits for Blue, taking account of the human eye’s lesser sensitivity to blue in order to yield 256 possible colours. The major disadvantages of this are: the tinging of colours, and relatedly the lack of true greys; and the mathematical complexity of working with disparate values.
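The lack of true greys under option #3 can be shown with a short Python sketch. It assumes the simplest possible scheme, truncating each 8-bit channel to its allotted width and scaling it back linearly to the 0 to 255 range; real hardware and drivers may round differently, and the function names are hypothetical.

```python
def pack_rgb565(r, g, b):
    """Pack 8-bit channels into a 16-bit word: 5 bits red, 6 green, 5 blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def quantise(rgb, widths):
    """Truncate each channel to its bit width, then scale back to 0-255."""
    result = []
    for value, bits in zip(rgb, widths):
        level = value >> (8 - bits)           # keep only the top `bits` bits
        top = (1 << bits) - 1                 # highest level at this width
        result.append(round(level * 255 / top))
    return tuple(result)


mid_grey = (128, 128, 128)
print("5-6-5:", quantise(mid_grey, (5, 6, 5)))
print("3-3-2:", quantise(mid_grey, (3, 3, 2)))
print(f"khaki as RGB565: {pack_rgb565(0xC3, 0xB0, 0x91):04X}")
```

In both layouts the channels of a neutral grey come back unequal, because the channels no longer share the same set of levels. That is the tinging referred to above.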

Option #4 takes 2↑n colours out of those available, stores them as a palette and references them by indexes in the output. The palette values are themselves straightforward RGB, usually with padding, but the indexes are mere pointers. So 256 values taken from the available range of 16,777,216 colours of 24 bits per pixel RGB are each referenced by an 8-bit value for each pixel in the output. Subsetting can be carried further to conserve storage requirements: the aforementioned CGA text-mode colour palette, which is a 4-bit (16-colour) subset of the 6-bit (64-colour; 2 bits per channel, 6 bits per pixel) range, is itself subset to various 2-bit (4-colour) subsets in the CGA 320×200 graphics mode, and further to 1-bit (2-colour, monochrome) in the CGA 640×200 graphics mode.
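Palette indexing as in option #4 can be sketched in Python like this. The four-colour palette is an illustrative CGA-style subset (black, cyan, magenta, light grey), and the packing order (first pixel in the highest bits of each byte) is an assumption of the sketch rather than something the text specifies; the function names are hypothetical.

```python
# A 2-bit (4-colour) palette; the entries are ordinary RGB triplets.
PALETTE = [
    (0x00, 0x00, 0x00),  # index 0: black
    (0x00, 0xAA, 0xAA),  # index 1: cyan
    (0xAA, 0x00, 0xAA),  # index 2: magenta
    (0xAA, 0xAA, 0xAA),  # index 3: light grey
]


def pack_indices(indices, bits):
    """Pack palette indexes of `bits` width into bytes, first pixel highest."""
    per_byte = 8 // bits
    out = bytearray()
    for start in range(0, len(indices), per_byte):
        chunk = indices[start:start + per_byte]
        byte = 0
        for index in chunk:
            byte = (byte << bits) | index
        # Left-align a short final chunk so unused low bits are zero.
        byte <<= bits * (per_byte - len(chunk))
        out.append(byte)
    return bytes(out)


def lookup(indices, palette):
    """Resolve each index to the RGB triplet it points to."""
    return [palette[i] for i in indices]


pixels = [0, 1, 2, 3, 3, 2, 1, 0]
packed = pack_indices(pixels, bits=2)
print(packed.hex(" ").upper(), "->", len(packed), "bytes for", len(pixels), "pixels")
print(lookup(pixels[:2], PALETTE))
```

Eight pixels fit in two bytes here, against twenty-four bytes as unpadded 24-bit RGB; the cost is that only the colours in the palette can appear.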

Today, option #4 is mainly confined to iconic imagery (web comics, for example), #3 is no longer employed (and good riddance, say I), #2 is found in images with variable transparency (RGBA) and #1 is the most common case for in-memory representation of images.

Portable exponentiation

It would be absurd, even to one with a minimal knowledge of mathematics, to hear that 25 equals 32, yet that is what copying the following text and pasting it into a text editor yields:

25 = 32

In plain text, it is customary to use a visible character to denote exponentiation, usually the ASCII circumflex accent (U+005E, as in 2^5); myself, I prefer the upwards arrow (U+2191, as in 2↑5), due to habit from a certain 1980’s home computer that had that instead, but the principle is the same. But the formal way is superscription, which, as shown above, is not portable. Fortunately, CSS provides a great way to both eat one’s cake and have it. Take this snippet:

.pu-hide {
    display: none;
}

.pu-super {
    vertical-align: super;
    font-size: smaller;
    line-height: normal;
}

and then provide the HTML that refers to it:

2<span class="pu-hide">^</span><span class="pu-super">5</span> = 32

And here is the result:

25 = 32

It should be noted that here, since I can’t do an embedded stylesheet (style between <style type="text/css"></style> tags), let alone an external one, I used inline styles (<span style=""></span>), but the CSS code is exactly the same.

GUI vs. CLI: A specific comparison

Is there a reality to the bragging of command-line aficionados over today’s shell-ignorant upstarts? I’m proficient at both interfaces, so I decided to make a specific experiment, using both GIMP and Netpbm to carry out a particular operation.

The operation: take a 16-colour CGA or EGA image (ie having the palette described in the CGA article), and reset its intensity bit, so that only the first eight colours of the palette (including IBM’s distinctive brown!) are present.

With GIMP (2.2.10 is the version I use):

- Change colourmap entry with value #AA5500 to #AAAA00 → Convert to RGB → Posterise to 2 levels → In “Levels”, change output levels maximum to 170 → Convert to indexed colour → Change colourmap entry with value #AAAA00 to #AA5500

And with Netpbm (10.33.0 for Cygwin):

pngtopnm source.png | pamdepth 255 | ppmchange rgb:aa/55/00 rgb:aa/aa/00 | pamdepth 1 | pamdepth 255 | pamfunc -max 170 | ppmchange rgb:aa/aa/00 rgb:aa/55/00 | pnmtopng > target.png

I could do the Netpbm method a little differently (using maxval 3 instead of 255, with the corresponding changes in the colour values), but I wanted to keep it as similar as possible for the sake of comparison.
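For readers who want to see what the operation does to each palette entry, here is a minimal Python sketch of the same steps applied to colour values rather than to an image file. It is not a replacement for either tool; the function name is hypothetical, the palette is assumed to be the usual 16-colour IBM set, and the threshold of 128 is my reading of what reducing to two levels does to an 8-bit channel.

```python
BROWN = (0xAA, 0x55, 0x00)
DARK_YELLOW = (0xAA, 0xAA, 0x00)

IBM16 = [
    (0x00, 0x00, 0x00), (0x00, 0x00, 0xAA), (0x00, 0xAA, 0x00), (0x00, 0xAA, 0xAA),
    (0xAA, 0x00, 0x00), (0xAA, 0x00, 0xAA), BROWN, (0xAA, 0xAA, 0xAA),
    (0x55, 0x55, 0x55), (0x55, 0x55, 0xFF), (0x55, 0xFF, 0x55), (0x55, 0xFF, 0xFF),
    (0xFF, 0x55, 0x55), (0xFF, 0x55, 0xFF), (0xFF, 0xFF, 0x55), (0xFF, 0xFF, 0xFF),
]


def reset_intensity(rgb):
    """Map a 16-colour palette entry to its low-intensity counterpart."""
    # Step 1: treat brown as the dark yellow it stands in for.
    if rgb == BROWN:
        rgb = DARK_YELLOW
    # Steps 2-3: reduce each channel to two levels, then cap the top at 170.
    low = tuple(0xAA if value >= 0x80 else 0x00 for value in rgb)
    # Step 4: turn dark yellow back into brown.
    return BROWN if low == DARK_YELLOW else low


result = sorted({reset_intensity(c) for c in IBM16})
for colour in result:
    print("#%02X%02X%02X" % colour)
```

Bright yellow ends up as brown, and dark grey as black, which is what resetting the intensity bit should do.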

The results are the same for both methods, every time. I find the Netpbm way easier because of the ergonomic factor—hands stably on the keyboard, aided by tab completion, while the mouse movements and clicks required by the GIMP get tedious quickly. Of course, one can gather groups of operations in the GIMP using Script-Fu, but that requires programming, which by far offsets any gains the GUI has over the CLI.

Where the CLI has piping, in the GUI you need to do everything sequentially. Apart from that, if the GUI application has the same set of features as the CLI program collection, everything can be done in either of the two ways. CLI stands to have an advantage here, because of the relative ease of augmenting a CLI program collection, vs doing the same for a GUI application.

Where the GUI cannot compete with the CLI, as hinted before, is in batch processing. Even in a relatively easy to use batch processing feature like that of IrfanView, you’re off the point-and-click paradigm and on the older way of thinking textually. But batch processing, as Neal Stephenson tells us in a fine essay he wrote some years ago, predates both GUI and CLI in the history of human–computer interfacing.

Subpages

- Pngtutorial contains a concise PNG tutorial.

- Bdffonts contains bitmap fonts I’ve made.

- Persian is about transliteration of Persian so far, with more to be added as I think of it.

- Arabic is where my discussion and elaboration of Arabic language issues go.