User:Bridgette Castronovo/sandbox

ENGL1102 Artificial Intelligence and Quantum Computing

Rationale:

Artificial intelligence (AI) is one of the most promising technologies of our time, with the potential to change much of the way we live. AI is broadly defined as the ability of machines to simulate human intelligence. Its origin can be traced back to the 1940s, when Isaac Asimov published a short story titled “Runaround” about an intelligent robot, inspiring many to wonder whether it would be possible to create a machine that could think. A decade later, John McCarthy, a pioneer in the field, coined the term "artificial intelligence." Since then, countless writers, scientists, and philosophers have speculated about the implications of the technology. Some highlight its potential dangers, such as Stanley Kubrick’s 2001: A Space Odyssey, which portrays a sentient computer that turns on its crew. Others showcase the utility of the technology, such as the movie Her, which portrays a man falling in love with an AI. While there are still many concerns surrounding the technology, the progress made over the past decades has been remarkable. Massive improvements in computing power and more advanced models have enabled researchers to make leaps in its capabilities. In fact, AI is already being used across many industries, driving productivity gains and helping save lives. Before diving into the applications of the technology, it is essential to understand how it works. Although the idea of artificial intelligence sounds daunting, its inner workings can be broken down into five steps: data gathering, data preparation, training, evaluation, and prediction. During the data gathering and preparation stages, the user feeds an AI algorithm data from different sources, such as specific databases, files, or the internet. Because these sources usually contain large amounts of messy data, the user has to clean and normalize the data so that the AI system can process it.
Once the data has been fed into the AI model, the training stage begins. There are many different kinds of algorithms that can recognize patterns and make predictions, but all of them ultimately work in the same way: they find relationships between variables. In the training stage, two important kinds of parameters are tracked: weights and biases. Weights set the strength of the connections between the different steps in an algorithm, while biases are additional parameters that shift the output. Both start at random values and are then adjusted according to how well the model performs, so that its predictions become more accurate. Each iteration of the training cycle adjusts the weights and biases to improve the algorithm's performance. After training, the model is evaluated against another set of data that was not used for training to see how well it generalizes to new, real-world cases. Finally, once the model performs well on the testing data, it can be used to make predictions. For example, if a classification model is trained on a dataset of images labeled as dogs or cats, it will eventually be able to look at new images of dogs and cats and classify them correctly.
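
The training and evaluation stages described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular AI system is built: the toy data (points near the line y = 2x + 1), the learning rate, and the helper names `make_data`, `train`, and `mean_squared_error` are all assumptions chosen for the example. It starts with a random weight and bias, adjusts them a little on every iteration, and then checks the result on data that was held out from training.

```python
import random


def make_data(n, seed=0):
    """Generate toy points near y = 2x + 1 (an invented example dataset)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)
        data.append((x, y))
    return data


def mean_squared_error(data, weight, bias):
    """Average squared gap between predictions and true values."""
    return sum((weight * x + bias - y) ** 2 for x, y in data) / len(data)


def train(data, learning_rate=0.1, epochs=200, seed=1):
    """Gradient descent on one weight and one bias, both starting at random values."""
    rng = random.Random(seed)
    weight = rng.uniform(-1.0, 1.0)
    bias = rng.uniform(-1.0, 1.0)
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to weight and bias.
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in data) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in data) / n
        # Move both parameters a small step in the direction that lowers the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


data = make_data(200)
train_set, eval_set = data[:160], data[160:]  # hold out 20% for evaluation
weight, bias = train(train_set)
eval_error = mean_squared_error(eval_set, weight, bias)
print(f"weight={weight:.2f} bias={bias:.2f} evaluation error={eval_error:.4f}")
```

Real models have many more weights and biases, but the loop has the same shape: predict, measure the error, adjust, and repeat.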

If AI is so complicated, why do people use it? Two areas where it is already applied are healthcare and human resources. In healthcare, AI can support medical diagnosis by analyzing patient data and identifying patterns and anomalies that may indicate a particular disease or condition. AI-powered virtual nursing assistants can help provide personalized care to patients, and drug discovery can be accelerated by using AI to analyze large volumes of data that would take people far longer to review. This can reduce the time spent on diagnosis and on delivering necessary care, and it can lower healthcare costs, making care more accessible. In human resources, AI can be used for recruitment by analyzing the resumes of potential employees and identifying the best candidates for a particular job. Talent management can also be improved by using AI to identify areas where employees may need additional training or support; because that help is tailored to each employee, they may learn the skills they need more quickly. Employee engagement can also be improved by using AI-powered chatbots to answer questions and provide support.

Every computer you’ve ever interacted with, from the phone or laptop you’re reading this on to the supercomputers that model the climate, is what is called a classical computer. All the processing that these computers do, from training AI models to processing your word documents, gets broken down into bits, a stream of 1s and 0s. Each bit can only be in either the 1 or the 0 state. In modern computers, these bits are encoded in the voltage states of transistors. Quantum computers are something else entirely. Rather than using a transistor or some other two-state system to encode information, they represent information in the state of a two-state quantum system, a qubit. Because qubits are encoded using quantum systems, they can take advantage of the unusual properties of those systems. These properties include superposition, where the state of a qubit can be a combination of both 0 and 1, and entanglement, where the states of multiple qubits are correlated with one another, allowing a much greater amount of information to be represented using comparatively few qubits.
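
Superposition and entanglement can be made a little more concrete by simulating qubits on a classical computer. The sketch below is only an illustration under simple assumptions: it stores a qubit as a pair of complex amplitudes, and the helper names (`hadamard`, `probabilities`, `bell_state`, `amplitudes_needed`) are invented for this example rather than taken from any quantum computing library.

```python
import math

# A single qubit is described by two complex amplitudes,
# one for measurement outcome 0 and one for outcome 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which turns state 0 into an equal mix of 0 and 1."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """The chance of each outcome is the squared magnitude of its amplitude."""
    return [abs(amplitude) ** 2 for amplitude in state]


def bell_state():
    """Two entangled qubits: amplitudes for the outcomes 00, 01, 10, 11."""
    s = 1 / math.sqrt(2)
    return (s + 0j, 0j, 0j, s + 0j)


def amplitudes_needed(num_qubits):
    """A classical description of n qubits needs 2**n amplitudes."""
    return 2 ** num_qubits


superposed = hadamard(ZERO)
print("single qubit:", [round(p, 3) for p in probabilities(superposed)])
print("entangled pair:", [round(p, 3) for p in probabilities(bell_state())])
print("amplitudes for 10 qubits:", amplitudes_needed(10))
```

In the entangled pair, only the outcomes 00 and 11 have a nonzero probability, so measuring one qubit tells you the other. The last line shows why simulating qubits classically becomes expensive: the number of amplitudes doubles with every qubit added.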

This is an entirely different way to do computation and is fundamentally different from classical computing. Imagine that you’ve only ever been able to drive a car or ride a bicycle. These things can take you far and allow you to get places much faster than walking would. But imagine that you discovered a boat for the first time. A boat is completely different from a car or bicycle and can’t take you down a highway. However, it allows you to cross lakes and oceans, something that no car or bike could ever do. In this way, quantum computing is the boat to classical computing’s car. There are some things that a classical computer can do better than a quantum computer. However, there are certain tasks that a quantum computer can do that a classical computer could not realistically complete because of the enormity of the computational task. For tasks such as chemical simulation, optimization, and AI, quantum computing (QC) is poised to radically speed them up.

In conclusion, AI and quantum computing are powerful technological tools with tremendous potential. The ability of AI programs to analyze relationships between variables and recognize patterns across extremely large data sets means that humans can now act on information deduced by computers. AI can already perform useful and interesting feats such as providing personalized online shopping recommendations based on past purchases, identifying abnormalities in medical screenings earlier than humans can, responding in words tailored to a user, predicting financial trends, and even selecting the most qualified candidate for a job. To further illustrate, this page includes in-depth information on 7 distinct applications of artificial intelligence that are revolutionizing fields from mathematical modeling to AI chatbots and telemedicine. There are seemingly limitless possibilities in AI, and as algorithms are trained on larger and larger data sets, they will become more precise and therefore more powerful. While quantum computing is still in its nascent stage and is certainly behind AI in development, it is a fundamentally different way to compute and, once developed, promises to vastly improve many of the things we do with computers, AI being one of them.

An Overview of Artificial Intelligence

Peer-Reviewed Research Articles

"Artificial Intelligence Explained for Nonexperts" - Narges Razavian, Florian Knoll, and Krzysztof J. Geras [1]

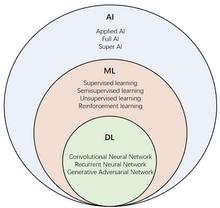

Narges Razavian, Florian Knoll, and Krzysztof J. Geras’s paper “Artificial Intelligence Explained for Nonexperts” explores the fundamentals of artificial intelligence. The paper introduces key terms such as artificial intelligence, machine learning, and deep learning and explains their meaning. It also explains their similarities and differences and provides examples of how they are used today. Additionally, the idea of supervised learning models is explained. The recent popularity of deep learning is highlighted, as well as the reasoning behind its growing utility.

After a basic explanation of the field and important terms, the paper dives into more complicated concepts. One major focus is the design of popular neural network architectures. One of these architectures is the convolutional neural network (CNN). The history behind its development, as well as its recent advancements, is detailed. Three CNN varieties spotlighted are ResNet, U-Net, and variational networks. The paper ends by touching on the applications of artificial intelligence and providing examples of how the technology is used today.

"A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence" - Michael Haenlein and Andreas Kaplan [2] [2]

Michael Haenlein and Andreas Kaplan’s paper “A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence” explores the long, complicated history of the field and attempts to predict its future. They begin by exploring the beginning of artificial intelligence. They dive into Isaac Asimov’s short story “Runaround” about an intelligent robot and make an argument as to why this could be considered the birth of the idea of artificial intelligence. They then explore the contributions that many early pioneers made to the field. One that they highlight is Alan Turing. Turing, who developed an electro-mechanical code-breaking machine, the Bombe, to break enemy codes during World War II, made many significant contributions to the field of artificial intelligence, such as the Turing Test. The test was an attempt to answer the question of how to check whether a computer is truly intelligent. Haenlein and Kaplan then touch on the work of Donald Hebb, who developed a theory of learning called Hebbian Learning, which models how the human brain learns. His work led to the creation of artificial neural networks. The authors then go on to detail several other major milestones before concluding with a prediction as to how artificial intelligence will change the future.

Programs / Platforms

PyTorch is an open-source machine-learning framework for the programming language Python. It builds on Torch, an earlier open-source machine-learning library that is scripted in the programming language Lua and is often used for deep learning. The PyTorch framework greatly simplifies building neural networks by abstracting away much of the underlying mathematics, which enables researchers to build and test artificial intelligence models quickly. PyTorch was initially built by Adam Paszke while interning for Soumith Chintala, who was one of the main developers of Torch.

The framework is used by many researchers and companies due to its flexibility and computational power. It is often compared to another popular machine learning framework, TensorFlow. The advantages of PyTorch are that it is generally considered simpler to learn and that it has important features such as dynamically defined computational graphs that can be manipulated in real time. Overall, PyTorch is a pivotal machine learning framework that is used in many artificial intelligence programs.

Google Colab is short for Google Colaboratory. Colab hosts a free web-based version of Jupyter, an open-source project for interactive notebooks that is widely used to create artificial intelligence models. Colab enables users to build and execute Jupyter notebooks using just a web browser and provides access to computing resources such as GPUs to train their models. There is a limit to the amount of computing resources afforded to users; however, if more is required, users can sign up for Colab Pro. The notebooks are run on a private virtual machine, are saved in the user’s Google Drive, and can be downloaded as Jupyter notebook files. Currently, only Python is supported, but additional languages such as R and Scala will be supported in the future. Users can choose to share a notebook in full or a version with certain parts omitted. There are some restrictions on what users are allowed to use Colab for. A few banned actions are mining cryptocurrency, password cracking, and creating deepfakes.

Engagement Opportunities

The Agency is a club at Georgia Tech focused on exploring artificial intelligence and machine learning. Every Wednesday at 6:30 pm, there are lecture meetings where other students or industry experts lecture about an AI-related topic. Additionally, every Tuesday at 6:30 pm, there are project meetings. The project meetings are split into beginner workshops and project teams. The beginner workshops walk new members through the basics of artificial intelligence. Workshops last one semester and occur during the fall and spring semesters. Over the course of the semester, new members learn how to build their own neural network using a Python library called NumPy. Concepts such as forward propagation, backpropagation, and gradient descent are explored. Members are welcome to join a project team if they feel confident with the material or have completed the workshops previously. Every semester, a brainstorming session occurs where members think of project ideas. The members then vote to pick the best few ideas, and teams are formed and challenged to build their assigned idea. The club is open to both new and experienced students who are passionate about the field of artificial intelligence.

The International Conference on Machine Learning is an annual conference where experts present cutting-edge research on machine learning used in artificial intelligence, statistics, and data science. Everything from machine learning’s impact on computational biology to robotics is explored. This year the conference is being held from July 23rd through the 29th in Honolulu, Hawaii. Companies are also invited to attend to share their research or demo their products.

Students can also attend, and some are even selected to present their research. Students can register for tutorials, conference sessions, or workshops where they can expand their knowledge about artificial intelligence and learn from industry professionals. The conference works with organizations such as Women in Machine Learning, Black in AI, and LatinX in AI to increase the participation of underrepresented groups. In fact, twenty percent of the funds collected by the conference go to diversity and inclusion efforts and to financial aid that helps students attend.

Glossary Terms

Machine learning is a subfield of artificial intelligence in which computers learn from data without being explicitly programmed. Today, most artificial intelligence programs use machine learning, and the two terms are sometimes used interchangeably. To build an AI model using machine learning, a dataset is split into training data and evaluation data. The training data is used to train the programmer's machine learning model. Once the model is trained, the evaluation data is used to test how accurate the model is. The programmer can then tweak the model in different ways to improve its accuracy. There are three subcategories of machine learning: supervised machine learning, unsupervised machine learning, and reinforcement machine learning. Supervised machine learning models are trained using labeled datasets, whereas unsupervised machine learning models are trained using unlabeled datasets. Reinforcement machine learning trains models through trial and error using a reward system.
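
To make the split between training data and evaluation data concrete, here is a small supervised learning sketch in Python. Everything in it is an assumption made for illustration: the labeled points are randomly generated, the labels "cat" and "dog" are stand-ins, and the model is a simple nearest-neighbor rule rather than anything a production system would use.

```python
import random


def make_labeled_points(n, seed=0):
    """Create toy labeled data: 'cat' points cluster near (0, 0), 'dog' points near (5, 5)."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        label = rng.choice(["cat", "dog"])
        center = 0.0 if label == "cat" else 5.0
        features = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        points.append((features, label))
    return points


def predict(train_data, features):
    """Nearest-neighbor rule: copy the label of the closest training example."""
    def squared_distance(example):
        (x, y), _ = example
        return (x - features[0]) ** 2 + (y - features[1]) ** 2

    return min(train_data, key=squared_distance)[1]


def accuracy(train_data, eval_data):
    """Fraction of evaluation examples whose label is predicted correctly."""
    correct = sum(predict(train_data, features) == label for features, label in eval_data)
    return correct / len(eval_data)


data = make_labeled_points(200)
split = int(0.8 * len(data))  # 80% for training, 20% for evaluation
train_data, eval_data = data[:split], data[split:]
print("evaluation accuracy:", accuracy(train_data, eval_data))
```

Because the evaluation points were never shown to the model, the accuracy is an estimate of how the model would do on new data, which is what the programmer tries to improve when tweaking the model.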

Neural networks are a specific class of machine learning algorithms modeled after the human brain. The human brain has billions of neurons that are connected and organized into layers. Each neuron receives a signal, performs some function, and transmits the signal to a connected neuron. Similarly, artificial neural networks are composed of nodes, sometimes millions of them, arranged in layers. The neural network processes labeled data by having each node perform some function on the information it receives and send the result to the connected nodes. Together, these layers of nodes produce the network's output, and training adjusts them to improve it. Neural networks are the structures on which deep learning is based. In fact, deep learning networks are multi-layered neural networks. Neural networks can be trained to solve difficult problems such as image recognition, natural language processing, and medical analysis. When layered together, they can solve even more complex problems, and this technique is frequently used in artificial intelligence research today.
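
The way information flows from node to node through the layers can be sketched as follows. This is a minimal forward pass only, with no training: the layer sizes, the hand-picked weights and biases, and the choice of the sigmoid function are all assumptions made for the example.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Each node takes a weighted sum of its inputs, adds its bias, and applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the signal through each layer in turn; one layer's output feeds the next."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical hand-picked parameters: 2 inputs -> 3 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = network_forward([1.0, 2.0], layers)
print("network output:", output)
```

Training a network means adjusting the numbers in `layers` until the output matches the labels in the training data; adding more layers to the list is what makes a network "deep."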

Application: Natural Language Processing

Glossary Terms

Intersection between NLP, computing, and AI

Natural language processing (NLP) is a subfield of machine learning and artificial intelligence that deals with creating programs that can respond to human language using human language. To accomplish this, the programmer uses principles of computational linguistics to analyze the textual human input and extract its meaning according to the grammatical rules of the language. Then a variety of data structures and algorithms process that input and try to find connections between the words in order to understand their meaning and prepare an appropriate response. NLP programs are commonly written in Python, which dictates their syntax and logic. NLP is a rapidly developing field because AI and machine learning algorithms are improving rapidly and allowing for more complex applications. In NLP specifically, advances in technology have allowed for the creation of capable chatbots that can converse with humans, programs such as Google Translate that can translate one language to another, and even programs that can create original written works, as demonstrated by ChatGPT.

Sentiment analysis in natural language processing is the way that computers determine the emotional meaning of the input they receive from the user. This process is very complex, as human language is full of confounding factors such as tone, jokes, irony, sarcasm, and ambiguous wording. Sentiment analysis uses text mining to extract the meaning from a piece of text and classify it as positive, negative, or neutral, based on the emotion it expresses. This allows the programmer, or the company employing the programmer, to receive feedback on a particular service. For example, a marketing director may use sentiment analysis to classify feedback received on the launch of a new product. It would be nearly impossible for a large company to manually review all of the written critiques of a product, but sentiment analysis algorithms working in conjunction with NLP models can extract the meaning of written reviews and quantify them. This ability is extremely valuable to the process of revision within a company, and with the help of sentiment analysis, it becomes much easier to measure written and verbal feedback that is traditionally difficult to quantify.
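
The positive, negative, or neutral classification described above can be illustrated with a deliberately simple word-list approach. This is only a sketch: the word lists and the `classify_sentiment` function are invented for the example, and real sentiment analysis systems rely on trained models precisely because word counting cannot handle sarcasm, irony, or context.

```python
import string

# Tiny hand-made word lists (assumptions for this example, not a real sentiment lexicon).
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "poor", "broken"}


def classify_sentiment(text):
    """Label text as positive, negative, or neutral by counting known words."""
    words = [word.strip(string.punctuation) for word in text.lower().split()]
    score = sum(word in POSITIVE_WORDS for word in words) - sum(
        word in NEGATIVE_WORDS for word in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "I love this product, it is great!",
    "Terrible battery and poor support.",
    "It arrived on Tuesday.",
]
for review in reviews:
    print(classify_sentiment(review), "-", review)
```

A company could run a function like this over thousands of reviews and count the labels, which is the kind of quantification the marketing example describes.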

SemClinBr - a multi-institutional and multi-specialty semantically annotated corpus for Portuguese clinical NLP tasks

In the field of clinical NLP, the ability to analyze written patient information is invaluable. To do that, NLP specialists create annotated corpora, which are collections of text marked up with tags, following annotation guidelines, that AI algorithms use to learn how to process the data. However, the vast majority of annotated corpora are in English, which means that patient data written in another language is inaccessible to NLP algorithms. Because NLP algorithms depend on training on huge quantities of data, this limitation hinders innovation in clinical NLP. To help with this issue, a group of researchers developed an annotated corpus in Portuguese, along with a web service designed to help others do the same. This research is an important step in globalizing powerful AI algorithms in medical technology and extends current NLP research in biomedical innovation. The annotated corpus will allow Portuguese clinical data to be analyzed by NLP algorithms, which will increase their efficiency and capabilities and therefore make them more powerful.

A Natural Language Processing (NLP) Evaluation on COVID-19 Rumor Dataset Using Deep Learning Techniques. [7]

This research study was conducted to introduce models capable of detecting false information spread online about the Covid-19 pandemic, so that a computer could flag it and alert the user to a potential source of misinformation. The algorithms were trained using large amounts of data from Twitter and the internet in order to find relationships indicating that harmful messaging related to Covid-19 was being spread. To accomplish this, the team of researchers created two models, one implementing the LSTM (Long Short-Term Memory) neural network and one implementing Temporal Convolution Networks (TCN). The study determined that the TCN approach was more effective at finding and flagging sources, whose information the researchers classified based on veracity, stance, and sentiment. As such, they implemented the second model in the second phase of the experiment and then used deep learning models to quantify how effective the models were at flagging problematic language in the data sets. This research has significant future applications because, as the internet becomes an increasingly used source of news for individuals, the ability to implement a program that can identify harmful or misleading information could be extremely useful.

Programs / Platforms

Amazon Alexa

One extremely interesting example of NLP inside a technology with which many of us are familiar is Amazon's Alexa device. Essentially, Alexa is a virtual assistant that can respond to a user's verbal requests, supply a variety of information, and perform tasks such as telling the user the weather, playing a song, creating an alarm, or recommending places for dinner. This application is possible because of the intersection of natural language processing and AI: the Alexa programmers used extremely complicated deep learning models to train the AI on huge amounts of user input data, so that when the user issues a verbal command, the AI can extract the meaning of the request and then perform the desired task as a human assistant would. The application uses the word "Alexa" as a trigger phrase that prompts the device to take in the user's next words as input. Alexa is one example of a device that hundreds of thousands of people use every day, perhaps without realizing that it uses advanced natural language processing algorithms to interact with them.

ChatGPT

ChatGPT is perhaps the most well-known NLP application in the world because of its ability to interact with a human user and generate responses that mimic human intelligence extremely well. This AI chatbot is so powerful in comparison to other chatbots because it was trained on an extremely large amount of data (over 570 GB). The application takes in user input in the form of a written question or command and then responds accordingly. Because of this extensive training, the application can perform impressive tasks such as writing original poems, paragraphs, and even essays. ChatGPT is one of the many programs that allow the average person to see the power of AI and machine learning algorithms, and it has contributed to the increasing interest in the field of AI as people start to realize the potential for AI-driven applications to change the way that we interact with technology.

**Image created by ChatGPT's image editor with constraints "cute robot"**

Engagement Opportunities

One way for students to gain more exposure to the field of natural language processing is to join student groups and programs that are oriented toward helping students learn about current research and applications. One such program is Georgia Tech’s NLP and AI seminars, a series of interactive talks hosted by guest speakers doing relevant work in research or industry. These talks occur every Friday at noon and begin with a presenter who talks about their work for around 45 minutes. At the conclusion of the talk, the room is opened to discussion, and students, faculty, and presenters can interact. Lunch is also provided, which makes the experience a casual, fun, and accessible way for undergraduate students who have an interest in NLP to learn about a potential career in the field. This format is especially beneficial to undergraduate students because natural language processing is a complicated application of AI and not something that students could effectively engage with on their own at the standard undergraduate level. Because these talks require no previous knowledge on the part of the student, they are well suited to introducing people to the topic, which can help students decide whether they want to delve further into the discipline of NLP.

Georgia Tech offers the class 4650 Natural Language Processing, an introductory class that exposes students to NLP as a sub-discipline of AI and machine learning. The goal of this class is to introduce students to the field of NLP and to how computer scientists can use machine learning algorithms to understand human language and generate responses that mimic human intelligence. To accomplish that goal, the class teaches students linguistic fundamentals related to syntax, semantics, and the distributional properties of language. Because NLP is based heavily on machine learning models, the class also introduces students to classifiers, sequence taggers, and deep learning models. 4650 uses Python as its primary coding language and has significant math prerequisites, such as multivariable calculus, which are needed to implement the machine learning algorithms behind NLP applications. In addition, the class has four major homework assignments through which students develop their NLP skills; together they count for 55% of the overall grade.

Application: Finance and Machine Learning

Peer-Reviewed Research Articles

This peer-reviewed article, written by M. Ludkovski, discusses the role of statistics and artificial intelligence within quantitative finance. It is motivated by the need to analyze input-output relationships through regression techniques such as gradient boosting and random forests.

Ludkovski goes into depth on each machine learning method used within quantitative finance, explaining the mathematical basis for each method and how it is trained on data to make predictions. He also focuses on two emerging areas in machine learning and finance: stochastic control and reinforcement learning.

This article discusses not only the artificial intelligence-based techniques used within this field but also the statistical theory behind them. In particular, it looks at loss functions and other mathematical equations used to choose the hyper-parameters of a machine learning model.
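
As a rough illustration of how a loss function can guide the choice of a hyper-parameter, the sketch below fits a one-variable regression with several candidate penalty strengths and keeps the one with the lowest loss on held-out data. It is not taken from Ludkovski's article: the toy data, the ridge-style penalty, the candidate values, and the function names are all assumptions made for the example.

```python
import random


def fit_ridge_slope(data, penalty):
    """Slope w for y ~ w * x that minimizes squared error plus penalty * w**2."""
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + penalty)


def mean_squared_error(data, slope):
    """Loss function: average squared gap between prediction and observation."""
    return sum((slope * x - y) ** 2 for x, y in data) / len(data)


# Toy data near y = 3x (invented for illustration).
rng = random.Random(0)
points = []
for _ in range(60):
    x = rng.uniform(-2.0, 2.0)
    points.append((x, 3.0 * x + rng.gauss(0.0, 0.5)))
train_data, validation_data = points[:40], points[40:]

# The penalty strength is the hyper-parameter: it is not learned from the
# training data, so each candidate is scored on the validation data instead.
candidate_penalties = [0.0, 0.1, 1.0, 10.0]
validation_losses = {
    penalty: mean_squared_error(validation_data, fit_ridge_slope(train_data, penalty))
    for penalty in candidate_penalties
}
best_penalty = min(validation_losses, key=validation_losses.get)
print("validation losses:", validation_losses)
print("chosen penalty:", best_penalty)
```

The same pattern applies to methods such as gradient boosting or random forests, where the hyper-parameters might be the number of trees or their depth.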

In general, the overall goal of this article is to demonstrate how statistical learning in quantitative finance has grown over the years in different applications such as options pricing.

"Machine learning solutions to challenges in finance: An application to the pricing of financial products" - Lirong Gan, Huamao Wang, Zhaojun Yang[11]

In this article, Lirong Gan, Huamao Wang, and Zhaojun Yang discuss the importance of using machine learning to price average options, which are popular financial products among corporations due to their low prices and their payoffs, which are relatively insensitive to extreme price movements.

In particular, this article separates average options into arithmetic and geometric. Arithmetic options are based on the arithmetic average of an asset's price over a period of time, while geometric options are based on the geometric average of those prices. Notably, the machine learning method used in this article is a model-free approach.
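
The difference between the two kinds of average can be shown with a short calculation. The prices and the strike below are made-up numbers, and the payoff function assumes a simple average-price call option, which may differ from the exact contracts studied in the article.

```python
import math


def arithmetic_average(prices):
    """Sum of the observed prices divided by how many there are."""
    return sum(prices) / len(prices)


def geometric_average(prices):
    """The n-th root of the product of n prices, computed through logarithms."""
    return math.exp(sum(math.log(price) for price in prices) / len(prices))


def average_price_call_payoff(average, strike):
    """Payoff of an average-price call: the average minus the strike, but never below zero."""
    return max(average - strike, 0.0)


observed_prices = [100.0, 104.0, 98.0, 110.0]  # hypothetical prices over the option's life
strike = 100.0

arithmetic = arithmetic_average(observed_prices)
geometric = geometric_average(observed_prices)
print("arithmetic average:", arithmetic, "payoff:", average_price_call_payoff(arithmetic, strike))
print("geometric average:", geometric, "payoff:", average_price_call_payoff(geometric, strike))
```

The geometric average is never larger than the arithmetic average of the same prices, so a geometric average call pays at most as much as the arithmetic one.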

To use this approach, the authors of the paper derive mathematical equations for assets, interest rates, and payoffs. The data used to find the best price for average options is computer generated through a simulation. Using these values and data, the price of an average option can be represented. With these equations, the paper applies a deep learning algorithm using neural networks, which consist of multiple processing layers that analyze data to produce an output.
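
To give a sense of what generating pricing data by simulation can look like, here is a small Monte Carlo sketch. It is not the authors' procedure: it assumes that prices follow geometric Brownian motion, and the interest rate, volatility, strike, and number of paths are arbitrary illustrative values.

```python
import math
import random


def simulate_path(rng, start_price, rate, volatility, steps, maturity):
    """Simulate one price path under geometric Brownian motion (an assumed model)."""
    dt = maturity / steps
    price = start_price
    path = []
    for _ in range(steps):
        shock = rng.gauss(0.0, 1.0)
        price *= math.exp((rate - 0.5 * volatility ** 2) * dt + volatility * math.sqrt(dt) * shock)
        path.append(price)
    return path


def monte_carlo_average_calls(strike, start_price=100.0, rate=0.05, volatility=0.2,
                              steps=50, maturity=1.0, n_paths=2000, seed=0):
    """Estimate arithmetic and geometric average call prices as discounted mean payoffs."""
    rng = random.Random(seed)
    total_arithmetic = 0.0
    total_geometric = 0.0
    for _ in range(n_paths):
        path = simulate_path(rng, start_price, rate, volatility, steps, maturity)
        arithmetic = sum(path) / len(path)
        geometric = math.exp(sum(math.log(p) for p in path) / len(path))
        total_arithmetic += max(arithmetic - strike, 0.0)
        total_geometric += max(geometric - strike, 0.0)
    discount = math.exp(-rate * maturity)
    return discount * total_arithmetic / n_paths, discount * total_geometric / n_paths


arithmetic_price, geometric_price = monte_carlo_average_calls(strike=100.0)
print(f"arithmetic average call: {arithmetic_price:.2f}")
print(f"geometric average call:  {geometric_price:.2f}")
```

Prices produced this way for many different inputs could serve as training examples for a neural network, which then learns to return a price without rerunning the simulation.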

The authors emphasize how artificial intelligence is in a new era when it comes to improving computing performance, especially in cases of regression; AI can help the finance world greatly, especially for investment managers and traders who want to predict trends.

Programs / Platforms

Anaconda is an open-source distribution that contains over 1,000 packages focused on data science and machine learning applications. Anaconda supports various languages, but in finance applications, Python and R are used most. One of the most appealing features of this platform is that users can create and manage virtual environments, which helps ensure reproducibility and manage dependencies.

Many data scientists and machine learning engineers also use Jupyter Notebook, a web-based computing environment included with Anaconda that allows users to create documents containing code and visualizations. This code can be shared with other engineers, allowing easy collaboration. In finance, Anaconda provides powerful tools for building and training machine learning models for various scenarios (e.g., predicting earnings, assessing credit risk, and analyzing stock trends).

Overall, Anaconda is a rapidly growing tool for data analysis and artificial intelligence. Its versatility and power make this platform extremely useful for researchers who would want to work with any kind of data.

GitHub is a platform that allows developers to collaborate on development projects and host code. To do this, GitHub uses a distributed version control system called Git, which makes managing code and tracking changes much easier. GitHub also offers many other features, such as code review and issue tracking, which make the platform useful for those who want to collaborate.

Within artificial intelligence, GitHub hosts many repositories of open-source projects that incorporate AI, whether they be machine learning libraries or algorithms. One of the most important parts of artificial intelligence research is reproducibility, because it ensures that models can be replicated and thus verified by others. Through GitHub, researchers can share their code and data openly for others to see.

This platform is especially critical in a financial setting because to successfully run a machine learning model for finance, there has to be a lot of data for each firm. GitHub allows researchers to store that data in a secure repository, which is useful in cases where multiple people are working on the same program or a singular computer does not have enough storage to hold all the data.

Engagement Opportunities

Student Review of VIP: https://youtu.be/xwJ1xp5Zn5M

Georgia Tech offers students an opportunity to explore a subset of artificial intelligence (more specifically, machine learning) within financial markets. For those who are interested in seeing how machine learning techniques are employed in quantitative trading to predict earnings and other derivatives, this project is appealing.

While this project group focuses on financial markets, it is also useful for honing machine learning skills across different algorithms, especially classic regression. This Vertically Integrated Project (VIP for short) uses Python and R for coding these predictive algorithms.

The end goal of this VIP is to replicate a peer-reviewed paper and propose improvements to the techniques used in it. Finally, students write their own paper with the suggested improvements and, ideally, present it at a conference.

Overall, although challenging, this VIP is a rewarding experience for those who are interested in seeing how artificial intelligence, specifically machine learning, can be used in a financial setting.

Georgia Tech’s Trading Club serves students who are interested in quantitative trading and research. The club provides insight into the trading interview process, covering a variety of topics such as Python, statistics, and derivatives.

Through the club's trading curriculum, one can dive into various machine learning techniques, such as regression and boosting, with Python. In this way, the club is useful for those who want to work with machine learning within financial markets.

Furthermore, this club provides the opportunity to work on related projects in quantitative finance, discretionary trading, and market research. In particular, the quantitative finance sub-team involves using data analytics and machine learning to create trading strategies for market insights.

To join, students can use the club's communication platforms, such as its Slack and GroupMe, which are available on the club website linked in the subheading of this entry. In general, Trading Club can be a great place to learn how machine learning and markets work together through the curriculum and various projects.

Glossary Terms

Compustat is a detailed database of financial information that researchers and investors use to analyze trends within firms and the market. It contains many types of data, such as balance sheets, income statements, and cash flow statements. The database also includes tools for data visualization, which allow users to compare financial performance across companies.

One of the most useful features of Compustat is that the data is standardized and consistent, which is valuable for those who want to use it to build machine learning models. With an API key, researchers can pull in the data and read it with Python or R libraries such as pandas. From there, the data can be fed into a regression model to predict future earnings for a specific firm.
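As a rough sketch of that last step, the example below builds a small table with pandas and fits a one-variable regression that predicts a firm's earnings from its previous year's earnings. The figures are made up, the column names (`firm`, `year`, `earnings`) are illustrative rather than actual Compustat fields, and no real Compustat API is called.

```python
import numpy as np
import pandas as pd

# Hypothetical, made-up figures standing in for a Compustat extract.
# The column names are illustrative, not real Compustat field names.
data = pd.DataFrame({
    "firm": ["A"] * 5 + ["B"] * 5,
    "year": list(range(2018, 2023)) * 2,
    "earnings": [1.0, 1.2, 1.5, 1.7, 2.0, 3.0, 2.9, 3.3, 3.4, 3.8],
})

# Feature: each firm's earnings in the previous year.
data = data.sort_values(["firm", "year"])
data["prev_earnings"] = data.groupby("firm")["earnings"].shift(1)
train = data.dropna(subset=["prev_earnings"])

# Ordinary least squares fit: earnings = slope * prev_earnings + intercept.
slope, intercept = np.polyfit(train["prev_earnings"], train["earnings"], deg=1)


def predict_next(latest_earnings: float) -> float:
    """Predict next year's earnings from the most recent year's earnings."""
    return slope * latest_earnings + intercept


forecast_a = predict_next(2.0)  # forecast for firm A after its 2022 earnings
```

A realistic model would use many more firms, years, and input variables; the point here is only the shape of the workflow: load a table, derive features, fit, predict.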

In general, Compustat is a valuable resource to gain insight into the market and how companies are performing financially.

Regression is a fundamental technique used in artificial intelligence applications to predict numerical values based on a given set of training data. In particular, it finds the relationship between one or multiple input variables and a target variable.

There are two types of regression: linear and nonlinear. Linear regression assumes a direct, straight-line relationship between the input and target variables, while nonlinear regression can capture more complicated relationships. Some methods, such as decision trees, assume no particular functional form, so they can be applied whether the underlying relationship is linear or nonlinear.
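The difference can be illustrated with a small sketch on synthetic data. Here a straight line and a quadratic curve are fitted to the same curved data with NumPy; the quadratic stands in for a nonlinear model (it is nonlinear in the input, though still linear in its coefficients). The data and the random seed are arbitrary choices for illustration.

```python
import numpy as np

# Synthetic data with a curved (quadratic) relationship plus a little noise.
rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 50)
y = 0.5 * x**2 + x + rng.normal(scale=0.1, size=x.size)


def fit_and_score(degree: int) -> float:
    """Fit a polynomial of the given degree and return its mean squared error."""
    coeffs = np.polyfit(x, y, deg=degree)
    residuals = y - np.polyval(coeffs, x)
    return float(np.mean(residuals**2))


mse_line = fit_and_score(1)   # straight-line fit
mse_quad = fit_and_score(2)   # curved fit
```

Because the data is curved, the straight line leaves a much larger error than the quadratic fit.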

Regression is used in multiple different applications, whether it be natural language processing or financial modeling. Within financial modeling, analysts can use regression to predict trends about a firm or the market, whether it be earnings or stock prices through a time series analysis. This is useful in investment decisions as investors can predict how a firm will fare long-term.

In general, regression is an essential technique within artificial intelligence as it can allow computers to predict values based on a given set of data.

Application: The Mathematics of Deep Learning

Peer Reviewed Research Articles

"Advancing Mathematics by Guiding Human Intuition with AI" - A. Davies, P. Velickovic, L. Buesing, S. Blackwell, D. Zheng, N. Tomasev, R. Tanburn, P. Battaglia, C. Blundell, A. Juhasz, M. Lackenby, G. Williamson, D. Hassabis, P. Kohli[16]

This article proposes a process of using machine learning to discover potential patterns between mathematical objects, understanding them with attribution techniques, and using those observations to guide human intuition. The work may prove useful in leveraging the respective strengths of mathematicians and machine learning.

The introduction of computers to generate data and test conjectures gave mathematicians a new understanding of problems that they were previously incapable of expanding on, but while computational techniques have become consistently useful in other parts of the mathematical process, AI systems have not yet established their place. Previous systems for generating conjectures have either contributed useful research conjectures through methods that do not easily generalize to other mathematical subjects and areas, or have demonstrated novel, general methods for finding conjectures that have not yet yielded valuable mathematical results.

Machine learning offers a collection of techniques that can effectively detect patterns in data and has increasingly demonstrated utility in scientific disciplines. In mathematics, AI has been shown to be a valuable tool for finding counterexamples to existing conjectures, increasing calculation speed, generating symbolic solutions, and detecting the existence of structure in mathematical objects. In this work, the authors show that AI can also help with the discovery of theorems and conjectures at the forefront of mathematical research. This extends earlier work that used supervised learning to discover patterns by focusing on enabling mathematicians to understand the learned functions and derive helpful mathematical insight. The authors propose a framework for augmenting the standard mathematician’s tool kit with powerful pattern recognition and interpretation methods from machine learning. They demonstrate its value by showing how it led them to two fundamental new discoveries, one in topology and another in representation theory. The contribution shows how mature machine learning methods can be integrated into existing mathematical workflows to achieve useful results.

"Systematic review of predictive mathematical models of COVID-19 epidemic" - S. Shankar, S. Mohakuda, A. Kumar, P.S. Nazneen, A. Yadav, K. Chatterjee, K. Chatterjee[17]

This article reviews various mathematical models that were used to predict the epidemiological consequences of COVID-19. With advances in information technology and fast computational methods, mathematical models generated by AI were able to predict the impact COVID-19 would have on society at a global level. The number of published models grew quickly, doubling almost every 20 days during the pandemic. The models used specific techniques, assumptions, and data gathered from real cases of the pandemic, and the AI used those variables to predict outcomes and calculate the overall impact. Models developed in the early stages might have had a greater impact on planning and allocation of health care resources than later ones. The skills gained from the numerous mathematical models generated by the AI could be helpful for any future unexpected issues for society.

Mathematical AI work on predicting global issues such as the pandemic is important, since the skills and knowledge gained from the experience may help with the next global event. AI-based mathematical predictions can help forecast the spread of a virus, since AI models can analyze large amounts of data and provide predictions of how the virus will spread to different regions. They can also help predict resource needs by analyzing data on hospitalization rates, ventilator usage, and other factors. This information can help healthcare providers plan and allocate resources more effectively.

Programs/Platforms

MATLAB

MATLAB is a programming language and numerical computation platform used mainly in physics, mathematics, and engineering. It provides many tools and operations useful for difficult computations and tasks such as signal processing, machine learning, and data analysis. MATLAB also integrates visualization, computation, and programming in an environment where non-programmers can express solutions to problems in familiar mathematical notation.

Some uses include scientific engineering and graphics, modeling, simulation, prototyping, algorithmic development, and data analysis.

MATLAB is used frequently by many students at Georgia Tech, especially engineering majors. It can be used for computations involving arrays, vectors, and matrices. Georgia Tech's computing course for engineers, CS 1371, is required for all engineering majors and is a prerequisite for many advanced courses. The course helps students prepare for advanced studies, since they will be able to tackle more ambitious projects later on.

Theano

History of Theano

Theano was the Greek wife of Pythagoras, the famous mathematician and philosopher credited with the Pythagorean theorem. She was also his student. Theano ran the Pythagorean school in southern Italy in the late sixth century BC following her husband's death. She is best known for having written exemplary treatises on mathematics, physics, and child psychology. Some of her most important work is said to be the elucidation of the principle of the Golden Mean.

Theano in AI and Computer Science

Theano is a Python library that allows users to define and evaluate mathematical expressions involving multidimensional arrays, which makes it useful in deep learning projects. The library takes these symbolic structures and turns them into efficient code that uses NumPy and native libraries.

Some advantages of Theano include stability optimization (Theano locates numerically unstable expressions and evaluates them with more stable equivalents), execution speed optimization, and symbolic differentiation (Theano builds symbolic graphs for computing gradients automatically).
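To see why stability optimization matters, consider the expression log(1 + x) for a very small x, a commonly cited example of an expression that benefits from this kind of rewrite. The sketch below uses plain NumPy rather than Theano itself, so it only illustrates the numerical problem and the stable alternative, not Theano's internal machinery.

```python
import numpy as np

x = 1e-20

# Naive form: 1.0 + 1e-20 rounds to exactly 1.0 in double precision,
# so the logarithm comes out as 0.0 and the information in x is lost.
naive = np.log(1.0 + x)

# Stable form: log1p computes log(1 + x) without ever forming 1 + x.
stable = np.log1p(x)
```

A library that recognizes the unstable pattern in a symbolic expression can substitute the stable form automatically, so the user gets the accurate result without rewriting the formula by hand.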

Engagement Opportunities

The Big O Club is the theoretical computer science club at Georgia Tech. Through their various blog posts, they discuss topics such as greedy algorithms, Big O notation, Gaussian and Markov chains, approximation algorithms, and symmetric methods. For example, one of their posts, titled "The probabilistic method," covers popular proofs within combinatorics. This mathematical concept is critical for computer science as it helps design useful algorithms and make data compression more efficient. The club also discusses other mathematical concepts such as cryptography and many different types of probability. Although classes such as Linear Algebra and Discrete Mathematics can strengthen one's math background in these topics, this club goes even more in-depth, which is useful for those who want to learn the proper theory behind computing.

Understanding these concepts is extremely useful in AI applications. In particular, these mathematical methods and algorithms are critical for writing efficient data extraction features in AI systems. Those interested in the club can attend its meetings on Fridays in room 103 of the Georgia Tech College of Computing Building (CCB).

The RoboJackets club is the competitive robotics club at Georgia Tech. It is a multidisciplinary organization that gives students hands-on experience with all aspects of robotics, such as CAD design, mechanical training, firmware training, electrical training, and software training. The software training is designed to get members up to speed and give them real experience writing code using tools such as C++ and ROS. Members also learn some of the fundamental concepts of robotics that will be useful no matter which RoboJackets team they join: vision processing, machine learning, motion planning, motion control, and more. Ultimately, the environment encourages both breadth and depth, so members can work together in group environments as well as pursue independent projects in robotics.

One of the RoboJackets teams is BattleBots, in which two bots are pitted against each other in a gladiator-style arena. The bots are designed to be able to take damage from another bot in the same weight class, so the team focuses heavily on mechanical components alongside electrical components and controls. Another team is RoboNav, which is tasked with building an intelligent autonomous robot that navigates an obstacle course on its own in various surrounding conditions. The robot uses a neural network and LiDAR data to observe its surroundings.

No experience is required and they compete in collegiate level competitions every year.

Glossary Terms

Markov chains are a fundamental concept in both mathematics and AI. A Markov chain is a type of stochastic process that models a sequence of random events in which the probability of each event depends only on the outcome of the previous event. More formally, a Markov chain consists of a set of states and the transition probabilities between those states. These probabilities are usually represented as a matrix in which each element gives the probability of transitioning from one state to another.
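The matrix representation can be sketched in a few lines of NumPy. The two weather states and their probabilities below are hypothetical values chosen only for illustration.

```python
import numpy as np

states = ["sunny", "rainy"]  # hypothetical states

# Row i holds the probabilities of moving from state i to each state.
P = np.array([
    [0.9, 0.1],   # sunny -> sunny, sunny -> rainy
    [0.5, 0.5],   # rainy -> sunny, rainy -> rainy
])

# Every row of a transition matrix must sum to 1.
assert np.allclose(P.sum(axis=1), 1.0)

# Start in "sunny" with certainty and look two steps ahead.
start = np.array([1.0, 0.0])
after_two = start @ np.linalg.matrix_power(P, 2)
```

Multiplying the starting distribution by powers of the matrix gives the distribution over states after that many steps; here `after_two` is the probability of each kind of weather two days later.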

In AI, they are often used in NLP (natural language processing), machine translation, speech recognition, and other applications. They are often used for tasks such as language modeling, in which the goal is to predict the probability distribution of the next word in a sequence when given the previous words. By training a Markov chain on a large text, it is possible to build a language model that can create new text similar to the input text.
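A minimal sketch of such a language model is shown below, using only the previous word as the state (a bigram model). The tiny corpus and the function names are made up for illustration; a real model would be trained on far more text.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for each word, the list of words that follow it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(model, start, length, seed=0):
    """Generate up to `length` words by repeatedly sampling a follower."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = model.get(out[-1])
        if not options:          # dead end: the word was never followed by anything
            break
        out.append(rng.choice(options))
    return " ".join(out)


corpus = "the cat sat on the mat and the cat ate"
model = train_bigram_model(corpus)
sample = generate(model, "the", 6)
```

Because followers are stored with repetition, sampling uniformly from the list reproduces the observed transition frequencies: after "the", "cat" is twice as likely as "mat".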

Markov chains are also used in reinforcement learning, where they model the transitions between states of a Markov decision process (MDP). In an MDP, the agent takes actions that affect the state of the environment, and the goal is to find a policy that maximizes the cumulative reward.
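One standard way to find such a policy for a small, fully known MDP is value iteration. The sketch below applies it to a made-up two-state, two-action problem; the transition probabilities, rewards, and discount factor are all illustrative assumptions.

```python
import numpy as np

# P[a, s, s2]: probability of moving from state s to state s2 under action a.
P = np.array([
    [[1.0, 0.0], [0.0, 1.0]],   # action 0 = stay in the current state
    [[0.0, 1.0], [1.0, 0.0]],   # action 1 = move to the other state
])
R = np.array([0.0, 1.0])        # reward for arriving in state 0 or state 1
gamma = 0.9                     # discount factor for future rewards

V = np.zeros(2)
for _ in range(500):
    # Q[a, s]: expected reward plus discounted value when taking action a in state s.
    Q = P @ (R + gamma * V)
    V = Q.max(axis=0)

policy = Q.argmax(axis=0)       # best action in each state
```

The resulting policy moves out of state 0 and stays in state 1, since only arriving in state 1 is rewarded.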

Ultimately, Markov chains are a powerful mathematical tool for modeling the probabilities of certain stochastic processes and are widely used across AI.

Pattern recognition refers to the process of recognizing patterns or regularities in data, such as images, text, or sound. It is a fundamental task in machine learning, which is the branch of AI concerned with teaching machines to learn from data. In pattern recognition, an algorithm is trained on a dataset of labeled examples, where each example is associated with a particular pattern or category. The algorithm learns to recognize patterns by identifying common features in the data and using them to classify new examples. For example, in image recognition, a machine learning algorithm might be trained on a dataset of labeled images of cats and dogs. The algorithm would learn to recognize the patterns that distinguish cats from dogs, such as the shape of their ears, the color of their fur, and so on. Once trained, the algorithm could be used to classify new images as either cats or dogs. Pattern recognition is used in a wide range of AI applications, from computer vision and speech recognition to fraud detection and predictive maintenance.
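The train-then-classify loop described above can be sketched with one of the simplest possible classifiers, a nearest-centroid rule. The two numeric features and their values are invented for illustration (real image recognition works on pixels with far more capable models).

```python
import numpy as np

# Made-up 2-D features for labeled examples (e.g. ear pointiness, snout length).
X_train = np.array([
    [0.90, 0.20], [0.80, 0.30], [0.85, 0.25],   # cats
    [0.30, 0.80], [0.20, 0.90], [0.25, 0.70],   # dogs
])
y_train = np.array(["cat", "cat", "cat", "dog", "dog", "dog"])

# "Training": summarize each category by the average of its examples.
labels = np.unique(y_train)
centroids = np.array([X_train[y_train == label].mean(axis=0) for label in labels])


def classify(x):
    """Assign a new example to the category with the closest centroid."""
    distances = np.linalg.norm(centroids - np.asarray(x), axis=1)
    return str(labels[np.argmin(distances)])
```

For example, `classify([0.9, 0.1])` falls nearest the cat centroid, while `classify([0.1, 0.9])` falls nearest the dog centroid.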

Quantum Computing

Quantum Computing Opportunities at GT

Quantum Computing Classes

Georgia Tech is offering more and more ways to learn about and engage with quantum computing (QC). The most obvious way to engage with QC is to take a class on it. The most notable example is PHYS 4782, a higher-level introduction to QC that focuses on developing the theoretical background necessary to understand QC fully. Other relevant classes include CS 4803 and PHYS 4813 (Quantum Technologies). The CS class is less mathematically rigorous but caters more to those looking to develop applications rather than design new quantum algorithms or work on the theory of quantum computation.

Lastly, for those who want to help develop QC technologies and research, there are research opportunities at GT related to quantum science. In the CS department, professors such as Dr. Qureshi and Dr. Conte have CS projects involving QC. In the physics department, there are many professors who do quantum physics related research. GTRI also has a quantum systems division with research specifically focused on quantum computing and sensing related projects. The division hires student assistants periodically and is a great place to develop many of the skills necessary to research and develop technologies related to QC. These research opportunities are all on the more fundamental side of QC development, such as making better hardware or developing better compilers for quantum circuits, rather than on applications of QC such as AI, though they are important nonetheless.

Quantum Computing Association

For students looking for a more varied and less committed experience with QC, there is the Quantum Computing Association (QCA) at GT. This club meets every Thursday from 6-7 PM in room 340 of Georgia Tech's College of Computing Building (CCB).

This organization serves multiple purposes: creating a community of those interested in quantum computing, connecting students with resources related to quantum computing, and growing students' skills in the subject.

QCA holds weekly meetings with talks on quantum computing from students, scientists, and QC start-ups. This club focuses on exposing students to a wide variety of topics and people working in QC. Furthermore, there are many workshops and seminars where those interested can talk to professionals in the field such as professors and start-up founders.

If one is interested in joining this organization, there is a Slack that displays meeting information. However, if there are any questions, then one can email dgeorge44@gatech.edu.

Relevant Research Articles

This paper gives an overview of the basics of Quantum Machine Learning (QML). It explores the basic algorithms and architectures associated with QML, such as Quantum Neural Networks (QNNs) and Quantum Kernels, describing their implementation and applications at a high level. The currently known applications of QML include quantum simulation, enhanced quantum computing, quantum machine perception, and improved classical data analysis. In practice, QML is a broad term that encompasses all of these tasks. For example, one can apply machine learning to quantum applications like discovering quantum algorithms or optimizing quantum experiments, or one can use a quantum neural network to process either classical or quantum information. Even classical tasks can be viewed as QML when they are quantum inspired. The authors note that their article focuses on quantum neural networks, quantum deep learning, and quantum kernels, even though the field of QML is quite broad and goes beyond these topics.

After the invention of the laser, it was called a solution in search of a problem. To some degree, the situation with QML is similar: the complete list of its applications is not fully known. Nevertheless, it is possible to speculate that all the areas described will be impacted by QML. For example, QML will likely benefit chemistry, materials science, sensing and metrology, classical data analysis, quantum error correction, and quantum algorithm design. Some of these applications produce data that is inherently quantum mechanical, and hence it is natural to apply QML (rather than classical ML) to them.

Programs for Quantum Computing

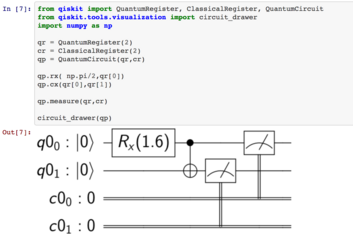

Qiskit

Qiskit is an open-source software development kit (SDK) developed by IBM that allows users to create programs and run them on a quantum computer at the level of pulses, circuits, or application modules. Qiskit is written in Python, and its workflow consists primarily of 4 steps:

1. Design: The user first designs a quantum circuit. This quantum circuit could represent an algorithm to solve a particular problem or a benchmark test of a quantum computer.

2. Compile: The quantum circuit gets compiled using Qiskit's compilation functions. This ensures that the circuit is broken down into elementary 1- and 2-qubit gates that can be run on a real quantum computer or simulator.

3. Run: The user can then run the quantum circuit on a backend. This backend could be a quantum simulator, a program that simulates how a quantum circuit would run on an ideal quantum computer and can normally be run locally, or an actual quantum computer such as the ones IBM operates (this is done over the cloud). With an IBM account, one can run quantum circuits with up to 7 qubits on a real quantum computer for free.

4. Results: The user then gets the results of their program running on a backend and can compute summary statistics and visualize the results of these experiments.

Qiskit is the most used quantum SDK in the world and offers many resources for learning about quantum computing topics, including the Qiskit textbook, which is available for free.
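To make the four steps concrete without depending on any particular Qiskit version, the sketch below walks through them with a hand-written NumPy simulation of a two-qubit circuit that prepares an entangled Bell state. It does not use Qiskit's API: the "compile" step is just a matrix product standing in for real compilation, and the basis ordering (qubit 0 written first) is a choice made for this example.

```python
import numpy as np

# 1. Design: Hadamard on qubit 0, then CNOT with qubit 0 as control, qubit 1 as target.
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
I2 = np.eye(2)
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
])

# 2. "Compile" (stand-in): combine the gates into one matrix.
#    Basis states are ordered |q0 q1> = 00, 01, 10, 11.
circuit = CNOT @ np.kron(H, I2)

# 3. Run: apply the circuit to |00> on an ideal, noise-free simulator.
state = circuit @ np.array([1.0, 0.0, 0.0, 0.0])

# 4. Results: measurement probabilities and simulated counts over 1000 shots.
outcomes = ["00", "01", "10", "11"]
probs = np.abs(state) ** 2
rng = np.random.default_rng(0)
shots = rng.choice(outcomes, size=1000, p=probs)
counts = {b: int((shots == b).sum()) for b in outcomes}
```

On an ideal simulator only the outcomes 00 and 11 ever appear, each about half the time, which is the signature of the Bell state; a real device would also show a small number of 01 and 10 results because of noise.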

PennyLane is an open-source software framework developed by the quantum computing start-up Xanadu for quantum machine learning, quantum chemistry, and quantum computing, and it is designed to run on a wide range of quantum hardware and simulators. PennyLane is a Python package that allows users to create differentiable quantum circuits, meaning that quantum circuits can have parameters that are tuned and adjusted based on the results of running the circuit. This is one of the most important differences between PennyLane and Qiskit.
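The idea of a differentiable circuit can be illustrated with the parameter-shift rule, one technique for obtaining exact gradients of a circuit's output by running the same circuit at two shifted parameter values. The sketch below simulates a one-qubit circuit with NumPy and does not use PennyLane itself; the function names are hypothetical.

```python
import numpy as np


def expectation_z(theta):
    """Expectation value of Z after applying the rotation RY(theta) to |0>."""
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    Z = np.array([[1.0, 0.0], [0.0, -1.0]])
    return float(state @ Z @ state)


def parameter_shift_grad(f, theta):
    """Gradient of f at theta from two evaluations shifted by +/- pi/2."""
    return 0.5 * (f(theta + np.pi / 2) - f(theta - np.pi / 2))


theta = 0.7
grad = parameter_shift_grad(expectation_z, theta)
```

For this circuit the expectation value equals cos(theta), so the computed gradient matches -sin(theta). With a gradient available, the circuit parameter can be tuned by the same optimizers used to train classical neural networks.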

This framework is sponsored by multiple companies, including Amazon, NVIDIA, and Microsoft. The framework also provides resources for learning different aspects of quantum computing through various articles. Many plug-ins can be used with it, such as Qulacs (which is written in C/C++), a simulator for large quantum circuits that runs at high speed.

This framework is extremely useful for beginners who want to delve into this field, especially the software side.

Glossary Terms

A quantum circuit is a pictographic way of representing a quantum algorithm. Each horizontal line represents a qubit, and any operations performed on a single qubit are placed along its line as labeled squares. Entangling operations such as multi-qubit gates are drawn as vertical lines between qubits or as a rectangle spanning multiple qubits. Quantum circuit diagrams may also include other elements, such as measurements on a qubit, rails representing classical bits, and blocks for quantum algorithms with tunable parameters (these are especially relevant for quantum machine learning).

These circuits are used to express various algorithms, such as Grover's algorithm, which finds a marked item in an unsorted database. Going back to quantum machine learning, quantum circuits can be used to build quantum neural networks, which manipulate qubits to allow computing machines to learn from a dataset. Quantum neural networks may offer speedups over classical neural networks for certain problems, which would be valuable in optimization, so understanding quantum circuits is important within the field of machine learning.
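As a small illustration of Grover's algorithm, the sketch below simulates one Grover iteration on a four-item search space (two qubits) using NumPy matrices instead of a circuit library. The index of the marked item is an arbitrary choice for the example.

```python
import numpy as np

n_items = 4      # size of the unsorted "database" (two qubits)
marked = 2       # index of the item being searched for (arbitrary choice)

# Start in a uniform superposition over all items.
state = np.full(n_items, 1 / np.sqrt(n_items))

# Oracle: flip the sign of the marked item's amplitude.
oracle = np.eye(n_items)
oracle[marked, marked] = -1

# Diffusion operator: reflect every amplitude about the mean amplitude.
s = np.full((n_items, 1), 1 / np.sqrt(n_items))
diffusion = 2 * (s @ s.T) - np.eye(n_items)

# One Grover iteration: oracle followed by diffusion.
state = diffusion @ (oracle @ state)
probs = state ** 2
```

With four items, a single iteration concentrates all of the probability on the marked item. For larger search spaces, roughly the square root of the number of items iterations are needed, compared with checking items one by one classically.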

Hybrid Quantum Algorithm

A hybrid algorithm is an algorithm that combines classical and quantum computation: part of it runs on a quantum computer and the rest runs on a classical computer. Hybrid algorithms have the most promise to offer a computational advantage in the near term for certain tasks because they keep the workload on the quantum computer small. This is essential for current quantum computers, which suffer from errors and decoherence, so it is beneficial to minimize the number of computations they need to do.

There are various examples of hybrid quantum algorithms, such as the Variational Quantum Eigensolver (VQE), which is used to find the ground-state energy of a molecule, and the Quantum Approximate Optimization Algorithm (QAOA), which is used to find the minimum of a function expressed as a quadratic optimization problem.
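The division of labor in a variational algorithm like VQE can be sketched as a loop: a "quantum" step estimates the energy for the current circuit parameter, and a classical optimizer updates that parameter. In the sketch below the quantum step is simulated with NumPy, the one-qubit Hamiltonian is a made-up toy rather than a real molecule, and the step size and iteration count are arbitrary.

```python
import numpy as np

Z = np.array([[1.0, 0.0], [0.0, -1.0]])
X = np.array([[0.0, 1.0], [1.0, 0.0]])
hamiltonian = Z + 0.5 * X          # hypothetical toy Hamiltonian


def energy(theta):
    """'Quantum' step: prepare RY(theta)|0> and measure <H> (simulated here)."""
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    return float(state @ hamiltonian @ state)


# Classical step: gradient descent on theta, with the gradient obtained from
# two extra energy evaluations at shifted parameters (parameter-shift rule).
theta = 0.1
for _ in range(200):
    grad = 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))
    theta -= 0.4 * grad

vqe_energy = energy(theta)
exact_ground = float(np.linalg.eigvalsh(hamiltonian)[0])  # reference value
```

The optimized energy agrees with the exact lowest eigenvalue of the toy Hamiltonian. On real hardware each call to `energy` would be a short circuit run many times, which is why keeping the quantum part small matters.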

These algorithms are important in the field of Quantum Computing because they allow for more efficient solutions to complex problems.

Conclusion

As explained on this page, artificial intelligence is an extremely complicated field of technology and as a result is frequently misunderstood. Hopefully, this page helps explain not only how artificial intelligence algorithms generally work but also how these algorithms are applied to revolutionize fields as diverse as quantum computing and predictive financial modeling. As AI continues to develop and as these algorithms are given access to larger and larger quantities of data, these applications will only continue to advance, expanding the impact that this emerging technology has on our society as a whole. In terms of limitations, our team was constrained by minimal experience with the subject matter, as the team is composed of first-year undergraduate students and AI is an advanced concept taught in upper-level coursework. Similarly, this website is not designed to fully explain the fields of artificial intelligence and quantum computing, but there are various links to pertinent work being done in the field that the user can access for more complete and accurate information. Although the team has no plans to continue to update this website, it currently serves as a concise and effective introduction to AI, quantum computing, and the applications of those fields.

Citations

- ^ Razavian N, Knoll F, Geras KJ. Artificial Intelligence Explained for Nonexperts. Semin Musculoskelet Radiol. 2020 Feb;24(1):3-11. doi: 10.1055/s-0039-3401041. Epub 2020 Jan 28. PMID: 31991447; PMCID: PMC7393604.

- ^ Haenlein, M., & Kaplan, A. (2019). A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence. California Management Review, 61(4), 5–14. https://doi.org/10.1177/0008125619864925

- ^ PyTorch. (2023, February 3). In Wikipedia. https://ru.wikipedia.org/wiki/PyTorch

- ^ Google. “Google Colaboratory SVG Logo.” Wikimedia, Wikimedia Commons, 21 Aug. 2021, https://commons.wikimedia.org/wiki/File:Google_Colaboratory_SVG_Logo.svg. Accessed 12 Apr. 2023.

- ^ Brown, Sara. “Machine Learning, Explained.” MIT Sloan, MIT, 21 Apr. 2021, https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained#:~:text=What%20is%20machine%20learning%3F,to%20how%20%20humans%20%20solve%20%20problems.

- ^ Fatima, Rubia; Samad Shaikh, Naila; Riaz, Adnan; Ahmad, Sadique; El-Affendi, Mohammed A.; Alyamani, Khaled A. Z.; Nabeel, Muhammad; Ali Khan, Javed; Yasin, Affan; Latif, Rana M. Amir (2022-09-14). "A Natural Language Processing (NLP) Evaluation on COVID-19 Rumour Dataset Using Deep Learning Techniques". Computational Intelligence & Neuroscience: 1–17. doi:10.1155/2022/6561622.

- ^ Oliveira, Lucas Emanuel Silva e; Peters, Ana Carolina; da Silva, Adalniza Moura Pucca; Gebeluca, Caroline Pilatti; Gumiel, Yohan Bonescki; Cintho, Lilian Mie Mukai; Carvalho, Deborah Ribeiro; Al Hasan, Sadid; Moro, Claudia Maria Cabral (2022-05-08). "SemClinBr - a multi-institutional and multi-specialty semantically annotated corpus for Portuguese clinical NLP tasks". Journal of Biomedical Semantics. 13 (1): 1–19. doi:10.1186/s13326-022-00269-1.

- ^ "NLP and AI Seminars | ML (Machine Learning) at Georgia Tech". ml.gatech.edu. Retrieved 2023-04-14.

- ^ www.cc.gatech.edu https://www.cc.gatech.edu/classes/AY2021/cs4650_spring/. Retrieved 2023-04-14.

- ^ Ludkovski, M. (2023-03-10). "Statistical Machine Learning for Quantitative Finance". Annual Review of Statistics and Its Application. 10 (1): 271–295. doi:10.1146/annurev-statistics-032921-042409. ISSN 2326-8298.

- ^ Gan, Lirong; Wang, Huamao; Yang, Zhaojun (2020-04-01). "Machine learning solutions to challenges in finance: An application to the pricing of financial products". Technological Forecasting and Social Change. 153: 119928. doi:10.1016/j.techfore.2020.119928. ISSN 0040-1625.

- ^ "Anaconda | The World's Most Popular Data Science Platform". Anaconda. Retrieved 2023-04-10.

- ^ "GitHub: Let's build from here". GitHub. Retrieved 2023-04-10.

- ^ "Machine Learning for Financial Markets | Vertically Integrated Projects |". www.vip.gatech.edu. Retrieved 2023-04-10.

- ^ "Georgia Tech Trading | Trading Club At Georgia Tech". Trading Club @ GT. Retrieved 2023-04-10.

- ^ "Advancing Mathematics By Guiding Human Intuition with AI". Retrieved 2021-12-02.

- ^ Shankar, Subramanian; Mohakuda, Sourya Sourabh; Kumar, Ankit; Nazneen, P. S.; Yadav, Arun Kumar; Chatterjee, Kaushik; Chatterjee, Kaustuv (2021-07-01). "Systematic review of predictive mathematical models of COVID-19 epidemic". Medical Journal Armed Forces India. SPECIAL ISSUE: COVID-19 - NAVIGATING THROUGH CHALLENGES. 77: S385–S392. doi:10.1016/j.mjafi.2021.05.005. ISSN 0377-1237.

- ^ Cerezo, M., Verdon, G., Huang, HY. et al. Challenges and opportunities in quantum machine learning. Nat Comput Sci 2, 567–576 (2022). https://doi.org/10.1038/s43588-022-00311-3

- ^ "Pennylane". pennylane.ai. Retrieved 2023-04-17.