User:19sully96/sandbox

Canonical Ensemble edits


Free energy, ensemble averages, and exact differentials

- The partial derivatives of the function F(N, V, T) give important canonical ensemble average quantities:

  - the average pressure is[1] $\langle p \rangle = -\dfrac{\partial F}{\partial V}$,

  - the Gibbs entropy is[1] $S = -k \langle \log P \rangle = -\dfrac{\partial F}{\partial T}$,

  - the partial derivative ∂F/∂N is approximately related to chemical potential, although the concept of chemical equilibrium does not exactly apply to canonical ensembles of small systems,[note 1]

  - and the average energy is[1] $\langle E \rangle = F + ST$.

- Exact differential: From the above expressions, it can be seen that the function F(V, T), for a given N, has the exact differential[1] $dF = -S\,dT - \langle p \rangle\,dV$.

- First law of thermodynamics: Substituting the above relationship for ⟨E⟩ into the exact differential of F, an equation similar to the first law of thermodynamics is found, except with average signs on some of the quantities:[1] $d\langle E \rangle = T\,dS - \langle p \rangle\,dV$.

- Energy fluctuations: The energy in the system has uncertainty in the canonical ensemble.

  - The variance of the energy is[1] $\langle E^2 \rangle - \langle E \rangle^2 = kT^2 \dfrac{\partial \langle E \rangle}{\partial T}$.

  - The standard deviation of the energy is related to the heat capacity $C_V = \partial \langle E \rangle / \partial T$ by[2] $\sigma_E = \sqrt{kT^2 C_V}$.

  - The relative energy fluctuations decrease with system size by extensivity, since ⟨E⟩ ∝ N and $C_V$ ∝ N, giving[3] $\dfrac{\sigma_E}{\langle E \rangle} \propto \dfrac{1}{\sqrt{N}}$.
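The relations in the list above can be checked numerically for a small system. The following is a minimal sketch, assuming a hypothetical three-level spectrum (the level energies, the temperature and the step `dT` are illustrative choices, not values from the text). It compares the Gibbs entropy with −∂F/∂T, the average energy with F + ST, and the energy variance with kT² ∂⟨E⟩/∂T, using central finite differences for the derivatives.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def canonical_averages(energies, temperature):
    """Return (F, <E>, S, var_E) for a discrete spectrum at temperature T."""
    beta = 1.0 / (K_B * temperature)
    e_min = min(energies)
    # Shift by the lowest energy so the exponentials cannot overflow.
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z_shifted = sum(weights)
    probs = [w / z_shifted for w in weights]
    # F = -kT ln Z, with Z = exp(-beta * e_min) * z_shifted.
    free_energy = e_min - K_B * temperature * math.log(z_shifted)
    mean_e = sum(p * e for p, e in zip(probs, energies))
    entropy = -K_B * sum(p * math.log(p) for p in probs if p > 0.0)
    var_e = sum(p * (e - mean_e) ** 2 for p, e in zip(probs, energies))
    return free_energy, mean_e, entropy, var_e

# Hypothetical three-level system; energies in joules, comparable to kT at 300 K.
levels = [0.0, 2.0e-21, 5.0e-21]
T = 300.0
dT = 1.0e-3  # step for the central finite differences

F, E, S, var_E = canonical_averages(levels, T)
F_plus, E_plus, _, _ = canonical_averages(levels, T + dT)
F_minus, E_minus, _, _ = canonical_averages(levels, T - dT)

S_from_F = -(F_plus - F_minus) / (2 * dT)                 # S = -dF/dT
var_from_E = K_B * T**2 * (E_plus - E_minus) / (2 * dT)   # kT^2 d<E>/dT

print("S:", S, "vs -dF/dT:", S_from_F)
print("<E>:", E, "vs F + TS:", F + T * S)
print("var(E):", var_E, "vs kT^2 d<E>/dT:", var_from_E)
```

Each printed pair should agree up to finite-difference and rounding error.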

Boltzmann edits


In statistical mechanics and mathematics, a Boltzmann distribution (also called Gibbs distribution[4]) is a probability distribution or probability measure that gives the probability that a system will be in a certain state as a function of that state's energy and the temperature of the system. It is also a frequency distribution of particles in a system.[citation needed] The distribution is expressed in the form

$$p_i \propto \exp\left(-\frac{\varepsilon_i}{kT}\right)$$

where $p_i$ is the probability of the system being in state i, $\varepsilon_i$ is the energy of that state, and the constant kT of the distribution is the product of Boltzmann's constant k and thermodynamic temperature T. The symbol ∝ denotes proportionality (see § The distribution for the proportionality constant).

The term system here has a very wide meaning; it can range from a single atom to a macroscopic system such as a natural gas storage tank. Because of this the Boltzmann distribution can be used to solve a very wide variety of problems. The distribution shows that states with lower energy will always have a higher probability of being occupied than the states with higher energy.[5]

The ratio of probabilities of two states is known as the Boltzmann factor and characteristically depends only on the states' energy difference:

$$\frac{p_i}{p_j} = \exp\left(\frac{\varepsilon_j - \varepsilon_i}{kT}\right)$$

The Boltzmann distribution is named after Ludwig Boltzmann, who first formulated it in 1868 during his studies of the statistical mechanics of gases in thermal equilibrium. Boltzmann's statistical work is borne out in his paper "On the Relationship between the Second Fundamental Theorem of the Mechanical Theory of Heat and Probability Calculations Regarding the Conditions for Thermal Equilibrium".[6] The distribution was later investigated extensively, in its modern generic form, by Josiah Willard Gibbs in 1902.[1]: Ch.IV

The Boltzmann distribution should not be confused with the Maxwell–Boltzmann distribution. The former gives the probability that a system will be in a certain state as a function of that state's energy;[5] in contrast, the latter is used to describe particle speeds in idealized gases.

The distribution

The Boltzmann distribution is a probability distribution that gives the probability of a certain state as a function of that state's energy and the temperature of the system to which the distribution is applied.[7] It is given as

$$p_i = \frac{1}{Q} e^{-\varepsilon_i / kT} = \frac{e^{-\varepsilon_i / kT}}{\sum_{j=1}^{M} e^{-\varepsilon_j / kT}}$$

where $p_i$ is the probability of state i, $\varepsilon_i$ the energy of state i, k the Boltzmann constant, T the temperature of the system and M the number of all states accessible to the system of interest.[7][5] Implied parentheses around the denominator kT are omitted for brevity. The normalization denominator Q (denoted by some authors by Z) is the canonical partition function

$$Q = \sum_{j=1}^{M} e^{-\varepsilon_j / kT}$$

It results from the constraint that the probabilities of all accessible states must add up to 1.

The Boltzmann distribution is the distribution that maximizes the entropy

$$S(p_1, p_2, \ldots, p_M) = -\sum_{i=1}^{M} p_i \log p_i$$

subject to the constraint that $\sum_{i=1}^{M} p_i \varepsilon_i$ equals a particular mean energy value (which can be proven using Lagrange multipliers).
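The Lagrange-multiplier argument can be sketched as follows. The multipliers $\alpha$ and $\beta$ and the symbol $U$ for the prescribed mean energy are notation introduced here for illustration, and the natural logarithm is used (a different base only rescales the entropy and does not change the maximizer).

```latex
% Maximize the entropy subject to normalization and a fixed mean energy U.
\mathcal{L} = -\sum_{i=1}^{M} p_i \ln p_i
  - \alpha \Big( \sum_{i=1}^{M} p_i - 1 \Big)
  - \beta \Big( \sum_{i=1}^{M} p_i \varepsilon_i - U \Big)

% Stationarity with respect to each p_i:
\frac{\partial \mathcal{L}}{\partial p_i}
  = -\ln p_i - 1 - \alpha - \beta \varepsilon_i = 0
\quad\Longrightarrow\quad
p_i = e^{-1-\alpha}\, e^{-\beta \varepsilon_i}

% The normalization constraint fixes the prefactor:
p_i = \frac{e^{-\beta \varepsilon_i}}{\sum_{j=1}^{M} e^{-\beta \varepsilon_j}}
```

Identifying $\beta = 1/(kT)$ recovers the form of the distribution given above; the value of $\beta$ is fixed implicitly by the mean-energy constraint.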

The partition function can be calculated if we know the energies of the states accessible to the system of interest. For atoms the partition function values can be found in the NIST Atomic Spectra Database.[8]

The distribution shows that states with lower energy will always have a higher probability of being occupied than the states with higher energy. It can also give us the quantitative relationship between the probabilities of the two states being occupied. The ratio of probabilities for states i and j is given as

$$\frac{p_i}{p_j} = \exp\left(\frac{\varepsilon_j - \varepsilon_i}{kT}\right)$$

where $p_i$ is the probability of state i, $p_j$ the probability of state j, and $\varepsilon_i$ and $\varepsilon_j$ are the energies of states i and j, respectively.

For example, the ratios of hydrogen excited states to the ground state in solar atmospheres depend on temperature: the fraction of atoms in one state versus another is given by a Boltzmann factor in which the energies correspond to the energy levels of the hydrogen atom. For the Sun, at a temperature of about 5800 K, the fraction of hydrogen in the first excited state compared to the ground state is about $10^{-9}$, meaning that for every billion atoms in the ground state there is about one atom in the first excited state. This is particularly useful in understanding the strength of stellar spectral lines. To gain a full understanding of spectral lines, however, one needs to take ionization into account, which requires the Saha ionization equation.
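The hydrogen example can be reproduced in a few lines. This is a minimal sketch under stated assumptions: the level energies come from the Bohr formula $E_n = -13.6\ \text{eV}/n^2$, and the statistical weights (degeneracies) of the levels are ignored, so only the bare Boltzmann factor is computed. Including the weights $g_n = 2n^2$ would multiply the result by $g_2/g_1 = 4$. The function names are illustrative.

```python
import math

K_B_EV = 8.617333262e-5   # Boltzmann constant in eV/K
RYDBERG_EV = 13.605693    # hydrogen ground-state binding energy in eV

def hydrogen_level_energy(n):
    """Bohr-model energy of level n in eV, relative to the ionization limit."""
    return -RYDBERG_EV / n**2

def boltzmann_factor(e_upper, e_lower, temperature):
    """Ratio p_upper / p_lower = exp(-(e_upper - e_lower) / kT)."""
    return math.exp(-(e_upper - e_lower) / (K_B_EV * temperature))

# First excited state (n = 2) relative to the ground state (n = 1) at 5800 K.
ratio = boltzmann_factor(hydrogen_level_energy(2), hydrogen_level_energy(1), 5800.0)
print(f"{ratio:.2e}")
```

The printed value is of order $10^{-9}$, consistent with roughly one excited atom per billion ground-state atoms.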

The Boltzmann distribution is often used to describe the distribution of particles, such as atoms or molecules, over energy states accessible to them. If we have a system consisting of many particles, the probability of a particle being in state i is practically the probability that, if we pick a random particle from that system and check what state it is in, we will find it is in state i. This probability is equal to the number of particles in state i divided by the total number of particles in the system, that is, the fraction of particles that occupy state i:

$$p_i = \frac{N_i}{N}$$

where $N_i$ is the number of particles in state i and N is the total number of particles in the system. We may use the Boltzmann distribution to find this probability that is, as we have seen, equal to the fraction of particles that are in state i. So the equation that gives the fraction of particles in state i as a function of the energy of that state is[5]

$$\frac{N_i}{N} = \frac{e^{-\varepsilon_i / kT}}{\sum_{j=1}^{M} e^{-\varepsilon_j / kT}}$$

This equation is of great importance to spectroscopy. In spectroscopy we observe a spectral line produced by atoms or molecules going from one state to another.[5][9] For this to be possible, there must be some particles in the first state to undergo the transition. Whether this condition is fulfilled can be checked by finding the fraction of particles in the first state. If it is negligible, the transition is very unlikely to be observed at the temperature for which the calculation was done. In general, a larger fraction of molecules in the first state means a higher number of transitions to the second state.[10] This gives a stronger spectral line. However, other factors also influence the intensity of a spectral line, such as whether it is caused by an allowed or a forbidden transition.

The Boltzmann distribution is related to the softmax function commonly used in machine learning.
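The connection can be made concrete: the Boltzmann probabilities are the softmax of the negative energies divided by kT. The sketch below is illustrative only; the function names and the sample energies are assumptions, and the softmax uses the usual max-subtraction trick for numerical stability. It also shows that adding a constant to all energies leaves the probabilities unchanged.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def boltzmann_probabilities(energies, kT):
    """Boltzmann distribution written as softmax(-energy / kT)."""
    return softmax([-e / kT for e in energies])

energies = [0.0, 1.0, 2.0]  # hypothetical energies, in the same units as kT
probs = boltzmann_probabilities(energies, kT=1.0)

# A uniform shift of all energies cancels in the normalization.
shifted = boltzmann_probabilities([e + 10.0 for e in energies], kT=1.0)

print(probs)
print(shifted)
```

In machine-learning usage the logits play the role of −ε/kT, and a softmax "temperature" parameter plays the role of kT.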


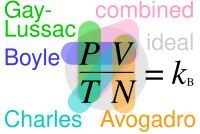

Ideal gas law edits


Derivations

Empirical

The empirical laws that led to the derivation of the ideal gas law were discovered with experiments that changed only two state variables of the gas and kept all the others constant.

All the possible gas laws that could have been discovered with this kind of setup are:

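$PV = C_1$ or $P_1 V_1 = P_2 V_2$ (1), known as Boyle's law

$\dfrac{V}{T} = C_2$ or $\dfrac{V_1}{T_1} = \dfrac{V_2}{T_2}$ (2), known as Charles's law

$\dfrac{V}{N} = C_3$ or $\dfrac{V_1}{N_1} = \dfrac{V_2}{N_2}$ (3), known as Avogadro's law

$\dfrac{P}{T} = C_4$ or $\dfrac{P_1}{T_1} = \dfrac{P_2}{T_2}$ (4), known as Gay-Lussac's law

$NT = C_5$ or $N_1 T_1 = N_2 T_2$ (5)

$\dfrac{P}{N} = C_6$ or $\dfrac{P_1}{N_1} = \dfrac{P_2}{N_2}$ (6)

where "P" stands for pressure, "V" for volume, "N" for the number of particles in the gas and "T" for temperature. The quantities $C_1, \ldots, C_6$ are not actual constants, but are constant in this context because each equation requires that only the parameters explicitly noted in it change.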

Deriving the ideal gas law does not require all six formulas. Knowing three suitable ones is enough to derive the rest, or to derive just one more, since the derivation of the ideal gas law itself uses four.

Since each formula holds only when the state variables involved in it change while the others remain constant, we cannot simply use algebra to combine them all directly. For example, Boyle did his experiments while keeping N and T constant, and this must be taken into account.

Keeping this in mind, to carry the derivation on correctly, one must imagine the gas being altered by one process at a time. The derivation using 4 formulas can look like this:

At first the gas has parameters $P_1, V_1, N_1, T_1$.

Say, starting to change only pressure and volume, according to Boyle's law, then:

$P_1 V_1 = P_2 V_2$ (7)

After this process, the gas has parameters $P_2, V_2, N_1, T_1$.

Using then Eq. (5) to change the number of particles in the gas and the temperature,

$N_1 T_1 = N_2 T_2$ (8)

After this process, the gas has parameters $P_2, V_2, N_2, T_2$.

Using then Eq. (6) to change the pressure and the number of particles,

$\dfrac{P_2}{N_2} = \dfrac{P_3}{N_3}$ (9)

After this process, the gas has parameters $P_3, V_2, N_3, T_2$.

Using then Charles's law to change the volume and temperature of the gas,

$\dfrac{V_2}{T_2} = \dfrac{V_3}{T_3}$ (10)

After this process, the gas has parameters $P_3, V_3, N_3, T_3$.

Using simple algebra on equations (7), (8), (9) and (10) yields the result:

$\dfrac{P_1 V_1}{N_1 T_1} = \dfrac{P_3 V_3}{N_3 T_3}$ or $\dfrac{PV}{NT} = k_B$, where $k_B$ stands for Boltzmann's constant.

Another equivalent result, using the fact that $nR = N k_B$, where "n" is the number of moles in the gas and "R" is the universal gas constant, is:

$PV = nRT$, which is known as the ideal gas law.

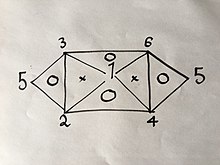

If three of the six formulas are known, or have been found experimentally, the rest can be derived using the method explained above. Because each equation relates only two variables, however, not every set of three formulas will do. For example, Eqs. (1), (2) and (4) yield nothing new, because combining any two of them gives the third. Eqs. (1), (2) and (3), on the other hand, yield all six equations without the remaining experiments: combining (1) and (2) yields (4), then (1) and (3) yield (6), then (4) and (6) yield (5), as does the combination of (2) and (3). These relations are summarized visually in the accompanying diagram, where the numbers represent the gas laws numbered above.

Applying the same method to two of the three laws on the vertices of a triangle that has an "O" inside it yields the third.

For example:

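Change only pressure and volume first: $P_1 V_1 = P_2 V_2$ (1´)

then only volume and temperature: $\dfrac{V_2}{T_1} = \dfrac{V_3}{T_2}$ (2´)

then, as we can choose any value for $V_3$, if we set $V_1 = V_3$, Eq. (2´) becomes: $\dfrac{V_2}{T_1} = \dfrac{V_1}{T_2}$ (3´)

combining equations (1´) and (3´) yields $\dfrac{P_1}{T_1} = \dfrac{P_2}{T_2}$, which is Eq. (4), of which we had no prior knowledge until this derivation.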

Theoretical

Kinetic theory

The ideal gas law can also be derived from first principles using the kinetic theory of gases, which makes several simplifying assumptions. Chief among them are that the molecules, or atoms, of the gas are point masses, possessing mass but no significant volume, and that they undergo only elastic collisions with each other and with the sides of the container, in which both linear momentum and kinetic energy are conserved.

Statistical mechanics

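Let q = (qx, qy, qz) and p = (px, py, pz) denote the position vector and momentum vector of a particle of an ideal gas, respectively. Let F denote the net force on that particle. Then the time average of q · F, which equals minus twice the time-averaged kinetic energy of the particle, is:

$$\begin{aligned}
\langle \mathbf{q} \cdot \mathbf{F} \rangle &= \left\langle q_x \frac{dp_x}{dt} \right\rangle + \left\langle q_y \frac{dp_y}{dt} \right\rangle + \left\langle q_z \frac{dp_z}{dt} \right\rangle \\
&= -\left\langle q_x \frac{\partial H}{\partial q_x} \right\rangle - \left\langle q_y \frac{\partial H}{\partial q_y} \right\rangle - \left\langle q_z \frac{\partial H}{\partial q_z} \right\rangle = -3 k_B T,
\end{aligned}$$

where the first equality is Newton's second law, and the second line uses Hamilton's equations and the equipartition theorem. Summing over a system of N particles yields

$$3 N k_B T = -\left\langle \sum_{k=1}^{N} \mathbf{q}_k \cdot \mathbf{F}_k \right\rangle.$$

By Newton's third law and the ideal gas assumption, the net force of the system is the force applied by the walls of the container, and this force is given by the pressure P of the gas. Hence

$$-\left\langle \sum_{k=1}^{N} \mathbf{q}_k \cdot \mathbf{F}_k \right\rangle = P \oint_{\text{surface}} \mathbf{q} \cdot d\mathbf{S},$$

where dS is the infinitesimal area element along the walls of the container. Since the divergence of the position vector q is

$$\nabla \cdot \mathbf{q} = \frac{\partial q_x}{\partial q_x} + \frac{\partial q_y}{\partial q_y} + \frac{\partial q_z}{\partial q_z} = 3,$$

the divergence theorem implies that

$$P \oint_{\text{surface}} \mathbf{q} \cdot d\mathbf{S} = P \int_{\text{volume}} (\nabla \cdot \mathbf{q})\, dV = 3PV,$$

where dV is an infinitesimal volume within the container and V is the total volume of the container.

Putting these equalities together yields

$$3 N k_B T = -\left\langle \sum_{k=1}^{N} \mathbf{q}_k \cdot \mathbf{F}_k \right\rangle = 3PV,$$

which immediately implies the ideal gas law for N particles:

$$PV = N k_B T = nRT,$$

where n = N/NA is the number of moles of gas and R = NAkB is the gas constant.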

Canonical Ensemble

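Assuming the particles making up the gas do not interact with each other, are confined to a fixed volume and are in thermal equilibrium, one can use the tools of the canonical ensemble to derive the ideal gas law. It is also possible to repeat similar derivations using the microcanonical and grand canonical ensembles. This derivation generally follows the steps given by Kardar in "Statistical Physics of Particles."[11]

The canonical partition function of a system of $N$ classical, identical particles with mass $m$ in a three-dimensional box is given by

$$Z = \frac{1}{h^{3N} N!} \int e^{-\frac{H(\mathbf{p}, \mathbf{q})}{kT}} \prod_{i=1}^{N} d^3p_i\, d^3q_i,$$

where $H$ is the Hamiltonian, $h$ is Planck's constant, and $1/(h^{3N} N!)$ serves as a normalization factor.

Since an ideal gas is assumed to have no interactions between particles, the potential is just the hard box potential, so that inside the box

$$H = \sum_{i=1}^{N} \frac{p_i^2}{2m}.$$

Thus the integral over all of phase space in the partition function reduces to

$$Z = \frac{V^N}{h^{3N} N!} \prod_{i=1}^{N} \int e^{-\frac{1}{kT} \frac{p_i^2}{2m}}\, d^3p_i = \frac{V^N}{h^{3N} N!} \left[ \int e^{-\frac{1}{kT} \frac{p_1^2}{2m}}\, d^3p_1 \right]^N,$$

where $V$ is the volume of the box.

The last equality comes from the fact that the particles are identical. Since the integral in the last equality is Gaussian, it is relatively easy to compute.

Defining $\lambda = h / \sqrt{2\pi m k T}$ to be the characteristic length for a particle of mass $m$ at temperature $T$, the partition function reduces further to

$$Z = \frac{V^N}{h^{3N} N!} \left[ \left( 2\pi m k T \right)^{\frac{3}{2}} \right]^N = \frac{1}{N!} \left( \frac{V}{\lambda^3} \right)^N.$$

Now that the canonical partition function of an ideal gas has been calculated, one can solve for the free energy (specifically the Helmholtz free energy) and then use thermodynamics to obtain the ideal gas law.

The free energy is given by

$$F = -kT \ln Z = -NkT \ln\left[ \frac{V}{\lambda^3} \right] + kT \ln\left[ N! \right] \approx -NkT \ln\left[ \frac{V}{\lambda^3} \right] + NkT \ln\left[ N \right] - NkT,$$

where the approximation comes from using Stirling's approximation for factorials. Substituting in for $\lambda$ and using simple logarithm rules yields the result

$$F = -NkT \left( \ln\left[ \frac{V}{N} \right] + \frac{3}{2} \ln\left[ \frac{2\pi m k T}{h^2} \right] + 1 \right).$$

Now that the free energy has been calculated, one can use the main thermodynamic identity and take partial derivatives to obtain the ideal gas law.

Starting with the identity for pressure,

$$P = -\left( \frac{\partial F}{\partial V} \right)_{N,T} = \frac{NkT}{V},$$

which is the ideal gas law.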
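The final step can be checked numerically by differentiating the free energy with a central finite difference instead of analytically. This is a minimal sketch: the free energy is the Stirling-approximated expression $F = -NkT\left(\ln[V/N] + \tfrac{3}{2}\ln[2\pi mkT/h^2] + 1\right)$, the particle mass (roughly that of an argon atom) and the thermodynamic state are illustrative assumptions, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23         # Boltzmann constant in J/K
H_PLANCK = 6.62607015e-34  # Planck constant in J s

def helmholtz_free_energy(n, volume, temperature, mass):
    """Ideal-gas F(N, V, T) after Stirling's approximation."""
    thermal = 2.0 * math.pi * mass * K_B * temperature / H_PLANCK**2
    return -n * K_B * temperature * (
        math.log(volume / n) + 1.5 * math.log(thermal) + 1.0
    )

def pressure(n, volume, temperature, mass, rel_step=1e-6):
    """P = -(dF/dV) at fixed N and T, via a central finite difference."""
    dv = volume * rel_step
    f_plus = helmholtz_free_energy(n, volume + dv, temperature, mass)
    f_minus = helmholtz_free_energy(n, volume - dv, temperature, mass)
    return -(f_plus - f_minus) / (2.0 * dv)

# Illustrative state: about one mole of argon-like atoms in 22.4 litres.
N = 6.02214076e23
V = 0.0224       # m^3
T = 273.15       # K
MASS = 6.63e-26  # kg, assumed particle mass

p_numeric = pressure(N, V, T, MASS)
p_ideal = N * K_B * T / V
print(p_numeric, p_ideal)
```

The two pressures agree to within finite-difference error. The mass and Planck's constant drop out of the derivative, as the analytic result implies.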

See also

- Van der Waals equation – Gas equation of state which accounts for non-ideal gas behavior

- Boltzmann constant – Physical constant relating particle kinetic energy with temperature

- Configuration integral – Function in thermodynamics and statistical physics

- Dynamic pressure – Kinetic energy per unit volume of a fluid

- Internal energy – Energy contained within a system

References

1. Gibbs, Josiah Willard (1902). Elementary Principles in Statistical Mechanics. New York: Charles Scribner's Sons.

2. Schroeder, Daniel. An Introduction to Thermal Physics. Addison Wesley. ISBN 0201380277.

3. Kardar, Mehran (2007). Statistical Physics of Particles. New York, NY: Cambridge University Press. ISBN 9780521873420. OCLC 148639922.

4. Landau, Lev Davidovich; Lifshitz, Evgeny Mikhailovich (1980) [1976]. Statistical Physics. Course of Theoretical Physics. Vol. 5 (3 ed.). Oxford: Pergamon Press. ISBN 0-7506-3372-7. Translated by J.B. Sykes and M.J. Kearsley. See section 28.

5. Atkins, P. W. (2010). Quanta. New York: W. H. Freeman and Company.

6. http://crystal.med.upenn.edu/sharp-lab-pdfs/2015SharpMatschinsky_Boltz1877_Entropy17.pdf

7. McQuarrie, A. (2000). Statistical Mechanics. California: University Science Books.

8. NIST Atomic Spectra Database Levels Form at nist.gov

9. Atkins, P. W.; de Paula, J. (2009). Physical Chemistry, 9th edition. Oxford, UK: Oxford University Press.

10. Skoog, D. A.; Holler, F. J.; Crouch, S. R. (2006). Principles of Instrumental Analysis. Boston, MA: Brooks/Cole.

11. Kardar, Mehran (2007). Statistical Physics of Particles. New York, NY: Cambridge University Press. ISBN 9780521873420. OCLC 148639922.

Further reading

- Davis; Masten (2002). Principles of Environmental Engineering and Science. New York: McGraw-Hill. ISBN 0-07-235053-9.

External links

- "Website giving credit to Benoît Paul Émile Clapeyron, (1799–1864) in 1834". Archived from the original on July 5, 2007.

- Configuration integral (statistical mechanics), which provides an alternative statistical mechanics derivation of the ideal gas law, using the relationship between the Helmholtz free energy and the partition function but without using the equipartition theorem. Vu-Quoc, L., Configuration integral (statistical mechanics), 2008. The original wiki site is down; an archived copy dated April 28, 2012 is available in the web archive.

- pv=nrt calculator ideal gas law calculator - Engineering Units online calculator

- Gas equations in detail



![{\displaystyle ={\frac {V^{N}}{h^{3N}N!}}\prod _{i=1}^{N}\int e^{{\big (}-{\frac {1}{kT}}{\frac {p_{i}^{2}}{2m}}{\big )}}d^{3}p_{i}={\frac {V^{N}}{h^{3N}N!}}{\bigg [}\int e^{{\big (}-{\frac {1}{kT}}{\frac {p_{1}^{2}}{2m}}{\big )}}d^{3}p_{1}{\bigg ]}^{N}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2fe79843fd8aa4d9e8f51721aa91a521eaab7f35)

![{\displaystyle Z={\frac {V^{N}}{h^{3N}N!}}{\bigg [}{\big (}2\pi mkT{\big )}^{\frac {3}{2}}{\bigg ]}^{N}={\frac {1}{N!}}{\bigg (}{\frac {V}{\lambda ^{3}}}{\bigg )}^{N}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0c0b19186d6308ccdbba45257c40b266e8ca0560)

![{\displaystyle =-NkT\log {\bigg [}{\frac {V}{\lambda ^{3}}}{\bigg ]}+kT\log {{\big [}N!{\big ]}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7d0b751a0692f9e83516581fe5bfd3a0ac7fbc4d)

![{\displaystyle \approx -NkT\ln {\bigg [}{\frac {V}{\lambda ^{3}}}{\bigg ]}+NkT\ln {{\big [}N{\big ]}}-NkT}](https://wikimedia.org/api/rest_v1/media/math/render/svg/66a1eb3199ad1c57cc74207c75583d89411b689b)

![{\displaystyle F=-NkT{\bigg (}\ln {{\bigg [}{\frac {V}{N}}{\bigg ]}}+{\frac {3}{2}}\ln {{\bigg [}{\frac {2\pi mkT}{h^{2}}}{\bigg ]}}+1{\bigg )}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/dd66c5421ee4411f184277779e501182a7ca30f1)