Talk:Newton's method/Archive 1

| This is an archive of past discussions about Newton's method. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page. |

| Archive 1 |

Generalisations

shouldn't this:

- One may use Newton's method also to solve systems of n (non-linear) equations...

read like this:

- One may use Newton's method also to solve systems of k (non-linear) equations...

I don't understand this section well enough to be confident in this change (unsigned post by anon)

- You are right. I just fixed that. Oleg Alexandrov 22:49, 3 August 2005 (UTC)

insufficient hypotheses?

The article currently states:

- One can prove that, if f ' is continuous, and if the unknown zero x is isolated, then there exists a neighborhood of x such that for all starting values x0 in that neighborhood, the sequence {xn} will converge towards x.

This doesn't seem to be strong enough. Consider the following example: Let g(x) = x^2 * sin^2( 1/x ). Then g(x) is a continuous non-negative function with infinitely many zeros in each neighborhood of 0, namely at each point x = 1/(n pi) for n an integer. Take f(x) to be the integral from 0 to x of g(x). Then f is differentiable, f ' = g is continuous, and 0 is an isolated zero of f. (This last being true since for any a>0 there are values 0<x<a for which g(x)>0, and for all 0<x<a g(x) >= 0, so f(a) = integral of g(x) from x=0 to a is >0. Similary for a<0.) Then for any starting point at one of these zeroes 1/(n pi) of f ', the Newton method immediately fails with a division by zero. So there is no neighborhood of zero in which all starting values give a sequence converging towards 0.

I'm not sure right now what the right conditions should be. Hmmm... --anon

- Good point indeed. And that function can be made as smooth as one would like, even infinitely smooth, if one multiplies sin^2( 1/x ) by exp(-1/x^2) instead of x^2. So, asking for more smoothness will not solve things. So I guess one must ask that either f'(x0)≠0, or if it does equal zero, then x0 be a an isolated root of f'(x) also. That should be sufficient I guess. Oleg Alexandrov (talk) 08:01, 11 January 2006 (UTC)

- I have my doubts about Oleg's guess. Anyway, I removed it from the article for the moment. -- Jitse Niesen (talk) 14:00, 11 January 2006 (UTC)

- Come on, it's a guess, man. You should not say you have doubts (that's the same as thing the algorithm terminates), you should but your better guess in, and eventually we will converge towards the right thing! :) Do you at least agree that smoothness won't help things. Oleg Alexandrov (talk) 17:03, 11 January 2006 (UTC)

- Actually, I think smoothness would help, for sufficiently large values of smoothness ... If you want my guess: Newton's method converges locally if f is analytic. Proved by Stephen Smale, perhaps? Anyway, I found one theorem which I put in. -- Jitse Niesen (talk) 18:30, 11 January 2006 (UTC)

The well know hypothesis is that the derivative of f must be nonzero on a neighborhood of the isolated solution, or in the vector case that the Jacobian matrix of f is nonsingular on a neighborhood of the isolated solution. The example above clearly violates this. The same hypothesis is needed in the inverse function theorem to prove that a solution is isolated in the first place. With continuous differentiability, it is enough that the Jacobian is nonsingular at the solution itself, which then implies that it much be nonsingular on a sufficiently small neighborhood (see Theorem 9.2, Munkres, Analysis on Manifolds, Westview Press, 1991). I have not corrected the article b/c I don't know how. Hope this helps. — Preceding unsigned comment added by 198.21.229.183 (talk • contribs) 14:57, 16 September 2014

numerical methods

i am currently in a numerical methods class, i looked through this article and it seems to be missing this aspect of appliction, while the current equation form is correct, to fix up this page to make it more robust, i am going to add a section on using newtons method with functions with many roots to find the roots. im not sure what its called right now, but ill be back. —The preceding unsigned comment was added by Mikefadock (talk • contribs) . (Dude)

- If you want to find many roots, you need first to locate where the roots are using the bisection method, or something. I think that kind of material would be more appropriate at root-finding algorithms or something rather than Newton's method. Oleg Alexandrov (talk) 02:35, 3 March 2006 (UTC)

The method i am referring to is the iterave method for pinning down roots which are far from zero, starting from newtons method, we have x_one=x_not-f(xnot)/f'(xnot) replacing f(xnot) with g(alpha)-alpha and f'(fnot)=g'(alpha)-1 whereby alpha is the root to be found. I feel this should be included, or at minimum a notation of general situations where newtons method fails and links to the alternatives. From the perspective of a student, i spent 1/2 hour just trying to figure out why newtons method would not work on sin(x)^2, having a quick note stating that "some functions will not work with newtons method, sin(x)^2 other multipul root questions ect. ... please see this section for explanation" . Dude 02:48, 5 March 2006 (UTC)

Inclusion of the conditions for convergence would also be cool.(M=max_(x is a member of r)|f''(x)| / 2*min_(x is a member of r)|f'(x)| , M|alpha-xnot|<1 for convergence). A new section could be added. I really don't know the limit of how large a page can get before it becomes unmanagable. (i'll add it btw, but i was thinking that doing a quick check for thoughts on the matter would be best.) Dude 02:59, 5 March 2006 (UTC)

- I don't understand your alternative method at all (what is g?). Does it have a name? Alternative methods are listed in root-finding algorithm.

- I don't think the failure of Newton's method has anything to do with multiple roots. The article does mention that Newton's method may fail ("Newton's method will usually converge provided the initial guess is close enough to the unknown zero.", and also in the section "Practical considerations"). However, the presentation can certainly be improved.

- The convergence result you mentioned would indeed be nice to add (of course, a reference should also be included), for instance to the "Analysis" section. I guess the article can grow twice as big before we should consider splitting it. -- Jitse Niesen (talk) 05:46, 5 March 2006 (UTC)

- I'll throw something together in tex and put it up on my user page, you can look at it there, it will explain the substitution better than i have explained it here. I'll drop in the convergence stuff tommorow or later today.

- Convergence fails spectactularily for newtons method under very specific conditions, im plowing through my textbook now to find them....(the function must be thrice differentiable, continuous in f,f',f ' ' , f' cannot equal zero at the root. There exists a region around the root for which convergence is quadratic(accuracy doubles with each new iteration), a larger region for linear convergence, and another in which no convergence occurs.) I feel the article should include the methods for finding the regions in which convergence occurs. Not just simply stating "it occurs close enough to the zero". Give me a little time to patch this together and it should come out a much better resource. Dude 18:42, 5 March 2006 (UTC) —Preceding unsigned (sic) comment added by Mikefadock (talk • contribs) 18:42, 5 March 2006

- Just a question, is that your own research, or is it published material? Oleg Alexandrov (talk) 01:24, 6 March 2006 (UTC)

- An Introduction to Numerical Methods nad Analysis, James F Epperson, A text for my numerical methods class. It covers root finding algorithms, theory, error analysis ... ect. I cannot copy out of it(copyright and everything) so I have to first understand the material, and then present it in an understandable way. To answer your question, published. Dude 01:09, 8 March 2006 (UTC) —Preceding unsigned (sic) comment added by Mikefadock (talk • contribs) 01:09, 8 March 2006

Brief Derivation of the Formula

Instead of just presenting the formula

x_n+1 = x_n - f(x_n)/f'(x_n)

I think that a very brief derivation of this would be enlightening. I for one, understand a formula much better when I can see where it came from

slope of tangent = f(x_n+1)-f(x_n)/(x_n+1 - x_n) = f'(x_n) {from defn of derivative)

But f(x_n+1) = 0 so by simple algebra we can derive

x_n+1 = x_n - f(x)/f'(x)

--unisigned

- There is already a picture illustrating that. I would support adding the derivation as long as it is well written and indeed rather short. Oleg Alexandrov (talk) 02:44, 14 October 2006 (UTC)

eh, I just went ahead and added the derivation because I had to think about it for a couple seconds. Hope nobody minds. Earlh 01:36, 13 March 2007 (UTC)

Counterexamples

In the article, the first counterexample has f(0) = 0, and then says that f'(0) = 1. If f'(x) = d(0)/dx = 0 at x = 0, then f'(0) = 0, not 1. — metaprimer (talk) 13:06, 27 October 2007 (UTC)

- You cannot say that f'(x) = d(0)/dx = 0 at x = 0. I know it looks convincing, but it's still not correct. For instance, consider the function

- This is just a complicated way to write , so . However, your reasoning would show that .

- The function f in the counterexample is more complicated, so it's not so easy to compute . You need to use the definition of the derivative as a limit. -- Jitse Niesen (talk) 17:39, 27 October 2007 (UTC)

- Ah, I see now. Perhaps this should be briefly mentioned - that the limit of f(x) as x -> c is seperate from f(c) and therefore f'(0) is concerned with f(x) at x != 0. — metaprimer (talk) 19:35, 27 October 2007 (UTC)

Complex functions

In this article, Newton's method is only defined for functions R → R. If there is an extension of the method to complex analysis, a definition might help. Illegal604 (talk) 11:42, 9 October 2008 (UTC)

- Here is an example of solving system of 2 complex equations with Newton method in Maxima. --Adam majewski (talk) 16:35, 13 October 2008 (UTC)

Division by zero = infinity?

The second equation in the "Counter examples" section needs a little adjustment; last time I checked 0 - 1/0 is not infinity. —Preceding unsigned comment added by 71.141.120.47 (talk • contribs)

- That was added by an incompetent editor whose edits I have now reverted. JRSpriggs (talk) 16:46, 30 October 2008 (UTC)

Discontinuous derivative example

It should be noted that in the analysis given does not preclude the possibility that some iterate may hit the root "by chance". So it isn't possible to conclude that the iterates always diverge, but only that there exists some starting point in any neighborhood of the root such that the iterates diverge. I rephrased the sentence which seemed to imply the former.--RDBury (talk) 20:58, 25 April 2009 (UTC)

Removed image

I removed the image under Generalizations since it seemed only tangentially related to the topic. The image showed the Mandlebrot set in schematic form.--RDBury (talk) 21:47, 25 April 2009 (UTC)

Counterexamples removed by JRSpriggs

I'd like to discuss the counterexamples I added ([1] and [2]) and that were briskly removed by user JRSpriggs as "incompetent and unnecessary".

- I added a short comment at the beginning of the section, stating that the method does not guarantee convergence. It seems to me an important point for anyone wishing to learn about the algorithm.

- For starters, I corrected the misleading statement of points being "too far away" into simply "bad", since the stability of the algorithm it is not (always) a question of mere distance from the solution but of the shape of the function and of the starting point. It is fairly easy to construct functions where only starting points distant enough can converge to the solution.

- I added a class of simple functions that can never converge no matter the starting point, i.e. . Either prove I am wrong, which does indeed happen fairly often, or explain why this should not be relevant.

- I divided the counterexamples by issues, as the list was starting to grow.

- In the "Discontinuous derivative" section, I corrected a statement that held that f'(0) was 1, when in fact it was undefined. Looking at the cos(2/x) term should be enough to convince oneself of that. I also removed a related claim that the function was differentiable everywhere, even though that kind of functions is the poster child of continuous functions that have undefined derivatives.

- Some innocent typographical corrections, such as using \left and \right in the LaTeX code, were also caught in the revert.

I would like to know exactly in which way these edits of mine are "incompetent and unnecessary". 193.175.53.21 (talk) 10:50, 12 November 2008 (UTC)

- The derivative is one at zero. The definition of derivative is

- which in our case means

-

- remembering that the sine is bounded by -1 and +1 regardless of its argument.

- Most of the rest of what you added was duplicative of what was already there.

- JRSpriggs (talk) 21:06, 11 December 2008 (UTC)

- The definition of converge implies that if Newton's method does not converge on a neighborhood of a solution, it does not converge to it from anywhere. There may be isolated points (or, in strictly artificial cases, line segments) on which the method hits the solution exactly, but if it misses an unstable solution ever so slightly, the method will diverge. Septentrionalis PMAnderson 14:00, 23 June 2009 (UTC)

Analysis

This section needs to be either removed or redone. It contains a lot of terms like "vanishes" and such. —Preceding unsigned comment added by 67.170.61.196 (talk) 08:57, 10 December 2008 (UTC)

- What is wrong with that? "Vanishes" has a recognised meaning, and is more concise than some alternatives. JamesBWatson (talk) 14:12, 23 June 2009 (UTC)

Values of X0?

If you have absolutely no idea about a potential value of X0 whatsoever, does it make any significant difference? I tried the example equation f(x) = cos(x) − x3 with the X0 value of 100, and it still came up with the same answer, it just took more steps. So, are there cases where the deviation of the X0 from an actual root value will make a significant difference to calculating the root approximation?

Also, can this method be used to find multiple roots of a continuous function?Nonagonal Spider 05:54, 27 March 2007 (UTC)

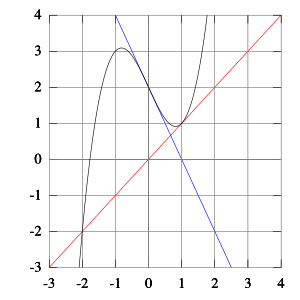

- Consider f(x) = x/(x2 + 1). If you are too far away from zero, the method will just take you further away. JRSpriggs 10:17, 27 March 2007 (UTC)

- Newton-Raphson makes no guarantees whatsoever. The more your function looks like a linear one, the more likely you are to get a solution and good progress toward it, but that's a fairly generic criterion. In the case of your function, cos(x)-x³, the plot (try e.g. plot2d( cos(x)-x^3, [x,0,100]); in Maxima) does not have many surprises, so starting from x0=100 is ok; in fact I think you could start from 109, or any other positive value, and have just a few iterations more. Other functions, like the one indicated by JRSpriggs, have a "region of attraction" to the solution; others may have even odder behaviour yet (e.g. chaos, no converging starting points at all, whatever). In short, you can say little about convergence unless you know at least something about the function.

- As for the multiple roots, you could start the method from different points, but what you are really doing is trial and error. I do not think there is any numerical method that can tell you how many solutions an unknown f(x) has, so this is information you should have to start with; also, the algorithm could converge to some solutions easily, to others not so easily, and to some not at all. 193.175.53.21 (talk) 15:15, 12 November 2008 (UTC)

- See Sturm's theorem, which will give the number of solutions of a polynomial, in the reals or in any range; the roots of a good enough polynomial approximation (if one exists) should be comparable to the roots of the original function. Septentrionalis PMAnderson 16:22, 24 June 2009 (UTC)

General cleanup.

I'm working on some general cleanup of this article. The first paragraph of the article gets a little sidetracked.

The following is qualitative information. Though it is important, I believe it should be included later in the page.

Newton's method can often converge remarkably quickly, especially if the iteration begins "sufficiently near" the desired root. Just how near "sufficiently near" needs to be, and just how quickly "remarkably quickly" can be, depends on the problem (detailed below). Unfortunately, when iteration begins far from the desired root, Newton's method can fail to converge with little warning; thus, implementations often include a routine that attempts to detect and overcome possible convergence failures.

The first paragraph should be concise, state what the method does, and classify the method. Failure analysis and practical implementation should be addressed later in the page.

Kyle.drerup (talk) 04:49, 12 November 2010 (UTC)

Newtons Method JavaScript Implementation

I have implemented Newtons Method for a homework at school, and if you guys find it relevant, add it to the page. http://amasad-math.appspot.com/ (SOURCE CODE INCLUDED)

What it actually does is, takes K variables and K functions, then uses WolframAlpha API to evaluate indefinite differentials (tried to do it by means of a parse-tree on JS but couldn't perfect it, also found in the source), to construct the Jacobean Matrix, it does that asynchronously and without blocking the application (Maybe a good example of event-based programming), after the whole matrix is constructed the user is asked to input initial guesses. Afterwards iteration starts, and each one (the new vector 'X') is shown on the screen , the program will either stop after 50 iterations, or when the Euclidean norm(Y) < 10e-7: Y = update as in Y = INVERSE(JACOBIAN(x)) * -F(x) . Any bugs or notes is appreciated. — Preceding unsigned comment added by Amjad.masad (talk • contribs) 00:09, 27 January 2011 (UTC)

pitfalls of the Newton-Raphson method

pitfalls of the Newton-Raphson method:

An infection point(f(x)=0) at the vicinity of a root causes divergence. A local maximum or minumum causes oscillations. It may jump from one location to another. A zero slope causes division by zero.

- Right, the method is not perfect. But as long as you start close enough to the root you will always be fine (of course, if the slope at the root is zero, the convergence will be slower). Oleg Alexandrov 20:04, 14 Mar 2005 (UTC)\

- Not true. For example, try solving , starting from any non-zero real number: you will find the method diverges. JamesBWatson (talk) 10:51, 23 June 2009 (UTC)

- The second derivative of is not finite at the root, so the method is expected to fail, as is already described in the Analysis section. Jasondet (talk) 05:39, 6 September 2011 (UTC)

Clarify Whose Root We Are Finding

Since the first sentence in the section "The Newton-Raphson method in one variable:" mentions both the function f(x) and it's derivative f'(x), it would be best if that sentence stated that the purpose of the method is to find a roof of f(x) (rather than f'(x)).

Tashiro (talk) 04:18, 18 April 2011 (UTC)

Basins of attraction for real functions

While the section on complex functions has some information on basins of attraction, the sections on real functions do not. When there are multiple real roots, I recall that the basins of attraction can have an interesting structure, but I can't recall details or sources. Does someone know about this? Duoduoduo (talk) 20:46, 8 December 2011 (UTC)

- Found and inserted. Duoduoduo (talk) 00:18, 9 December 2011 (UTC)

Conditions for convergence

The section on bad starting points says

- In some cases the conditions on the function that are necessary for convergence are satisfied,....

But as far as I can see, the article only gives conditions for quadratic convergence, not for convergence itself. Can someone rectify this? Duoduoduo (talk) 21:14, 8 December 2011 (UTC)

- It should probably say "necessary for quadratic convergence are satisfied". Newton's method can behave chaotically and region of convergence is usually fractal-like, see Newton fractal. So I'm guessing there really isn't any test for convergence outside the region of quadratic convergence that works any better than just iterating the map to see if it gets close to a solution.--RDBury (talk) 23:09, 8 December 2011 (UTC)

- But the article mentions linear convergence in the case of multiple roots. So there must be conditions for linear or better convergence. Duoduoduo (talk) 23:53, 8 December 2011 (UTC)

- Multiple roots is a special case. You could probably generalize the theorem on quadratic convergence to handle multiple roots or roots that are close together fairly easily and I imagine it's been published at some point. As a practical issue though it probably wouldn't be very useful since you would need to know ahead of time that there are roots that are close together. So I still think that quadratic convergence is what was meant in the the passage.

- But the article mentions linear convergence in the case of multiple roots. So there must be conditions for linear or better convergence. Duoduoduo (talk) 23:53, 8 December 2011 (UTC)

- As an example, look at the z^3-2z+2 example in the text and try to find the set of all z where the iteration alternates between 0 and 1 in the limit (and therefore diverges) rather than converging to one of the roots. There is a neighborhood of 0 and smaller neighborhoods of +1 and two other points, then there are still smaller regions which map to these, and so on. The end result is a fractal like pattern whose boundary is the Julia set for the iteration. As far as I know the most practical way to test for membership in the set to iterate the function until you reach one of the basins of attraction, but the number of iterations needed can be arbitrarily high.--RDBury (talk) 14:57, 9 December 2011 (UTC)

Minus or plus in the function of the diagram?

I changed the minus sign to a plus sign in the function in the caption of this diagram, giving the edit summary

- correction in caption: blowing up the image shows that –1 (and not 1) is a root; so the function is x ^ 5 + 1

My edit has been reverted with this non-explanatory edit summary:

- Minus was correct for this diagram

Click on the diagram to blow it up. Since "darker means more iterations to converge", you can see that –1 is indeed a root that is converged to. Indeed you can see that the five points that are converged to are the five fifth roots of –1, which occur at the lightest points at the outer edges of the five central "balloons". The function has these roots; the function does not. So why would the original minus sign in the function be correct? Duoduoduo (talk) 22:19, 9 December 2011 (UTC)

- According to the text of the program which produced the diagram (which text is in the file containing the image), "#define POLY(z) z*z*z*z*z + (-1)". This means that the function is z5-1.

- Since there are no multiple roots to either of the two function which we are discussing, I would expect that the number of iterations to convergence would be small near one of the roots and larger elsewhere. That is consistent with the image which shows the light spots in the basins at the primitive fifth roots of +1. Notice that z5-1=0 is the same as z5=+1.

- One might expect little fragments of basins clustered around a root of the derivative of the function, but not around the roots of the function itself. This is contrary to what you have said.

- Here I am assuming that +1 is directly to the right of 0 which is the center of the picture where the five spokes meet. JRSpriggs (talk) 00:37, 10 December 2011 (UTC)

- I did see that the file says "#define POLY(z) z*z*z*z*z + (-1)". But maybe there is something in the program which causes the displayed picture to be a mirror image, around the vertical axis, of what is intended.

- You say "One might expect little fragments of basins clustered around a root of the derivative of the function, but not around the roots of the function itself. This is contrary to what you have said." I'm not sure what you mean here -- the derivative of either function is , all of whose roots are zero, so this is irrelevant to our discussion.

- The question remains: why are there very light colored spot at the fifth roots of –1? If the image is correct and is for , I don't see any reason why these would be there. Duoduoduo (talk) 17:01, 10 December 2011 (UTC)

- Not sure what you're referring to since it appears to me that the light spots are at the fifth roots of 1: 1 itself (to the right of the origin) and four more at angles 2nπ/5.--RDBury (talk) 18:40, 10 December 2011 (UTC)

- Left click on the image to enlarge it. (Or enlarge it more by left clicking again.) There are five "balloons" sticking out from the origin, in the directions of the five fifth roots of -1. Each balloon has three colors -- look in the center color of any one of the balloons. If you start near the origin, that color is dark, but as you go away from the origin within the center color of the balloon, it gets lighter, lighter, lightest. Duoduoduo (talk) 19:53, 10 December 2011 (UTC)

- Those are points that get mapped to a fifth roo1 of 1 on the first iterations. There are three points that map to 1 on the first iterations, the solutions of 4x3+3x2+2x+1=0, one of these is negative which would account for the yellow bright spot to the left of the origin. The biggest yellow bright spot is to the right of the origin and its center is +1.--RDBury (talk) 21:10, 10 December 2011 (UTC)

Thanks! That explains it. (For the benefit of other (current and future) readers of this talk page: RDBury obtained his equation 4x3+3x2+2x+1=0 by taking Newton's formula and setting the next iterate equal to 1, giving a fifth degree equation, two of whose roots are the duplicate root 1, and factoring out the duplicate roots.) Duoduoduo (talk) 22:59, 10 December 2011 (UTC)

Initial condition too far from root

The article says in a couple of places (Newton's method#Bad starting points and Newton's method#Poor initial estimate) that convergence may not occur if the initial estimate is far from any root. But isn't it true that for a real function, almost all initial conditions lead to convergence if a real root exists? I seem to recall this, but I don't have a source. Duoduoduo (talk) 21:07, 8 December 2011 (UTC)

Well, not for all real functions. E.g., if f(x) goes asymptotically to zero as x goes to infinity, for sufficiently large initial point the process diverges to infinity. But I still have a feeling that almost all initial points converge to a root for any polynomial function that has a real root. Anyone know? Duoduoduo (talk) 15:56, 9 December 2011 (UTC)

- No, you can also get stable cycles, that is, start points close to the cycle points will also converge to the cycles. Isn't there an example of degree 3 in the counter examples? But certainly, points from far away move into the range of the roots, even in the complex plane.--LutzL (talk) —Preceding undated comment added 17:32, 9 December 2011 (UTC).

- I'm skeptical that the cycles are ever stable for a polynomial function. For example, the article shows this example of a 2-cycle for a cubic. By blowing it up and tracing a couple of iterations from very near one of the 2-cycle points, one can see that the cycle is repelling.

- Is there a good source that talks about these issues? Duoduoduo (talk) 20:44, 9 December 2011 (UTC)

- Try doing the calculations again. Actually 0 us a critical point for the iteration so points near it converge quadratically to the cycle (in some easily definable sense). For example with the starting point .1 I get iterates 0.1, 1.014213198, 0.079655766, 1.00909874, 0.052226527, 1.003965185, 0.023329436, 1.000804353, 0.004806795, 1.000034548, 0.000207252, 1.000000064, 3.86529E-07, 1, ... . For a random cubic (say with coefficients selected randomly between -10 and 10) there is a small but positive probability there are stable 2 cycles.--RDBury (talk) 20:43, 10 December 2011 (UTC)

- You're right -- that's interesting. If you start from 1.1, it diverges. So the basin of attraction around 1 is smaller than that around zero.

- Stability can be proved by denoting the Newton iterative function as g, and noting that which is less than one in magnitude. Duoduoduo (talk) 16:27, 12 December 2011 (UTC)

Newton's method an example of recursion theory, history

I'm hunting about for references re the history of the notion of recursion. Newton's method is a gorgeous example of recursion, i.e. "successive approximation". The Newton-Raphson method is used to solve (resolve) the convergence of SPICE simulations (didn't see that in the article; if anyone wants a reference lemme know, I've got an ancient one). About as far as I've gotten in my hunt for sources is in Dedekind 1887 "The Nature and Meaning of Numbers" I see " . . . that therefore the definition by induction (or recursion) is determinate and consistent (126)" (p. 33 in facsimile of the Beman translation, Open Court Press 1901). In this article, I didn't see a definitive "link" (i.e. an intellectual one) to the notion of recursion, which is the basis (writ ironic) of the whole process. Bill Wvbailey (talk) 23:31, 24 February 2012 (UTC)

The order of convergence of Newton Method

Here we give another method to explain the order of convergence of Newton method.

Theorem: Let be a solution of the equation . Suppose that, : and is continuous on the open interval I containing :. Then for belongs to interval , the sequence defined by : converges at least quadratically to .

By using this theorem above, We suppose that is continuous on an open interval containing , and , is not 0. Since , then we know that , and . The theorem implies that Newton's method converges at least quadratically to .

Pa648412 (talk) 05:46, 17 September 2012 (UTC)

- I fixed some formatting. Where on the page should it go?

- Do you have a source or proof for this theorem? It is not that useful if you do not.

- Maybe the theorem should go on the Fixed-point iteration page.

- Mjmohio (talk) 19:09, 18 September 2012 (UTC)

Convergence rate proof

User: Elvek, Date: September 24, 2010

Hello, I am working on an assignment in a numerical methods class, which is to make a contribution to a numerical methods related page. My proposal for an addition to the wikipedia page on Newton's method is about the convergence rate of the method. A short explanation and the rational for the addition of this material are as follow. (a) Proposed addition to the wikipage on Newton's method By reading the page on the Newton's method, one can notice that there is no proof of the convergence rate. Although there are many ways in which this method can fail, the main advantage of this method over many other methods is that it's convergence rate is quadratic, provided that certain conditions are satisfied. It would be convenient to have a proof directly displayed or attached as a file via a link. This would also help students dealing with this or similar subjects to see the general approach how a convergence rate of a numerical method can be analyzed, such that similar analysis can be used to analyze other numerical methods.

The proposed convergence rate proof is shown next, which can be generalized for multivariable cases.

(b) Proof of quadratic convergence for the Newton's iterative method The function can be represented by a Taylor series expansion about a point that is considered to be close to a root of f(x). Let us denote this root as . The Taylor series expansion of f(x) about an is:

| (1) |

For ; , since is the root. Then, (1) becomes:

| (2) |

Using the Lagrange form of the Taylor series expansion remainder

the equation (2) becomes

It should be noted that the point stands for the initial guess, but the same form of expansion is valid for Taylor series expansion about an arbitrary point obtained after n iterations

| (3) |

By dividing equation (3) by and rearranging the expression, following can be obtained:

| (4) |

Now, the goal is to bring in the new iteration n+1 and relate it to the old one n. At this point, the Newton's formula can be used to transform the expression (4). The Newton's formula is given by

| (5) |

The intention is to eliminate the (generaly unknown) root from (4) via using formula (5) and get the error terms involved in the relation. Equation (4) can be adjusted for expression (5):

That is,

| (6) |

After taking absolute value (scalar function of a scalar variable is considered here) of both sides of (6), following is obtained:

| (7) |

Equation (7) shows that the convergence rate is quadratic if "no problem" occurs with the first derivative (e.g. the casses when the first derivative at the root is zero, such as and the initial guess is not "too far away" from the root, such that Finally, (7) can be expressed in the following way:

where M would be the supremum on the interval between and of the variable coefficient of

This is the proposed material and other users' suggestions to improve it would be appreciated. Thanks very much (by Elvek, September 26, 2010).

- Be sure to mention the hypothesis (needed for the Lagrange form of the remainder) that the function has a second derivative in an interval surrounding the root and its approximations. Also say explicitly that you are setting m=1. The second derivative is bounded in the interval. The first derivative is bounded away from zero in the interval. JRSpriggs (talk) 19:01, 24 September 2010 (UTC)

- Also, to stay in the interval. JRSpriggs (talk) 11:29, 27 September 2010 (UTC)

Elvek, on October 3, 2010: Thanks very much JRSpriggs on your suggestions, I added the material and made corrections accordingly.

example

I 'd like to share my solution for the Numerical Analysis class homewrok questions. so people can see how to apply above method to find the rate of convergence of the Newton's method.

Let . Then Taylor expansion of at is

Now we evaluate at such that .

.

Thus,

Since , it is equivalent to

That is,

divide by on both sides, we have

When n goes to infinity, goes to and goes to , where .

Hence, we can set the limit to find the rate of convergence as below;

,

where

Therefore, we conclude that converges to of order of 2(quadratic convergence) with the rate of convergence 0.288675. Jl214707 (talk) 06:07, 17 September 2012 (UTC)Jl214707

- Where does this go in the article?

- What are you trying to accomplish with this example?

- It seems kind of long without accomplishing much more than the general proof.

- Mjmohio (talk) 19:45, 18 September 2012 (UTC)

Stopping rule of iteration in Newton's method

When applying Newton's method in computer programs, there are several criteria can be used to stop iteration: setting up a fixed number of iterations, comparing the residual with a desired error tolerance, checking the iteration step size or the step size relative to the root being found. For these different criteria, one concern is that whether a scaling of the function affects searching roots. For example, the roots of and are the same. But a program may stop differently and thus find different roots. This is an issue of scale invariance. The criteria such as step size doen't stop scale properly; whereas a fixed number of iterations and the interval length relative to the root being found are invariant. Cite error: There are <ref> tags on this page without content in them (see the help page).Lxm1117 (talk) 16:03, 13 September 2012 (UTC)

- I don't understand the last sentence; it seems to parse as "X does, whereas Y and Z do." Is there a missing "not"?

- There was a typo in my last sentence. It should be "The criteria such as step size does not stop scale properly".

- Clarify scale invariance for all the stopping criteria you mention.

- This idea was mentioned by Hamming (1973) in his book Numerical Methods for Scientists and Engineers. It means that the stopping rule should be invariant if the starting value is scaled by an amount and also accordingly the successive values.So in general a relative measure instead of an absolute measure should fit. Several stopping rules usually adopted, for example step size and residual size , vary with scaling. While a fixed number of iterations dose not change with scaling. Hamming suggested using the size of step relative to the estimated zero, which is a relative measure.

- Can you say some stopping criteria are better than others? Are there references?

- Scale invariant stopping criteria should be preferred. But seems that rules such as step size are also widely adopted. I read the idea in the book but do not see it mentioned often in other sources.

- Mjmohio (talk) 00:17, 19 September 2012 (UTC)

Questionable reversion

To Paul August: You reverted this edit in which 86.140.58.200 (talk · contribs) changed "known" to "unknown". Could you please take another look at that. Why would one need to estimate something which is already known? JRSpriggs (talk) 20:08, 18 September 2012 (UTC)

Yeah, I apologize, I just reverted to the change to unknown, which I would not have done had I seen this. However, I say it *IS* questionable, one does not go from "known" to "on the other hand if it's known" etc. — Preceding unsigned comment added by 78.150.127.18 (talk) 16:54, 20 September 2012 (UTC)

stationary point handling

Currently the article says that if a stationary point occurs then the algorithm stops because of division by zero and someone just added that this limit the use of the method. Actually, the implementation can handle this by detecting that the derivative is zero and moving to a nearby point where the derivative isn't zero (unless the derivative is zero for a large region). How much text do we want to spend discussing how to handle this case? RJFJR (talk) 16:43, 30 September 2012 (UTC)

Change to Newton-Raphson method?

Someone started a second section on this topic, instead of continuing in the same section. To minimize confusion I am combining the two together into one.

In all fairness to Raphson, this should be the Newton-Raphson method. Pizza Puzzle —Preceding undated comment added 21:47, 25 June 2003.

I do agree with the above mentioned.Nijdam 16:01, 17 March 2006 (UTC)

I think there is a case for saying that "in all fairness" this should be Simpson's method, as neither Newton nor Raphson gave the method described in the article: they gave algebraic methods with a similar effect, but conceptually very different indeed, and applicable to a far more restricted range of functions. However, of the 2 names which are commonly accepted for this method, "the Newton-Raphson method" is clearly better than "Newton's method", so I shall move the page. JamesBWatson (talk) 10:29, 23 June 2009 (UTC)

- Following the move of the page agreed above, the following was posted at WikiProject Mathematics:

- JamesBWatson (talk · contribs) has unilaterally moved Newton's method to Newton–Raphson method. This is contrary to our policy of using the most common name in English. JRSpriggs (talk) 10:45, 23 June 2009 (UTC)

- Raise this at WT:WPM. Both names are widely used from what I know. Oleg Alexandrov (talk) 10:46, 23 June 2009 (UTC)

- <<above copied from Oleg's talk page>>

- Following the move of the page agreed above, the following was posted at WikiProject Mathematics:

- A discussion followed, which can be seen there, and in response I explained that I had not "unilaterally" moved the article, as it had been discussed (above) with no opposition, and I had not realised that someone had started a second discussion o the topic, and that I was not sure which name was more common. I then moved the article back. JamesBWatson (talk) 15:09, 25 June 2009 (UTC)

Here the second section on this topic starts.

This has been suggested several times. Do you think we should move? I've placed this on WP:RM Borisblue 05:21, 22 April 2006 (UTC)

- What are the reasons for moving? I think "Newton's method" is used more frequently than "Newton-Raphson method". For instance, a search on MathSciNet for papers with "Newton method" in the title returns 1558 hits, and a search for papers with "Newton-Raphson method" in the title returns 44 hits. -- Jitse Niesen (talk) 04:04, 24 April 2006 (UTC)

- In German, at least, it's only ever known as Newtonsches Näherungsverfahren, not as Newton-Raphsonsches Näherungsverfahren... —Nightstallion (?) Seen this already? 07:30, 27 April 2006 (UTC)

- Well, one argument in favor is that Raphson actually invented it first, I believe. That's the whole reason people bother to say something as awkward as Newton-Raphson. On the other hand, they're both dead, and we're alive and we have to say it. So there's that... Birge 02:45, 17 October 2006 (UTC)

This is the English version of the article. Surely German naming shouldn't have any bearing on the article name? I've always heard it called Newton-Raphson. 86.173.231.214 (talk) 20:16, 27 March 2012 (UTC)

- You surely did note that this is a very old discussion? Newton-Raphson is sometimes used for the one-dimensional method, Newton-Kantorovich sometimes for the multi-dimensional, Newton's method covers all of it.--LutzL (talk) 10:04, 28 March 2012 (UTC)

Just throwing in a note here that sources I've looked at that actually go into what it should be named prefer "Newton-Raphson". For example, Equations By Florian Cajori, Erland Samuel Bring 1907, p 193-195, "...the "Newton-Raphson" method would be a designation more nearly representing the facts of history than is "Newton's method"" — Preceding unsigned comment added by Allisgod (talk • contribs) 23:11, 10 December 2012 (UTC)

C++ code

I really apologize for the initial posts.. they were in wrong place so this is my first contribution to wiki. If you need an open source code in C++ you can check http://bytesthink.com/blog/?p=22. Thanks!! — Preceding unsigned comment added by Manoelramon (talk • contribs) 19:34, 23 January 2013 (UTC)

- Generally, Wikipedia is not the place to advertise anything -- even if it is free. Wikipedia typically frowns on using blogs because they are not reliable sources; anybody can write anything in their blog. Opinions in blogs (eg, Goldschmidt comment) are not the secondary sources that WP seeks. The blog used WP as its main reference, so using it as an EL has an odd element of circular support. WP is also leery of editors who insert links to their own works; they may be more interested in furthering their own interests rather than WP's; see WP:COI.

- WP wants to you and others to add content, but you should add content where you have a neutral point of view.

- Glrx (talk) 06:07, 24 January 2013 (UTC)

Refimprove

Hi, I've added a refimprove tag to the article. This is a very good article in my view but almost all of it is un-sourced, hence my addition of the tag. Regards. Gaba (talk) 16:02, 19 November 2013 (UTC)

What the heck?!

OK, so I came here looking to see if there was any kind of semi-layman explanation for this thing I only otherwise saw in unexplained computer code using programming functions I'm currently unfamiliar with. And I was disappointed.

Laying all the degree-level mathspeak aside for a moment... given that said code amounted to about six lines of no more than 20 characters' width, and carried out only one or two otherwise simple-looking operations per line (and thus DIDN'T seem to use any actual calculus - just subtraction and division, for the most part, but with the reasoning behind it obfuscated), can someone please put an easily-understood explanation of how you carry out this approximation either in the article or here on the talk page? In plain English? As in, if your input value is X, your initial guess is Y, and the output is Z (or indeed, starting values are X0, Y0 and Z0, increasing up to Xn etc as you iterate), how do you arrive at the first estimated value of Z, and then iterate towards a more precise one from there?

First person to use the words "function", "derivation", "tangent", any letter followed by a degree-minute mark, or an unexposited reference to another "theorem" or "method" gets a boot to the head, with the size and weight of the boot and the spikiness of the sole in direct proportion to how many of those offenses are committed all at once.

It is, after all, supposed to be a simple and easily conducted approximation of the root. If I can arrive at a better approximation, faster, just using guesswork and long division, without having to break out a dictionary of mathematics to understand what the Dickens is being discussed, then something's surely gone wrong.

An example incorporating a functional ISO BASIC program that only uses fundamental mathematical operators gets additional credit.

Multitudinous thanks in advance. 193.63.174.211 (talk) 14:09, 13 January 2014 (UTC)

- (For example, say I am Teh Thick, and want to try and work out what the root of 6.25 might be, knowing only that it must be more than 1 (as 1x1 = 1) but less than 4 (as 4x4 = 16) and having never done my 25x tables ... how does this method end up converging towards 2.5, any better than it would with my own amateur way of just cutting the range into halves and recalculating to see which side of the line it falls, if it doesn't in fact land on it exactly?) 193.63.174.211 (talk) 14:15, 13 January 2014 (UTC)

...JESUS CHRIST. OK, forgive my profanity, but I just found this on an external site:

Newton's square root equation

The equation to use in this method is:

√ N ≈ ½(N/A + A) , where:

N is a positive number of which you want to find the square root

√ is the square root sign

≈ means "approximately equal to..."

A is your educated guess

Therefore, if, say N = 121 and you guess at A = 10, you can enter the values into the equation:

√ 121 ≈ ½(121/10 + 10) = ½(12.1 +10) = ½(22.1) = 11.05

That is pretty close to the correct answer of 11.

This means, as far as I can tell, that you make an initial guess (Xn), and if it is not correct, then you make another guess (Xn+1) which is the mean of (your guess added to (the object number divided by your last guess)). And then you keep going until a satisfactory level of precision is achieved. THAT'S ALL IT SEEMS TO BE.

Why must things therefore be so complicated?! That's four lines of text. Four. And a single line of equation using very plain algebraic language.

Or have I got it all wrong? 193.63.174.211 (talk) 14:25, 13 January 2014 (UTC)

- The difference is that what you quote is merely a recipe for applying the method in one particular case, whereas the Wikipedia article seeks to explain the general method, which is applicable in a far wider range of cases. It is completely impossible to explain the method without using concepts such as "function" and "tangent", because they are fundamental to the method. And if you don't want an explanation of the method, but are just after a set of easy instructions on how to use it to find a square root, then you are in the wrong place, because Wikipedia is not a "how to..." guide. JamesBWatson (talk) 14:37, 13 January 2014 (UTC)

- 193.63.174.211, perhaps you would be better served if you got yourself the collected works of Viete of 1591, as published around 1650. There he has a chapter on numerical approximations of roots of equations up to degree 4 (or even higher?) avoiding any minus sign. Lots of special cases for that reason, and the idea of a function was also still in its infancy, derivatives and tangents were still unknown.--LutzL (talk) 18:18, 13 January 2014 (UTC)

- Source: F. Cajori (1929): A History of Mathematics, page 137--LutzL (talk) 16:49, 14 January 2014 (UTC)

- Derivatives were still unknown? Yes. Tangents were still unknown? No. There was already a significant body of mathematics relating to tangents to curves, some of it going back at least as far as Euclid, to my knowledge. JamesBWatson (talk) 16:52, 14 January 2014 (UTC)

- For more information about the algorithm you mentioned (applying Newton's method to calculating square roots), please see Methods of computing square roots#Babylonian method and the lead of that article. JRSpriggs (talk) 09:00, 14 January 2014 (UTC)

...

OK, well, I suppose that would sort of help, but still, is it necessary to have to go read centuries-old fundamental texts on this sort of thing when the thing that sent me looking up how it worked was a/ otherwise very simple, just with some functions whose actual operation was obscured, b/ implemented because the semi-scripting language was so simplistic that it didn't itself include support for square root calculations, so I doubt it used calculus.

Also, is there no hope of a simplified, first-principles sort of explanation, but with the attached caveat that "this only applies in these (XYZ) (general?) cases, and the detailed method described below should be followed to ensure universal success"? How much of what's written here was even known to Newton?

There's a massive problem with a lot of the math pages on WP where even when they're discussing things that otherwise seem simple and are casually tossed into conversation by people who are familiar with them (without any explanation, as if everyone should know it) are written up in such impenetrable, jargon-heavy, eye-watering formula infested copytext that someone who doesn't already have at least foundation degree maths (I have (UK) AS-level, and some half remembered shreds of calculus from a mandatory module within a vocational diploma) may as well just not even bother. Which seems rather more like an explanation that would belong in a textbook, not a general encyclopaedia. Isn't there an expectation (socially / de facto if not actually mandatory / de jure) of, say, an easily understood - even if minced into near oblivion - layman's version in the lede paragraph? WP may not be a how-to guide, but it's not a niche textbook either; I don't need to be a history graduate to understand the history pages, can even understand at least some of the physics (yes, I know it's just "applied maths"), chemistry, etc. I've been familiar with print encyclopaedias from well before I'd even heard of the internet, and it never seemed that hard, even poring over the 6pt text as a pre-teen. And I think my "mistake" (I'm still not entirely sure what mistake I made other than I made one, btw) shows that some clarification may be in order, because a person of otherwise normal intelligence came to look up the method, got frustrated trying to figure it out here, went and googled for something else, and settled on one that is apparently only applicable to a limited set of circumstances without having any idea what those were, or that it was even the case.

I mean, having looked up the explanation given on the other webpage, and comparing it with the first paras of this article itself, I can see the resemblance and figure out what is what ... but some bits are obscure enough that although there is the odd bit of bluetext, including for "function" it's hard to know what to click first or if that page will be any less confusing.

x0 and x1 are easy enough to get (thanks to the returning shreds of the aforementioned school/college room maths, between a quarter and half a lifetime ago), as the initial "wild" guess and the improved estimate of the root; "f" and "function" are placeholders for "y" and "known variable"; and "f'" is... er...

No, wait, what?

OK, perhaps f =/= y. And these aren't so similar. Unless f(x) is "y2 - x" and... no, still can't make it work.

\*abandons attempt*

Look, instead of the snarky bickering and such, is it possible to just get a simple-as-possible explanation, if that's even a thing? Even if just here on the talk page? Even if it comes with a qualification that it only holds "in a particular circumstance" (what this ephemeral set of conditions may be, I have no idea at the moment; I can already see that the numbers in question have to be "reals", which without clicking through to another possibly jargonariffic page I'm going to take a stab as meaning "positive and with no imaginary component", so what's the other requirement?) ??

If not, I might have to make a proposal that pages like this come with a "warning: abandon all hope of understanding this unless you're already qualified in the field, or have four hours to kill looking up all the references" banner ;-) ... which, y'know, would be fair. Some things take time to learn. I'm just having trouble figuring out why it would be the case here.

PS all things like tangents etc can be worked out from fundamental principles, right? And whilst I wouldn't go so far as to demand a detailed explanation of those inline with the text, maybe there's a way to write out the method that incorporates the longhand working-out of said tangent without having to name it as such? (IDK ... wibbling a bit at this point if I'm honest) 193.63.174.211 (talk) 09:29, 15 January 2014 (UTC)

- But the first principles explanation is just that of the article: you got a function and an iteration point, you compute a good or even the best linear approximation to that function in that point. Then compute the root of that linear approximation, replace the iteration point with it, and repeat the whole process. For the same task you can take different functions, for the square root of a you have f(x)=x2-a or f(x)=x-a/x or f(x)=1-a/x2 that all lead to a different iteration formula, and different global behavior of the iteration, but all have the same final quadratic convergence behavior (if convergence occurs).--LutzL (talk) 12:08, 15 January 2014 (UTC)

Section structure of the article

There are, apart from the justifiable request for more references and the questionable request for more inline citations, other problems with the current structure:

- The lead now has a long, unnecessary paragraph with formulas that are duplicated in the directly following description section. It would be sufficient to mention the "solution of the linear approximation", or "root of the tangent" in the lead.

- The description section appears a bit lengthy. If one goes to that length, a short derivation of the error terms based on the quadratic approximation in the root could be added, but leaving the proof-level estimates for the "analysis" section.

- The history section is now very well written (missing time periods for the persian/arab mathematicians). But current consent seems to be to relegate historical remarks to the end of the article?

- "Practical considerations" is a horror story on why not to use the method. In this prominent place it seems highly demotivational. Its main intent is also duplicated in the "failure analysis" section.

- "Examples" and "pseudo code" are currently the last sections. In the spirit that the article should progress from popular science to university level, they should be placed somewhere before the section on the analysis of the method and applications to higher dimensions and complex numbers.

- Analysis of multiplicities and over-relaxation is also an advanced topic that has its logical place after the analysis section. A counter-example for a double root (f(x)=x²) can be placed and explained in the example section. There should only be one place discussing multiplicites, and it should have better references (if at all) than an (incompletely cited, in multiple ways) exercise in some text book.

--LutzL (talk) 09:39, 5 May 2014 (UTC)

- You have good reasons to make changes, so go ahead and make some. Glrx (talk) 21:52, 8 May 2014 (UTC)

Assessment comment

The comment(s) below were originally left at Talk:Newton's method/Comments, and are posted here for posterity. Following several discussions in past years, these subpages are now deprecated. The comments may be irrelevant or outdated; if so, please feel free to remove this section.

| The structure of the sections practical considerations, counterexamples and analysis needs an overhaul. Add a bit on line search to make method robust. Newton-Kantorovich theorem. Section on systems needs to be expanded greatly; can grow in a separate article. -- Jitse Niesen (talk) 12:46, 24 May 2007 (UTC) |

Last edited at 12:46, 24 May 2007 (UTC). Substituted at 02:23, 5 May 2016 (UTC)

The .gif in the description section is not in English.

Just thought I'd make everyone aware, it says "funktion" and "tangente". — Preceding unsigned comment added by 5.66.18.14 (talk) 23:36, 2 May 2015 (UTC)

I just wanted to add that the GIF is absolutely incredible...very illustrative of Newton's method. — Preceding unsigned comment added by 72.141.163.52 (talk) 22:51, 3 December 2018 (UTC)

I wish there were some way to pause the animation in the gif. I find it very frustrating that the plot changes before I've had a chance to understand what the hell it was showing.

Topic heading changes

Hey there, the heading "Generalizations --> Nonlinear systems of equations" is inappropriate for a number of reasons.

Firstly, Newton's method is (almost by definition) a method for finding the roots of non-linear equations (since finding the roots of linear equations does not involve the derivative / iteration). Hence, the term "non-linear" is redundant in the heading.

I think a heading of just "systems of equations" would be OK except that the body of the text specifically describes a single vector-valued function F : ℝk → ℝk. Further, I think it is more intuitive to think of things in this way, rather than as a system of equations, since the univariate N-R method finds the root(s) of a single real-valued function, and thus the natural extension is to find the "vectorised" roots of a single function that is now vector-valued.

--Statisticsmonkey (talk) 05:41, 27 August 2021 (UTC)

Fixed. I have also changed other headings accordingly. D.Lazard (talk) 07:12, 27 August 2021 (UTC)

Fixed. I have also changed other headings accordingly. D.Lazard (talk) 07:12, 27 August 2021 (UTC)

Choice of styles

The Manual of Styles states that "[w]here more than one style or format is acceptable under MoS, one should be used consistently within an article". There is currently no consistency of mathematical style in this article as there is a lot of both the latex math style as well as the HTML style (see Manual of Style : Mathematics for reference). I think the latex style is much better and I propose that we standardise the math style accordingly by replacing any HTML math style with latex math style.

- There were many discussions among mathematics editors about this subject, and the present consensus seems the following: Avoid sanserif html (abcdef). If html is used for a formula, it must be enclosed inside {{math}} or {{mvar}}. If a formula contains special characters, such as , latex is preferred, because of a bad rendering on some browsers. For displayed formulas, latex is preferred. Otherwise, the used style must be kept unless if the article section is edited for other reasons (one reason is that, changing the style induces errors frequently). Moreover, many editors prefer {{mvar}} for isolated variables.

- It seems that this article follows these rules (I have not checked this in details), so the style of the formulas must not be changed per MOS:VAR. D.Lazard (talk) 07:42, 11 September 2021 (UTC)

Meaning of “sufficiently close” is unclear

I was reading through this article just now and am confused by the three conditions under "The term sufficiently close in this context means the following:". The first condition is “Taylor approximation is accurate enough such that we can ignore higher order terms”; this invokes an entirely different unanswered question of what it means for the Taylor approximation to be “accurate enough.” Is this condition equivalent to the other two conditions described by formulas, or do all three conditions have to hold independently for “sufficiently close” to be true? rdl381 (talk) 14:52, 25 October 2022 (UTC)

- I rewrote the conditions to make them correct, simpler, and clearer. How is it now? Are you satisfied? JRSpriggs (talk) 21:04, 25 October 2022 (UTC)

- Yes, I think it’s clear now. Thanks! Is it correct to merge conditions 1 and 3 as follows?

- for all , where is the interval for some such that

- This way isn’t used before its meaning is explained. — Preceding unsigned comment added by Rdl381 (talk • contribs) 15:55, 27 October 2022 (UTC)

- Since all the conditions are needed, I do not think that the order is particularly significant. I think it would be better to focus on getting x0 closer to α rather than increasing r to try to include it. We do not want M to get so large that the last condition cannot be met. JRSpriggs (talk) 21:53, 27 October 2022 (UTC)

![{\displaystyle {\sqrt[{n}]{|x|}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c224f6360172998ac463c8ef8fd663995d559a5b)

![{\displaystyle {\sqrt[{3}]{x}}=0}](https://wikimedia.org/api/rest_v1/media/math/render/svg/526e75bd9a36db1cccdb4c7f692de1bfb85163b3)

![{\displaystyle {\sqrt[{3}]{x}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/9a55f866116e7a86823816615dd98fcccde75473)

![{\displaystyle R_{m}={\frac {1}{(m+1)!}}f^{(m+1)}(\xi )(\alpha -x_{0})^{(m+1)},{\text{ where }}\xi \in [x_{0},\alpha ]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1d20b2036e5aaf59ca378ae99531822d559482a0)

![{\displaystyle [\alpha -r,\alpha +r]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/03103ca4ac47a6f59e9638760af0e032ca75ba88)